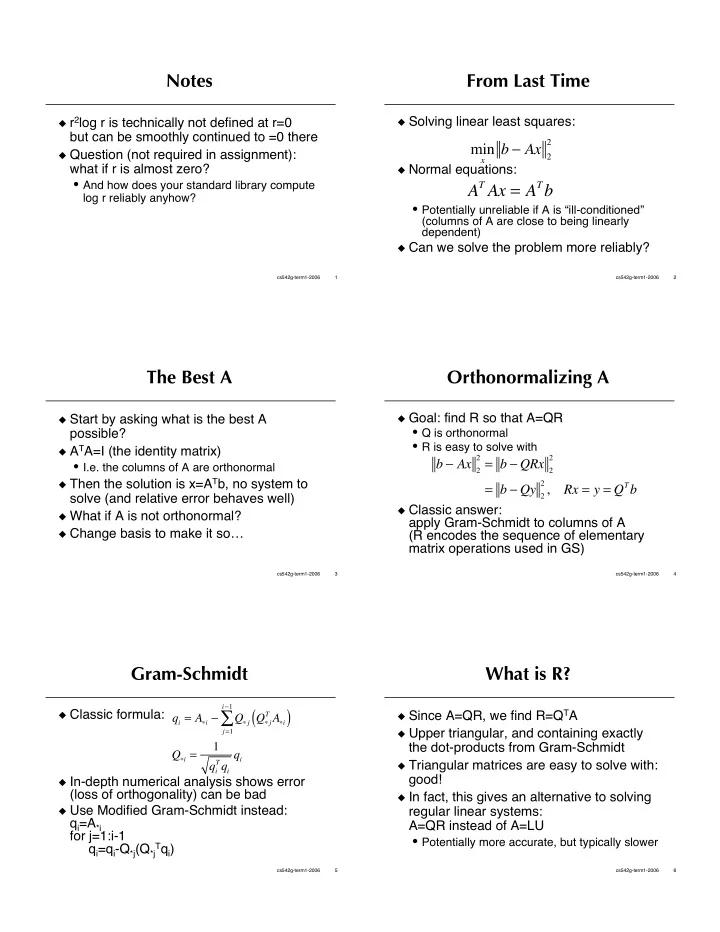

Notes From Last Time � r 2 log r is technically not defined at r=0 � Solving linear least squares: but can be smoothly continued to =0 there b � Ax 2 2 min � Question (not required in assignment): x what if r is almost zero? � Normal equations: A T Ax = A T b • And how does your standard library compute log r reliably anyhow? • Potentially unreliable if A is “ill-conditioned” (columns of A are close to being linearly dependent) � Can we solve the problem more reliably? cs542g-term1-2006 1 cs542g-term1-2006 2 The Best A Orthonormalizing A � Goal: find R so that A=QR � Start by asking what is the best A • Q is orthonormal possible? • R is easy to solve with � A T A=I (the identity matrix) 2 = b � QRx 2 b � Ax 2 2 • I.e. the columns of A are orthonormal 2 , � Then the solution is x=A T b, no system to = b � Qy 2 Rx = y = Q T b solve (and relative error behaves well) � Classic answer: � What if A is not orthonormal? apply Gram-Schmidt to columns of A � Change basis to make it so… (R encodes the sequence of elementary matrix operations used in GS) cs542g-term1-2006 3 cs542g-term1-2006 4 Gram-Schmidt What is R? i � 1 ( ) � T A � i � Classic formula: q i = A � i � � Since A=QR, we find R=Q T A Q � j Q � j j = 1 � Upper triangular, and containing exactly 1 the dot-products from Gram-Schmidt Q � i = q i T q i � Triangular matrices are easy to solve with: q i good! � In-depth numerical analysis shows error (loss of orthogonality) can be bad � In fact, this gives an alternative to solving � Use Modified Gram-Schmidt instead: regular linear systems: q i =A *i A=QR instead of A=LU for j=1:i-1 • Potentially more accurate, but typically slower T q i ) q i =q i -Q *j (Q *j cs542g-term1-2006 5 cs542g-term1-2006 6

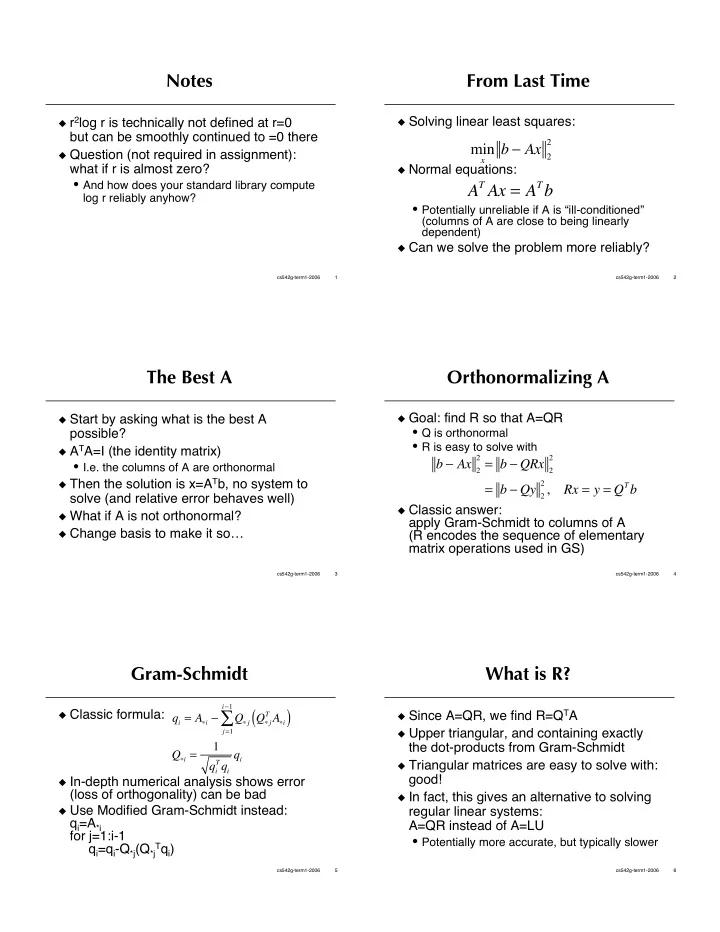

Another look at R Yet another look at R � Since A=QR, we have A T A=R T Q T QR=R T R � There is an even better way to compute R (than Modified Gram-Schmidt): � That is, R T is the Cholesky factor of A T A orthogonal transformations � But this is not a good way to compute it! � Idea: instead of upper-triangular elementary matrices turning A into Q, use orthogonal elementary matrices to turn A into R � Two main choices: • Givens rotations: rotate in selected two dimensions • Householder reflections: reflect across a plane cs542g-term1-2006 7 cs542g-term1-2006 8 Givens rotations Householder reflections � For c 2 +s 2 =1: � For a unit vector v (normal to plane): � � I 0 0 0 0 Q = I � 2 vv T � � 0 c 0 s 0 � � � � Q = 0 0 I 0 0 � Choose v to zero out entries below the � � � s � � 0 0 c 0 diagonal in a column � � � � 0 0 0 0 I � Note: can store Householder vectors and R in-place of A � Say we want QA to be zero at (i,j): • Don � t directly form Q, just multiply by sA jj = cA ij Householder factors when computing Q T b cs542g-term1-2006 9 cs542g-term1-2006 10 Full and Economy QR Weighted Least Squares � Even if A is rectangular, Givens and � What if we introduce nonnegative weights Householder implicitly give big square Q (some data points count more than others) (and rectangular R): n � ( ) 2 w i b i � ( Ax ) i min called the full QR x i = 1 • But you don � t have to form the big Q… T W b � Ax ( ) ( ) b � Ax min x � Modified Gram-Schmidt computes only the � Weighted normal equations: first k columns of Q (rectangular Q) and A T WAx = A T Wb gives only a square R: called the economy QR � Can also solve with W A = QR cs542g-term1-2006 11 cs542g-term1-2006 12

Moving Least Squares (MLS) Constant Fit MLS � Idea: estimate f(x) by fitting a low degree � Instructive to work out case of zero degree polynomial to data points, but weight polynomials (constants) nearby points more than others � Sometimes called Franke interpolation � Use a weighting kernel W(r) � Illustrates effect of weighting function • Should be big at r=0, decay to zero further • How do we force it to interpolate? away • What if we want local calculation? � At each point x, we have a (small) weighted linear least squares problem: n ( ) f i � p ( x i ) � [ ] x � x i 2 min W p i = 1 cs542g-term1-2006 13 cs542g-term1-2006 14

Recommend

More recommend