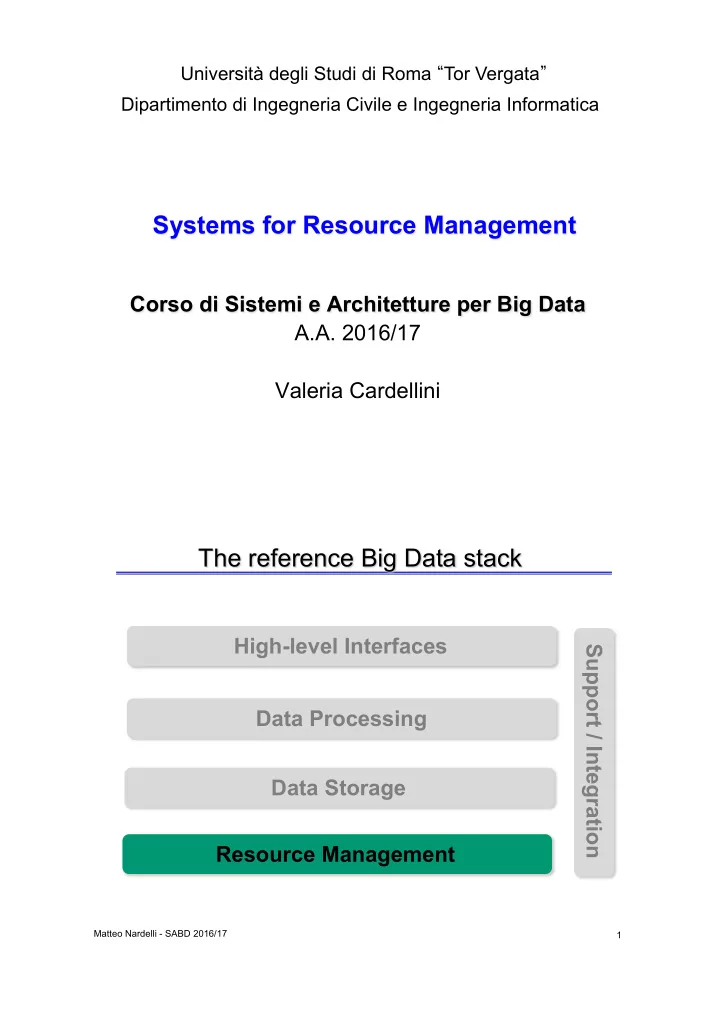

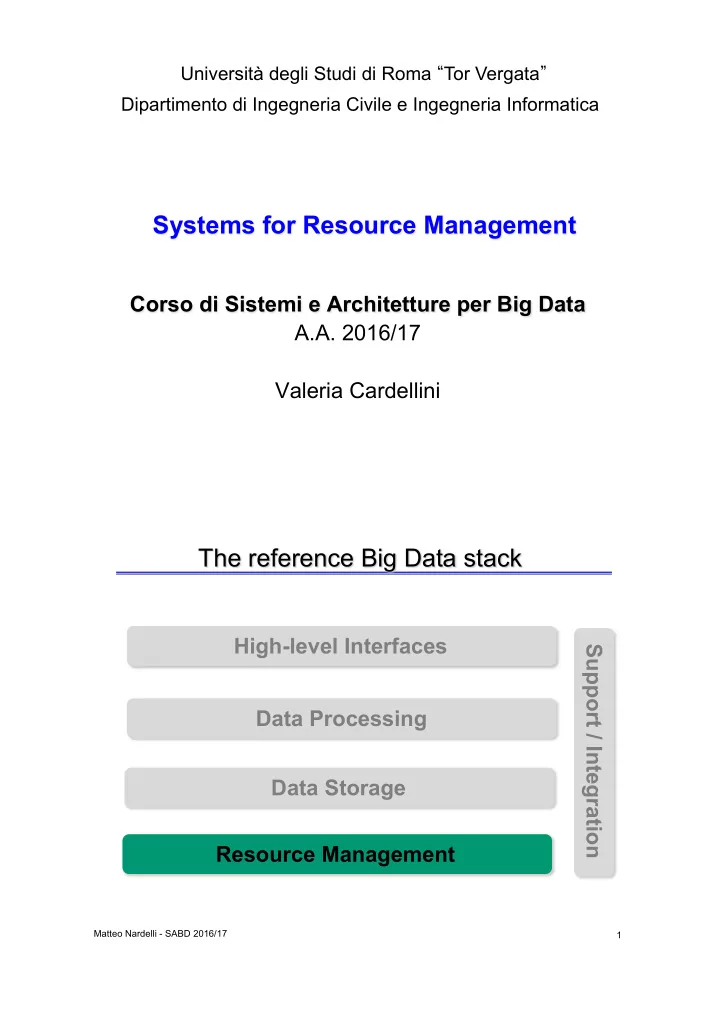

Università degli Studi di Roma “Tor Vergata”
Department of Civil Engineering and Computer Science Engineering

Systems for Resource Management

Course on Systems and Architectures for Big Data (SABD), Academic Year 2016/17
Valeria Cardellini

The reference Big Data stack
[Figure: stack layers High-level Interfaces, Support / Integration, Data Processing, Data Storage, Resource Management]
Matteo Nardelli - SABD 2016/17

Outline
• Cluster management systems
  – Mesos
  – YARN
  – Borg
  – Omega
  – Kubernetes
• Resource management policies
  – DRF

Motivations
• Rapid innovation in cloud computing
• No single framework is optimal for all applications
• Running each framework on its own dedicated cluster:
  – Is expensive
  – Makes it hard to share data

A possible solution
• Run multiple frameworks on a single cluster
• How can the resources of a (virtual) cluster be shared among multiple, heterogeneous frameworks executed in virtual machines or containers?
• The classical solution: static partitioning
• Is it efficient?

What we need
• The "datacenter as a computer" idea by D. Patterson
  – Share resources to maximize their utilization
  – Share data among frameworks
  – Provide a unified API to the outside
  – Hide the internal complexity of the infrastructure from applications
• The solution: a cluster-scale resource manager that employs dynamic partitioning
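To see why static partitioning answers "Is it efficient?" negatively, consider a hypothetical 12-slot cluster shared by two frameworks whose demand peaks at different times. The numbers below are invented for illustration: with static partitioning each framework owns a fixed half of the slots, so one half sits idle while the other framework is starved; with dynamic partitioning any idle slot can serve any framework.

```python
# Hypothetical demand (in task slots) of two frameworks over two time steps.
CLUSTER_SLOTS = 12
demand = {"A": [10, 2], "B": [2, 10]}


def static_utilization(demand, slots):
    """Each framework owns a fixed, equal share of the slots."""
    share = slots // len(demand)
    steps = len(next(iter(demand.values())))
    used = [sum(min(d[t], share) for d in demand.values()) for t in range(steps)]
    return [u / slots for u in used]


def dynamic_utilization(demand, slots):
    """Any framework may use any idle slot (dynamic partitioning)."""
    steps = len(next(iter(demand.values())))
    used = [min(sum(d[t] for d in demand.values()), slots) for t in range(steps)]
    return [u / slots for u in used]


print(static_utilization(demand, CLUSTER_SLOTS))   # 8 of 12 slots busy per step
print(dynamic_utilization(demand, CLUSTER_SLOTS))  # all 12 slots busy per step
```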

Apache Mesos
• Cluster manager that provides a common resource-sharing layer over which diverse frameworks can run
• Abstracts the entire datacenter into a single pool of computing resources, simplifying the execution of distributed systems at scale
• A distributed system on top of which other distributed systems run
[Figure: dynamic partitioning]

Apache Mesos (2)
• Designed and developed at the University of California, Berkeley
  – Now a top-level open-source project of the Apache Software Foundation: mesos.apache.org
• Twitter and Airbnb were the first users; Mesos now supports some of the largest applications in the world
• Cluster: a dynamically shared pool of resources
[Figure: static partitioning vs. dynamic partitioning]

Mesos goals
• High utilization of resources
• Support for diverse frameworks (current and future)
• Scalability to tens of thousands of nodes
• Reliability in the face of failures

Mesos in the data center
• Where does Mesos fit as an abstraction layer in the datacenter?

Computation model
• A framework (e.g., Hadoop, Spark) manages and runs one or more jobs
• A job consists of one or more tasks
• A task (e.g., map, reduce) consists of one or more processes running on the same machine

What Mesos does
• Enables fine-grained sharing of resources (CPU, RAM, …) across frameworks, at the level of tasks within a job
• Provides common functionalities:
  – Failure detection
  – Task distribution
  – Task starting
  – Task monitoring
  – Task killing
  – Task cleanup
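The framework → job → task → process hierarchy can be written down as a small data structure. The sketch below is hypothetical (the class and field names are not part of any Mesos API); it only shows that, with fine-grained sharing, resource demand is attached to each task, so a framework's footprint is simply the sum over its tasks.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """Unit of allocation: one or more processes on the same machine."""
    name: str
    processes: int
    cpus: float
    mem_mb: int


@dataclass
class Job:
    tasks: list[Task] = field(default_factory=list)


@dataclass
class Framework:
    name: str
    jobs: list[Job] = field(default_factory=list)

    def footprint(self) -> tuple[float, int]:
        """Total (CPUs, memory in MB) requested by every task of every job."""
        tasks = [t for job in self.jobs for t in job.tasks]
        return sum(t.cpus for t in tasks), sum(t.mem_mb for t in tasks)


# Hypothetical example: one MapReduce-style job with a map and a reduce task
hadoop = Framework("hadoop", [Job([Task("map-0", 1, 1.0, 1024),
                                   Task("reduce-0", 1, 2.0, 2048)])])
print(hadoop.footprint())
```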

Fine-grained sharing
• Allocation at the level of tasks within a job
• Improves utilization, latency, and data locality
[Figure: coarse-grained sharing vs. fine-grained sharing]

Frameworks on Mesos
• Frameworks must be aware that they are running on Mesos
  – DevOps tooling: Vamp (deployment and workflow tool for container orchestration)
  – Long-running services: Aurora (service scheduler), …
  – Big Data processing: Hadoop, Spark, Storm, MPI, …
  – Batch scheduling: Chronos, …
  – Data storage: Alluxio, Cassandra, Elasticsearch, …
  – Machine learning: TFMesos (TensorFlow in Docker on Mesos)
• See the full list at mesos.apache.org/documentation/latest/frameworks/

Mesos: architecture
• Master-slave architecture
• Slaves publish their available resources to the master
• The master sends resource offers to frameworks
• Master election and service discovery via ZooKeeper
Source: Mesos: a platform for fine-grained resource sharing in the data center, NSDI'11

Mesos component: Apache ZooKeeper
• Coordination service for maintaining configuration information and naming, and for providing distributed synchronization and group services
• Used in many distributed systems, including Mesos, Storm and Kafka
• Allows distributed processes to coordinate with each other through a shared hierarchical namespace of data nodes (znodes)
  – File-system-like API
  – Namespace similar to that of a standard file system
  – Only a limited amount of data can be stored in each znode
  – It is not really a file system, a database, a key-value store, or a lock service
• Provides high-throughput, low-latency, highly available, strictly ordered access to the znodes

Mesos component: ZooKeeper (2)
• Replicated over a set of machines that maintain an in-memory image of the data tree
  – Read requests are processed locally by the ZooKeeper server the client is connected to
  – Write requests are forwarded to the leader server and require agreement among the servers before a response is generated (primary-backup system)
  – Relies on a Paxos-like protocol (Zab, ZooKeeper Atomic Broadcast) to elect the leader server and order updates
• Implements atomic broadcast
  – Processes deliver the same messages (agreement) and deliver them in the same order (total order)
  – Message = state update

Mesos and framework components
• Mesos components
  – Master
  – Slaves (or agents)
• Framework components
  – Scheduler: registers with the master to be offered resources
  – Executors: launched on agent nodes to run the framework's tasks
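The total-order property of ZooKeeper's atomic broadcast is what keeps its replicas consistent: if every server applies the same state updates in the same order, all servers end up with the same data tree. The toy sketch below assumes the leader has already stamped each update with a sequence number (a simplification of ZooKeeper's transaction IDs) and shows only ordered delivery; agreement, acknowledgements and recovery after failures are deliberately left out, and the `Replica` class is hypothetical.

```python
class Replica:
    """Toy replica that applies state updates strictly in sequence-number order."""

    def __init__(self):
        self.state = {}      # znode path -> data
        self.next_seq = 1    # sequence number of the next update to apply
        self.pending = {}    # updates received out of order, keyed by sequence number

    def deliver(self, seq, path, data):
        self.pending[seq] = (path, data)
        # Apply every contiguous update starting from next_seq; later ones wait
        while self.next_seq in self.pending:
            p, d = self.pending.pop(self.next_seq)
            self.state[p] = d
            self.next_seq += 1


# The same three updates reach two replicas in different orders
updates = [(1, "/leader", "m1"), (2, "/leader", "m2"), (3, "/config", "v7")]
r1, r2 = Replica(), Replica()
for u in updates:
    r1.deliver(*u)
for u in reversed(updates):
    r2.deliver(*u)
print(r1.state, r2.state)
```

Buffering out-of-order updates instead of applying them on arrival is the design choice that matters: applying `/leader = m1` after `/leader = m2` would leave the second replica with a stale value.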

Scheduling in Mesos
• Scheduling mechanism based on resource offers
  – Mesos offers available resources to frameworks
• Each resource offer contains a list of <agent ID, resource1: amount1, resource2: amount2, ...>
  – Each framework chooses which resources to use and which tasks to launch
• Two-level scheduler architecture
  – Mesos delegates the actual scheduling of tasks to frameworks
  – Improves scalability: the master does not have to know the scheduling intricacies of every type of application that it supports

Mesos: resource offers
• Resource allocation is based on Dominant Resource Fairness (DRF)
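DRF generalizes max-min fairness to several resource types. A user's dominant share is the largest fraction of any single resource it has been allocated, and DRF repeatedly grants the next task to the user with the smallest dominant share. The following is a minimal progressive-filling sketch, assuming each user runs identical tasks with a known, positive demand vector; the cluster size, user names and demands are hypothetical, and this is not the code of the Mesos allocator.

```python
def drf(capacity, demands):
    """Dominant Resource Fairness by progressive filling.

    capacity: total amount of each resource, e.g. {"cpus": 9, "mem_gb": 18}
    demands:  per-user demand of ONE task, e.g. {"A": {"cpus": 1, "mem_gb": 4}}
              (assumes every task needs a positive amount of some resource)
    Returns the number of tasks launched per user and each user's dominant share.
    """
    used = {r: 0 for r in capacity}
    alloc = {u: {r: 0 for r in capacity} for u in demands}
    tasks = {u: 0 for u in demands}

    def dominant_share(u):
        # Largest fraction of any single resource held by user u
        return max(alloc[u][r] / capacity[r] for r in capacity)

    active = set(demands)
    while active:
        # Serve the user with the lowest dominant share (ties broken by name)
        u = min(active, key=lambda x: (dominant_share(x), x))
        d = demands[u]
        if all(used[r] + d.get(r, 0) <= capacity[r] for r in capacity):
            for r in capacity:
                used[r] += d.get(r, 0)
                alloc[u][r] += d.get(r, 0)
            tasks[u] += 1
        else:
            active.discard(u)  # u's next task no longer fits: stop serving u
    return tasks, {u: dominant_share(u) for u in demands}


capacity = {"cpus": 9, "mem_gb": 18}
demands = {"A": {"cpus": 1, "mem_gb": 4},   # memory-heavy tasks
           "B": {"cpus": 3, "mem_gb": 1}}   # CPU-heavy tasks
print(drf(capacity, demands))
```

In this hypothetical cluster, memory-heavy user A ends with 3 tasks (3 CPUs, 12 GB) and CPU-heavy user B with 2 tasks (6 CPUs, 2 GB), so both dominant shares equal 2/3 even though the two users saturate different resources.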

Mesos: resource offers in detail
• Slaves continuously send status updates about their resources to the master

Mesos: resource offers in detail (2)

Mesos: resource offers in detail (3)
• The framework scheduler can reject offers

Mesos: resource offers in detail (4)
• The framework scheduler selects resources and provides tasks
• The master sends the tasks to the slaves
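In the offer cycle above, the decision of what to launch on an offer, or whether to reject it, belongs to the framework scheduler. The sketch below shows one possible framework-side policy, a greedy first-fit packing of pending tasks into a single offer that declines the offer when nothing fits. `Offer` and `handle_offer` are hypothetical names for illustration, not the Mesos scheduler API, where this logic would live in the framework's offer callback.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    agent_id: str
    resources: dict[str, float]   # e.g. {"cpus": 4, "mem": 4096}


def handle_offer(offer, pending):
    """Greedily launch pending tasks that fit in the offer (first fit).

    pending: list of {"name": ..., "resources": {...}}; launched tasks are removed.
    Returns ("launch", [task names]) or ("decline", []) if nothing fits.
    """
    free = dict(offer.resources)
    launched = []
    for task in list(pending):          # iterate over a copy: we mutate pending
        need = task["resources"]
        if all(free.get(r, 0) >= amount for r, amount in need.items()):
            for r, amount in need.items():
                free[r] -= amount
            launched.append(task["name"])
            pending.remove(task)
    return ("launch", launched) if launched else ("decline", [])


pending = [{"name": "t1", "resources": {"cpus": 2, "mem": 2048}},
           {"name": "t2", "resources": {"cpus": 3, "mem": 1024}},
           {"name": "t3", "resources": {"cpus": 1, "mem": 1024}}]
print(handle_offer(Offer("agent-1", {"cpus": 4, "mem": 4096}), pending))
print(handle_offer(Offer("agent-2", {"cpus": 1, "mem": 512}), pending))
```

First fit is only one choice; a framework that cares about data locality could instead decline offers from agents that do not hold its input data and wait for better ones.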

Mesos: resource offers in detail (5)
• Framework executors launch the tasks

Mesos: resource offers in detail (6)

Mesos: resource offers in detail (7)

Mesos fault tolerance
• Task failure
• Slave failure
• Host or network failure
• Master failure
• Framework scheduler failure

Fault tolerance: task failure

Fault tolerance: task failure (2)

Fault tolerance: slave failure

Fault tolerance: slave failure (2)

Fault tolerance: host or network failure

Fault tolerance: host or network failure (2)

Fault tolerance: host or network failure (3)

Fault tolerance: master failure

Fault tolerance: master failure (2)
• When the leading master fails, the surviving masters use ZooKeeper to elect a new leader

Fault tolerance: master failure (3)
• The slaves and frameworks use ZooKeeper to detect the new leader and re-register with it
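ZooKeeper's documented leader-election recipe has each candidate create an ephemeral, sequential znode under a common parent: the candidate holding the lowest sequence number is the leader, and since an ephemeral znode disappears when its creator's session ends, a crashed leader is replaced automatically by the next-lowest candidate. The in-memory sketch below illustrates that recipe. `FakeZooKeeper` and its methods are hypothetical stand-ins, not the ZooKeeper client API; watches are omitted; and it is assumed, not claimed, that the Mesos masters' election works in this spirit.

```python
import itertools


class FakeZooKeeper:
    """Hypothetical in-memory stand-in for one parent znode whose children
    are ephemeral and sequential."""

    def __init__(self):
        self._seq = itertools.count()
        self.children = {}  # child znode name -> session that created it

    def create_ephemeral_sequential(self, prefix, session):
        # ZooKeeper appends a 10-digit, zero-padded counter to sequential znodes,
        # so lexicographic order equals creation order
        name = f"{prefix}{next(self._seq):010d}"
        self.children[name] = session
        return name

    def expire_session(self, session):
        # Ephemeral znodes vanish when the session that created them ends
        self.children = {n: s for n, s in self.children.items() if s != session}

    def leader(self):
        # The candidate with the lowest sequence number is the leader
        return self.children[min(self.children)] if self.children else None


zk = FakeZooKeeper()
for master in ["master-1", "master-2", "master-3"]:
    zk.create_ephemeral_sequential("candidate_", master)
print(zk.leader())            # the first master to register leads
zk.expire_session("master-1")  # the leading master crashes
print(zk.leader())            # the next-lowest candidate takes over
```

The full recipe also has each candidate watch only its immediate predecessor, so that a leader failure wakes up a single candidate rather than all of them.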

Fault tolerance: framework scheduler failure

Fault tolerance: framework scheduler failure (2)
• When a framework scheduler fails, another instance can re-register with the master without interrupting any of the running tasks
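The guarantee that running tasks survive a scheduler failure holds only for a bounded time: Mesos lets a framework declare a failover timeout (the `failover_timeout` field of FrameworkInfo), after which the master tears down a disconnected framework's tasks. The master-side bookkeeping can be sketched as follows; the `Master` class and its methods are hypothetical, time is passed in explicitly, and other details of the real master are ignored.

```python
class Master:
    """Hypothetical master-side bookkeeping for framework scheduler failover."""

    def __init__(self, failover_timeout):
        self.failover_timeout = failover_timeout
        self.tasks = {}            # framework ID -> set of running task IDs
        self.disconnected_at = {}  # framework ID -> time its scheduler was lost

    def scheduler_lost(self, framework_id, now):
        # Running tasks are kept; the failover clock starts ticking
        self.disconnected_at[framework_id] = now

    def check_timeouts(self, now):
        for fid, lost in list(self.disconnected_at.items()):
            if now - lost > self.failover_timeout:
                del self.disconnected_at[fid]
                self.tasks.pop(fid, None)  # too late: tear down the tasks

    def reregister(self, framework_id, now):
        """A new scheduler instance reclaims the framework ID and
        inherits whatever tasks are still running."""
        self.check_timeouts(now)
        self.disconnected_at.pop(framework_id, None)
        return self.tasks.get(framework_id, set())


m = Master(failover_timeout=60)
m.tasks["fw-1"] = {"t1", "t2"}
m.scheduler_lost("fw-1", now=0)
print(m.reregister("fw-1", now=30))   # within the timeout: tasks survive
m.tasks["fw-2"] = {"t3"}
m.scheduler_lost("fw-2", now=100)
print(m.reregister("fw-2", now=200))  # timeout expired: nothing to inherit
```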

Fault tolerance: framework scheduler failure (3)

Fault tolerance: framework scheduler failure (4)

Resource allocation
1. How to assign the cluster resources to the tasks?
   – Design alternatives
     • Global (monolithic) scheduler
     • Two-level scheduler
2. How to allocate resources of different types?

Global (monolithic) scheduler
• Job requirements
  – Response time
  – Throughput
  – Availability
• Job execution plan
  – Task directed acyclic graph (DAG)
  – Inputs/outputs
• Estimates
  – Task duration
  – Input sizes
  – Transfer sizes
• Pros
  – Can achieve an optimal schedule (global knowledge)
• Cons
  – Complexity: hard to scale and to ensure resilience
  – Hard to anticipate future frameworks' requirements
  – Need to refactor existing frameworks
