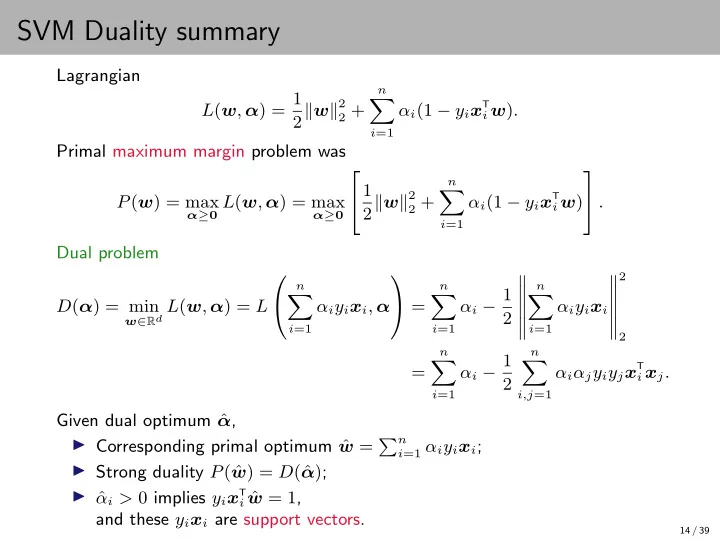

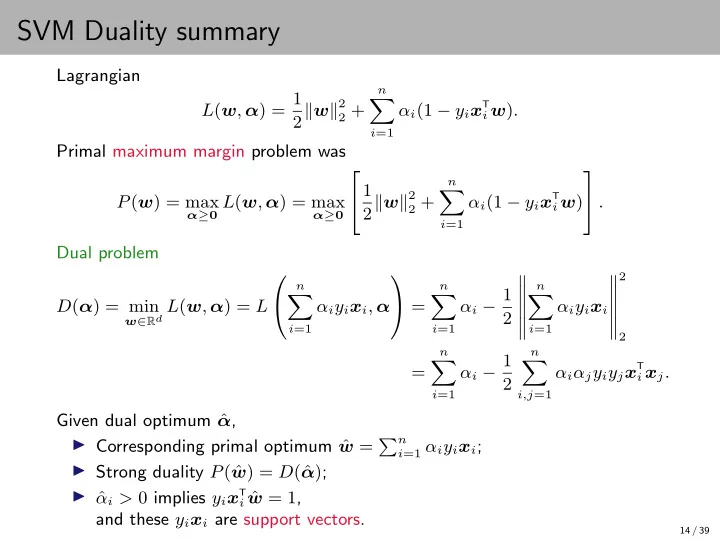

SVM Duality summary

Lagrangian:
$$L(w, \alpha) = \frac{1}{2}\|w\|_2^2 + \sum_{i=1}^n \alpha_i \left(1 - y_i x_i^\top w\right).$$
Primal maximum margin problem was
$$P(w) = \max_{\alpha \ge 0} L(w, \alpha) = \max_{\alpha \ge 0} \ \frac{1}{2}\|w\|_2^2 + \sum_{i=1}^n \alpha_i \left(1 - y_i x_i^\top w\right).$$
Dual problem:
$$D(\alpha) = \min_{w \in \mathbb{R}^d} L(w, \alpha) = L\Big(\sum_{i=1}^n \alpha_i y_i x_i,\ \alpha\Big) = \sum_{i=1}^n \alpha_i - \frac{1}{2}\Big\|\sum_{i=1}^n \alpha_i y_i x_i\Big\|_2^2 = \sum_{i=1}^n \alpha_i - \frac{1}{2}\sum_{i,j=1}^n \alpha_i \alpha_j y_i y_j x_i^\top x_j.$$
Given dual optimum $\hat\alpha$,
◮ Corresponding primal optimum $\hat w = \sum_{i=1}^n \hat\alpha_i y_i x_i$;
◮ Strong duality $P(\hat w) = D(\hat\alpha)$;
◮ $\hat\alpha_i > 0$ implies $y_i x_i^\top \hat w = 1$, and these $y_i x_i$ are support vectors.
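A minimal NumPy sketch of the summary above, assuming a hypothetical two-point data set. The helper names (`primal_from_dual`, `dual_objective`, `dual_objective_gram`, `primal_objective`) are illustrative only. For this data, $D$ depends only on $s = \alpha_1 + \alpha_2$ through $s - s^2/2$, so $\hat\alpha = (0.5, 0.5)$ is one dual optimum found by hand; the code recovers $\hat w$, checks that both forms of $D$ agree, and checks strong duality and the support-vector condition.

```python
import numpy as np

def primal_from_dual(alpha, X, y):
    """Recover w = sum_i alpha_i y_i x_i, i.e. X^T (alpha * y)."""
    return X.T @ (alpha * y)

def dual_objective(alpha, X, y):
    """D(alpha) = sum_i alpha_i - 1/2 ||sum_i alpha_i y_i x_i||_2^2 (norm form)."""
    w = primal_from_dual(alpha, X, y)
    return alpha.sum() - 0.5 * w @ w

def dual_objective_gram(alpha, X, y):
    """Same D(alpha), written as the double sum over alpha_i alpha_j y_i y_j x_i^T x_j."""
    Q = np.outer(y, y) * (X @ X.T)
    return alpha.sum() - 0.5 * alpha @ Q @ alpha

def primal_objective(w):
    """Separable primal objective 1/2 ||w||_2^2 (only meaningful for feasible w)."""
    return 0.5 * w @ w

# Hypothetical toy data: one point per class on the real line.
X = np.array([[1.0], [-1.0]])
y = np.array([1.0, -1.0])
# D(alpha) = s - s^2/2 with s = alpha_1 + alpha_2, maximized at s = 1.
alpha_hat = np.array([0.5, 0.5])

w_hat = primal_from_dual(alpha_hat, X, y)
margins = y * (X @ w_hat)  # y_i x_i^T w_hat for each i
print(w_hat, primal_objective(w_hat), dual_objective(alpha_hat, X, y), margins)
```

Both points have $\hat\alpha_i > 0$ and margin exactly 1, so both are support vectors, as the last bullet predicts.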

4. Non-separable case

Soft-margin SVMs (Cortes and Vapnik, 1995)

When training examples are not linearly separable, the (primal) SVM optimization problem
$$\min_{w \in \mathbb{R}^d} \ \frac{1}{2}\|w\|_2^2 \quad \text{s.t.} \quad y_i x_i^\top w \ge 1 \ \text{ for all } i = 1, 2, \dots, n$$
has no solution (it is infeasible).

Introduce slack variables $\xi_1, \dots, \xi_n \ge 0$ and a trade-off parameter $C > 0$:
$$\min_{w \in \mathbb{R}^d,\ \xi_1, \dots, \xi_n \in \mathbb{R}} \ \frac{1}{2}\|w\|_2^2 + C \sum_{i=1}^n \xi_i \quad \text{s.t.} \quad y_i x_i^\top w \ge 1 - \xi_i \ \text{ and } \ \xi_i \ge 0 \ \text{ for all } i = 1, 2, \dots, n,$$
which is always feasible. This is called the soft-margin SVM. (Slack variables are auxiliary variables; they are not needed to form the linear classifier.)
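To see why the soft-margin problem is always feasible, here is a small NumPy sketch under illustrative assumptions: for any $w$, setting $\xi_i = \max\{0, 1 - y_i x_i^\top w\}$ satisfies both constraints and is the smallest slack that does. The XOR-style data below is hypothetical; for it $y_1 x_1^\top w + y_2 x_2^\top w = 0$ for every $w$, so the hard-margin problem is infeasible. Function names are hypothetical.

```python
import numpy as np

def minimal_slacks(w, X, y):
    """Smallest xi_i >= 0 with y_i x_i^T w >= 1 - xi_i, for every i."""
    return np.maximum(0.0, 1.0 - y * (X @ w))

def soft_margin_objective(w, xi, C):
    """1/2 ||w||_2^2 + C * sum_i xi_i."""
    return 0.5 * w @ w + C * xi.sum()

def is_feasible(w, xi, X, y, tol=1e-12):
    """Check both soft-margin constraints (up to a small tolerance)."""
    return bool(np.all(y * (X @ w) >= 1.0 - xi - tol) and np.all(xi >= -tol))

# Hypothetical XOR-style data: not linearly separable by any w (no bias term).
X = np.array([[1.0, 1.0], [-1.0, -1.0], [1.0, -1.0], [-1.0, 1.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])

w = np.array([0.5, 0.0])  # an arbitrary candidate weight vector
xi = minimal_slacks(w, X, y)
print(xi, is_feasible(w, xi, X, y), soft_margin_objective(w, xi, C=1.0))
```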

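Interpretation of slack variables

$$\min_{w \in \mathbb{R}^d,\ \xi_1, \dots, \xi_n \in \mathbb{R}} \ \frac{1}{2}\|w\|_2^2 + C \sum_{i=1}^n \xi_i \quad \text{s.t.} \quad y_i x_i^\top w \ge 1 - \xi_i \ \text{ and } \ \xi_i \ge 0 \ \text{ for all } i = 1, 2, \dots, n.$$

For a given $w$ (with each $\xi_i$ at its smallest feasible value, as at the optimum), $\xi_i / \|w\|_2$ is the distance that $x_i$ would have to move to satisfy $y_i x_i^\top w \ge 1$.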
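A short NumPy check of this interpretation, assuming a nonzero $w$ and hypothetical points: moving $x_i$ by $\xi_i / \|w\|_2$ in the direction $y_i w / \|w\|_2$ raises $y_i x_i^\top w$ by exactly $\xi_i$, which brings a violating point onto the margin $y_i x_i^\top w = 1$. The helper names are illustrative.

```python
import numpy as np

def slack_distances(w, X, y):
    """Distance xi_i / ||w||_2 that each x_i must move so that y_i x_i^T w >= 1 (w != 0)."""
    xi = np.maximum(0.0, 1.0 - y * (X @ w))  # minimal feasible slacks
    return xi / np.linalg.norm(w)

def move_points(w, X, y):
    """Move each x_i by its slack distance in the direction y_i * w / ||w||_2."""
    unit = w / np.linalg.norm(w)
    d = slack_distances(w, X, y)
    return X + (d * y)[:, None] * unit[None, :]

# Hypothetical points; only the first one violates the margin for this w.
X = np.array([[0.2, 0.0], [-0.5, 1.0], [2.0, 0.0]])
y = np.array([1.0, -1.0, 1.0])
w = np.array([2.0, 0.0])

X_moved = move_points(w, X, y)
print(slack_distances(w, X, y), y * (X_moved @ w))
```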

Another interpretation of slack variables

Constraints with non-negative slack variables:
$$\min_{w \in \mathbb{R}^d,\ \xi_1, \dots, \xi_n \in \mathbb{R}} \ \frac{1}{2}\|w\|_2^2 + C \sum_{i=1}^n \xi_i \quad \text{s.t.} \quad y_i x_i^\top w \ge 1 - \xi_i \ \text{ and } \ \xi_i \ge 0 \ \text{ for all } i = 1, 2, \dots, n.$$
For fixed $w$, the best choice of each slack is $\xi_i = [1 - y_i x_i^\top w]_+$, which gives the equivalent unconstrained form:
$$\min_{w \in \mathbb{R}^d} \ \frac{1}{2}\|w\|_2^2 + C \sum_{i=1}^n \left[1 - y_i x_i^\top w\right]_+ .$$
Notation: $[a]_+ := \max\{0, a\}$ (ReLU!).

$\left[1 - y x^\top w\right]_+$ is the hinge loss of $w$ on example $(x, y)$.
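The unconstrained form can be minimized directly. The sketch below uses subgradient descent, which is our own choice of method here (not one named on the slides), with step size $1/t$ because the objective is 1-strongly convex; it keeps the best iterate since subgradient steps need not decrease the objective. The helper names and the toy data are hypothetical. For this data, the objective is $\frac12 w^2 + 2C[1 - w]_+$, minimized at $w = 1$ with value $0.5$ when $C = 1$.

```python
import numpy as np

def hinge_objective(w, X, y, C):
    """1/2 ||w||_2^2 + C * sum_i [1 - y_i x_i^T w]_+ (unconstrained soft-margin primal)."""
    return 0.5 * w @ w + C * np.maximum(0.0, 1.0 - y * (X @ w)).sum()

def hinge_subgradient(w, X, y, C):
    """One subgradient: w - C * sum of y_i x_i over examples with y_i x_i^T w < 1."""
    active = y * (X @ w) < 1.0
    return w - C * (X[active].T @ y[active])

def subgradient_descent(X, y, C, steps=2000):
    """Subgradient descent with step 1/t from w = 0; returns the best iterate seen."""
    w = np.zeros(X.shape[1])
    best_w, best_obj = w.copy(), hinge_objective(w, X, y, C)
    for t in range(1, steps + 1):
        w = w - (1.0 / t) * hinge_subgradient(w, X, y, C)
        obj = hinge_objective(w, X, y, C)
        if obj < best_obj:
            best_w, best_obj = w.copy(), obj
    return best_w, best_obj

# Hypothetical toy data: one point per class on the real line.
X = np.array([[1.0], [-1.0]])
y = np.array([1.0, -1.0])
w_best, obj_best = subgradient_descent(X, y, C=1.0)
print(w_best, obj_best)
```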

Convex dual in non-separable case

Lagrangian:
$$L(w, \xi, \alpha) = \frac{1}{2}\|w\|_2^2 + C \sum_{i=1}^n \xi_i + \sum_{i=1}^n \alpha_i \left(1 - \xi_i - y_i x_i^\top w\right).$$
Dual problem (for $\alpha \ge 0$):
$$D(\alpha) = \min_{w \in \mathbb{R}^d,\ \xi \in \mathbb{R}^n_{\ge 0}} L(w, \xi, \alpha).$$
As before, setting the gradient with respect to $w$ to zero gives $w = \sum_{i=1}^n \alpha_i y_i x_i$; plugging in,
$$D(\alpha) = \min_{\xi \in \mathbb{R}^n_{\ge 0}} L\Big(\sum_{i=1}^n \alpha_i y_i x_i,\ \xi,\ \alpha\Big) = \min_{\xi \in \mathbb{R}^n_{\ge 0}} \ \sum_{i=1}^n \alpha_i - \frac{1}{2}\Big\|\sum_{i=1}^n \alpha_i y_i x_i\Big\|_2^2 + \sum_{i=1}^n \xi_i (C - \alpha_i).$$
The goal is to maximize $D$; if $\alpha_i > C$, then $\xi_i \uparrow \infty$ gives $D(\alpha) = -\infty$. Otherwise, the minimum is attained at $\xi_i = 0$.

Therefore the dual problem is
$$\max_{\alpha \in \mathbb{R}^n,\ 0 \le \alpha_i \le C} \ \sum_{i=1}^n \alpha_i - \frac{1}{2}\Big\|\sum_{i=1}^n \alpha_i y_i x_i\Big\|_2^2 .$$
Can solve this with constrained convex optimization (e.g., projected gradient descent on $-D$).
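A minimal sketch of projected gradient ascent on $D$ (equivalently, projected gradient descent on $-D$), assuming NumPy and hypothetical helper names. Writing $Q_{ij} = y_i y_j x_i^\top x_j$, the gradient is $\nabla D(\alpha) = \mathbf{1} - Q\alpha$, and projecting onto the box $0 \le \alpha_i \le C$ is coordinate-wise clipping. The step size $1/\lambda_{\max}(Q)$ is a standard safe choice for a quadratic, assumed here rather than taken from the slides.

```python
import numpy as np

def dual_matrix(X, y):
    """Q_ij = y_i y_j x_i^T x_j, so that D(alpha) = sum(alpha) - 1/2 alpha^T Q alpha."""
    return np.outer(y, y) * (X @ X.T)

def projected_gradient_dual(X, y, C, steps=1000):
    """Projected gradient ascent on D over the box 0 <= alpha_i <= C."""
    Q = dual_matrix(X, y)
    lam_max = np.linalg.eigvalsh(Q).max()         # Q is symmetric positive semidefinite
    eta = 1.0 / lam_max if lam_max > 0 else 1.0   # step 1/L, L = Lipschitz constant of grad D
    alpha = np.zeros(len(y))
    for _ in range(steps):
        grad = 1.0 - Q @ alpha                          # gradient of D at alpha
        alpha = np.clip(alpha + eta * grad, 0.0, C)     # ascent step, then project onto the box
    w = X.T @ (alpha * y)                               # primal solution w = sum_i alpha_i y_i x_i
    dual_value = alpha.sum() - 0.5 * alpha @ Q @ alpha
    return alpha, w, dual_value

# Hypothetical toy data; with C = 0.25 the box constraint alpha_i <= C is active.
X = np.array([[1.0], [-1.0]])
y = np.array([1.0, -1.0])
for C in (1.0, 0.25):
    print(C, *projected_gradient_dual(X, y, C))
```

For $C = 0.25$ the solver stops at $\alpha = (0.25, 0.25)$ and $w = 0.5$, where the hinge-loss primal $\frac12 w^2 + 2C[1 - w]_+$ also equals $0.375$.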

Nonseparable case: bottom line

Unconstrained primal:
$$\min_{w \in \mathbb{R}^d} \ \frac{1}{2}\|w\|_2^2 + C \sum_{i=1}^n \left[1 - y_i x_i^\top w\right]_+ .$$
Dual:
$$\max_{\alpha \in \mathbb{R}^n,\ 0 \le \alpha_i \le C} \ \sum_{i=1}^n \alpha_i - \frac{1}{2}\Big\|\sum_{i=1}^n \alpha_i y_i x_i\Big\|_2^2 .$$
Dual solution $\hat\alpha$ gives primal solution $\hat w = \sum_{i=1}^n \hat\alpha_i y_i x_i$.

Remarks.
◮ Can take $C \to \infty$ to recover the separable case.
◮ Dual is a constrained convex quadratic (can be solved with projected gradient descent).
◮ Some presentations include a bias in the primal ($x_i^\top w + b$); this introduces a constraint $\sum_{i=1}^n y_i \alpha_i = 0$ in the dual.
◮ Some presentations replace $\frac{1}{2}$ and $C$ with $\frac{\lambda}{2}$ and $\frac{1}{n}$, respectively.
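The last remark is only a reparametrization: $\frac{\lambda}{2}\|w\|_2^2 + \frac{1}{n}\sum_i [1 - y_i x_i^\top w]_+$ equals $\frac{1}{nC}$ times the $C$-form objective when $\lambda = \frac{1}{nC}$, so both forms have the same minimizer. A quick numerical check of that scaling, on hypothetical random data with illustrative function names:

```python
import numpy as np

def objective_C(w, X, y, C):
    """1/2 ||w||_2^2 + C * sum_i [1 - y_i x_i^T w]_+."""
    return 0.5 * w @ w + C * np.maximum(0.0, 1.0 - y * (X @ w)).sum()

def objective_lambda(w, X, y, lam):
    """lam/2 ||w||_2^2 + (1/n) * sum_i [1 - y_i x_i^T w]_+."""
    n = len(y)
    return 0.5 * lam * w @ w + np.maximum(0.0, 1.0 - y * (X @ w)).sum() / n

rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))        # hypothetical random data
y = np.sign(rng.normal(size=20))
C = 0.7
lam = 1.0 / (len(y) * C)            # matching parameter: lam = 1 / (n C)

# For every w the ratio is the constant n * C, so the minimizers coincide.
for _ in range(5):
    w = rng.normal(size=3)
    print(objective_C(w, X, y, C) / objective_lambda(w, X, y, lam))
```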

5. Kernels

Looking at the dual again

The SVM dual problem depends on the $x_i$ only through inner products $x_i^\top x_j$:
$$\max_{\alpha_1, \alpha_2, \dots, \alpha_n \ge 0} \ \sum_{i=1}^n \alpha_i - \frac{1}{2}\sum_{i,j=1}^n \alpha_i \alpha_j y_i y_j x_i^\top x_j .$$
If we use a feature expansion (e.g., quadratic expansion) $x \mapsto \phi(x)$, this becomes
$$\max_{\alpha_1, \alpha_2, \dots, \alpha_n \ge 0} \ \sum_{i=1}^n \alpha_i - \frac{1}{2}\sum_{i,j=1}^n \alpha_i \alpha_j y_i y_j \phi(x_i)^\top \phi(x_j) .$$
The solution $\hat w = \sum_{i=1}^n \hat\alpha_i y_i \phi(x_i)$ is used in the following way:
$$x \mapsto \phi(x)^\top \hat w = \sum_{i=1}^n \hat\alpha_i y_i \phi(x)^\top \phi(x_i).$$
Key insight:
◮ Training and prediction only use $\phi(x)^\top \phi(x')$, never an isolated $\phi(x)$;
◮ Sometimes computing $\phi(x)^\top \phi(x')$ is much easier than computing $\phi(x)$.
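A minimal sketch of the key insight, assuming NumPy and hypothetical helper names: both the dual objective and the predictor are written in terms of a kernel function $k(x, x') = \phi(x)^\top \phi(x')$ only. With the linear kernel $k(x, x') = x^\top x'$ the predictor must agree with $x^\top w$ for $w = \sum_i \alpha_i y_i x_i$, which the usage below checks. The $\alpha$ used is arbitrary, not a dual optimum.

```python
import numpy as np

def linear_kernel(a, b):
    """k(a, b) = a^T b, i.e. phi is the identity map."""
    return a @ b

def kernel_dual_objective(alpha, X, y, k):
    """sum_i alpha_i - 1/2 sum_{i,j} alpha_i alpha_j y_i y_j k(x_i, x_j)."""
    K = np.array([[k(xi, xj) for xj in X] for xi in X])  # Gram matrix K_ij = k(x_i, x_j)
    return alpha.sum() - 0.5 * (alpha * y) @ K @ (alpha * y)

def kernel_predict(x, alpha, X, y, k):
    """x -> sum_i alpha_i y_i k(x, x_i); never forms phi(x) explicitly."""
    return sum(a * yi * k(x, xi) for a, yi, xi in zip(alpha, y, X))

rng = np.random.default_rng(1)
X = rng.normal(size=(6, 2))                        # hypothetical training points
y = np.array([1.0, -1.0, 1.0, -1.0, 1.0, -1.0])
alpha = rng.uniform(0.0, 1.0, size=6)              # arbitrary alpha >= 0, not an optimum
x_new = np.array([0.3, -0.2])

w = X.T @ (alpha * y)                              # explicit weights (possible for linear kernel)
print(kernel_predict(x_new, alpha, X, y, linear_kernel), x_new @ w)
```

Swapping `linear_kernel` for any other function computing $\phi(x)^\top \phi(x')$ changes nothing else in the code.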

Quadratic expansion
◮ $\phi : \mathbb{R}^d \to \mathbb{R}^{1 + 2d + \binom{d}{2}}$, where
$$\phi(x) = \big(1,\ \sqrt{2}x_1, \dots, \sqrt{2}x_d,\ x_1^2, \dots, x_d^2,\ \sqrt{2}x_1x_2, \dots, \sqrt{2}x_1x_d, \dots, \sqrt{2}x_{d-1}x_d\big).$$
(Don't mind the $\sqrt{2}$'s...)
◮ Computing $\phi(x)^\top \phi(x')$ in $O(d)$ time:
$$\phi(x)^\top \phi(x') = (1 + x^\top x')^2.$$
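A small NumPy check of the identity, with hypothetical function names: building $\phi(x)$ explicitly takes $O(d^2)$ time and memory, while $(1 + x^\top x')^2$ needs one $O(d)$ inner product. Expanding $(1 + x^\top x')^2 = 1 + 2\sum_i x_i x'_i + \sum_i x_i^2 x_i'^2 + 2\sum_{i<j} x_i x_j x'_i x'_j$ shows the $\sqrt{2}$'s are exactly what make the two sides match.

```python
import itertools
import numpy as np

def quad_features(x):
    """Explicit quadratic expansion phi(x), of length 1 + 2d + d(d-1)/2."""
    d = len(x)
    cross = [np.sqrt(2.0) * x[i] * x[j] for i, j in itertools.combinations(range(d), 2)]
    return np.concatenate(([1.0], np.sqrt(2.0) * x, x ** 2, cross))

def quad_kernel(x, xp):
    """phi(x)^T phi(x') computed in O(d) time as (1 + x^T x')^2."""
    return (1.0 + x @ xp) ** 2

rng = np.random.default_rng(2)
x, xp = rng.normal(size=5), rng.normal(size=5)   # hypothetical inputs with d = 5
phi_x = quad_features(x)
print(len(phi_x), phi_x @ quad_features(xp), quad_kernel(x, xp))
```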
