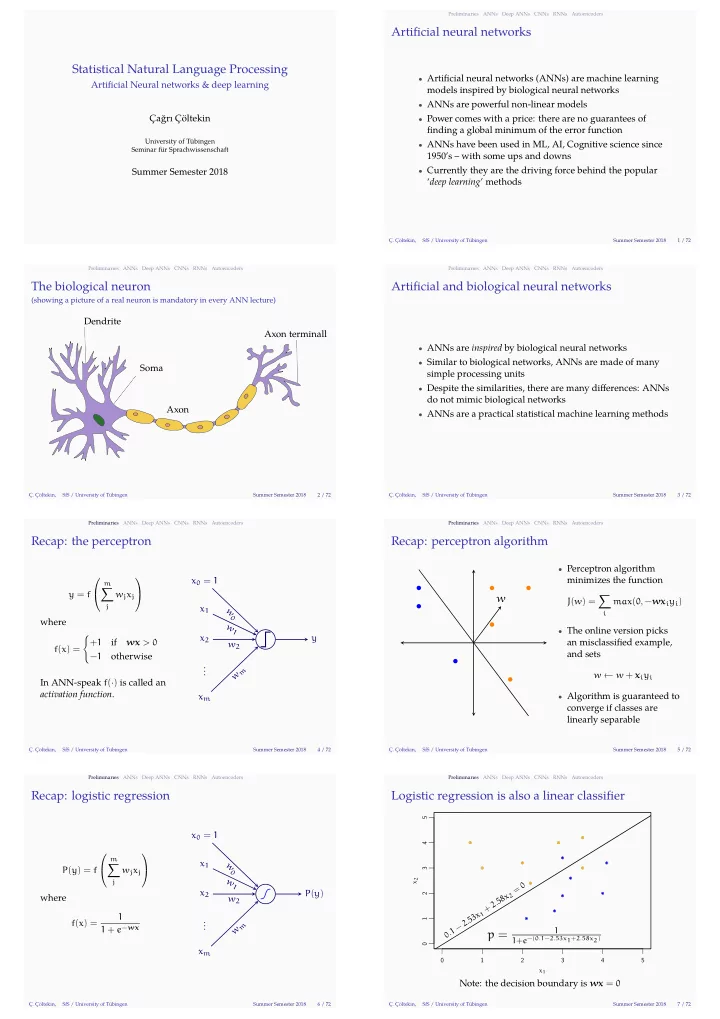

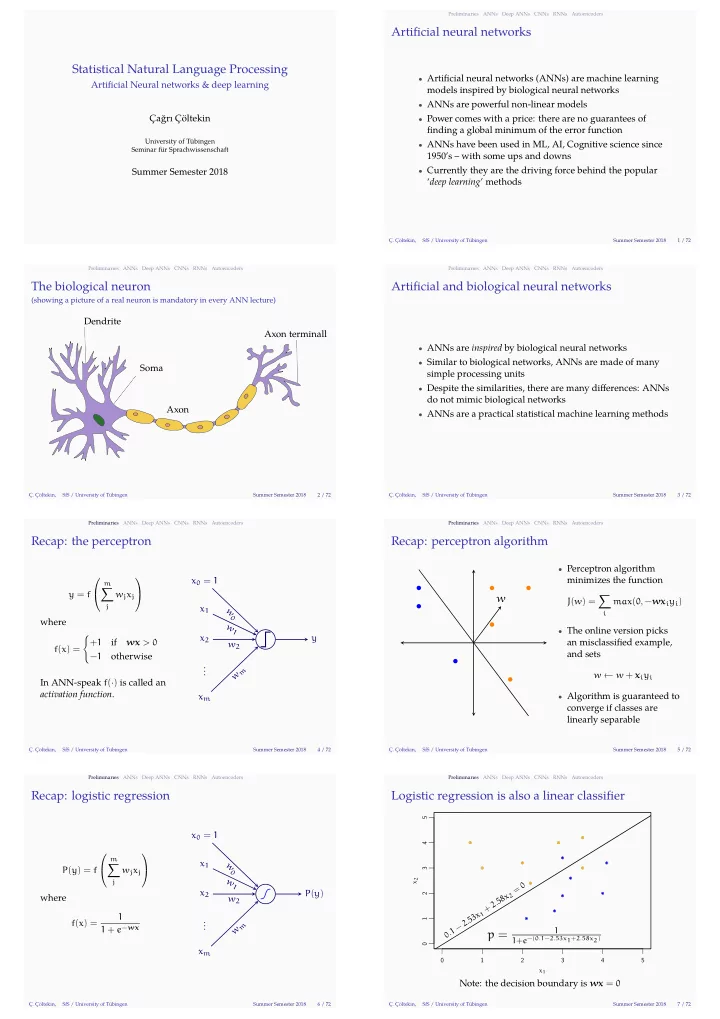

Statistical Natural Language Processing

Artificial Neural networks & deep learning

Çağrı Çöltekin

University of Tübingen, Seminar für Sprachwissenschaft

Summer Semester 2018

Preliminaries, ANNs, Deep ANNs, CNNs, RNNs, Autoencoders

Artificial neural networks

• Artificial neural networks (ANNs) are machine learning models inspired by biological neural networks

• ANNs are powerful non-linear models

• Power comes with a price: there are no guarantees of finding a global minimum of the error function

• ANNs have been used in ML, AI, Cognitive science since the 1950's, with some ups and downs

• Currently they are the driving force behind the popular 'deep learning' methods

The biological neuron

[Figure: a biological neuron with labels Dendrite, Soma, Axon, Axon terminal]

(showing a picture of a real neuron is mandatory in every ANN lecture)

Artificial and biological neural networks

• ANNs are inspired by biological neural networks

• Similar to biological networks, ANNs are made of many simple processing units

• Despite the similarities, there are many differences: ANNs do not mimic biological networks

• ANNs are practical statistical machine learning methods

Recap: the perceptron

[Figure: a perceptron unit with inputs $x_0 = 1, x_1, x_2, \ldots, x_m$, weights $w_0, w_1, w_2, \ldots, w_m$, and output $y$]

$$y = f\left(\sum_{j}^{m} w_j x_j\right) \quad \text{where} \quad f(x) = \begin{cases} +1 & \text{if } wx > 0 \\ -1 & \text{otherwise} \end{cases}$$

In ANN-speak $f(\cdot)$ is called an activation function.

Recap: perceptron algorithm

• Perceptron algorithm minimizes the function

$$J(w) = \sum_i \max(0, -w x_i y_i)$$

• The online version picks a misclassified example, and sets

$$w \leftarrow w + x_i y_i$$

• Algorithm is guaranteed to converge if classes are linearly separable

Recap: logistic regression

[Figure: a logistic regression unit with inputs $x_0 = 1, x_1, x_2, \ldots, x_m$, weights $w_0, w_1, w_2, \ldots, w_m$, and output $P(y)$]

$$P(y) = f\left(\sum_{j}^{m} w_j x_j\right) \quad \text{where} \quad f(x) = \frac{1}{1 + e^{-wx}}$$

Logistic regression is also a linear classifier

[Figure: two classes plotted over $x_1$ and $x_2$ (both axes from 0 to 5), separated by a linear decision boundary]

$$p = \frac{1}{1 + e^{-(0.1 - 2.53 x_1 + 2.58 x_2)}}$$

Note: the decision boundary is $wx = 0$
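The perceptron recap above (the objective $J(w)$ and the online update $w \leftarrow w + x_i y_i$) can be sketched in a few lines of Python. This is a minimal illustration, not code from the lecture: the function names, the `max_epochs` cap, the logical AND toy data, and the choice to treat $w x_i y_i \le 0$ as a mistake (so that learning can start from $w = 0$) are all assumptions made for the example.

```python
def dot(w, x):
    """Inner product wx of two equal-length sequences."""
    return sum(w_j * x_j for w_j, x_j in zip(w, x))


def perceptron_loss(w, X, y):
    """J(w) = sum_i max(0, -w x_i y_i); each x_i already contains x_0 = 1."""
    return sum(max(0.0, -dot(w, x_i) * y_i) for x_i, y_i in zip(X, y))


def perceptron_train(X, y, max_epochs=100):
    """Online perceptron; labels y_i must be -1 or +1."""
    w = [0.0] * len(X[0])
    for _ in range(max_epochs):  # max_epochs is an assumed safety cap
        mistakes = 0
        for x_i, y_i in zip(X, y):
            # Assumption: wx_i y_i <= 0 counts as a mistake, so w = 0 can move.
            if dot(w, x_i) * y_i <= 0:
                # Update rule from the slides: w <- w + x_i y_i
                w = [w_j + x_j * y_i for w_j, x_j in zip(w, x_i)]
                mistakes += 1
        if mistakes == 0:  # a full pass without mistakes: converged
            break
    return w


def predict(w, x):
    """Activation f: +1 if wx > 0, -1 otherwise."""
    return 1 if dot(w, x) > 0 else -1


# Toy linearly separable problem (logical AND); first component is x_0 = 1.
X_and = [[1, 0, 0], [1, 0, 1], [1, 1, 0], [1, 1, 1]]
y_and = [-1, -1, -1, 1]
w_and = perceptron_train(X_and, y_and)
predictions = [predict(w_and, x) for x in X_and]
```

Because the AND data is linearly separable, the loop stops once every example is classified correctly, at which point `perceptron_loss` evaluates to zero. On data that is not linearly separable the loop would simply run until `max_epochs`.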

Linear separability

• A classification problem is said to be linearly separable if one can find a linear discriminator

• A well-known counter example is the logical XOR problem

| $x_1$ | $x_2$ | $x_1$ XOR $x_2$ |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

[Figure: the four XOR points plotted over $x_1$ and $x_2$, marked + and −]

There is no line that can separate positive and negative classes.

Can a linear classifier learn the XOR problem?

• We can use non-linear basis functions

$$w_0 + w_1 x_1 + w_2 x_2 + w_3 \phi(x_1, x_2)$$

is still linear in $w$ for any choice of $\phi(\cdot)$

• For example, adding the product $x_1 x_2$ as an additional feature would allow a solution like: $x_1 + x_2 - 2 x_1 x_2$

• Choosing proper basis functions like $x_1 x_2$ is called feature engineering

[Figure: the XOR data with the additional feature $x_1 x_2$ and the solution $x_1 + x_2 - 2 x_1 x_2$]

Multi-layer perceptron

• The simplest modern ANN architecture is called multi-layer perceptron (MLP)

• The MLP is a fully connected, feed-forward network consisting of perceptron-like units

• Unlike perceptron, the units in an MLP use a continuous activation function

• The MLP can be trained using gradient-based methods

• The MLP can represent many interesting machine learning problems

– It can be used for both regression and classification

Multi-layer perceptron: the picture

[Figure: an MLP with inputs $x_1, x_2, x_3, x_4$ and output $y$; layers labelled Input, Hidden, Output]

Each unit takes a weighted sum of their input, and applies a (non-linear) activation function.

An artificial neuron

[Figure: a unit with inputs $x_0 = 1, x_1, x_2, \ldots, x_m$, weights $w_0, w_1, w_2, \ldots, w_m$, a summation $\sum$ followed by $f(\cdot)$, and output $y$]

• The unit calculates a weighted sum of the inputs

$$\sum_{j}^{m} w_j x_j = wx$$

• Result is a linear transformation

• Then the unit applies a non-linear activation function $f(\cdot)$

• Output of the unit is

$$y = f(wx)$$

Artificial neuron: an example

[Figure: the same unit with inputs $x_0 = 1, x_1, x_2, \ldots, x_m$, weights $w_0, w_1, w_2, \ldots, w_m$, and output $y$]

• A common activation function is logistic sigmoid

$$f(x) = \frac{1}{1 + e^{-x}}$$

• The output of the network becomes

$$y = \frac{1}{1 + e^{-wx}}$$

Activation functions in ANNs: hidden units

• The activation functions in MLP are typically continuous (differentiable) functions

• For hidden units common choices are

– Sigmoid (logistic): $\frac{1}{1 + e^{-x}}$

– Hyperbolic tangent (tanh): $\frac{e^{2x} - 1}{e^{2x} + 1}$

– Rectified linear unit (relu): $\max(0, x)$

Activation functions in ANNs: output units

• The activation functions of the output units depend on the task

– For regression, identity function

– For binary classification, logistic sigmoid

$$P(y = 1 \mid x) = \frac{1}{1 + e^{-wx}} = \frac{e^{wx}}{1 + e^{wx}}$$

– For multi-class classification, softmax

$$P(y = k \mid x) = \frac{e^{w_k x}}{\sum_j e^{w_j x}}$$
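The activation functions and the single-unit computation $y = f(wx)$ described above, together with the XOR solution $x_1 + x_2 - 2 x_1 x_2$, can be checked with a short Python sketch. The function names (`sigmoid`, `tanh`, `relu`, `softmax`, `unit`, `xor_score`) are hypothetical and chosen only for this illustration; the maximum-subtraction in `softmax` is an added numerical-stability step that is not on the slides and does not change the result.

```python
import math


def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + e^{-x})."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Hyperbolic tangent written as (e^{2x} - 1) / (e^{2x} + 1)."""
    return (math.exp(2 * x) - 1.0) / (math.exp(2 * x) + 1.0)


def relu(x):
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)


def softmax(scores):
    """P(y = k | x) = e^{s_k} / sum_j e^{s_j}, where s_k stands for w_k x."""
    m = max(scores)  # assumption: subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def unit(w, x, f=sigmoid):
    """One artificial neuron: weighted sum wx, then activation f."""
    wx = sum(w_j * x_j for w_j, x_j in zip(w, x))  # x[0] = 1 is the bias input
    return f(wx)


def xor_score(x1, x2):
    """Linear in w over the features (x1, x2, x1*x2), with w = (1, 1, -2)."""
    return x1 + x2 - 2 * x1 * x2


# The extra feature x1*x2 reproduces the XOR truth table exactly.
xor_table = {(x1, x2): xor_score(x1, x2) for x1 in (0, 1) for x2 in (0, 1)}
```

With all weights at zero, `unit` returns 0.5 for any input, which is the sigmoid's value on the decision boundary $wx = 0$. Passing `f=tanh` or `f=relu` to `unit` swaps the hidden-unit activation without changing the weighted-sum step.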
