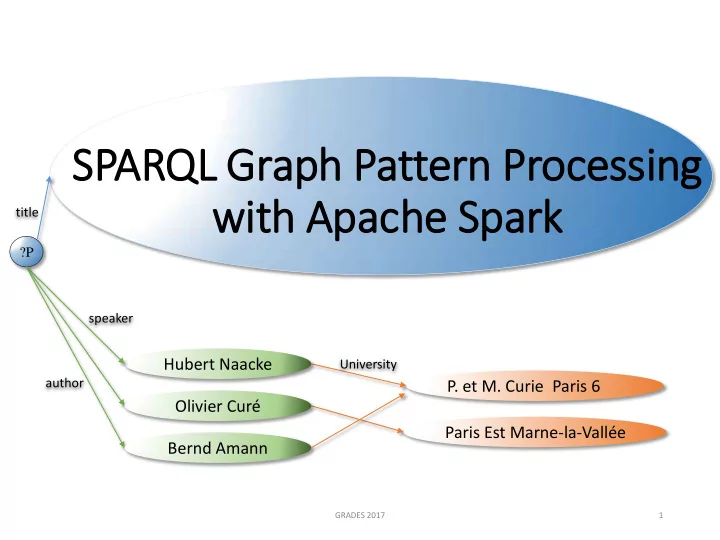

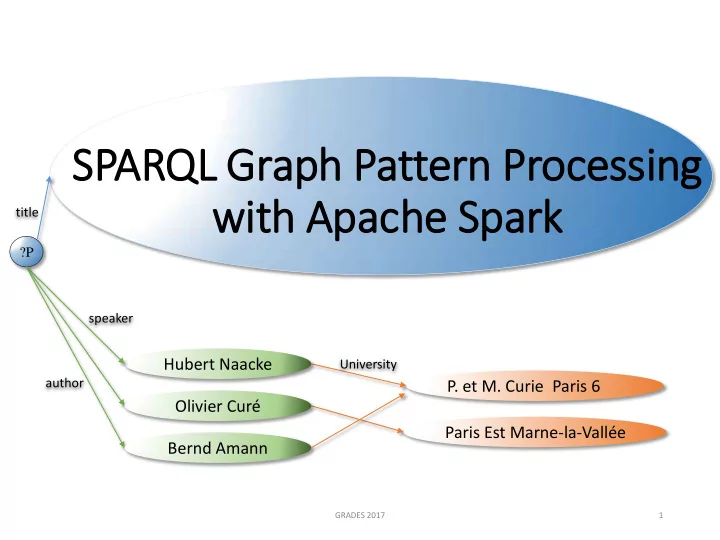

SPARQL Graph Pattern Processing with Apache Spark
Speaker: Hubert Naacke
Authors: Hubert Naacke and Bernd Amann (Université Pierre et Marie Curie, Paris 6), Olivier Curé (Université Paris-Est Marne-la-Vallée)
GRADES 2017

Context
• Big RDF data
  • Linked Open Data momentum: ever-growing RDF content
  • Large datasets: billions of <subject, property, object> triples, e.g. DBpedia
• Querying RDF data with SPARQL
  • The building block is the Basic Graph Pattern (BGP) query, e.g.:
    • a snowflake pattern from the WatDiv benchmark, with properties such as offers and includes linking Retail0, ?x and ?u
    • a chain pattern from the LUBM benchmark: t1 = ?x advisor ?y, t2 = ?y teacherOf ?z, t3 = ?z type Course

Cluster computing platforms
• Cluster computing platforms provide:
  • main-memory data management
  • distributed and parallel data access and processing
  • fault tolerance and high availability
➭ Leverage an existing platform, e.g. Apache Spark

SPARQL on Spark: architecture
• Input: a SPARQL graph pattern query
• Query evaluation methods: SPARQL SQL, SPARQL RDD and SPARQL DF, plus our solutions Hybrid RDD and Hybrid DF
• Spark data layers: Spark SQL / DataFrame (DF), with data compression; GraphX; Resilient Distributed Datasets (RDD), without compression
• Cluster resource management
• Distributed file system storing the RDF triples

SPARQL query evaluation: challenges
• Requirements
  • Low memory usage: no data replication, no indexing
  • Fast data preparation: simple hash-based partitioning on <subject>
• Challenges
  • Efficiently evaluate parallel and distributed join plans with Spark
    ➭ Favor local computation
    ➭ Reduce data transfers
  • Benefit from several join algorithms:
    • Local partitioned join: no transfer
    • Distributed partitioned join
    • Broadcast join

Solution
• Local subquery evaluation
  • Merge multiple triple selections, a.k.a. shared scan
• Distributed query evaluation
  • Cost model for partitioned and broadcast joins
  • Generate hybrid join plans with dynamic programming
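A single-pass shared scan can be sketched in plain Python, assuming triples are `(subject, property, object)` string tuples and each triple pattern is described by its constant property plus an optional constant object. The function name `shared_scan` and this pattern encoding are hypothetical illustrations, not the authors' Spark code; the point is that the data is read once and each triple is routed to every pattern it matches, instead of one scan per pattern.

```python
from collections import defaultdict


def shared_scan(triples, patterns):
    """Evaluate several triple selections with one pass over the data.

    patterns: {name: (property, object_or_None)}; None means the object
    is a variable. Returns {name: [matching triples]}.
    """
    # Index the patterns by property once, so each triple is checked
    # only against the patterns that could match it.
    by_prop = defaultdict(list)
    for name, (prop, obj) in patterns.items():
        by_prop[prop].append((name, obj))

    results = {name: [] for name in patterns}
    for s, p, o in triples:  # the single shared scan
        for name, obj in by_prop.get(p, ()):
            if obj is None or obj == o:
                results[name].append((s, p, o))
    return results


# Q9's three patterns: ?x advisor ?y, ?y teacherOf ?z, ?z type Course
q9 = {"t1": ("advisor", None), "t2": ("teacherOf", None), "t3": ("type", "Course")}
data = [("a", "advisor", "b"), ("b", "teacherOf", "c1"),
        ("c1", "type", "Course"), ("c2", "type", "Student")]
selected = shared_scan(data, q9)
```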

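Hybrid plan: example and cost model
Query Q9 (LUBM, chain pattern):
SELECT * WHERE { ?x advisor ?y . ?y teacherOf ?z . ?z type Course }
Triple patterns of Q9: t1 = ?x advisor ?y, t2 = ?y teacherOf ?z, t3 = ?z type Course
Legend: P = partitioned join (distribute), B = broadcast join (broadcast); ⋈y and ⋈z are joins on ?y and ?z.
• Q9_1, SPARQL RDD plan: partitioned joins only; t1, t2 and t2 ⋈ t3 are repartitioned
• Q9_2, SPARQL DF plan: broadcast joins only; t2 and t3 are broadcast
• Q9_3, SPARQL Hybrid plan: t1 is repartitioned for a partitioned join on ?y, and t3 is broadcast for the join on ?z
Plan costs:
$$\mathrm{cost}(Q9_1) = C_{t_1} + C_{t_2} + C_{t_2 \bowtie t_3}$$
$$\mathrm{cost}(Q9_2) = m\,(C_{t_2} + C_{t_3})$$
$$\mathrm{cost}(Q9_3) = C_{t_1} + m\,C_{t_3}$$
with $C_{\text{pattern}} = \mathrm{transferCost}(\text{pattern})$, expressed with the unit transfer cost $\theta_{comm}$, and $m = \#\text{computeNodes} - 1$.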
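The three cost formulas can be evaluated directly to compare plans. In this Python sketch the pattern sizes are hypothetical inputs (the talk obtains intermediate sizes at runtime), `theta_comm` defaults to 1, $C_{\text{pattern}} = \theta_{comm} \cdot \mathrm{size}(\text{pattern})$ as stated on the cost-model extra slide, and `m` is derived from the number of compute nodes as above. The function name `q9_plan_costs` is illustrative only.

```python
def q9_plan_costs(size_t1, size_t2, size_t3, size_t2_join_t3,
                  nb_compute_nodes, theta_comm=1):
    """Transfer costs of the three Q9 plans, following the slide's formulas."""
    m = nb_compute_nodes - 1  # nodes that must receive a broadcast copy

    def c(size):
        # C_pattern = theta_comm * size(pattern)
        return theta_comm * size

    return {
        "Q9_1": c(size_t1) + c(size_t2) + c(size_t2_join_t3),  # Pjoin only
        "Q9_2": m * (c(size_t2) + c(size_t3)),                 # BrJoin only
        "Q9_3": c(size_t1) + m * c(size_t3),                   # hybrid
    }


# Hypothetical sizes: a selective t3 (few courses) favours broadcasting it.
costs = q9_plan_costs(size_t1=1_000_000, size_t2=500_000, size_t3=1_000,
                      size_t2_join_t3=400_000, nb_compute_nodes=17)
best_plan = min(costs, key=costs.get)
```

With these numbers the hybrid plan is cheapest (1,016,000 units versus 1,900,000 for Q9_1 and 8,016,000 for Q9_2): broadcasting pays off only because t3 is small, while the large t2 is better left in place.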

Performance comparison with S2RDF
• S2RDF, published at VLDB 2016
• Same dataset (1B triples) and queries
• Various query patterns: Star (S), Snowflake (F), Complex (C)
• One dataset with <subject> partitioning: Hybrid DF is up to 2.4 times faster than SPARQL DF
• One dataset per property with <property> and <subject> partitioning: Hybrid is up to 2.2 times faster than S2RDF
[Chart: response times for star, snowflake and complex queries]

Take-home message
• Existing cluster computing platforms are mature enough to process SPARQL queries at large scale.
• To accelerate query plans:
  • provide several distributed join algorithms
  • allow several join algorithms to be mixed within one plan
More information at the poster session. Thank you. Questions?

Existing solutions
• S2RDF (VLDB 2016)
  • Spark
  • Long data preparation time
  • Uses a single join algorithm
• CliqueSquare (ICDE 2015)
  • Hadoop platform
  • Data replicated 3 times: by subject, property and object
• AdPart (VLDBJ 2016)
  • Native distributed layer
  • Semi-join-based join algorithm
• Distributed RDFox (ISWC 2016)
  • Native distributed layer
  • Data shuffling

Conclusion
• First detailed analysis of SPARQL processing on Spark
• Cost model aware of data transfers
• Efficient query plan generation
  • Optimality not studied (future work)
• Extensive experiments at large scale
• Future work: incorporate other recent join algorithms
  • Handle data skew
  • Hypercube n-way join, which targets load balancing

Thank you. Questions?

Extra slides

Hybrid plan: cost model
• Same three plans for Q9 (t1 = ?x advisor ?y, t2 = ?y teacherOf ?z, t3 = ?z type Course), labelled here SPARQL RDD plan (Q9_1), SPARQL SQL plan (Q9_2) and SPARQL Hybrid plan (Q9_3)
$$\mathrm{cost}(Q9_1) = C_{t_1} + C_{t_2} + C_{t_2 \bowtie t_3}, \quad \mathrm{cost}(Q9_2) = m\,(C_{t_2} + C_{t_3}), \quad \mathrm{cost}(Q9_3) = C_{t_1} + m\,C_{t_3}$$
with $C_{\text{pattern}} = \theta_{comm} \cdot \mathrm{size}(\text{pattern})$, where $\theta_{comm}$ is the unit transfer cost, and $m = \#\text{computeNodes} - 1$.

Data distribution (1/2): hash-based partitioning
Dataset (subject, prop, object):
  s1 p1 o1
  s2 p1 o2
  s3 p1 o2
  s1 p2 o3
  s2 p3 o4
  ...
Partitioning is:
• straightforward
• a simple map-reduce task
• free of any preparation overhead
• hash-based on the subject, producing Part 1, Part 2, …, Part N
BDA 2016
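Subject-based hash partitioning amounts to one pass that sends each triple to `hash(subject) mod N`. Spark's `HashPartitioner` plays this role on key-value RDDs; the stand-alone Python sketch below is only an illustration and uses CRC32 instead of the JVM `hashCode`, so its partition numbers will differ from Spark's. The function name `partition_by_subject` is hypothetical.

```python
import zlib


def partition_by_subject(triples, nb_partitions):
    """Hash-partition (s, p, o) triples on their subject."""
    parts = [[] for _ in range(nb_partitions)]
    for s, p, o in triples:
        # CRC32 gives a hash that is stable across runs, unlike Python's
        # salted built-in hash() for strings.
        parts[zlib.crc32(s.encode("utf-8")) % nb_partitions].append((s, p, o))
    return parts


dataset = [("s1", "p1", "o1"), ("s2", "p1", "o2"), ("s3", "p1", "o2"),
           ("s1", "p2", "o3"), ("s2", "p3", "o4")]
parts = partition_by_subject(dataset, 3)
```

Whatever the hash function, all triples of a given subject land in the same part, which is what makes star (subject-subject) joins local.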

Data distribution (2/2): over a cluster
• Compute nodes 1, 2, …, N each hold a piece of the data
• Node resources: memory and CPU; an operation reads the local data in memory and produces its result in memory
• Communication is expensive

Parallel and distributed data processing workflow (1/2)
• Partitioned dataset: Part 1, Part 2, …, Part N on compute nodes 1, 2, …, N
• A local (MAP) operation, e.g. select, runs on each part independently and yields Result 1, Result 2, …, Result N: a partitioned result
• Examples of local MAP operations: selection, projection, join on subject
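Because the data is partitioned on subject, a join on subject can run as a purely local MAP operation, much like Spark's `RDD.mapPartitions`. This Python sketch simulates that with lists of parts; the helper names `local_subject_join` and `map_partitions` are hypothetical, and the sample triples reuse the persons of the join-processing example, with an assumed placement of P1, P2 in one part and P3 in the other.

```python
from collections import defaultdict


def local_subject_join(part, prop_a, prop_b):
    """Join ?x prop_a ?a . ?x prop_b ?b within one subject-partitioned part."""
    objects_a = defaultdict(list)
    for s, p, o in part:
        if p == prop_a:
            objects_a[s].append(o)
    return [(s, a, o) for s, p, o in part if p == prop_b
            for a in objects_a.get(s, ())]


def map_partitions(parts, fn, *args):
    """Apply fn to each part independently: no record leaves its part."""
    return [fn(part, *args) for part in parts]


# Two parts, already partitioned on subject (persons P1, P2 | P3).
parts = [[("P1", "lab", "L1"), ("P1", "name", "Ali"),
          ("P2", "lab", "L3"), ("P2", "name", "Bob")],
         [("P3", "lab", "L2"), ("P3", "name", "Clo")]]
star = map_partitions(parts, local_subject_join, "lab", "name")
```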

Parallel and distributed data processing workflow (2/2)
• Dataset parts 1, 2, …, n are exchanged between nodes (data transfers)
• A global (REDUCE) operation then runs on each node and yields Result 1, Result 2, …, Result n
• Examples of global REDUCE operations: join, sort, distinct
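A global operation such as distinct first shuffles records so that equal keys meet on the same node, then finishes locally. The Python sketch below simulates this under two assumptions: part `i` lives on node `i`, and CRC32 stands in for the platform's hash function. The helper names `shuffle` and `global_distinct` are hypothetical; the count of moved records is what the data-transfer cost measures.

```python
import zlib


def shuffle(parts, key_fn):
    """Repartition records by hash of key_fn(record).

    Assumes part i lives on node i; returns the new parts and the number
    of records that had to move to another node.
    """
    n = len(parts)
    out = [[] for _ in range(n)]
    moved = 0
    for src, part in enumerate(parts):
        for rec in part:
            dst = zlib.crc32(str(key_fn(rec)).encode("utf-8")) % n
            if dst != src:
                moved += 1
            out[dst].append(rec)
    return out, moved


def global_distinct(parts):
    """Distinct as a global (REDUCE) operation: shuffle, then dedupe locally."""
    shuffled, moved = shuffle(parts, key_fn=lambda rec: rec)
    # Equal records now share a node, so a local set removes all duplicates.
    return [sorted(set(part)) for part in shuffled], moved


cities = [["Poitiers", "Aix"], ["Poitiers", "Paris"]]
distinct_parts, moved = global_distinct(cities)
```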

Join processing w.r.t. the query pattern
Data:
• Persons: P1 lab L1, P1 name Ali, P3 lab L2, P3 name Clo, P2 lab L3, P2 age 20, P2 name Bob, P4 lab L1, …
• Labs: L1 at Poitiers, L1 since 2000, L3 at Paris, L3 staff 200, L2 at Aix, L2 at Toulon, L2 partner L1
Queries:
• Star query: find the laboratory and name of persons (?P lab ?L . ?P name ?N): no transfer
• Chain query: find the lab of persons and its city (?P lab ?L . ?L at ?V): transfer
• Snowflake and complex queries combine such patterns over the properties lab, at, name, age, staff and partner
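The "no transfer" versus "transfer" distinction follows from the partitioning key alone. A minimal Python sketch, assuming triple patterns are written as `(subject, property, object)` string tuples with `?` marking variables: with <subject> hash partitioning, two patterns are co-located only when they share their subject, so grouping patterns by subject yields the stars that can be joined locally. Both helper names are hypothetical.

```python
from collections import defaultdict


def needs_transfer(tp1, tp2):
    """With <subject> hash partitioning, only patterns sharing their subject
    are co-located; joining any other pair moves data between nodes."""
    return tp1[0] != tp2[0]


def group_into_stars(patterns):
    """Group triple patterns by subject; each group is a star joined locally."""
    stars = defaultdict(list)
    for tp in patterns:
        stars[tp[0]].append(tp)
    return dict(stars)


star_query = [("?P", "lab", "?L"), ("?P", "name", "?N")]
chain_query = [("?P", "lab", "?L"), ("?L", "at", "?V")]
```

The star query forms a single group, whereas the chain query splits into two single-pattern stars whose join on ?L requires moving data.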

Join algorithms
• Partitioned join (Pjoin)
  • Distribute data
• Broadcast join (BrJoin)
  • Broadcast to all nodes
• Hybrid join (our contribution)
  • Distribute and/or broadcast
  • Based on a cost model

Cost of join (1/2): partitioned join
• Partitioned inputs: Part 1 = {P1 lab L1, P2 lab L3}, Part n = {P3 lab L2, P4 lab L1}; Part 1 = {C1 loc L3, C2 loc L1}, Part n = {C3 loc L1, C4 loc L2}
• Both inputs are repartitioned by hashing on L, so that e.g. P1 lab L1, P4 lab L1, C3 loc L1 and C2 loc L1 meet on the node that joins on L1
• Data transfers = sum of the sizes of the repartitioned datasets
• Each node then joins locally (join on L1, join on L2, join on L3): P1 lab L1 at Poitiers, P4 lab L1 at Poitiers, P3 lab L2 at Aix, P3 lab L2 at Toulon, P2 lab L3 at Paris
• The result is partitioned on L
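A partitioned join can be simulated in Python by hashing both inputs on the join key and then running a local hash join on each node. This sketch joins the chain pattern `?P lab ?L . ?L at ?V` on the sample data; it assumes part `i` lives on node `i` and uses CRC32 as the hash. Unlike the slide's cost, which counts the full size of both repartitioned inputs, the sketch counts only records that actually change node, which is at most that amount. All names are hypothetical.

```python
import zlib
from collections import defaultdict


def node_of(key, nb_nodes):
    return zlib.crc32(key.encode("utf-8")) % nb_nodes


def partitioned_join(left_parts, right_parts, left_key, right_key):
    """Pjoin: repartition both inputs on the join key, then join locally.

    Assumes part i lives on node i. Returns (result parts, moved records).
    """
    n = len(left_parts)
    left_at = [[] for _ in range(n)]
    right_at = [[] for _ in range(n)]
    moved = 0
    for parts, key, dest in ((left_parts, left_key, left_at),
                             (right_parts, right_key, right_at)):
        for src, part in enumerate(parts):
            for rec in part:
                dst = node_of(key(rec), n)
                if dst != src:
                    moved += 1
                dest[dst].append(rec)
    result = [[] for _ in range(n)]
    for node in range(n):
        index = defaultdict(list)  # local hash join on each node
        for rec in right_at[node]:
            index[right_key(rec)].append(rec)
        for rec in left_at[node]:
            for match in index.get(left_key(rec), ()):
                result[node].append(rec + match[1:])
    return result, moved


labs = [[("P1", "lab", "L1"), ("P2", "lab", "L3")],
        [("P3", "lab", "L2"), ("P4", "lab", "L1")]]
cities = [[("L1", "at", "Poitiers"), ("L2", "at", "Aix")],
          [("L2", "at", "Toulon"), ("L3", "at", "Paris")]]
joined, moved = partitioned_join(labs, cities,
                                 left_key=lambda r: r[2], right_key=lambda r: r[0])
```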

Cost of join (2/2): broadcast join
• Larger target dataset, kept in place: Part 1 = {P1 lab L1, P2 lab L3}, Part n = {P3 lab L2, P4 lab L1}
• Smaller dataset, broadcast to every node: L1 at Poitiers, L2 at Toulon, L2 at Aix, L3 at Paris
• Data transfers = size of the small dataset × number of compute nodes
• Each node joins on L: Part 1 yields P1 lab L1 at Poitiers, P2 lab L3 at Paris; Part n yields P3 lab L2 at Aix, P3 lab L2 at Toulon, P4 lab L1 at Poitiers
• The result preserves the target partitioning
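The broadcast join can be simulated the same way: the small dataset is indexed once and every target part is joined where it lives. This Python sketch counts one copy of the small dataset per compute node, as the slide does; the cost model's factor $m = \#\text{computeNodes} - 1$ instead skips the copy a node already holds. The names `broadcast_join`, `labs` and `cities` are hypothetical.

```python
from collections import defaultdict


def broadcast_join(target_parts, small, target_key, small_key):
    """BrJoin: ship the small dataset to every node, join in place.

    Returns (result parts, transferred records), counting one copy of
    `small` per compute node as on the slide.
    """
    index = defaultdict(list)
    for rec in small:
        index[small_key(rec)].append(rec)
    transfers = len(small) * len(target_parts)
    # Each target part is joined where it lives, so the result keeps
    # the target partitioning.
    result = [[rec + match[1:] for rec in part
               for match in index.get(target_key(rec), ())]
              for part in target_parts]
    return result, transfers


labs = [[("P1", "lab", "L1"), ("P2", "lab", "L3")],
        [("P3", "lab", "L2"), ("P4", "lab", "L1")]]
cities = [("L1", "at", "Poitiers"), ("L2", "at", "Toulon"),
          ("L2", "at", "Aix"), ("L3", "at", "Paris")]
joined, transfers = broadcast_join(labs, cities,
                                   target_key=lambda r: r[2], small_key=lambda r: r[0])
```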

Proposed solution: hybrid join plan
• Cost model for Pjoin and BrJoin
  • Aware of data partitioning and of the number of compute nodes
  • Uses the size of intermediate results
• Handle plans of star patterns
  • Star = local Pjoin
  • Get a linear join plan of stars
  • Often with successive BrJoins between selective stars
• Build the plan at runtime
  • Get the size of intermediate results

Build a hybrid join plan
1) Compute all stars S1, S2, …
  • Si = Pjoin(t1, t2, …)
2) Join two stars, say Si with Sj
  • Choose Si, Sj and a join algorithm such that cost(Si ⨝ Sj) is minimal
  • Let Temp = Si ⨝ Sj
3) Continue with a third star, say Sk
  • Ensure cost(Temp ⨝ Sk) is minimal, and so on…
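The three steps can be sketched as a greedy procedure in Python. Everything here is a simplifying assumption rather than the authors' optimizer: the cost helpers reuse the transfer formulas of the join-cost slides (Pjoin counts both inputs as repartitioned, BrJoin copies the smaller input to m = nodes − 1 nodes), every pair of stars is assumed joinable, existing co-partitioning is ignored, and `join_size` is a hypothetical stand-in for the intermediate sizes the system measures at runtime.

```python
from itertools import combinations


def pjoin_cost(left_size, right_size, theta=1.0):
    # Upper bound: both inputs are repartitioned on the join key.
    return theta * (left_size + right_size)


def brjoin_cost(left_size, right_size, nb_nodes, theta=1.0):
    # The smaller input is copied to the m = nb_nodes - 1 other nodes.
    return theta * (nb_nodes - 1) * min(left_size, right_size)


def cheapest_join(left_size, right_size, nb_nodes):
    options = {"Pjoin": pjoin_cost(left_size, right_size),
               "BrJoin": brjoin_cost(left_size, right_size, nb_nodes)}
    algo = min(options, key=options.get)
    return algo, options[algo]


def greedy_star_plan(star_sizes, join_size, nb_nodes):
    """star_sizes: {star: size} (at least 2 stars);
    join_size(frozenset of stars) -> size of their join."""
    pairs = []
    for x, y in combinations(sorted(star_sizes), 2):
        algo, cost = cheapest_join(star_sizes[x], star_sizes[y], nb_nodes)
        pairs.append((cost, x, y, algo))
    cost, x, y, algo = min(pairs)           # step 2: cheapest pair of stars
    plan, total, done = [(x, y, algo)], cost, {x, y}
    temp_size = join_size(frozenset(done))  # size of Temp
    while len(done) < len(star_sizes):      # step 3: add one star at a time
        options = []
        for s in sorted(set(star_sizes) - done):
            algo, cost = cheapest_join(temp_size, star_sizes[s], nb_nodes)
            options.append((cost, s, algo))
        cost, s, algo = min(options)
        plan.append(("Temp", s, algo))
        total += cost
        done.add(s)
        temp_size = join_size(frozenset(done))
    return plan, total


# Hypothetical star sizes; every intermediate join is assumed to yield 5 rows.
plan, total = greedy_star_plan({"S1": 1000, "S2": 10, "S3": 50},
                               join_size=lambda stars: 5, nb_nodes=17)
```

Here the two small stars are joined first with a Pjoin, and the resulting small Temp is then broadcast to join the large star S1, illustrating how selective intermediate results lead to BrJoins.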

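SPARQL on Spark: qualitative comparison
Comparison criteria: co-partitioning, join plan, merged selection, query optimizer, data compression (each marked supported / not supported).

| Method | Type | Join plan | Query optimizer |
|---|---|---|---|
| SPARQL RDD | Spark interface | Pjoin | – |
| SPARQL DF | Spark interface (v1.5) | Pjoin, BrJoin¹ | poor |
| SPARQL SQL | Spark interface (v1.5) | Pjoin, BrJoin¹ | cross products |
| Hybrid RDD | our solution | Pjoin + BrJoin | cost-based |
| Hybrid DF | our solution | Pjoin + BrJoin | cost-based |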

Experimental validation: setup
• Datasets

| Dataset | Nb of triples | Description |
|---|---|---|
| DrugBank | 500K | Real dataset |
| LUBM | 1.3B | Synthetic data, Lehigh University |
| WatDiv | 1.1B | Synthetic data, University of Waterloo |

• Cluster
  • 17 compute nodes
  • Resources per node: 12 cores × 2 hyperthreads, 64 GB memory
  • 1 Gb/s interconnect
• Spark
  • 16 worker nodes
  • Aggregated resources: 300 cores, 800 GB memory
• Solution
  • Implementation written in Scala, see the companion website

Experiments: performance gain
• Response time for the snowflake query Q8 from LUBM
• Two dataset sizes: medium (100M triples) and large (1B triples)
• The gain is higher for larger datasets: 4.7 times faster without compression, 3 times faster on compressed data
[Chart: response time by dataset size]

Thanks for your attention. Questions?

