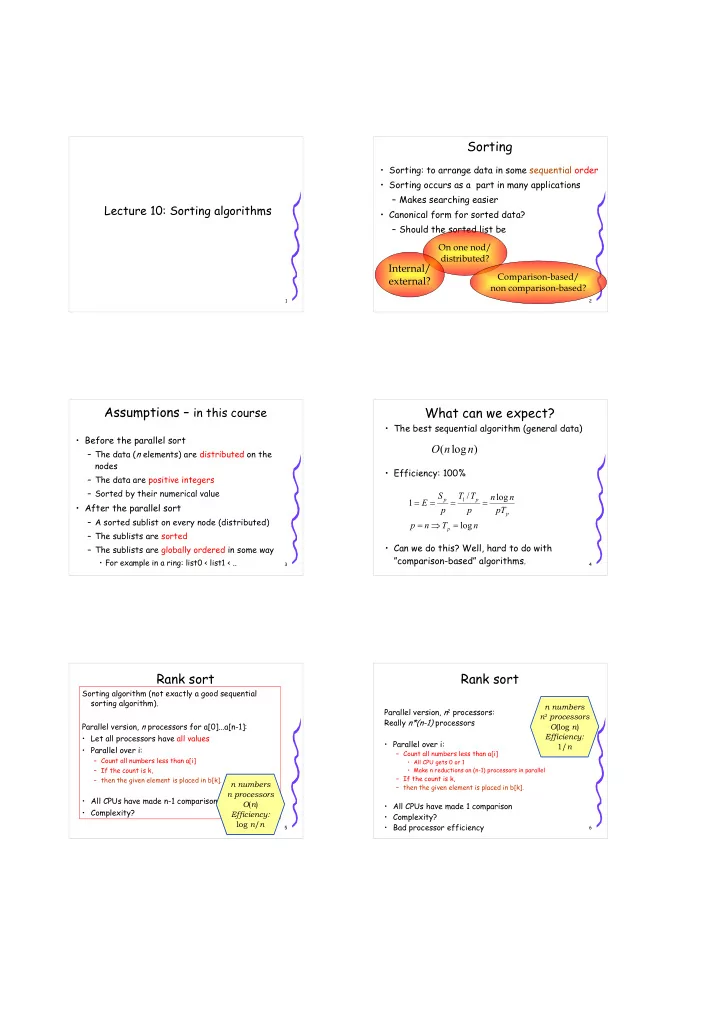

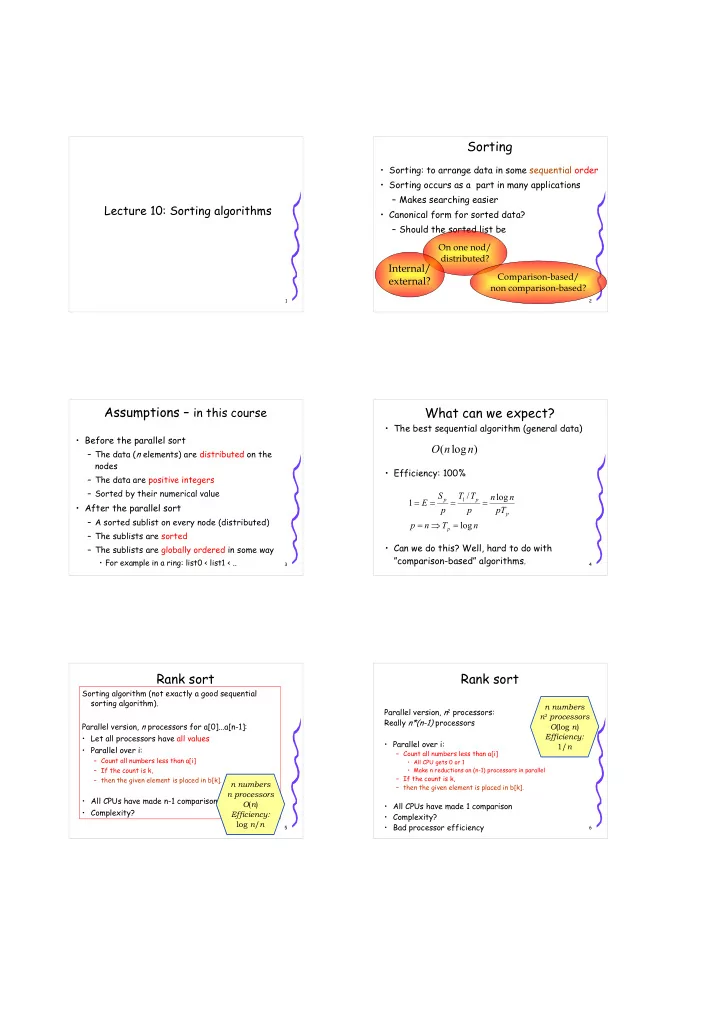

Sorting • Sorting: to arrange data in some sequential order • Sorting occurs as a part in many applications – Makes searching easier Lecture 10: Sorting algorithms • Canonical form for sorted data? – Should the sorted list be On one nod/ distributed? Internal/ Comparison-based/ external? non comparison-based? 1 2 Assumptions – in this course What can we expect? • The best sequential algorithm (general data) • Before the parallel sort O ( n log n ) – The data ( n elements) are distributed on the nodes • Efficiency: 100% – The data are positive integers – Sorted by their numerical value S T / T n log n = = p = 1 p = 1 E • After the parallel sort p p pT p – A sorted sublist on every node (distributed) = ⇒ = p n T log n p – The sublists are sorted • Can we do this? Well, hard to do with – The sublists are globally ordered in some way ”comparison-based” algorithms. • For example in a ring: list0 < list1 < .. 3 4 Rank sort Rank sort Sorting algorithm (not exactly a good sequential sorting algorithm). n numbers Parallel version, n 2 processors: n 2 processors Really n*(n-1) processors Parallel version, n processors for a[0]...a[n-1]: O (log n ) Efficiency: • Let all processors have all values • Parallel over i: 1/ n • Parallel over i: – Count all numbers less than a[i] – Count all numbers less than a[i] • All CPU gets 0 or 1 – If the count is k, • Make n reductions on (n-1) processors in parallel – If the count is k, – then the given element is placed in b[k]. n numbers – then the given element is placed in b[k]. n processors • All CPUs have made n-1 comparisons O ( n ) • All CPUs have made 1 comparison • Complexity? Efficiency: • Complexity? log n / n • Bad processor efficiency 5 6

Merge strategy Compare-and-exchange Bitonic-, Shell-, Quick- sort Bubble sort on an array/ring, n CPUs on n elements: 1) Sort every sublist by using a fast 1. The left node sends its largest number to the right, sequential method (e.g., quicksort) the right node send its smallest to the left 2) Exchange data between neighbors so that 2. Inserts the elements in their lists, puts an element all elements in the list on a node are smaller over the processor border than the other's (compare-exchange) 3. When they are not able to insert a number, ready A B • Communication is cheaper per exchange if larger < > parts are sent • Sending larger parts (the whole list ⇒ to much memory) 7 8 Mergesort Bubble sort/Odd-even Transposition Good sequentially • The bubbling of the next element can be n numbers But parallel? Results started before the previous has been n processors 4 8 7 2 finished: pipeline in a tree structure! O ( n ) • Odd-even Transposition sort: Efficiency: Unfortunately, we will not 4 8 7 2 log n / n reach log( n ) . • Every other (odd/even) comparison can O ( n ) is the minimum be done in parallel 4 8 7 2 1. Compare every odd element A 2 i-1 with the n numbers 4 8 2 7 next, even, element A 2 i n processors O ( n ) 2. Compare every even element A 2 i with the Efficiency: 2 4 7 8 next, odd, element A 2 i+1 log( n )/ n … 3. Last pass 9 10 2D sorting: Shearsort 2D sorting: Shearsort ”Snakesort” ”Snakesort” with transposition Sort the rows, every other 1. Sort the rows, 1. ascending (according to every other the arrow), and every 4 14 8 2 2 4 8 14 proc. 0 ascending other descending (according to the arrow Sort the columns, 2. 10 3 13 16 16 13 10 3 proc. 1 pilen), every ascending other Sort the rows 3. descending 7 15 1 5 1 5 7 15 proc. 2 Sort the columns 4. Local sorting. O (log n ). Finished 12 6 11 9 12 11 9 6 proc. 3 11 12

Shearsort Shearsort n numbers processors n ( ( ) ) + O log n n log n T ( n ) Efficiency: n n log n + T ( n ) 1 2 12 16 proc. 0 2 16 1 12 proc. 0 Transpose 2. 4 5 11 13 proc. 1 4 13 5 11 proc. 1 Sort the 3. columns 7 8 9 10 proc. 2 8 10 7 9 proc. 2 3 6 14 15 proc. 3 14 3 15 6 proc. 3 13 14 Bitonic sort Bitonic sort A bitonic sequence is a sequence that, if you shift it, results in an increasing sequence This we can explore recursively by then followed by a decreasing: sorting the two resulting lists bitonically as well!!! 5 2 2 5 5 6 8 9 7 5 2 3, is after shift: 2 3 5 6 8 9 7 5 5 3 2 6 2 5 5 8 The interesting thing is that we can, after a 3 5 5 9 7 7 6 compare-exchange between a i and a i + n /2 for all 7 6 6 7 5 i , 0 ≤ i < n / 2 , get two bitonic sequences, where 8 8 8 2 all numbers in the first is less or equal to the 9 9 9 3 numbers in the second. 15 16 Bitonic sort Sorting on a HC 100 110 100 110 First we build bitonic sequences, then we split 010 010 000 000 them. Time: O (log 2 n ) n numbers 101 111 101 111 n processors O (log 2 n ) Efficiency: 001 001 011 011 1/log( n ) Binary Reflected Gray Code RGC S1 = 0, 1 Sk = 0[Sk-1], 1[Sk-1]R 00,01, 11, 10 000, 001, 011, 010, 110, 111, 101, 100 17 18

Shellsort Shellsort • d = dimension of the HC • Explores that a list is almost n·m numbers sorted after d compare- exchanges n processors • The list will be sorted in ring order O (( k +log n ) ·m log m ) 1.Local quicksort on each sublist Efficiency: log n /( k +log n ) 2. d compare-exchange and merge in the direction pointed to by the d:th bit 3.Mopping up 19 20 Parallel Quicksort 1 on a HC QuickSort Repeat (1, 2, 3) d times • Divide and conquer algorithm 1. Find splitting key, k • Sorted in ring order or binary order 2. Send all elements > k to one subcube • Results in a tree structure (cmp. Mergesort) 3. Send all elements < k to the other subcube • Sequentially: 4. Sort sequentially on the node 1) Find a splitting key Disadvantages: 2) Place all elements < key to the left, all larger – badly chosen k results in quadratic time in to the right the sorting 3) Split the list – badly chosen k results in load imbalance 4) Goto 1 21 22 Parallel Quicksort 2 Quicksort 3 Sample Sort 1) Let all nodes sample l elements randomly 2) Sort the l 2 d elements (shellsort) 1) Make a global guess on the median 3) Choose 2 d -1 splitting keys 2) Split local sublist according to the guess 4) Broadcast all keys 3) Decide wrt. list length where the median is 5) Perform d splits 6) Sort each sublist (one of the two global lists are longer!) • The right number of elements in the sampling is important (depends on the length of the list) Repeat 1,2,3 until median is found, then apply • Large l results in better load balance with more Parallel Quicksort 1 overhead due to the sorting in step 2 23 24

Assignment 2 Sorting parallel quicksort with MPI • Implement a special variant of parallel quicksort with MPI (similar to • Why is sorting on parallel systems so Quicksort I): difficult? 1 Divide the data into p equal parts, one per processor 2 Sort the data locally for each processor • Idea for an answer: the number of 3 Perform global sort 3.1 Select pivot element within each processor set operations you make on the data set are too 3.2 Locally in each processor, divide the data into two sets according to the pivot (smaller or larger) small to be easily parallelized without 3.3 Split the processors into two groups and exchange data pairwise between them so that all processors in one group get data less getting too large overhead – the relation than the pivot and the others get data larger than the pivot. between number of elements and number of 3.4 Merge the two lists in each processor in one sorted list 4 Repeat 3.1 - 3.4 recursively for each half. operations is not good! The algorithm converges in log2 p steps. For steps 2 and 3.4 you should use a serial qsort routine. There you may 25 26 choose the pivot as you wish. Do not time step 1!!! Assignment 2 Kontrollfrågor parallel quicksort with MPI • Sortera följande lista genom att använda parallell shell sort med mopp-up: 4, 5, 2, 1, 7, 9, 9, 3, 3, 5, 6, For the numerical tests implement the following 4, 1, 2, 8, 0. Antag att du har en hyperkub av pivot strategies: dimension 3 och att en initala datadistributionen är som följer: 1. Select the median in one processor in each group node: 0 1 2 3 4 5 6 7 of processors data: 4,5, 2,1, 7,9, 9,3, 3,5, 6,4, 1,2, 8,0 2. Select the median of all medians in each processor • Sortera listan ovan mha shearsort på fyra group processorer 3. Select the mean value of all medians in each processor group • Sortera följande lista genom att använda bitonic sort • You are free to test other pivot selecting 3, 5, 6, 4, 1, 2, 8, 0 techniques as well 27 28 Kontrollfrågor • Vad kostar en compare-exchange mellan två processorer, om varje processor håller m element? • Vad blir komplexiteten på merge sort av m · p tal på p processorer? • Vilken är den minsta möjliga komplexiteten för jämförelsebaserad sortering av n element givet 1 processor? Varför? Givet godtyckligt antal processorer? Varför? • Hur lång tid tar transponering av p tal på en hyperkub av dimension log p ? På en mesh med dimensionen √ p × √ p ? 29

Recommend

More recommend