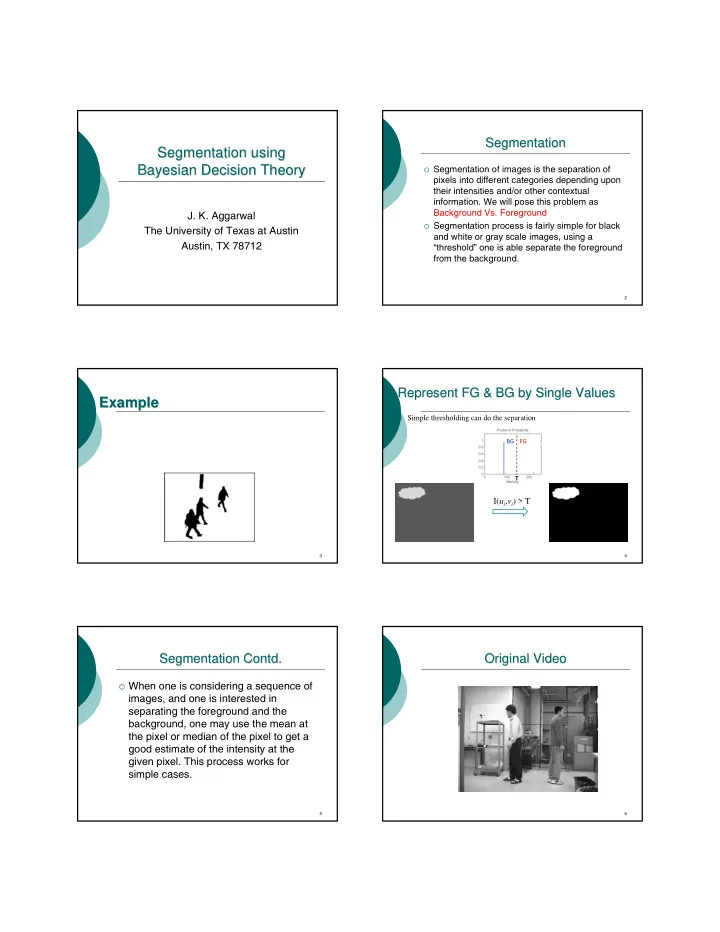

Segmentation using Bayesian Decision Theory

J. K. Aggarwal, The University of Texas at Austin, Austin, TX 78712

Segmentation

- Segmentation of images is the separation of pixels into different categories depending upon their intensities and/or other contextual information. We will pose this problem as background vs. foreground.
- Segmentation is fairly simple for black-and-white or grayscale images: using a "threshold", one is able to separate the foreground from the background.

Represent FG & BG by Single Values

- Simple thresholding can do the separation.
- [Figure: intensity axis with BG and FG values and a threshold $T$; each pixel is tested with $I(u_i, v_i) > T$.]

Example

- [Figure: Example]

Segmentation Contd.

- When one is considering a sequence of images and is interested in separating the foreground and the background, one may use the mean or the median of the values observed at a pixel to get a good estimate of the intensity at that pixel. This process works for simple cases.

Original Video

- [Figure: Original Video]
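The thresholding test and the per-pixel mean/median estimate described above can be sketched in a few lines. This is a minimal illustration and not the method used to produce the slides' figures: the function names, the tiny 2x3 frames, and the choice to label pixels above $T$ as foreground (which assumes the foreground is brighter than the background) are all assumptions made for the example.

```python
from statistics import mean, median

def threshold_segment(image, T):
    """Label a pixel 1 where I(u, v) > T, else 0 (assumes a bright foreground)."""
    return [[1 if pixel > T else 0 for pixel in row] for row in image]

def temporal_background(frames, reducer=median):
    """Apply `reducer` (mean or median) to each pixel's values across all frames."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[reducer([f[r][c] for f in frames]) for c in range(cols)]
            for r in range(rows)]

# Hypothetical sequence: static background near 10, a bright object (200)
# moving across the top row.
frames = [
    [[10, 11, 10], [12, 10, 11]],
    [[200, 11, 10], [12, 10, 11]],
    [[10, 200, 10], [12, 10, 11]],
    [[10, 11, 200], [12, 10, 11]],
    [[10, 11, 10], [12, 10, 11]],
]

bg_median = temporal_background(frames, median)  # ignores the passing object
bg_mean = temporal_background(frames, mean)      # pulled upward by the object
mask = threshold_segment(frames[1], T=100)       # foreground mask for frame 1
```

In this toy sequence the median recovers the static background exactly, while the mean is biased wherever the object passed, which is one reason the median is often preferred when the foreground occupies a pixel only briefly.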

Mean & Median Images

- [Figure: two panels, Mean and Median]

Segmentation cont.

- However, for more robust segmentation, one may assume that the intensities of the background and of the foreground are each described by a probability density function.
- One may use Bayesian Decision Theory for separating the foreground and background.

Prior probability

- In this B vs. F example, let $\omega$ denote the state of nature
  - $\omega = \omega_1$: Background
  - $\omega = \omega_2$: Foreground
- Prior (a priori) probabilities $P(\omega_1)$, $P(\omega_2)$
  - Reflect knowledge of what the next pixel might be before the pixel appears
  - $P(\omega_1) + P(\omega_2) = 1$, assuming 2 classes

Decision Using Only Priors

- Decide on the type of the "pixel" without being allowed to know the intensity
- Decision rule
  - Decide $\omega_1$ if $P(\omega_1) > P(\omega_2)$
  - Decide $\omega_2$ if $P(\omega_1) < P(\omega_2)$
- This rule decides on the same class for all pixels!
- But under these conditions, no other classifier can perform better.

Density Functions

- [Figure: class-conditional densities $p(x \mid \omega_1)$ and $p(x \mid \omega_2)$ plotted against $x$]
- Probability density functions, where $x$ denotes the intensity, and $l$ and $m$ indicate pixel position. In the most general case one can assume that each pixel has a different probability density.

A Practical Decision Scenario

- Classify based on some feature, say intensity, of the pixel samples $x$
- We will capture the variability of this feature using a continuous class-conditional probability distribution $p(x \mid \omega)$
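The prior-only decision rule can be illustrated with a short sketch. The label list, the function names, and the idea of estimating the priors as class frequencies are assumptions made for this example; the slides do not say how the priors are obtained. Ties are sent to the foreground here only because the rule above leaves the case $P(\omega_1) = P(\omega_2)$ unspecified.

```python
def estimate_priors(labels):
    """Estimate (P(w1), P(w2)) as class frequencies; 0 = background, 1 = foreground."""
    n = len(labels)
    p_fg = sum(labels) / n
    return 1.0 - p_fg, p_fg

def decide_from_priors(p_bg, p_fg):
    """Decide w1 (background) if P(w1) > P(w2), otherwise w2 (foreground)."""
    return "background" if p_bg > p_fg else "foreground"

def prior_only_error(p_bg, p_fg):
    """Probability of error of the prior-only rule: the smaller of the two priors."""
    return min(p_bg, p_fg)

# Hypothetical labelled pixels: 7 background, 3 foreground.
labels = [0, 0, 0, 1, 0, 0, 1, 0, 0, 1]

p_bg, p_fg = estimate_priors(labels)
decision = decide_from_priors(p_bg, p_fg)   # the same answer for every pixel
error_rate = prior_only_error(p_bg, p_fg)
```

Every pixel receives the same label, and the rule is wrong exactly as often as the less probable class occurs.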

Bayes Rule

- Bayes Rule states:

  $$P(\omega_j \mid x) = \frac{p(x \mid \omega_j)\, P(\omega_j)}{p(x)}$$

- $p(x \mid \omega_j)$ is the likelihood of $x$ being in class $\omega_j$
- $P(\omega_j)$ is the prior probability of class $\omega_j$
- $p(x) = \sum_{j=1}^{2} p(x \mid \omega_j)\, P(\omega_j)$ ensures that $P(\omega_j \mid x)$ is a valid posterior probability function that sums to one.

Bayes Decision Rule

- Bayes decision rule: select $\omega_1$ if $P(\omega_1 \mid x) > P(\omega_2 \mid x)$, i.e. if $p(x \mid \omega_1)\, P(\omega_1) > p(x \mid \omega_2)\, P(\omega_2)$
- If $p(x \mid \omega_1) = p(x \mid \omega_2)$, the decision is based entirely on priors
- If $P(\omega_1) = P(\omega_2)$, the decision is based entirely on likelihoods
- Bayes rule combines both to achieve minimum probability of error

Levels of Difficulty

- One knows the probability density functions and a priori probabilities.
- One estimates these probabilities from samples. One may assume normal distributions or more general forms.
- If one has no way of estimating these probabilities, one poses it as an optimization problem or a clustering problem.

About Bayes Rule

- Bayes Rule is derived from the joint distribution

  $$p(\omega_j, x) = P(\omega_j \mid x)\, p(x) = p(x \mid \omega_j)\, P(\omega_j)$$

  $$\therefore\; P(\omega_j \mid x) = \frac{p(x \mid \omega_j)\, P(\omega_j)}{p(x)}$$

- In words, Bayes rule says

  $$\text{posterior} = \frac{\text{likelihood} \times \text{prior}}{\text{evidence}}$$

Bayes Rule (cont.)

- Note that the product of likelihood and prior probabilities governs the shape of the posterior
- [Figure: example with $P(\omega_1) = 2/3$ and $P(\omega_2) = 1/3$]

Bayes Rule (cont.)

- Likelihood: $p(x \mid \omega_j)$ simply denotes that, all other things being equal, the category $\omega_j$ for which $p(x \mid \omega_j)$ is large is more "likely" to be the category
- Evidence: $p(x)$ is simply a scale factor to ensure that $P(\omega_j \mid x)$ is a valid probability function.
- Bayes rule converts the prior and the likelihood to a posterior probability, which can now be used to make decisions
- Decision rule: decide $\omega_1$ if $P(\omega_1 \mid x) > P(\omega_2 \mid x)$, else decide $\omega_2$
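As a worked illustration of Bayes rule and the resulting decision rule, the sketch below computes posteriors for a single pixel intensity. The priors $2/3$ and $1/3$ are the ones shown on the slide; the normal class-conditional densities and their parameters (means 50 and 150, standard deviations 15 and 30) are hypothetical values chosen only for the example, as are the function names.

```python
import math

def normal_pdf(x, mu, sigma):
    """Univariate normal density N(mu, sigma^2) evaluated at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (math.sqrt(2.0 * math.pi) * sigma)

def posteriors(x, priors, params):
    """Return [P(w1|x), P(w2|x)] = likelihood * prior / evidence."""
    joint = [normal_pdf(x, mu, sigma) * p
             for (mu, sigma), p in zip(params, priors)]
    evidence = sum(joint)  # p(x): the scale factor that makes posteriors sum to one
    return [j / evidence for j in joint]

def bayes_decide(x, priors, params):
    """Return 0 (w1, background) if P(w1|x) > P(w2|x), else 1 (w2, foreground)."""
    post = posteriors(x, priors, params)
    return 0 if post[0] > post[1] else 1

priors = [2.0 / 3.0, 1.0 / 3.0]        # P(w1), P(w2) from the slide
params = [(50.0, 15.0), (150.0, 30.0)]  # hypothetical (mean, std) per class

post_mid = posteriors(100.0, priors, params)
label_dark = bayes_decide(50.0, priors, params)
label_bright = bayes_decide(150.0, priors, params)
```

Because the evidence is the same for both classes, comparing the two products of likelihood and prior gives the same decision as comparing the posteriors; the division only matters when the posterior values themselves are needed.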

Error Analysis

- Conditional probability of error:

  $$P(\text{error} \mid x) = \begin{cases} P(\omega_1 \mid x) & \text{if we decide } \omega_2 \\ P(\omega_2 \mid x) & \text{if we decide } \omega_1 \end{cases}$$

- Average probability of error is

  $$P(\text{error}) = \int_{-\infty}^{\infty} P(\text{error}, x)\, dx = \int_{-\infty}^{\infty} P(\text{error} \mid x)\, p(x)\, dx$$

- If $P(\text{error} \mid x)$ is as small as possible for every $x$, the above integral will be minimized
- Hence, using Bayes rule,

  $$P(\text{error} \mid x) = \min\left[ P(\omega_1 \mid x),\, P(\omega_2 \mid x) \right]$$

Variations of the Bayes Rule

- Bayes decision rule: select $\omega_1$ if $P(\omega_1 \mid x) > P(\omega_2 \mid x)$, i.e. if $p(x \mid \omega_1)\, P(\omega_1) > p(x \mid \omega_2)\, P(\omega_2)$
- If $p(x \mid \omega_1) = p(x \mid \omega_2)$, the decision is based entirely on priors
- If $P(\omega_1) = P(\omega_2)$, the decision is based entirely on likelihoods
- Bayes rule combines both to achieve minimum probability of error

Univariate Density

- A univariate normal density $p(x) \sim N(\mu, \sigma^2)$:

  $$p(x) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left[ -\frac{1}{2} \left( \frac{x - \mu}{\sigma} \right)^2 \right]$$

Univariate Density (cont.)

- Expected value

  $$\mu \equiv E[x] = \int_{-\infty}^{\infty} x\, p(x)\, dx$$

  - Points tend to cluster around the mean
- Variance

  $$\sigma^2 \equiv E\left[ (x - \mu)^2 \right] = \int_{-\infty}^{\infty} (x - \mu)^2\, p(x)\, dx$$

  - Measure of spread of values
- Entropy

  $$H(p(x)) = -\int_{-\infty}^{\infty} p(x) \ln p(x)\, dx$$

  - Measure of uncertainty
  - The normal has the maximum entropy of all distributions with a given mean and variance

Multivariate Density

- $d$-dimensional normal density $p(\mathbf{x}) \sim N(\boldsymbol{\mu}, \Sigma)$:

  $$p(\mathbf{x}) = \frac{1}{(2\pi)^{d/2}\, |\Sigma|^{1/2}} \exp\left[ -\frac{1}{2} (\mathbf{x} - \boldsymbol{\mu})^t\, \Sigma^{-1} (\mathbf{x} - \boldsymbol{\mu}) \right]$$

- $\mathbf{x}$ is a $d$-component column vector
- $\boldsymbol{\mu}$ is the $d$-component mean vector
- $\Sigma$ is the $d$-by-$d$ covariance matrix, and $|\Sigma|$ and $\Sigma^{-1}$ are its determinant and inverse, respectively

Parameters of the multivariate normal

- Mean

  $$\boldsymbol{\mu} \equiv E[\mathbf{x}] = \int \mathbf{x}\, p(\mathbf{x})\, d\mathbf{x}$$

  - Computed component-wise, i.e. $\mu_i = E[x_i]$
- Covariance matrix

  $$\Sigma \equiv E\left[ (\mathbf{x} - \boldsymbol{\mu})(\mathbf{x} - \boldsymbol{\mu})^t \right] = \int (\mathbf{x} - \boldsymbol{\mu})(\mathbf{x} - \boldsymbol{\mu})^t\, p(\mathbf{x})\, d\mathbf{x}$$

  - $\sigma_{ij} = E\left[ (x_i - \mu_i)(x_j - \mu_j) \right]$
  - Always symmetric and positive semidefinite
  - $\sigma_{ii}$ is the variance of $x_i$
  - $\sigma_{ij} = 0$ implies that $x_i$ and $x_j$ are statistically independent (this holds for the multivariate normal; in general, zero covariance only implies that they are uncorrelated)
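The average-error integral from the error analysis can be checked numerically when both class-conditional densities are univariate normal. The sketch below is only an illustration under assumed values: equal priors, unit variances, and means 0 and 2 are hypothetical, as are the function names and the midpoint-rule integration. It uses the fact that $\min[P(\omega_1 \mid x), P(\omega_2 \mid x)]\, p(x) = \min[p(x \mid \omega_1) P(\omega_1),\, p(x \mid \omega_2) P(\omega_2)]$.

```python
import math

def normal_pdf(x, mu, sigma):
    """Univariate normal density N(mu, sigma^2) evaluated at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (math.sqrt(2.0 * math.pi) * sigma)

def bayes_error(priors, params, lo, hi, steps=20000):
    """Midpoint-rule estimate of the integral of min_j p(x|w_j) P(w_j) over [lo, hi]."""
    dx = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        x = lo + (k + 0.5) * dx
        joint = [normal_pdf(x, mu, sigma) * p
                 for (mu, sigma), p in zip(params, priors)]
        total += min(joint) * dx
    return total

# Hypothetical two-class problem: equal priors, unit variance, means 0 and 2.
priors = [0.5, 0.5]
params = [(0.0, 1.0), (2.0, 1.0)]

err = bayes_error(priors, params, lo=-10.0, hi=12.0)

# For this symmetric case the decision boundary is x = 1, so the error
# equals the standard normal CDF evaluated at -1.
closed_form = 0.5 * (1.0 + math.erf(-1.0 / math.sqrt(2.0)))
```

With equal priors, a prior-only rule would be wrong half the time; using the intensity reduces the error to the overlap of the two weighted densities.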
