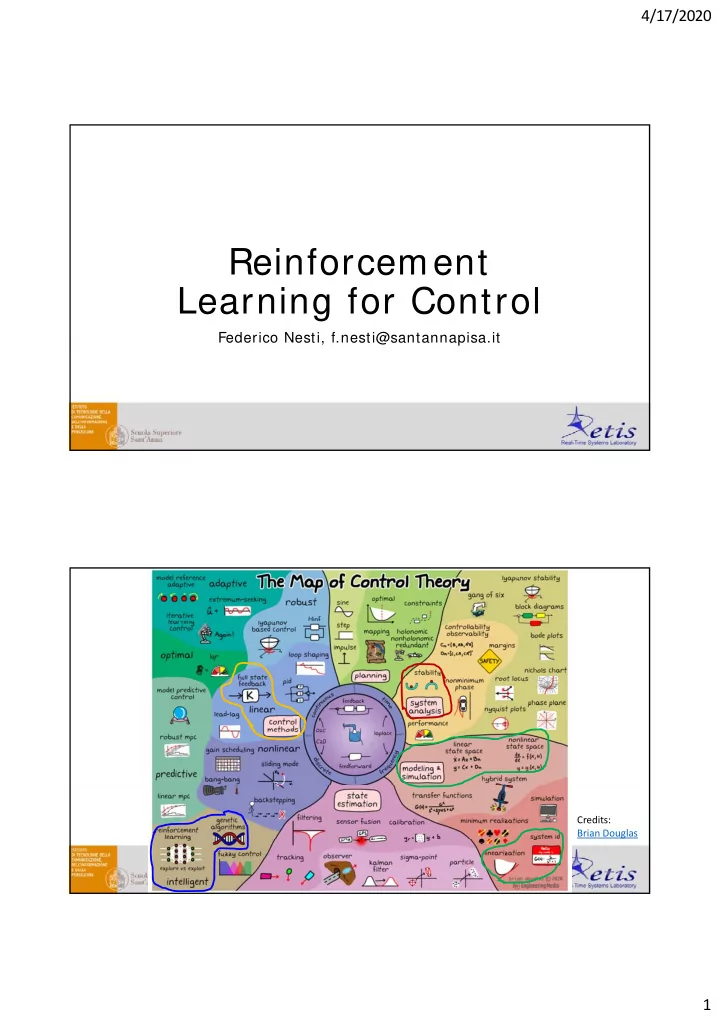

4/17/2020
Reinforcement Learning for Control
Federico Nesti, f.nesti@santannapisa.it
Credits: Brian Douglas

Layout
• Reinforcement Learning Recap
• Applications:
  o Q-Learning
  o Deep Q-Learning
  o Policy Gradients
  o Actor Critic (DDPG)
• Major Difficulties in Deep RL

Reinforcement Learning
[Figure: interaction loop between Agent and Environment]

Reinforcement Learning
[Figure: gridworld example with labels Start State and Terminal State; cells marked START, HOLE, and GOAL]


The two approaches of RL
Policy search («primal» formulation): the search for the optimal policy is done directly in the policy space.

Function-based approach

TD-Learning
Q-Learning
[Equations: update rules with the Target and the TD error labeled]
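The equations on these slides did not survive extraction; only the labels "Target" and "TD error" remain. As a reminder, the standard textbook forms of the TD(0) and Q-learning updates are sketched below. The symbols (learning rate \alpha, discount factor \gamma, time index t) are assumptions taken from the usual notation, not from the slides.

```latex
\begin{align}
% TD(0) update of the state-value function
V(s_t) &\leftarrow V(s_t) + \alpha
  \underbrace{\Big[ \underbrace{r_{t+1} + \gamma V(s_{t+1})}_{\text{target}} - V(s_t) \Big]}_{\text{TD error}} \\
% Q-learning update of the action-value function
Q(s_t, a_t) &\leftarrow Q(s_t, a_t) + \alpha
  \underbrace{\Big[ \underbrace{r_{t+1} + \gamma \max_{a'} Q(s_{t+1}, a')}_{\text{target}} - Q(s_t, a_t) \Big]}_{\text{TD error}}
\end{align}
```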

Q-Learning
Q-Learning Notebook (not slippery)
[Figure: gridworld with states numbered 0 to 15, with START, HOLE, and GOAL cells]

Q-Learning Notebook (SLIPPERY)
[Figure: gridworld with states numbered 0 to 15, with START, HOLE, and GOAL cells]

Q-Learning Notebook
Remember: the reward is 1 only when the goal is reached; otherwise it is 0.
• In the non-slippery case, the optimal solution is found: each state has a corresponding optimal action.
• In the slippery case, where NO OPTIMAL SOLUTION CAN BE FOUND, the learned actions avoid any move that could lead to a hole.
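The non-slippery case can be reproduced with a short tabular Q-learning sketch. This is not the notebook's code: it is a minimal NumPy version that assumes a 4×4 grid with holes in states 5, 7, 11 and 12 and the goal in state 15 (the layout of the standard FrozenLake map, which the figure appears to match), deterministic moves, and hypothetical hyperparameters.

```python
import numpy as np

N = 4                                       # grid side (assumed 4x4)
HOLES = {5, 7, 11, 12}                      # assumed hole positions
GOAL = 15
MOVES = [(0, -1), (1, 0), (0, 1), (-1, 0)]  # left, down, right, up as (d_row, d_col)


def step(state, action):
    """Deterministic (non-slippery) transition; reward is 1 only at the goal."""
    row, col = divmod(state, N)
    d_row, d_col = MOVES[action]
    row = min(max(row + d_row, 0), N - 1)   # bumping into a wall keeps the agent in place
    col = min(max(col + d_col, 0), N - 1)
    nxt = row * N + col
    reward = 1.0 if nxt == GOAL else 0.0
    done = nxt == GOAL or nxt in HOLES
    return nxt, reward, done


def train_q_table(episodes=3000, alpha=0.1, gamma=0.95, epsilon=0.3,
                  max_steps=100, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = np.random.default_rng(seed)
    q = np.zeros((N * N, len(MOVES)))
    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            if rng.random() < epsilon:
                a = int(rng.integers(len(MOVES)))
            else:
                # greedy action, ties broken at random
                best = np.flatnonzero(q[s] == q[s].max())
                a = int(rng.choice(best))
            s2, r, done = step(s, a)
            target = r + (0.0 if done else gamma * q[s2].max())
            q[s, a] += alpha * (target - q[s, a])   # alpha * TD error
            s = s2
            if done:
                break
    return q


def greedy_rollout(q, max_steps=100):
    """Follow the greedy policy from the start state; return the path and final reward."""
    s, path = 0, [0]
    for _ in range(max_steps):
        s, r, done = step(s, int(np.argmax(q[s])))
        path.append(s)
        if done:
            return path, r
    return path, 0.0
```

Calling `greedy_rollout(train_q_table())` returns the sequence of visited states and the final reward. In the slippery case, `step` would additionally have to randomize the executed move.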

Deep Q-Learning
Extension of Q-learning to continuous state spaces.
[Equation: update with the Target and the TD error labeled]

Problems with Deep Q-Learning
Solution: use of TARGET NETWORKS.
Solution: use of REPLAY BUFFER.
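The equation on this slide was lost in extraction. A common way to write the Deep Q-Learning objective, with the target computed by a separate target network and the samples drawn from a replay buffer, is sketched below. The notation is an assumption: \theta are the online network parameters, \theta^- the target network parameters, and \mathcal{D} the replay buffer.

```latex
\begin{align}
y &= r + \gamma \max_{a'} Q_{\theta^-}(s', a') && \text{(target)} \\
\delta &= y - Q_{\theta}(s, a) && \text{(TD error)} \\
L(\theta) &= \mathbb{E}_{(s, a, r, s') \sim \mathcal{D}} \big[ \delta^2 \big]
\end{align}
```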

Deep Q-Learning
[Figure: training scheme with a Network, a Target Network, and a Replay Buffer; labels: Random Sampling, Evict old data]

Deep Q-Learning Notebook
[Figure: Visual Gridworld with Agent, Hole, and GOAL]
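The two mechanisms named in the figure, a replay buffer that evicts old data and is sampled at random, and a target network that is periodically synchronized, can be sketched as follows. The class and function names are hypothetical, and network parameters are represented as a plain dictionary rather than a real model.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size buffer of transitions; the oldest data is evicted first."""

    def __init__(self, capacity):
        # a deque with maxlen silently drops the oldest item when full
        self._data = deque(maxlen=capacity)

    def add(self, state, action, reward, next_state, done):
        self._data.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Uniform random sampling, which breaks the time correlation of transitions."""
        batch = random.sample(list(self._data), batch_size)
        states, actions, rewards, next_states, dones = zip(*batch)
        return states, actions, rewards, next_states, dones

    def __len__(self):
        return len(self._data)


def sync_target(online_params, target_params):
    """Hard update: copy the online parameters into the target parameters."""
    for name, value in online_params.items():
        target_params[name] = list(value)  # copy, so later online updates do not leak


# Usage: a buffer of capacity 3 keeps only the 3 most recent transitions.
buffer = ReplayBuffer(capacity=3)
for i in range(5):
    buffer.add(state=i, action=0, reward=0.0, next_state=i + 1, done=False)
states, actions, rewards, next_states, dones = buffer.sample(batch_size=2)
```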

Deep Q-Learning Notebook
A few tips:
• Keep the exploration rate high and the learning rate low: many episodes are needed to reach satisfactory solutions.
• Always save good solutions!
• When the agent is not learning, try adding noise!
• The reward does not always increase; it shows a certain variance.

Policy Search

Policy Gradients
[Derivation; the equations were lost, only the step labels remain]
• Expand expectation
• Bring gradient under integral
• Return to expectation form
• Expression for grad-log-prob

Policy Search
[Equation; labels: "Full episode Return", "How to compute this???"]
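The step labels above match the usual derivation of the policy gradient, sketched here in its standard form. The notation is an assumption: \tau is a trajectory, R(\tau) the full episode return, P(\tau \mid \theta) the trajectory probability under the policy \pi_\theta. The log-derivative step is not among the surviving labels and is added for completeness.

```latex
\begin{align}
\nabla_\theta J(\theta)
  &= \nabla_\theta \, \mathbb{E}_{\tau \sim \pi_\theta} \big[ R(\tau) \big] \\
  &= \nabla_\theta \int P(\tau \mid \theta) \, R(\tau) \, d\tau
     && \text{expand expectation} \\
  &= \int \nabla_\theta P(\tau \mid \theta) \, R(\tau) \, d\tau
     && \text{bring gradient under integral} \\
  &= \int P(\tau \mid \theta) \, \nabla_\theta \log P(\tau \mid \theta) \, R(\tau) \, d\tau
     && \text{log-derivative trick} \\
  &= \mathbb{E}_{\tau \sim \pi_\theta} \big[ \nabla_\theta \log P(\tau \mid \theta) \, R(\tau) \big]
     && \text{return to expectation form} \\
  &= \mathbb{E}_{\tau \sim \pi_\theta} \Big[ \sum_{t=0}^{T} \nabla_\theta \log \pi_\theta(a_t \mid s_t) \, R(\tau) \Big]
     && \text{expression for grad-log-prob}
\end{align}
```

The last step holds because the environment dynamics and the initial state distribution do not depend on \theta, so only the policy terms survive in \nabla_\theta \log P(\tau \mid \theta).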

Pseudo-loss
[Equation: pseudo-loss; annotation: trick to change to gradient descent]

Intuition
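One common way to write such a pseudo-loss is sketched below. It assumes a batch of N sampled episodes \tau_i and the symbols of the usual policy-gradient notation; it is not necessarily the exact expression from the slide.

```latex
\begin{equation}
L(\theta) = - \frac{1}{N} \sum_{i=1}^{N} \sum_{t=0}^{T} \log \pi_\theta\big(a_t^{(i)} \mid s_t^{(i)}\big) \, R(\tau_i)
\end{equation}
```

With the sampled actions and returns treated as constants, the gradient of L is the negated sample estimate of the policy gradient. Minimizing L by gradient descent is therefore the same as taking a gradient ascent step on the expected return, which is the role of the minus sign.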

Pseudo-loss trick
Warnings:
• This pseudo-loss is useful because we use TensorFlow's automatic differentiation to compute the gradient.
• This is not an actual loss function. It does not measure performance and has no meaning. There is no guarantee that this works.
• The loss function is defined on a dataset that depends on the parameters (in gradient descent, it should not).
• Only care about the average reward, which is always a good performance indicator.

Policy Gradients notebook
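The warnings can be illustrated on a toy problem. The sketch below is not the notebook's code: it replaces TensorFlow's automatic differentiation with a hand-written gradient in NumPy, and it assumes a hypothetical two-armed bandit with a softmax policy. It tracks the average reward, since the value of the pseudo-loss itself is not a performance measure.

```python
import numpy as np


def softmax(logits):
    z = np.exp(logits - logits.max())
    return z / z.sum()


def pseudo_loss_and_grad(logits, actions, returns):
    """Pseudo-loss -mean(log pi(a) * R) and its gradient w.r.t. the logits.

    Actions and returns are treated as constants, as autodiff would do.
    """
    probs = softmax(logits)
    loss = -np.mean(np.log(probs[actions]) * returns)
    # for a softmax policy: d log pi(a) / d logits = one_hot(a) - probs
    one_hot = np.eye(len(logits))[actions]
    grad = -np.mean((one_hot - probs) * returns[:, None], axis=0)
    return loss, grad


def train_bandit(steps=300, batch=32, lr=0.2, seed=0):
    rng = np.random.default_rng(seed)
    arm_means = np.array([0.2, 0.8])   # hypothetical expected reward of each arm
    logits = np.zeros(2)
    avg_rewards = []
    for _ in range(steps):
        # the batch is sampled from the current policy, so the "dataset"
        # depends on the parameters being optimized
        actions = rng.choice(2, size=batch, p=softmax(logits))
        returns = rng.binomial(1, arm_means[actions]).astype(float)
        _, grad = pseudo_loss_and_grad(logits, actions, returns)
        logits -= lr * grad            # gradient descent on the pseudo-loss
        avg_rewards.append(returns.mean())
    return logits, avg_rewards


logits, avg_rewards = train_bandit()
```

After training, the logit of the better arm should dominate and the average reward over the last iterations should be higher than over the first ones.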

Beyond discrete action spaces

Deep Deterministic Policy Gradients

Deep Deterministic Policy Gradients
[Equation: policy gradient obtained via the Chain Rule]
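The equation referred to by the "(Chain Rule)" label was lost. The deterministic policy gradient is usually written as below, where the chain rule is applied through the critic. The notation is an assumption: \mu_\theta is the deterministic actor, Q_\phi the critic, and \mathcal{D} the replay buffer.

```latex
\begin{equation}
\nabla_\theta J(\theta) \approx
  \mathbb{E}_{s \sim \mathcal{D}} \Big[
    \nabla_a Q_\phi(s, a) \big|_{a = \mu_\theta(s)} \,
    \nabla_\theta \mu_\theta(s)
  \Big]
\end{equation}
```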

DDPG notebook
A few tips:
• DDPG presents high reward variance. It is wise to keep track of all the best solutions and rank them in some clever way (e.g., prefer solutions with a correspondingly high average reward, or use some other performance measure, such as oscillations).
• Sometimes no satisfactory solution is found within the first N episodes. Don't worry: if you find a set of parameters with promising performance, save them and resume training from those parameters. This might require smaller learning rates.
• DDPG is very sensitive to hyperparameters, which must be tuned for each case. No standard set of hyperparameters will work out of the box.
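The first two tips can be supported by a small helper that keeps the best parameter snapshots ranked by average reward, so that training can later resume from any of them. This is a minimal sketch: the class name, its methods, and the use of average reward as the only ranking criterion are assumptions, and parameters are represented as plain Python objects.

```python
import copy
import heapq
import itertools


class BestSolutions:
    """Keep the k parameter snapshots with the highest average reward."""

    def __init__(self, k=3):
        self.k = k
        self._heap = []                # min-heap of (avg_reward, insertion_id, params)
        self._ids = itertools.count()  # unique tie-breaker, so params are never compared

    def offer(self, avg_reward, params):
        """Store a snapshot if it ranks among the k best; return True if stored."""
        entry = (avg_reward, next(self._ids), copy.deepcopy(params))
        if len(self._heap) < self.k:
            heapq.heappush(self._heap, entry)
            return True
        if avg_reward > self._heap[0][0]:
            heapq.heapreplace(self._heap, entry)  # drop the current worst snapshot
            return True
        return False

    def ranked(self):
        """Snapshots as (avg_reward, params), best first."""
        ordered = sorted(self._heap, key=lambda e: (e[0], e[1]), reverse=True)
        return [(reward, params) for reward, _, params in ordered]


# Usage with hypothetical parameter dictionaries
tracker = BestSolutions(k=2)
tracker.offer(1.0, {"w": 1})
tracker.offer(3.0, {"w": 3})
tracker.offer(2.0, {"w": 2})
stored = tracker.offer(0.5, {"w": 0})
best_reward, best_params = tracker.ranked()[0]
```

Training can then be resumed from `best_params`, possibly with a smaller learning rate.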

Algorithms recap
[Diagram: algorithm families arranged along a spectrum, with axis labels Off-Policy algorithms / On-Policy algorithms and Sample Efficiency / Computational Efficiency]
• Model-based shallow RL methods: PILCO
• Model-based Deep RL methods: PETS, Guided Policy Search
• Replay Buffer / Value Estimation methods: Q-Learning, DDPG, NAF, SAC
• Fully online methods / Actor-Critic: A3C
• PG methods: REINFORCE, TRPO
• Gradient-free methods: Evolutionary (CMA-ES)

Other useful references for RL
• OpenAI SpinningUp: OpenAI educational resources. It explains RL basics and the major Deep RL algorithms.
• Sutton-Barto: Reinforcement Learning, an Introduction. A great book on the basics, full of examples. A bit hard to read at first, but a nice handbook.
• Szepesvári: Algorithms for Reinforcement Learning. Handbook for the main RL algorithms.
• S. Levine: Deep RL course @ Berkeley

Practical problems in RL
• Reward Hacking: since the reward is a scalar value, it is crucial to design it in such a way that there is no «clever way» to maximize it.
• Optimal solution exists only for tabular methods: as soon as approximation or continuous spaces are used, no optimal solution is guaranteed.
• RL suffers from the curse of dimensionality: the cost of exploring the state space grows exponentially with the number of state-space dimensions.
• Real-world systems often break if operated with random actions.
• Deep RL inherits all the advantages and challenges of Deep Learning (approximation and generalization, overfitting, high unpredictability, debugging difficulties).
