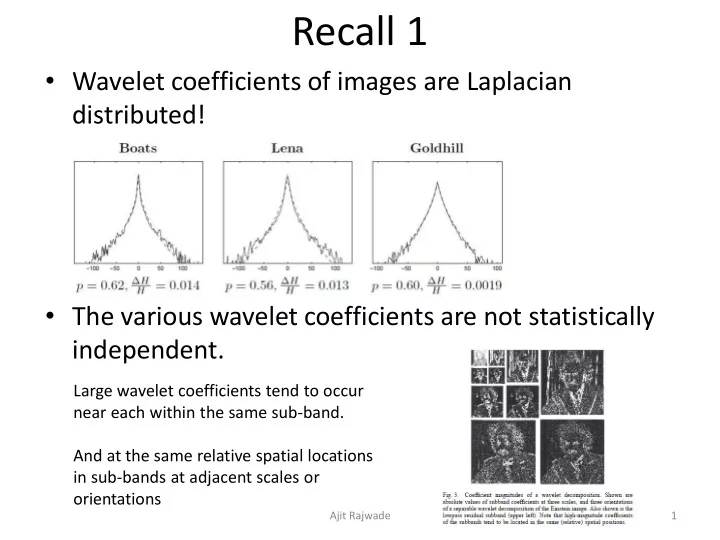

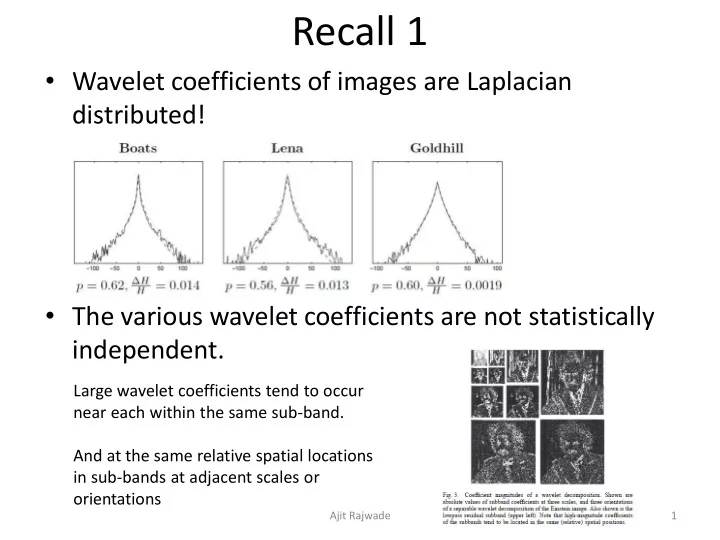

Recall 1 • Wavelet coefficients of images are Laplacian distributed! • The various wavelet coefficients are not statistically independent. Large wavelet coefficients tend to occur near each within the same sub-band. And at the same relative spatial locations in sub-bands at adjacent scales or orientations Ajit Rajwade 1

Image source: Buccigrossi et al, Image Compression via Joint Statistical Characterization in the Wavelet Domain, IEEE Transactions on Image Processing, 1997 Wavelet coefficient dependency Ajit Rajwade 2

Wavelet coefficient dependency • The conditional density of the child wavelet coefficient ( c ) given the parent ( p ) (figure 6A,B two slides before) reveals: 1. E( c | p ) = 0 for all values of p . 2. They are not independent statistically – because the variance of c depends on the value of p . 3. The right side of the conditional density of the log of the squared coefficient is unimodal and concentrated on a unit slope line. 4. Left side shows c being constant (not dependent on p ). • This pattern is also observed for siblings (adjacent spatial locations), cousins (same spatial location, adjacent orientations). • This pattern is robust across a wide range of images. Ajit Rajwade 3

Wavelet coefficient dependency • So how do we model this mathematically? • Here is one statistical model: Neighbors of coefficient c 2 2 2 c w k p k (some cousins & siblings, parent) k Can be obtained by least squares method Ajit Rajwade 4

2 2 2 c w k p k k Can be obtained by least squares method 2 2 2 c w p , i index for coefficien t (1 i n ) i k ik k 2 1 n 1 K c wP , c R , w R ( assuming K neighbors) K n P R 2 Bringing in , we have : P ˆ ˆ ˆ ˆ 2 1 ( K 1 ) ( K 1 ) n c ( w ) w P , w R , P R 1 ˆ ˆ ˆ ˆ T T 1 w c P ( P P ) Least squares estimate of w Ajit Rajwade 5

Wavelet coefficient dependency Ajit Rajwade 6

Application to denoising • We have already seen the formula: T 2 y x n U n ( U y ) ˆ T i ( U y ) ˆ i i 2 2 1 • The same formula is applicable here with the following modification: 2 2 2 2 2 w p w k p k k k ˆ T k k ( U y ) i i 2 2 2 w p k k k Ajit Rajwade 7

Application to denoising • But this is a chicken and egg problem – because we do not know the values of {w k } or α beforehand! • But we can estimate these values by minimizing: • The values of {p k } are obtained from a denoising algorithm that ignores wavelet coefficient dependency, e.g. using 2 ˆ T ( U y ) i i 2 2 and then used for estimating {w k } or α . Ajit Rajwade 8

Sample results (Left) Original and (Right) noisy image (Left) Marginal MAP with independent Gaussian prior and (Right) new model MMSE estimator using GGD prior on wavelet coefficients Ajit Rajwade 9

Matrix Completion CS 754 Lecture Notes Ajit Rajwade 10

Matrix Completion in Practice: Scenario 1 • Consider a survey of m people where each is asked q questions. • It may not be possible to ask each person all q questions. • Consider a matrix of size m by q (each row is the set of questions asked to any given person). • This matrix is only partially filled (many missing entries). • Is it possible to infer the full matrix given just the recorded entries?

Matrix Completion in Practice: Scenario 2 • Some online shopping sites such as Amazon, Flipkart, Ebay, Netflix etc. have recommender systems. • These websites collect product ratings from users (especially Netflix). • Based on user ratings, these websites try to recommend other products/movies to the user that he/she will like with a high probability. • Consider a matrix with the number of rows equal to the number of users, and number of columns equal to the number of movies/products. • This matrix will be HIGHLY incomplete (no user has the patience to rate too many movies!!) – maybe only 5% of the entries will be filled up. • Can the recommender system infer user preferences from just the defined entries?

Matrix Completion in Practice: Scenario 2 • Read about the Netflix Prize to design a better recommender system: http://en.wikipedia.org/wiki/Netflix_Prize

Matrix Completion in Practice: Scenario 3 • Consider an image or a video with several pixel values missing. • This is not uncommon in range imagery or remote sensing applications! • Consider a matrix whose each column is a (vectorized) patch of m pixels. Let the number of columns be K . • This m by K matrix will have many missing entries. • Is it possible to infer the complete matrix given just the defined pixel values? • If the answer were yes, note the implications for image compression!

Matrix Completion in Practice: Scenario 4 • Consider a long video sequence of F frames. • Suppose I mark out M salient (interesting points) { P i }, 1<= i <= m , in the first frame. • And track those points in all subsequent frames. • Consider a matrix M of size m x 2F where row j contains the X and Y coordinates of points on the motion trajectory of initial point P j (in each of the F frames). • Unfortunately, many salient points may not be trackable due to occlusion or errors from the tracking algorithms. • So M is highly incomplete. • Is it possible to infer the true matrix from only the available measurements?

A property of these matrices • Scenario 1: Many people will tend to give very similar or identical answers to many survey questions. • Scenario 2: Many people will have similar preferences for movies (only a few factors affect user choices). • Scenario 3: Non-local self-similarity! • This makes the matrices in all these scenarios (approximately) low in rank!

A property of these matrices • Scenario 4: The true matrix underlying M in question has been PROVED to be of low rank (in fact, rank 3 ) under orthographic projection ( ref: Tomasi and Kanade, “Shape and Motion from Image Streams Under Orthography: a Factorization Method”, IJCV 1992 ) and a few other more complex camera models (up to rank 9). • In case of orthographic projection, M can be expressed as a product of two matrices – a rotation matrix of size 2F x 3 , and a shape matrix of size 3 x P . Hence it has rank 3. • M is useful for many computer vision problems such as structure from motion, motion segmentation and multi-frame point correspondences.

Recommend

More recommend