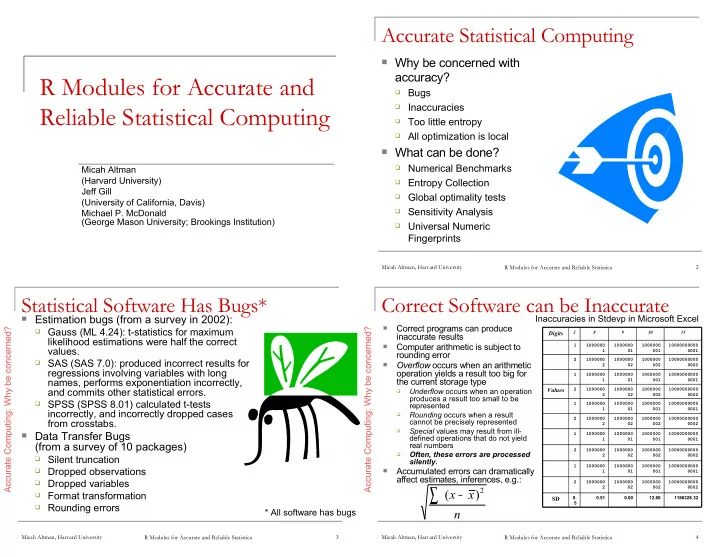

Accurate Statistical Computing Why be concerned with accuracy? R Modules for Accurate and Bugs Inaccuracies Reliable Statistical Computing Too little entropy All optimization is local What can be done? Numerical Benchmarks Micah Altman Entropy Collection (Harvard University) Jeff Gill Global optimality tests (University of California, Davis) Sensitivity Analysis Michael P. McDonald (George Mason University; Brookings Institution) Universal Numeric Fingerprints Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 2 Statistical Software Has Bugs* Correct Software can be Inaccurate Estimation bugs (from a survey in 2002): Inaccuracies in Stdevp in Microsoft Excel Correct programs can produce Accurate Computing: Why be concerned? Gauss (ML 4.24): t-statistics for maximum Accurate Computing: Why be concerned? Digits 2 8 9 10 15 inaccurate results likelihood estimations were half the correct Computer arithmetic is subject to 1 1000000 1000000 1000000 10000000000 values. 1 01 001 0001 rounding error SAS (SAS 7.0): produced incorrect results for 2 1000000 1000000 1000000 10000000000 Overflow occurs when an arithmetic 2 02 002 0002 regressions involving variables with long operation yields a result too big for 1 1000000 1000000 1000000 10000000000 names, performs exponentiation incorrectly, the current storage type 1 01 001 0001 and commits other statistical errors. Underflow occurs when an operation Values 2 1000000 1000000 1000000 10000000000 2 02 002 0002 produces a result too small to be SPSS (SPSS 8.01) calculated t-tests represented 1 1000000 1000000 1000000 10000000000 1 01 001 0001 incorrectly, and incorrectly dropped cases Rounding occurs when a result 2 1000000 1000000 1000000 10000000000 from crosstabs. cannot be precisely represented : 2 02 002 0002 Special values may result from ill- Data Transfer Bugs 1 1000000 1000000 1000000 10000000000 defined operations that do not yield 1 01 001 0001 (from a survey of 10 packages) real numbers 2 1000000 1000000 1000000 10000000000 Often, these errors are processed Silent truncation 2 02 002 0002 silently. 1 1000000 1000000 1000000 10000000000 Dropped observations Accumulated errors can dramatically 1 01 001 0001 affect estimates, inferences, e.g.: Dropped variables 2 1000000 1000000 1000000 10000000000 ∑ 2 02 002 0002 − 2 ( x x ) Format transformation SD 0. 0.51 0.00 12.80 1186328.32 Rounding errors 5 n * All software has bugs Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 3 Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 4

Easy Inaccuracy in R Random Numbers Aren’t Accurate Computing: Why be concerned? # Three formulas for the standard deviation of the population Pseudo Random number generators are assumed > sdp.formula1 <- function(x) { n = length(x); sqrt(n * sum(x^2) - sum(x)^2)/n } Deterministic > sdp.formula2 <- function(x) { sum(sqrt((x - sum(x)/length(x))^2))/length(x) } Meant to be seeded with true random values > sdp.formula3 <- function(x) { sqrt(var(x) * (length(x) - 1)/length(x)) } Generate sequences of fixed length Period puts (theoretical) limits on size of sample, before > dat = testMat(50) correlation may occur among sub sequences > print(rbind(sapply(dat, sdp.formula1), sapply(dat, sdp.formula2), sapply(dat, sdp.formula3)), digits = 3) 3 5 7 9 11 13 15 17 19 [1,] 0.5 0.5 0.48 NaN NaN 265271.1 4.43e+07 NaN 4.31e+11 A basic linear congruential [2,] 0.5 0.5 0.50 0.5 0.5 0.5 0.5 0 0 generator [3,] 0.5 0.5 0.50 0.5 0.5 0.5 0.5 0 0 ( ) = + X aX * b mod m + t 1 t Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 5 Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 6 What can go wrong with PRNGS? Easy Optimization Seed isn’t chosen randomly. Accurate Computing: Why be concerned? Applications of maximum likelihood, Too many draws. nonlinear least squares, etc implicitly Used for t-dimensional point for t large. assume: Draws do not follow a uniform distribution. There is a single global optimum Hidden structure to supposed randomness. We’ll find it. We need more entropy ! Local optima, if they exist, are substantively x y unimportant x y z Use me instead z Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 7 Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 8

Computational Threats to Inference* Statistical Benchmarks Accurate Computing: Why be concerned? Statistical benchmark: Estimation of confidence Convergence to Local Optima interval around optimum LL(B| M ,X) Accurate Computing: Benchmark [--] feed the computer a set (algorithmic limitations) (algorithmic limitations, limits of confidence of difficult problems for intervals) Early Convergence which you know the (Numerical Stability) right answer If the answers given back are accurate, you can have more Numerically Induced Discontinuities confidence (Numerical Stability, “Bugs”, Approximation Error) B * All optimization is local Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 9 Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 10 Statistical Benchmarks from Accuracy Tests of Global Optimality Tables of basic distributions Supplements NIST StrD, Diehard, TestUO1 Count Basins of Attraction for random starting values. Turing; Starr (1979); Finch, Mendell, and Thode (1989) # compute log-relative-error (LRE) of qt() results, compared to correct values Take likelihood at random samples of parameter space, de data(ttst) lrq = LRE (qt(ttst$p,ttst$df),ttst$invt) Haan (1981); Veall (1989). Choose, n , samples for the parameter vector using a uniform # if there are low LRE's avoid qt() in those areas table(trunc(lrq)) density, evaluate likelihood L() (1-p) level confidence interval for global max: -Inf 3 4 5 6 7 8 9 Inf 2 1 17 1558 5143 650 40 7 34 − L L + max 2 nd max L , L # can use LRE to explore stability of inverse functions max max - 1 n − p 1 > p.rand=runif(100000) > df=trunc(runif(10000,min=1,max=200)) No guarantees, but acts as a sanity check > p.rand=runif(100000) > df.rand=trunc(runif(100000,min=1,max=200)) > table(trunc(LRE(p.rand,qt(pt(p.rand,df.rand),df.rand) ))) Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 11 Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 12

Truly Random Sensitivity Analysis Replication on multiple platforms Sensitivity to PRNG choice Sensitivity to choice of optimization algorithm Sensitivity to data perturbations Use entropy gathered from outside sources: Local keystrokes and hardware resetSeed() interrupts Set PRNG seed using true random value Radioactive decay at Fermilab runift() True random variates, from entropy pool (Lead underwear optional) (slow) runifs() PRNG sequences, periodically reseeded Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 13 Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 14 Data Perturbations Interpreted in Other Perturbations of Data In Statistical Context Frameworks Cook [1986], Laurent & Cook [1993] Beaton, Rubin & Baron [1976]; Gill, et. al [1981]; If L and ω well behaved… Straightforward mapping between perturbation of Chaitin-Chatelin, F, and Traviesas-Caasan [2004] Perturbation in data as sensitivity test for computational data and perturbation of model problems Small normally-distributed noise added to data Belsley [1991]; Hendrickx J, Belzer B, te Grotenhuis small shift to L M, Lammers J (2004) Cook defines worst case likelihood distance: [ ] ( ) ( ) Perturbation/permutation of data as collinearity diagnostic ( ) ˆ ˆ = − LD ω 2 L θ L θ ω Can be interpreted in terms of [ ] { ( ) } ( ) ( ) Bottom Line: ˆ − < 2 θ | 2 L θ L θ χ p α If the model is sensitive to a little noise, beware! Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 15 Micah Altman, Harvard University R Modules for Accurate and Reliable Statistica 16

Recommend

More recommend