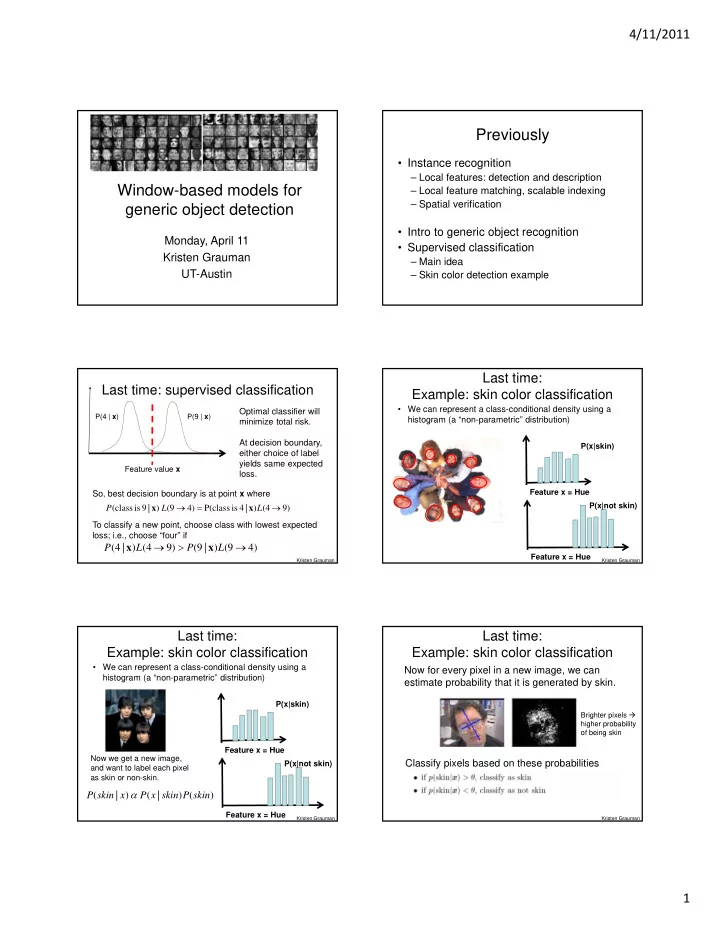

4/11/2011

Window-based models for generic object detection
Monday, April 11
Kristen Grauman
UT-Austin

Previously
• Instance recognition
– Local features: detection and description
– Local feature matching, scalable indexing
– Spatial verification
• Intro to generic object recognition
• Supervised classification
– Main idea
– Skin color detection example

Last time: supervised classification
Optimal classifier will minimize total risk. At the decision boundary, either choice of label yields the same expected loss.
[Figure: $P(4 \mid x)$ and $P(9 \mid x)$ plotted against feature value $x$]
So the best decision boundary is at the point $x$ where
$$P(\text{class is } 9 \mid x)\, L(9 \to 4) = P(\text{class is } 4 \mid x)\, L(4 \to 9)$$
Here $L(a \to b)$ is the loss incurred when a true $a$ is labeled $b$.
To classify a new point, choose the class with the lowest expected loss; i.e., choose "four" if
$$P(4 \mid x)\, L(4 \to 9) > P(9 \mid x)\, L(9 \to 4)$$

Last time: Example: skin color classification
• We can represent a class-conditional density using a histogram (a "non-parametric" distribution)
[Figure: histograms of $P(x \mid \text{skin})$ and $P(x \mid \text{not skin})$, feature $x$ = hue]
Now we get a new image, and want to label each pixel as skin or non-skin.
$$P(\text{skin} \mid x) \propto P(x \mid \text{skin})\, P(\text{skin})$$

Last time: Example: skin color classification
Now for every pixel in a new image, we can estimate the probability that it is generated by skin.
[Figure: brighter pixels indicate higher probability of being skin]
Classify pixels based on these probabilities.
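The histogram-based skin classifier recapped above can be sketched in a few lines. This is a minimal illustration, not the lecture's own code: the hue values are synthetic, and the names `fit_hue_histogram`, `skin_posterior`, the bin count, and the prior of 0.5 are all assumptions made for the example. Thresholding the posterior at 0.5 corresponds to the minimum-risk rule when both kinds of error carry equal loss.

```python
import numpy as np

def fit_hue_histogram(hues, n_bins=32):
    """Estimate P(x | class) as a normalized histogram over hue in [0, 1)."""
    counts, _ = np.histogram(hues, bins=n_bins, range=(0.0, 1.0))
    counts = counts + 1e-6  # small constant so no bin has exactly zero probability
    return counts / counts.sum()

def skin_posterior(hue_image, p_x_skin, p_x_not, prior_skin=0.5):
    """Per-pixel P(skin | x) from Bayes' rule, using the two class histograms."""
    n_bins = len(p_x_skin)
    idx = np.clip((hue_image * n_bins).astype(int), 0, n_bins - 1)
    numerator = p_x_skin[idx] * prior_skin
    denominator = numerator + p_x_not[idx] * (1.0 - prior_skin)
    return numerator / denominator

# Synthetic training data (an assumption): skin hues cluster near 0.05,
# non-skin hues are spread uniformly over the hue range.
rng = np.random.default_rng(0)
skin_hues = np.clip(rng.normal(0.05, 0.02, 5000), 0.0, 0.999)
other_hues = rng.uniform(0.0, 1.0, 5000)

p_x_skin = fit_hue_histogram(skin_hues)
p_x_not = fit_hue_histogram(other_hues)

# A tiny 2x2 "image" of hue values to classify.
test_image = np.array([[0.05, 0.60],
                       [0.04, 0.90]])
posterior = skin_posterior(test_image, p_x_skin, p_x_not)
skin_mask = posterior > 0.5  # equal-loss decision rule
print(skin_mask)
```

With unequal losses, the threshold would move away from 0.5 in the direction that makes the costlier mistake rarer.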

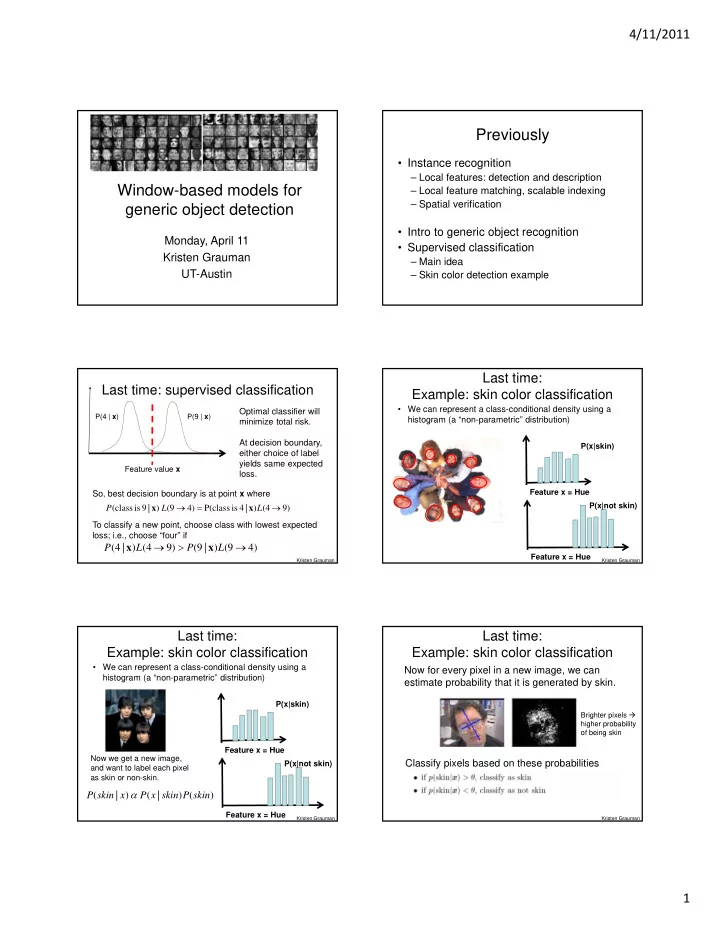

Today
• Window-based generic object detection
– basic pipeline
– boosting classifiers
– face detection as case study

Generic category recognition: basic framework
• Build/train object model
– Choose a representation
– Learn or fit parameters of model / classifier
• Generate candidates in new image
• Score the candidates

Generic category recognition: representation choice
• Window-based
• Part-based

Window-based models: Building an object model
Simple holistic descriptions of image content
• grayscale / color histogram
• vector of pixel intensities

Window-based models: Building an object model
• Pixel-based representations sensitive to small shifts
• Color or grayscale-based appearance description can be sensitive to illumination and intra-class appearance variation

Window-based models: Building an object model
• Consider edges, contours, and (oriented) intensity gradients
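The sensitivities noted above can be seen on a toy example. The sketch below is illustrative only: it uses a random 24 x 24 window, a circular one-pixel shift (chosen for simplicity), and a uniform brightness offset of 40 gray levels, all of which are assumptions. It compares a raw pixel vector with a grayscale histogram under the shift, then checks the histogram under the brightness change.

```python
import numpy as np

rng = np.random.default_rng(0)
window = rng.integers(0, 256, size=(24, 24)).astype(float)

# Circular shift by one pixel to the right (keeps the same set of pixel values).
shifted = np.roll(window, shift=1, axis=1)
# Uniform brightening, clipped to the valid gray-level range.
brighter = np.clip(window + 40, 0, 255)

def pixel_vector(img):
    """Holistic descriptor 1: the raw intensities as one long vector."""
    return img.ravel()

def gray_histogram(img, n_bins=16):
    """Holistic descriptor 2: normalized histogram of gray levels."""
    counts, _ = np.histogram(img, bins=n_bins, range=(0, 256))
    return counts / counts.sum()

# Distance between descriptors of the original and the shifted window.
pixel_dist = np.linalg.norm(pixel_vector(window) - pixel_vector(shifted))
hist_dist = np.linalg.norm(gray_histogram(window) - gray_histogram(shifted))

# Distance between histograms of the original and the brightened window.
illum_hist_dist = np.linalg.norm(gray_histogram(window) - gray_histogram(brighter))

print(pixel_dist, hist_dist, illum_hist_dist)
```

A random-noise window is an extreme case, but it makes the contrast plain: the pixel vector changes under the shift while the histogram does not, and the histogram in turn changes under the brightness offset.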

Window-based models: Building an object model
• Consider edges, contours, and (oriented) intensity gradients
• Summarize local distribution of gradients with histogram
– Locally orderless: offers invariance to small shifts and rotations
– Contrast-normalization: try to correct for variable illumination

Window-based models: Building an object model
Given the representation, train a binary classifier
[Figure: car/non-car classifier applied to two windows: "No, not a car." and "Yes, car."]

Generic category recognition: basic framework
• Build/train object model
– Choose a representation
– Learn or fit parameters of model / classifier
• Generate candidates in new image
• Score the candidates

Discriminative classifier construction
• Nearest neighbor ($10^6$ examples): Shakhnarovich, Viola, Darrell 2003; Berg, Berg, Malik 2005; …
• Neural networks: LeCun, Bottou, Bengio, Haffner 1998; Rowley, Baluja, Kanade 1998; …
• Support Vector Machines: Guyon, Vapnik; Heisele, Serre, Poggio 2001; …
• Boosting: Viola, Jones 2001; Torralba et al. 2004; Opelt et al. 2006; …
• Conditional Random Fields: McCallum, Freitag, Pereira 2000; Kumar, Hebert 2003; …
Slide adapted from Antonio Torralba

Window-based models: Generating and scoring candidates
[Figure: window slid across the image and scored by the car/non-car classifier]

Window-based object detection: recap
Training:
1. Obtain training data
2. Define features
3. Define classifier
Given new image:
1. Slide window
2. Score by classifier
[Figure: training examples, feature extraction, car/non-car classifier]
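The test-time half of the recap (slide a window, score it with the classifier) can be sketched as below. Everything specific here is an assumption for illustration: the 16-pixel window, the stride of 4, the 8-bin gradient orientation histogram, and especially `linear_score`, whose hand-set weights stand in for a classifier that would normally be learned from training data.

```python
import numpy as np

def gradient_orientation_histogram(window, n_bins=8):
    """Histogram of unsigned gradient orientations, weighted by magnitude."""
    gy, gx = np.gradient(window.astype(float))
    magnitude = np.hypot(gx, gy)
    orientation = np.arctan2(gy, gx) % np.pi  # fold into [0, pi)
    hist, _ = np.histogram(orientation, bins=n_bins,
                           range=(0.0, np.pi), weights=magnitude)
    norm = np.linalg.norm(hist)
    return hist / norm if norm > 0 else hist  # contrast normalization

def sliding_window_detect(image, score_fn, win=16, stride=4, threshold=0.0):
    """Score every window position; keep those above the threshold."""
    detections = []
    height, width = image.shape
    for top in range(0, height - win + 1, stride):
        for left in range(0, width - win + 1, stride):
            feat = gradient_orientation_histogram(
                image[top:top + win, left:left + win])
            score = score_fn(feat)
            if score > threshold:
                detections.append((top, left, score))
    return detections

# Hypothetical linear classifier: rewards horizontal intensity change
# (orientation bin 0) and penalizes every other orientation.
weights = np.array([1.0] + [-1.0] * 7)
bias = -0.5

def linear_score(feat):
    return float(weights @ feat + bias)

# Toy image: flat background with a 16x16 patch of vertical stripes.
image = np.zeros((48, 48))
stripes = (np.arange(16) // 2) % 2  # 0,0,1,1,0,0,1,1,...
image[16:32, 16:32] = stripes

detections = sliding_window_detect(image, linear_score)
locations = {(top, left) for top, left, _ in detections}
print(sorted(locations))
```

Several overlapping windows fire around the striped patch, which is typical of sliding-window detectors and is why a merging step such as non-maximum suppression usually follows.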

Discriminative classifier construction
• Nearest neighbor ($10^6$ examples): Shakhnarovich, Viola, Darrell 2003; Berg, Berg, Malik 2005; …
• Neural networks: LeCun, Bottou, Bengio, Haffner 1998; Rowley, Baluja, Kanade 1998; …
• Support Vector Machines: Guyon, Vapnik; Heisele, Serre, Poggio 2001; …
• Boosting: Viola, Jones 2001; Torralba et al. 2004; Opelt et al. 2006; …
• Conditional Random Fields: McCallum, Freitag, Pereira 2000; Kumar, Hebert 2003; …
Slide adapted from Antonio Torralba

Boosting intuition
[Figure: Weak Classifier 1]
Slide credit: Paul Viola

Boosting illustration
[Figure sequence: weights increased; Weak Classifier 2; weights increased; Weak Classifier 3]

Boosting illustration
[Figure: final classifier is a combination of weak classifiers]

Boosting: training
• Initially, weight each training example equally
• In each boosting round:
– Find the weak learner that achieves the lowest weighted training error
– Raise weights of training examples misclassified by current weak learner
• Compute final classifier as linear combination of all weak learners (the weight of each learner grows with its accuracy)
• Exact formulas for re-weighting and combining weak learners depend on the particular boosting scheme (e.g., AdaBoost)
Slide credit: Lana Lazebnik

Boosting: pros and cons
• Advantages of boosting
– Integrates classification with feature selection
– Complexity of training is linear in the number of training examples
– Flexibility in the choice of weak learners, boosting scheme
– Testing is fast
– Easy to implement
• Disadvantages
– Needs many training examples
– Often found not to work as well as an alternative discriminative classifier, support vector machine (SVM), especially for many-class problems
Slide credit: Lana Lazebnik

Viola-Jones face detector
Main idea:
– Represent local texture with efficiently computable "rectangular" features within window of interest
– Select discriminative features to be weak classifiers
– Use boosted combination of them as final classifier
– Form a cascade of such classifiers, rejecting clear negatives quickly

Viola-Jones detector: features
"Rectangular" filters
• Feature output is difference between adjacent regions
• Efficiently computable with integral image: any sum can be computed in constant time
• Integral image: value at (x,y) is the sum of pixels above and to the left of (x,y)
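The integral image idea above can be sketched with NumPy. The function names and the zero-padded layout (an extra leading row and column, so that `ii[y, x]` holds the sum of `img[:y, :x]`) are choices made for this example, not something the slides specify. The two-rectangle feature is one hypothetical instance of a "rectangular" filter.

```python
import numpy as np

def integral_image(img):
    """Padded integral image: ii[y, x] is the sum of img[:y, :x]."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of the original pixels in a rectangle, using four lookups."""
    bottom, right = top + height, left + width
    return (ii[bottom, right] - ii[top, right]
            - ii[bottom, left] + ii[top, left])

def two_rect_feature(ii, top, left, height, width):
    """Left half minus right half of a window (width assumed even)."""
    half = width // 2
    left_sum = rect_sum(ii, top, left, height, half)
    right_sum = rect_sum(ii, top, left + half, height, half)
    return left_sum - right_sum

img = np.arange(36).reshape(6, 6)
ii = integral_image(img)

# The constant-time lookup agrees with a direct sum over the same pixels.
print(rect_sum(ii, 1, 2, 3, 2), img[1:4, 2:4].sum())
print(two_rect_feature(ii, 0, 0, 6, 6))
```

The cost of `rect_sum` does not depend on the rectangle's size, which is what makes it cheap to evaluate the same filter at many positions and scales.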

Computing sum within a rectangle
• Let A, B, C, D be the values of the integral image at the corners of a rectangle
• Then the sum of original image values within the rectangle can be computed as: $\text{sum} = A - B - C + D$
• Only 3 additions are required for any size of rectangle!
[Figure: rectangle with corners D (top left), B (top right), C (bottom left), A (bottom right)]
Slide credit: Lana Lazebnik

Viola-Jones detector: features
"Rectangular" filters
• Feature output is difference between adjacent regions
• Efficiently computable with integral image: any sum can be computed in constant time
• Integral image: value at (x,y) is the sum of pixels above and to the left of (x,y)
• Avoid scaling images: scale features directly for same cost

Viola-Jones detector: features
Considering all possible filter parameters (position, scale, and type): 180,000+ possible features associated with each 24 x 24 window
Which subset of these features should we use to determine if a window has a face?
Use AdaBoost both to select the informative features and to form the classifier

Viola-Jones detector: AdaBoost
• Want to select the single rectangle feature and threshold that best separates positive (faces) and negative (non-faces) training examples, in terms of weighted error.
• Resulting weak classifier: [equation: threshold test on the selected feature's output]
• For next round, reweight the examples according to errors, choose another filter/threshold combo.
[Figure: outputs of a possible rectangle feature on faces and non-faces]

AdaBoost Algorithm
Start with uniform weights on training examples $\{x_1, \ldots, x_n\}$
For T rounds:
• Evaluate weighted error for each feature, pick best.
• Re-weight the examples: incorrectly classified -> more weight, correctly classified -> less weight
Final classifier is combination of the weak ones, weighted according to the error they had.
Freund & Schapire 1995

Viola-Jones Face Detector: Results
[Figure: first two features selected]
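The AdaBoost loop summarized above can be sketched with threshold "stumps" as weak classifiers, each testing a single feature against a threshold. This is a minimal sketch under stated assumptions: it uses the standard discrete AdaBoost weight $\alpha = \tfrac{1}{2}\ln\frac{1-\epsilon}{\epsilon}$, an exhaustive search over thresholds, and a six-point toy dataset. The function names are hypothetical, and the feature columns here are plain numbers rather than rectangle-filter outputs.

```python
import numpy as np

def stump_predict(X, feature, threshold, polarity):
    """Weak classifier: +1 on one side of the threshold, -1 on the other."""
    return np.where(polarity * (X[:, feature] - threshold) >= 0, 1, -1)

def train_adaboost(X, y, n_rounds):
    """X: (n, d) feature values, y: labels in {-1, +1}."""
    n, d = X.shape
    w = np.full(n, 1.0 / n)  # start with uniform example weights
    stumps = []
    for _ in range(n_rounds):
        best = None
        # Evaluate weighted error for every feature/threshold/polarity combo.
        for feature in range(d):
            for threshold in np.unique(X[:, feature]):
                for polarity in (1, -1):
                    pred = stump_predict(X, feature, threshold, polarity)
                    err = w[pred != y].sum()
                    if best is None or err < best[0]:
                        best = (err, feature, threshold, polarity, pred)
        err, feature, threshold, polarity, pred = best
        err = np.clip(err, 1e-10, 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * np.log((1 - err) / err)  # lower error -> larger vote
        # Misclassified examples gain weight, correct ones lose weight.
        w *= np.exp(-alpha * y * pred)
        w /= w.sum()
        stumps.append((feature, threshold, polarity, alpha))
    return stumps

def predict_adaboost(stumps, X):
    """Sign of the alpha-weighted vote of all weak classifiers."""
    total = np.zeros(X.shape[0])
    for feature, threshold, polarity, alpha in stumps:
        total += alpha * stump_predict(X, feature, threshold, polarity)
    return np.where(total >= 0, 1, -1)

# Toy 1-D problem that no single threshold can solve: + + - - + +
X = np.array([[0.0], [1.0], [2.0], [3.0], [4.0], [5.0]])
y = np.array([1, 1, -1, -1, 1, 1])

one_round = predict_adaboost(train_adaboost(X, y, n_rounds=1), X)
three_rounds = predict_adaboost(train_adaboost(X, y, n_rounds=3), X)
print((one_round == y).mean(), (three_rounds == y).mean())
```

On this toy set a single stump always misclassifies at least two points, while three boosted stumps separate all six, which mirrors the weak-classifier illustration earlier in the lecture. In the Viola-Jones setting, each column of `X` would hold the output of one rectangle feature, so picking the best stump also selects a feature.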
