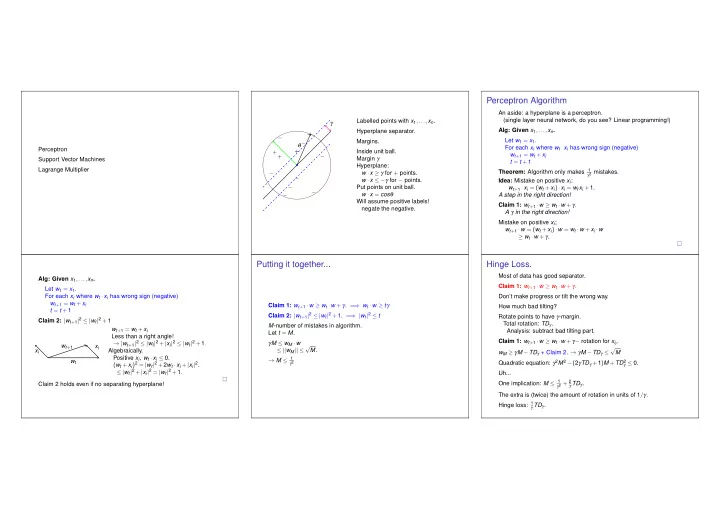

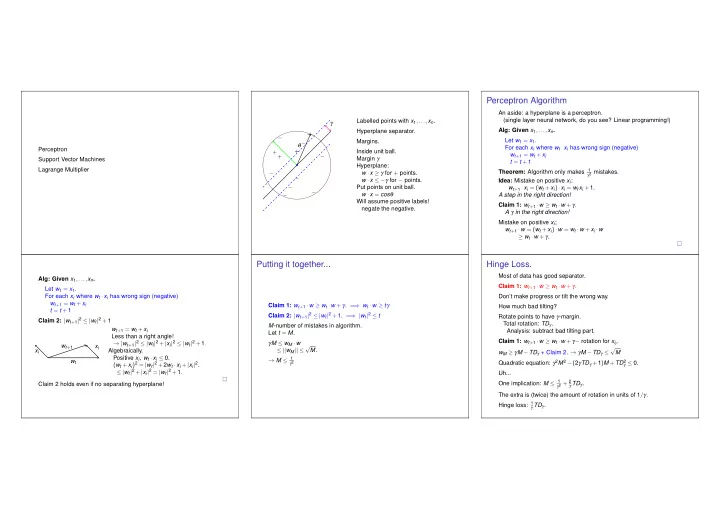

Linear programming! Perceptron. Support Vector Machines. Lagrange Multiplier.

Labelled points with $x_1, \ldots, x_n$. Hyperplane separator. Margins. Inside unit ball. Margin $\gamma$.

Hyperplane: $w \cdot x \geq \gamma$ for $+$ points, $w \cdot x \leq -\gamma$ for $-$ points. Put points on unit ball: $w \cdot x = \cos\theta$. Will assume positive labels! Negate the negative.

Perceptron Algorithm. An aside: a hyperplane is a perceptron. (Single layer neural network, do you see?)

Alg: Given $x_1, \ldots, x_n$. Let $w_1 = x_1$. For each $x_i$ where $w_t \cdot x_i$ has wrong sign (negative): $w_{t+1} = w_t + x_i$, $t = t + 1$.

Theorem: Algorithm only makes $\frac{1}{\gamma^2}$ mistakes.

Idea: Mistake on positive $x_i$: $w_{t+1} \cdot x_i = (w_t + x_i) \cdot x_i = w_t \cdot x_i + 1$. A step in the right direction!

Claim 1: $w_{t+1} \cdot w \geq w_t \cdot w + \gamma$. A $\gamma$ in the right direction! Mistake on positive $x_i$: $w_{t+1} \cdot w = (w_t + x_i) \cdot w = w_t \cdot w + x_i \cdot w \geq w_t \cdot w + \gamma$.

Claim 2: $|w_{t+1}|^2 \leq |w_t|^2 + 1$. Here $w_{t+1} = w_t + x_i$. Less than a right angle! $\rightarrow |w_{t+1}|^2 \leq |w_t|^2 + |x_i|^2 \leq |w_t|^2 + 1$.

Algebraically. Positive $x_i$, $w_t \cdot x_i \leq 0$. $(w_t + x_i)^2 = |w_t|^2 + 2 w_t \cdot x_i + |x_i|^2 \leq |w_t|^2 + |x_i|^2 = |w_t|^2 + 1$. Claim 2 holds even if no separating hyperplane!

Putting it together... Claim 1: $w_{t+1} \cdot w \geq w_t \cdot w + \gamma \implies w_t \cdot w \geq t\gamma$. Claim 2: $|w_{t+1}|^2 \leq |w_t|^2 + 1 \implies |w_t|^2 \leq t$. $M$ - number of mistakes in algorithm. Let $t = M$. $\gamma M \leq w_M \cdot w \leq \|w_M\| \leq \sqrt{M}$. $\rightarrow M \leq \frac{1}{\gamma^2}$.

Hinge Loss. Most of data has good separator. Don't make progress or tilt the wrong way. How much bad tilting? Rotate points to have $\gamma$-margin. Total rotation: $TD_\gamma$.

Analysis: subtract bad tilting part. Claim 1: $w_{t+1} \cdot w \geq w_t \cdot w + \gamma - (\text{rotation for } x_{i_t})$. $\rightarrow w_M \cdot w \geq \gamma M - TD_\gamma$. + Claim 2. $\rightarrow \gamma M - TD_\gamma \leq \sqrt{M}$.

Quadratic equation: $\gamma^2 M^2 - (2\gamma\, TD_\gamma + 1) M + TD_\gamma^2 \leq 0$. Uh...

One implication: $M \leq \frac{1}{\gamma^2} + \frac{2}{\gamma} TD_\gamma$. The extra is (twice) the amount of rotation in units of $1/\gamma$. Hinge loss: $\frac{1}{\gamma} TD_\gamma$.
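The perceptron update and the $1/\gamma^2$ mistake bound can be checked numerically. The following is a minimal sketch, not the lecture's own code: the helper names (`make_points`, `perceptron`), the random data, and the choice $\gamma = 0.1$ are all assumptions made for illustration. Points are unit vectors with the negatives already negated, so every point should end up with a positive dot product.

```python
import math
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    n = math.sqrt(dot(v, v))
    return [a / n for a in v]

def make_points(n, dim, gamma, w_star, rng):
    """Hypothetical data: random unit vectors kept only if w_star . x >= gamma."""
    pts = []
    while len(pts) < n:
        x = normalize([rng.gauss(0, 1) for _ in range(dim)])
        if dot(x, w_star) >= gamma:
            pts.append(x)
    return pts

def perceptron(points, max_passes=1000):
    """Start with w_1 = x_1; add x_i to w whenever w . x_i has the wrong sign."""
    w = list(points[0])
    mistakes = 0
    for _ in range(max_passes):
        updated = False
        for x in points:
            if dot(w, x) <= 0:  # wrong sign: a mistake
                w = [a + b for a, b in zip(w, x)]
                mistakes += 1
                updated = True
        if not updated:  # a full pass with no mistakes: done
            break
    return w, mistakes

rng = random.Random(0)  # fixed seed, illustration only
gamma = 0.1
w_star = normalize([1.0, 1.0, 1.0])  # assumed true separator
points = make_points(200, 3, gamma, w_star, rng)
w, mistakes = perceptron(points)
print(mistakes, "mistakes; bound is", 1 / gamma ** 2)
```

Repeated passes over the data are used here so the loop runs until no point is misclassified; the mistake bound covers the total number of updates across all passes.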

Approximately Maximizing Margin Algorithm. There is a $\gamma$ separating hyperplane. Find it! (Kind of.) Any point within $\gamma/2$ is still a mistake.

Let $w_1 = x_1$. For each $x_2, \ldots, x_n$: if $w_t \cdot x_i < \gamma/2$, $w_{t+1} = w_t + x_i$, $t = t + 1$.

Claim 1: $w_{t+1} \cdot w \geq w_t \cdot w + \gamma$. Same (ish) as before.

Margin Approximation: Claim 2. Claim 2(?): $|w_{t+1}|^2 \leq |w_t|^2 + 1$?? Adding $x_i$ to $w_t$ even if in correct direction.

Obtuse triangle. $|v|^2 \leq |w_t|^2 + 1 \rightarrow |v| \leq |w_t| + \frac{1}{2|w_t|}$ (square right hand side.) Red bit is at most $\gamma/2$.

Together: $|w_{t+1}| \leq |w_t| + \frac{1}{2|w_t|} + \frac{\gamma}{2}$. If $|w_t| \geq \frac{2}{\gamma}$, then $|w_{t+1}| \leq |w_t| + \frac{3}{4}\gamma$.

$M$ updates: $|w_M| \leq \frac{2}{\gamma} + \frac{3}{4}\gamma M$. Claim 1 implies $|w_M| \geq \gamma M$. $\gamma M \leq \frac{2}{\gamma} + \frac{3}{4}\gamma M \rightarrow M \leq \frac{8}{\gamma^2}$.

Other fat separators? No hyperplane separator. Circle separator! Map points to three dimensions: map point $(x, y)$ to point $(x, y, x^2 + y^2)$. Hyperplane separator in three dimensions.

Kernel Functions. Map $x$ to $\phi(x)$. Hyperplane separator for points under $\phi(\cdot)$. Problem: complexity of computing in higher dimension.

Recall perceptron. Only compute dot products! Test: $w_t \cdot x_i > \gamma$, with $w_t = x_{i_1} + x_{i_2} + x_{i_3} + \cdots$. Support Vectors: $x_{i_1}, x_{i_2}, \ldots$ $\rightarrow$ Support Vector Machine.

Kernel trick: compute dot products in original space. Kernel function for mapping $\phi(\cdot)$: $K(x, y) = \phi(x) \cdot \phi(y)$.

$K(x, y) = (1 + x \cdot y)^d$, $\phi(x) = [1, \ldots, x_i, \ldots, x_i x_j, \ldots]$. Polynomial.

$K(x, y) = (1 + x_1 y_1)(1 + x_2 y_2) \cdots (1 + x_n y_n)$, $\phi(x)$ - products of all subsets. Boolean Fourier basis.

$K(x, y) = \exp(C|x - y|^2)$ (with $C < 0$). Infinite dimensional space. Expansion of $e^z$. Gaussian Kernel.

Video. "http://www.youtube.com/watch?v=3liCbRZPrZA"
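The kernel trick for the circle example can be sketched with a kernelized perceptron: $w$ is never formed explicitly, only the counts of how often each point was added, and every test uses $K(x, y) = (1 + x \cdot y)^2$ in the original two dimensions. This is an illustrative sketch under assumptions: the ring-shaped data, the radii, and the names `kernel_perceptron` and `ring_point` are hypothetical, and labels are kept explicit rather than negating points.

```python
import math
import random

def poly_kernel(x, y, d=2):
    """K(x, y) = (1 + x . y)^d, computed in the original space."""
    return (1.0 + sum(a * b for a, b in zip(x, y))) ** d

def kernel_perceptron(points, labels, kernel, max_passes=1000):
    """w = sum_i alpha_i * y_i * phi(x_i) is stored only through alpha."""
    n = len(points)
    alpha = [0] * n
    alpha[0] = 1  # w_1 = y_1 * phi(x_1)

    def score(x):
        # w . phi(x), using only kernel evaluations
        return sum(a * y * kernel(p, x)
                   for a, y, p in zip(alpha, labels, points) if a)

    for _ in range(max_passes):
        updated = False
        for i in range(n):
            if labels[i] * score(points[i]) <= 0:  # wrong sign: add point i
                alpha[i] += 1
                updated = True
        if not updated:
            break
    return alpha, score

def ring_point(r_lo, r_hi, rng):
    """Hypothetical data generator: a point with radius in [r_lo, r_hi]."""
    r = rng.uniform(r_lo, r_hi)
    t = rng.uniform(0, 2 * math.pi)
    return (r * math.cos(t), r * math.sin(t))

rng = random.Random(1)  # fixed seed, illustration only
points = ([ring_point(0.0, 0.8, rng) for _ in range(20)] +
          [ring_point(1.2, 1.6, rng) for _ in range(20)])
labels = [1] * 20 + [-1] * 20  # + inside the circle, - outside

alpha, score = kernel_perceptron(points, labels, poly_kernel)
train_errors = sum(1 for x, y in zip(points, labels) if y * score(x) <= 0)
support = [i for i, a in enumerate(alpha) if a > 0]
print("training errors:", train_errors, "support vectors:", len(support))
```

The points with nonzero count play the role of the support vectors $x_{i_1}, x_{i_2}, \ldots$ in the slides. The degree-2 feature map contains $x^2$ and $y^2$, which is why a circle in the plane becomes a hyperplane under $\phi$.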

Support Vector Machine. Pick Kernel. Run algorithm that: (1) Uses dot products. (2) Outputs hyperplane that is linear combination of points. Perceptron.

Max Margin Problem as Convex optimization: $\min |w|^2$ where $\forall i\; w \cdot x_i \geq 1$. Algorithms output: tight hyperplanes! Solution is linear combination of hyperplanes $w = \alpha_1 x_1 + \alpha_2 x_2 + \cdots$.

With Kernel $\phi(\cdot)$: problem is to find $\alpha_i$ where $\forall i\; \left(\sum_j \alpha_j \phi(x_j)\right) \cdot \phi(x_i) \geq 1$.

Lagrangian Dual. Find $x$, subject to $f_i(x) \leq 0$, $i = 1, \ldots, m$. Remember calculus (constrained optimization.) Lagrangian: $L(x, \lambda) = \sum_{i=1}^m \lambda_i f_i(x)$. $\lambda_i \geq 0$ - Lagrangian multiplier for inequality $i$.

For feasible solution $x$, $L(x, \lambda)$ is (A) non-negative in expectation, (B) positive for any $\lambda$, (C) non-positive for any valid $\lambda$. Answer: (C).

If $\exists \lambda \geq 0$ where $L(x, \lambda)$ is positive for all $x$: (A) there is no feasible $x$. (B) there is no $x, \lambda$ with $L(x, \lambda) < 0$.

Lagrangian: constrained optimization. $\min f(x)$ subject to $f_i(x) \leq 0$, $i = 1, \ldots, m$. Lagrangian function: $L(x, \lambda) = f(x) + \sum_{i=1}^m \lambda_i f_i(x)$.

If (primal) $x$ has value $v$: $f(x) = v$ and all $f_i(x) \leq 0$. For all $\lambda \geq 0$ have $L(x, \lambda) \leq v$. Maximizing $\lambda$: only positive $\lambda_i$ when $f_i(x) = 0$, which implies $L(x, \lambda) \geq f(x) = v$.

If there is $\lambda$ with $L(x, \lambda) \geq \alpha$ for all $x$: optimum value of program is at least $\alpha$.

Primal problem: $x$ that minimizes the maximum of $L(x, \lambda)$ over all $\lambda \geq 0$. Dual problem: $\lambda$ that maximizes the minimum of $L(x, \lambda)$ over all $x$.

Why important: KKT. Karush, Kuhn and Tucker Conditions. $\min f(x)$ subject to $f_i(x) \leq 0$, $i = 1, \ldots, m$. $L(x, \lambda) = f(x) + \sum_{i=1}^m \lambda_i f_i(x)$.

Local minima for feasible $x^*$. There exist multipliers $\lambda$, where $\nabla f(x^*) + \sum_i \lambda_i \nabla f_i(x^*) = 0$. Feasible primal, $f_i(x^*) \leq 0$, and feasible dual, $\lambda_i \geq 0$. Complementary slackness: $\lambda_i f_i(x^*) = 0$. Launched nonlinear programming! See paper.

Linear Program. $\min cx$, $Ax \geq b$. That is: $\min c \cdot x$ subject to $b_i - a_i \cdot x \leq 0$, $i = 1, \ldots, m$.

Lagrangian (Dual): $L(\lambda, x) = cx + \sum_i \lambda_i (b_i - a_i x)$, or $L(\lambda, x) = -\left(\sum_j x_j (a_j \lambda - c_j)\right) + b\lambda$, where $a_j$ now denotes column $j$ of $A$.

Best $\lambda$? $\max b \cdot \lambda$ where $a_j \lambda = c_j$. That is: $\max b\lambda$, $\lambda^T A = c$, $\lambda \geq 0$. Duals!
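The linear programming dual can be checked on a tiny instance. The sketch below uses a made-up 2-by-2 program (the matrix `A`, vectors `b` and `c`, and the candidate solutions `x_star` and `lam_star` are assumptions chosen for illustration, not from the lecture). It verifies primal and dual feasibility, that the two objective values agree, that $L(\lambda, x) = b \cdot \lambda$ for every $x$ once $\lambda^T A = c$, and complementary slackness. Exact fractions avoid rounding issues.

```python
from fractions import Fraction as F

# Hypothetical instance: min c.x subject to A x >= b
A = [[F(1), F(2)],
     [F(2), F(1)]]
b = [F(2), F(2)]
c = [F(1), F(1)]

def dot(u, v):
    return sum(p * q for p, q in zip(u, v))

def lagrangian(lam, x):
    """L(lambda, x) = c.x + sum_i lambda_i * (b_i - a_i . x)"""
    return dot(c, x) + sum(l * (bi - dot(ai, x))
                           for l, bi, ai in zip(lam, b, A))

x_star = [F(2, 3), F(2, 3)]    # candidate primal solution
lam_star = [F(1, 3), F(1, 3)]  # candidate dual solution

# Primal feasibility: a_i . x >= b_i for every row i
primal_feasible = all(dot(ai, x_star) >= bi for ai, bi in zip(A, b))

# Dual feasibility: lambda >= 0 and lambda^T A = c (one equation per column)
columns = list(zip(*A))
dual_feasible = (all(l >= 0 for l in lam_star) and
                 all(dot(col, lam_star) == cj for col, cj in zip(columns, c)))

primal_value = dot(c, x_star)
dual_value = dot(b, lam_star)

# With lambda^T A = c the x terms cancel, so L no longer depends on x
other_xs = ([F(0), F(0)], [F(5), F(-3)], [F(1), F(1)])
lagrangian_values = [lagrangian(lam_star, x) for x in other_xs]

# Complementary slackness: lambda_i * (b_i - a_i . x*) = 0
slackness = [l * (bi - dot(ai, x_star))
             for l, bi, ai in zip(lam_star, b, A)]

print(primal_value, dual_value, lagrangian_values, slackness)
```

Because the dual value is a lower bound on every feasible primal value, a feasible pair with equal objective values certifies that both are optimal.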
