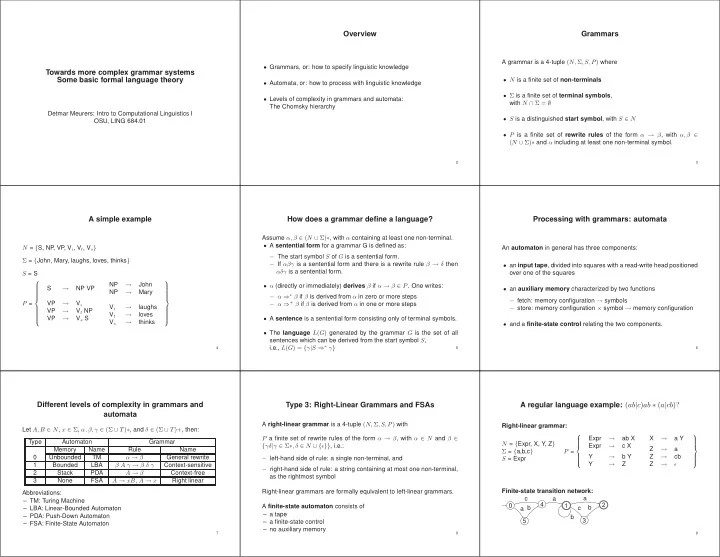

Towards more complex grammar systems
Some basic formal language theory

Detmar Meurers: Intro to Computational Linguistics I
OSU, LING 684.01

Overview

• Grammars, or: how to specify linguistic knowledge
• Automata, or: how to process with linguistic knowledge
• Levels of complexity in grammars and automata: The Chomsky hierarchy

Grammars

A grammar is a 4-tuple (N, Σ, S, P) where

• N is a finite set of non-terminals
• Σ is a finite set of terminal symbols, with N ∩ Σ = ∅
• S is a distinguished start symbol, with S ∈ N
• P is a finite set of rewrite rules of the form α → β, with α, β ∈ (N ∪ Σ)∗ and α including at least one non-terminal symbol.

A simple example

N = { S, NP, VP, V_i, V_t, V_s }

Σ = { John, Mary, laughs, loves, thinks }

S = S

P = { S → NP VP, NP → John, NP → Mary, VP → V_i, VP → V_t NP, VP → V_s S, V_i → laughs, V_t → loves, V_s → thinks }

How does a grammar define a language?

Assume α, β ∈ (N ∪ Σ)∗, with α containing at least one non-terminal.

• A sentential form for a grammar G is defined as:
  − The start symbol S of G is a sentential form.
  − If αβγ is a sentential form and there is a rewrite rule β → δ then αδγ is a sentential form.
• α (directly or immediately) derives β if α → β ∈ P. One writes:
  − α ⇒∗ β if β is derived from α in zero or more steps
  − α ⇒+ β if β is derived from α in one or more steps
• A sentence is a sentential form consisting only of terminal symbols.
• The language L(G) generated by the grammar G is the set of all sentences which can be derived from the start symbol S, i.e., L(G) = { γ ∈ Σ∗ | S ⇒∗ γ }

Processing with grammars: automata

An automaton in general has three components:

• an input tape, divided into squares with a read-write head positioned over one of the squares
• an auxiliary memory characterized by two functions
  − fetch: memory configuration → symbols
  − store: memory configuration × symbol → memory configuration
• and a finite-state control relating the two components.

Different levels of complexity in grammars and automata

Let A, B ∈ N, x ∈ Σ, α, β, γ ∈ (Σ ∪ N)∗, and δ ∈ (Σ ∪ N)+, then:

| Type | Automaton memory | Automaton name | Grammar rule | Grammar name |
|------|------------------|----------------|--------------|--------------|
| 0 | Unbounded | TM | α → β | General rewrite |
| 1 | Bounded | LBA | β A γ → β δ γ | Context-sensitive |
| 2 | Stack | PDA | A → β | Context-free |
| 3 | None | FSA | A → xB, A → x | Right linear |

Abbreviations:

– TM: Turing Machine
– LBA: Linear-Bounded Automaton
– PDA: Push-Down Automaton
– FSA: Finite-State Automaton

Type 3: Right-Linear Grammars and FSAs

A right-linear grammar is a 4-tuple (N, Σ, S, P) with P a finite set of rewrite rules of the form α → β, with α ∈ N and β ∈ { γδ | γ ∈ Σ∗, δ ∈ N ∪ {ε} }, i.e.:

− left-hand side of rule: a single non-terminal, and
− right-hand side of rule: a string containing at most one non-terminal, as the rightmost symbol

Right-linear grammars are formally equivalent to left-linear grammars.

A finite-state automaton consists of

– a tape
– a finite-state control
– no auxiliary memory

A regular language example: (ab|c)ab∗(a|cb)?

Right-linear grammar:

N = { Expr, X, Y, Z }

Σ = { a, b, c }

S = Expr

P = { Expr → ab X, Expr → c X, X → a Y, Y → b Y, Y → Z, Z → a, Z → cb, Z → ε }

Finite-state transition network:

[Figure: transition network with states 0 to 5 and arcs labeled a, b, c]
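
As a sanity check on the regular language example (ab|c)ab∗(a|cb)?, the right-linear grammar can be run directly as a recognizer and compared against the regular expression. The following is a minimal sketch, not part of the original slides: the names `RULES`, `generates`, `PATTERN` and `agree_up_to` are hypothetical, and each rule is encoded as a terminal prefix plus an optional rightmost non-terminal.

```python
import re
from itertools import product

# Right-linear rules of the example grammar, encoded (as an assumption of this
# sketch) as pairs: (terminal prefix, rightmost non-terminal or None).
RULES = {
    "Expr": [("ab", "X"), ("c", "X")],
    "X": [("a", "Y")],
    "Y": [("b", "Y"), ("", "Z")],
    "Z": [("a", None), ("cb", None), ("", None)],
}

# The regular expression given for the same language.
PATTERN = re.compile(r"(ab|c)ab*(a|cb)?")


def generates(symbol: str, s: str) -> bool:
    """Return True if the non-terminal `symbol` derives exactly the string `s`."""
    for prefix, next_symbol in RULES[symbol]:
        if not s.startswith(prefix):
            continue
        rest = s[len(prefix):]
        if next_symbol is None:
            # Rule ends in terminals only: the whole remainder must be consumed.
            if rest == "":
                return True
        elif generates(next_symbol, rest):
            return True
    return False


def agree_up_to(max_len: int) -> bool:
    """Check grammar and regular expression on all strings up to max_len."""
    for n in range(max_len + 1):
        for chars in product("abc", repeat=n):
            word = "".join(chars)
            if generates("Expr", word) != bool(PATTERN.fullmatch(word)):
                return False
    return True
```

Because every rule has at most one non-terminal, and only at the right edge, the recognizer never needs more memory than the current non-terminal and the remaining input, which is the sense in which no auxiliary memory is required.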

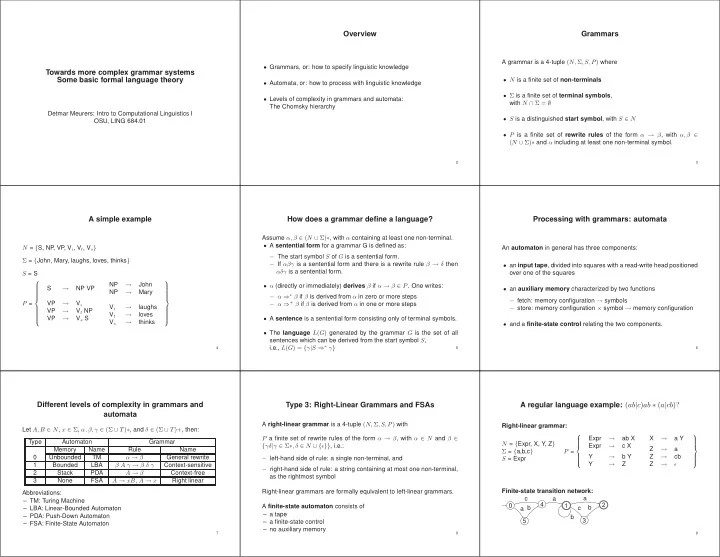

Thinking about regular languages

− A language is regular iff one can define a FSM (or regular expression) for it.
− An FSM only has a fixed amount of memory, namely the number of states.
− Strings longer than the number of states, and so in particular arbitrarily long ones, must result from a loop in the FSM.
− Pumping Lemma: if a language contains arbitrarily long strings for which there is no such loop, the language cannot be regular.

Type 2: Context-Free Grammars and Push-Down Automata

A context-free grammar is a 4-tuple (N, Σ, S, P) with P a finite set of rewrite rules of the form α → β, with α ∈ N and β ∈ (Σ ∪ N)∗, i.e.:

− left-hand side of rule: a single non-terminal, and
− right-hand side of rule: a string of terminals and/or non-terminals

A push-down automaton is a

− finite state automaton, with a
− stack as auxiliary memory

A context-free language example: a^n b^n

Context-free grammar:

N = { S }

Σ = { a, b }

S = S

P = { S → a S b, S → ε }

Push-down automaton:

[Figure: states 0 and 1; state 0 loops on a (push x), an ε-arc leads from 0 to 1, state 1 loops on b (pop x)]

A context-sensitive language example: a^n b^n c^n

Context-sensitive grammar:

N = { S, B, C }

Σ = { a, b, c }

S = S

P = { S → a S B C, S → a b C, b B → b b, b C → b c, c C → c c, C B → B C }

Type 1: Context-Sensitive Grammars and Linear-Bounded Automata

A rule of a context-sensitive grammar

– rewrites at most one non-terminal from the left-hand side.
– has a right-hand side at least as long as its left-hand side, i.e. the grammar only contains rules of the form α → β with |α| ≤ |β|, and optionally S → ε with the start symbol S not occurring in any β.

A linear-bounded automaton is a

– finite state automaton, with an
– auxiliary memory which cannot exceed the length of the input string.

Type 0: General Rewrite Grammar and Turing Machines

• In a general rewrite grammar there are no restrictions on the form of a rewrite rule.
• A Turing machine has an unbounded auxiliary memory.
• Any language for which there is a recognition procedure can be defined, but the recognition problem is not decidable.

Properties of different language classes

Languages are sets of strings, so that one can apply set operations to languages and investigate the results for particular language classes.

Some closure properties:

− All language classes are closed under union with themselves.
− All language classes are closed under intersection with regular languages.
− The class of context-free languages is not closed under intersection with itself.

Proof: The intersection of the two context-free languages L1 and L2 is not context free:

− L1 = { a^n b^n c^i | n ≥ 1 and i ≥ 0 }
− L2 = { a^j b^n c^n | n ≥ 1 and j ≥ 0 }
− L1 ∩ L2 = { a^n b^n c^n | n ≥ 1 }

Criteria under which to evaluate grammar formalisms

There are three kinds of criteria:

– linguistic naturalness
– mathematical power
– computational effectiveness and efficiency

The weaker the type of grammar:

– the stronger the claim made about possible languages
– the greater the potential efficiency of the parsing procedure

Reasons for choosing a stronger grammar class:

– to capture the empirical reality of actual languages
– to provide for elegant analyses capturing more generalizations (→ more “compact” grammars)

Language classes and natural languages

Natural languages are not regular

(1) a. The mouse escaped.
    b. The mouse that the cat chased escaped.
    c. The mouse that the cat that the dog saw chased escaped.
    d. ...

(2) a. aa
    b. abba
    c. abccba
    d. ...

Center-embedding of arbitrary depth needs to be captured to model language competence → not possible with a finite-state automaton.
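
Returning to the a^n b^n example: the push-down automaton (push x for each a, then pop x for each b) can be sketched in a few lines. This is an illustrative sketch under assumptions, not the slides' own implementation: the function name `accepts_anbn` is hypothetical, and acceptance is taken to mean that the input is consumed with an empty stack, so that the empty string (licensed by S → ε) is accepted.

```python
def accepts_anbn(s: str) -> bool:
    """Simulate a two-state push-down automaton for a^n b^n (n >= 0).

    State 0 reads a's and pushes one x per a; state 1 reads b's and pops
    one x per b. Acceptance condition (an assumption of this sketch):
    all input consumed and the stack empty.
    """
    stack = []
    state = 0
    for ch in s:
        if state == 0 and ch == "a":
            stack.append("x")      # a + push x
        elif ch == "b":
            state = 1              # epsilon move 0 -> 1 on the first b
            if not stack:
                return False       # more b's than a's
            stack.pop()            # b + pop x
        else:
            return False           # an a after a b, or a foreign symbol
    return not stack               # leftover x's mean more a's than b's
```

The stack is what a finite-state automaton lacks: the number of pending x's is unbounded, so it cannot be encoded in a fixed set of states. The same counting of open dependencies is what center-embedding of arbitrary depth requires.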

Language classes and natural languages (cont.)

• Any finite language is a regular language.
• The argument that natural languages are not regular relies on competence as an idealization, not performance.
• Note that even if English were regular, a context-free grammar characterization could be preferable on the grounds that it is more transparent than one using only finite-state methods.

Accounting for the facts vs. linguistically sensible analyses

Looking at grammars from a linguistic perspective, one can distinguish their

− weak generative capacity, considering only the set of strings generated by a grammar
− strong generative capacity, considering the set of strings and their syntactic analyses generated by a grammar

Two grammars can be strongly or weakly equivalent.

Example for weakly equivalent grammars

Example string: if x then if y then a else b

Grammar 1: S → if T then S else S, S → if T then S, S → a, S → b, T → x, T → y

First analysis: [S if [T x] then [S if [T y] then [S a]] else [S b]]

Second analysis: [S if [T x] then [S if [T y] then [S a] else [S b]]]

A weakly equivalent grammar eliminating the ambiguity (only licenses second structure).

Grammar 2 rules: S1 → if T then S1, S1 → if T then S2 else S1, S1 → a, S1 → b, S2 → if T then S2 else S2, S2 → a, S2 → b, T → x, T → y

Reading assignment

• Chapter 2 “Basic Formal Language Theory” of our Lecture Notes
• Chapter 3 “Formal Languages and Natural Languages” of our Lecture Notes
• Chapter 13 “Language and complexity” of Jurafsky and Martin (2000)
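
For the weakly equivalent grammars example, the difference between the two grammars can be made concrete by counting the analyses each assigns to the example string. The sketch below is illustrative only: `GRAMMAR_1`, `GRAMMAR_2` and `count_parses` are hypothetical names, and the counter assumes a grammar without left recursion or ε-rules, which holds for both grammars here.

```python
from functools import lru_cache

# Both grammars as dicts: non-terminal -> list of right-hand sides.
GRAMMAR_1 = {
    "S": [["if", "T", "then", "S", "else", "S"],
          ["if", "T", "then", "S"],
          ["a"], ["b"]],
    "T": [["x"], ["y"]],
}
GRAMMAR_2 = {
    "S1": [["if", "T", "then", "S1"],
           ["if", "T", "then", "S2", "else", "S1"],
           ["a"], ["b"]],
    "S2": [["if", "T", "then", "S2", "else", "S2"],
           ["a"], ["b"]],
    "T": [["x"], ["y"]],
}


def count_parses(grammar, start, tokens):
    """Count the distinct parse trees of `tokens` rooted in `start`.

    Assumes no left recursion and no epsilon rules (true for both grammars).
    """
    tokens = tuple(tokens)

    @lru_cache(maxsize=None)
    def count_symbol(symbol, i, j):
        # Number of ways `symbol` covers tokens[i:j].
        if symbol not in grammar:  # terminal symbol
            return int(j == i + 1 and tokens[i] == symbol)
        return sum(count_seq(tuple(rhs), i, j) for rhs in grammar[symbol])

    @lru_cache(maxsize=None)
    def count_seq(seq, i, j):
        # Number of ways the symbol sequence `seq` covers tokens[i:j].
        if not seq:
            return int(i == j)
        total = 0
        for k in range(i, j + 1):
            left = count_symbol(seq[0], i, k)
            if left:
                total += left * count_seq(seq[1:], k, j)
        return total

    return count_symbol(start, 0, len(tokens))
```

Calling `count_parses(GRAMMAR_1, "S", "if x then if y then a else b".split())` counts the analyses under Grammar 1, and the same call with `GRAMMAR_2` and start symbol `"S1"` counts those under Grammar 2. Both grammars accept the string, but they differ in how many structures they assign to it, which is the distinction between weak and strong generative capacity.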
