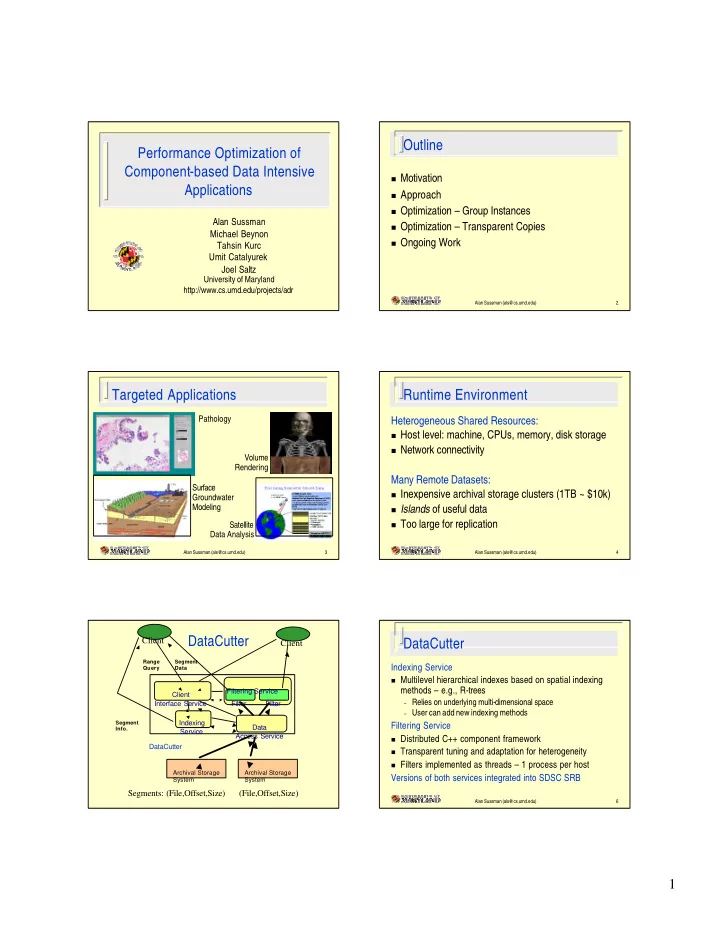

Performance Optimization of Component-based Data Intensive Applications

Alan Sussman (als@cs.umd.edu), Michael Beynon, Tahsin Kurc, Umit Catalyurek, Joel Saltz
University of Maryland
http://www.cs.umd.edu/projects/adr

Outline

- Motivation
- Approach
- Optimization - Group Instances
- Optimization - Transparent Copies
- Ongoing Work

Targeted Applications

- Pathology
- Volume Rendering
- Surface Groundwater Modeling
- Satellite Data Analysis

Runtime Environment

Heterogeneous Shared Resources:
- Host level: machine, CPUs, memory, disk storage
- Network connectivity

Many Remote Datasets:
- Inexpensive archival storage clusters (1TB ~ $10k)
- Islands of useful data
- Too large for replication

DataCutter

[Diagram: DataCutter architecture. Labels: Client, Range Query, Segment Data, Segment Info., Client Interface Service, Indexing Service, Filtering Service (Filters), Data Access Service, Archival Storage Systems. Segments: (File, Offset, Size).]

DataCutter

Indexing Service
- Multilevel hierarchical indexes based on spatial indexing methods, e.g., R-trees
  - Relies on underlying multi-dimensional space
  - User can add new indexing methods

Filtering Service
- Distributed C++ component framework
- Transparent tuning and adaptation for heterogeneity
- Filters implemented as threads, 1 process per host

Versions of both services integrated into SDSC SRB
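As a rough illustration of what the indexing service returns, the sketch below answers a range query with the (File, Offset, Size) triples of the segments that intersect it. It is a minimal sketch under stated assumptions: a linear scan over per-segment bounding boxes stands in for the multilevel R-tree-style index, and the `Segment`, `overlaps`, and `range_query` names, the file name, and the coordinates are hypothetical and not part of DataCutter. Python is used for brevity; DataCutter itself is a C++ framework.

```python
from dataclasses import dataclass
from typing import List, Tuple

# One (low, high) pair per dimension of the underlying space.
Box = Tuple[Tuple[float, float], ...]


@dataclass(frozen=True)
class Segment:
    """A unit of retrieval: a byte range in a file plus its bounding box."""
    file: str
    offset: int
    size: int
    bbox: Box


def overlaps(a: Box, b: Box) -> bool:
    """True when two axis-aligned boxes intersect in every dimension."""
    return all(lo1 <= hi2 and lo2 <= hi1
               for (lo1, hi1), (lo2, hi2) in zip(a, b))


def range_query(index: List[Segment], query: Box) -> List[Tuple[str, int, int]]:
    """Linear scan standing in for the hierarchical index lookup."""
    return [(s.file, s.offset, s.size) for s in index if overlaps(s.bbox, query)]


# Three hypothetical 4 KB segments laid out side by side along x.
index = [
    Segment("slide.dat", 0, 4096, ((0, 10), (0, 10))),
    Segment("slide.dat", 4096, 4096, ((10, 20), (0, 10))),
    Segment("slide.dat", 8192, 4096, ((20, 30), (0, 10))),
]
hits = range_query(index, ((5, 12), (2, 4)))
print(hits)  # the first two segments intersect the query box
```

A hierarchical index would prune whole groups of segments using summary bounding boxes before testing individual segments; the overlap test itself stays the same.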

Indexing - Subsetting

Datasets are partitioned into segments
- used to index the dataset, unit of retrieval
- Spatial indexes built from bounding boxes of all elements in a segment

Indexing very large datasets
- Multi-level hierarchical indexing scheme
- Summary index files: for a collection of segments or detailed index files
- Detailed index files: to index the individual segments

Filter-Stream Programming (FSP)

Purpose: Specialized components for processing data
- based on Active Disks research [Acharya, Uysal, Saltz: ASPLOS'98], dataflow, functional parallelism, message passing
- filters: logical unit of computation
  - high level tasks
  - init, process, finalize interface
- streams: how filters communicate
  - unidirectional buffer pipes
  - uses fixed size buffers (min, good)
- manually specify filter connectivity and filter-level characteristics

[Diagram: example filter graph. Labels: Raw Dataset, Reference DB, Extract raw, Extract ref, 3D reconstruction, View result.]

Placement

- The dynamic assignment of filters to particular hosts for execution is placement (or mapping)
- Optimization criteria:
  - Communication
    - leverage filter affinity to dataset
    - minimize communication volume on slower connections
    - co-locate filters with large communication volume
  - Computation
    - expensive computation on faster, less loaded hosts

FSP: Abstractions

Filter Group
- logical collection of filters to use together
- application starts filter group instances

Unit-of-work cycle
- "work" is application defined (ex: a query)
- work is appended to running instances
- init(), process(), finalize() called for each work
- process() returns { EndOfWork | EndOfFilter }
- allows for adaptivity

[Diagram: filters S, A, and B connected by streams of buffers, processing uow 0, uow 1, uow 2 in sequence.]

Optimization - Group Instances

[Diagram: work distributed over filter group instances P0-F0-C0 and P1-F1-C1, placed across host1, host2, and host3 (2 cpu each).]

Match # instances to environment (CPU capacity, network)

Experiment - Application Emulator

Parameterized dataflow filter
- consume from all inputs
- compute
- produce to all outputs

Application emulated:
- process 64 units of work
- single batch

[Diagram: a filter with "in", "process", and "out" stages; emulated pipeline P, F, C.]
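The unit-of-work cycle from the FSP: Abstractions slide can be sketched as follows. This is a minimal, single-threaded sketch under stated assumptions, written in Python for brevity rather than against the real C++ DataCutter API: the `Stream`, `SumFilter`, `Status`, and `run_instance` names are hypothetical, and a `None` buffer is an assumed convention for marking the end of a unit of work. Only the init/process/finalize sequence and the EndOfWork/EndOfFilter return values come from the slides.

```python
from collections import deque
from enum import Enum


class Status(Enum):
    END_OF_WORK = "EndOfWork"
    END_OF_FILTER = "EndOfFilter"


class Stream:
    """Unidirectional buffer pipe; a None buffer marks the end of a unit of work."""

    def __init__(self):
        self._buffers = deque()

    def write(self, buf):
        self._buffers.append(buf)

    def read(self):
        return self._buffers.popleft()


class SumFilter:
    """Hypothetical filter: adds up the integers in each unit of work."""

    def init(self, work):
        self.total = 0

    def process(self, inp, out):
        # Consume buffers until the end-of-work marker, then emit one result.
        while (buf := inp.read()) is not None:
            self.total += sum(buf)
        out.write(self.total)
        return Status.END_OF_WORK

    def finalize(self):
        self.total = None


def run_instance(flt, works, inp, out):
    """Call init(), process(), finalize() once per unit of work."""
    for work in works:
        flt.init(work)
        status = flt.process(inp, out)
        flt.finalize()
        if status is Status.END_OF_FILTER:
            break


inp, out = Stream(), Stream()
for buf in ([1, 2], [3], None, [10], None):  # two units of work
    inp.write(buf)
run_instance(SumFilter(), ["uow 0", "uow 1"], inp, out)
results = [out.read(), out.read()]
print(results)  # [6, 10]
```

Because per-work state is created in init() and released in finalize(), new work can be appended to an instance that is already running, which is what makes the cycle adaptive.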

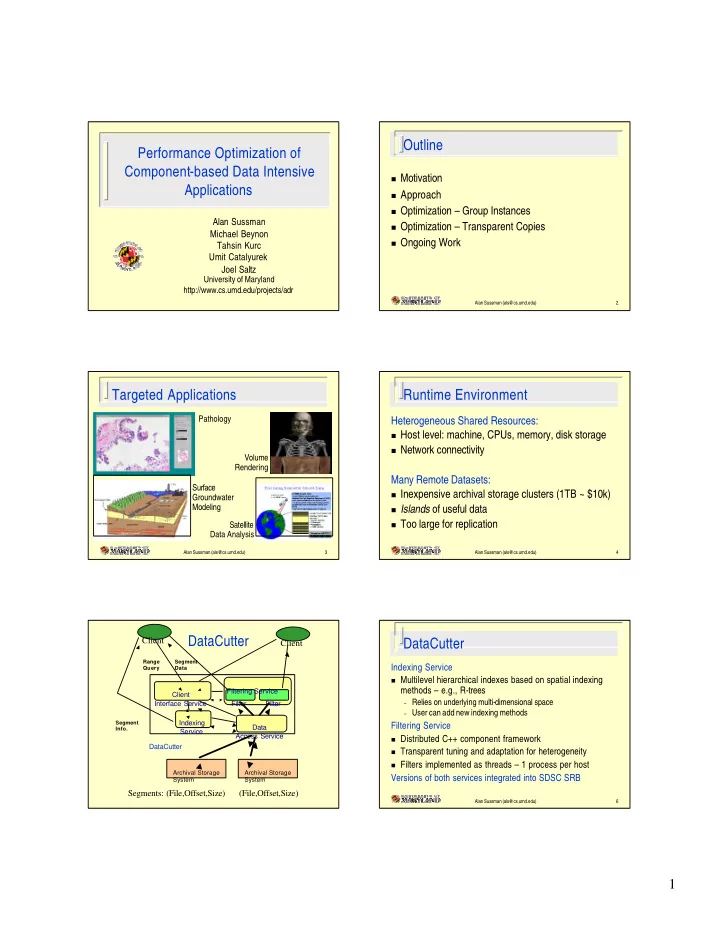

Instances: Vary Number, Application

[Plots: two panels, "I/O Intensive" and "CPU Intensive". x-axis: Number of Filter Group Instances (1, 2, 4, 8, 16, 32, 64); y-axis: Response Time (sec); series: min, mean, max.]

Setup: UMD Red Linux cluster (2 processor PII 450 nodes)
Point: # instances depends on application and environment

Group Instances (Batches)

[Diagram: Batch 0, Batch 1, and Batch 2 issued to filter group instances P0-F0-C0 and P1-F1-C1, placed across host1, host2, and host3 (2 cpu each).]

Work issued in instance batches until all complete.
Matching # instances to environment (CPU capacity)

Instances: Vary Number, Batch Size

[Plots: two "CPU Intensive" panels; y-axis: Response Time (sec); series: min, mean, max, all. Left x-axis: Number of Filter Group Instances (1, 2, 4, 8, 16). Right x-axis: Batch Size (Optimal Num Instances): 1 (8), 2 (32), 4 (8), 8 (4), 16 (4), 32 (2), 64 (1).]

Setup: (Optimal) is lowest "all" time
Point: # instances depends on application and environment

Adding Heterogeneity

[Diagram: work distributed over instances P0-F0-C0 through P3-F3-C3 on host1 and host2 (2 cpu each), and P4-F4-C4 through P7-F7-C7 on hostSMP (8 cpu).]

Optimization - Transparent Copies

FSP Abstraction
- replicate individual filters
- transparent
  - work-balance among copies
  - better tune resources to actual filter needs
- Provide "single-stream" illusion
  - Multiple producers and consumers, deadlock, flow control
  - Invariant: UOW_i < UOW_(i+1)
- Problem: filter state

[Diagram: pipeline R, E, Ra, M with the Ra filter replicated as transparent copies Ra0 ... Rak.]

Runtime Workload Balancing

Use local information:
- queue size, send time / receiver acks

- Adjust number of transparent copies
- Demand based dataflow (choice of consumer)
  - Within a host: perfect shared queue among copies
  - Across hosts
    - Round Robin
    - Weighted Round Robin
    - Demand-Driven sliding window (on buffer consumption rate)
    - User-defined
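The across-host policies for choosing which transparent copy receives the next buffer can be sketched as below. This is an illustrative sketch under stated assumptions, not DataCutter's implementation: the function and class names and the `window` parameter are hypothetical, and the demand-driven policy is reduced to "send to the copy with the fewest unacknowledged buffers, up to a fixed window", which is only one possible reading of the slide's "sliding window (on buffer consumption rate)".

```python
from itertools import cycle


def round_robin(copies):
    """Yield copies in a fixed rotation."""
    return cycle(copies)


def weighted_round_robin(weights):
    """Yield each copy `weight` times per rotation; weights maps copy -> int."""
    return cycle([c for c, w in weights.items() for _ in range(w)])


class DemandDriven:
    """Prefer the copy with the fewest unacknowledged buffers."""

    def __init__(self, copies, window=2):
        self.in_flight = {c: 0 for c in copies}
        self.window = window  # assumed cap on buffers in flight per copy

    def choose(self):
        copy = min(self.in_flight, key=self.in_flight.get)
        if self.in_flight[copy] >= self.window:
            return None  # every copy is saturated; the producer must wait
        self.in_flight[copy] += 1
        return copy

    def ack(self, copy):
        """Receiver acknowledgment: the copy consumed one buffer."""
        self.in_flight[copy] -= 1


rr = round_robin(["Ra0", "Ra1"])
rr_order = [next(rr) for _ in range(4)]

wrr = weighted_round_robin({"Ra0": 2, "Ra1": 1})
wrr_order = [next(wrr) for _ in range(6)]

dd = DemandDriven(["Ra0", "Ra1"], window=2)
sent = [dd.choose() for _ in range(3)]
print(rr_order, wrr_order, sent)
```

Round robin ignores how fast each copy drains its queue, weighted round robin encodes a fixed guess about relative speed, and the demand-driven variant reacts to acknowledgments, so a copy that consumes faster is chosen more often.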

Experiment - Virtual Microscope

- Client-server system for interactively visualizing digital slides
  - Image Dataset (100MB to 5GB per focal plane)
  - Rectangular region queries, multiple data chunk reply
- Hopkins Linux cluster: 4 1-processor, 1 2-processor PIII-800, 2 80GB IDE disks, 100Mbit Ethernet
- Decompress filter is most expensive, so good candidate for replication
- 50 queries at various magnifications, 512x512 pixel output

Filter pipeline: read data, decompress, clip, zoom, view

Virtual Microscope Results

Response Time (seconds)

| Placement (R-D-C-Z-V) | Average | 400x | 200x | 100x | 50x |
|---|---|---|---|---|---|
| h-h-h-h-h | 2.10 | 0.38 | 0.73 | 1.73 | 6.95 |
| h-g-g-g-g | 1.49 | 0.37 | 0.62 | 1.27 | 4.60 |
| h-g(2)-g-g-g | 1.15 | 0.39 | 0.50 | 0.95 | 3.41 |
| h-g(2)-g(2)-g-g | 1.15 | 0.37 | 0.49 | 0.95 | 3.43 |
| h-g(4)-g(2)-g-g | 1.17 | 0.39 | 0.50 | 0.96 | 3.50 |
| h-g(2)-g(2)-b-b | 1.68 | 0.45 | 0.68 | 1.27 | 5.34 |
| g-g-g-g-g | 1.44 | 0.33 | 0.58 | 1.26 | 4.46 |
| g-g(2)-g-g-g | 1.08 | 0.33 | 0.45 | 0.92 | 3.24 |

Experiment - Isosurface Rendering

- UT Austin ParSSim species transport simulation
  - Single time step visualization, read all data
- Setup: UMD Red Linux cluster (2 processor PII 450 nodes)

Data volumes along the pipeline, in order: 1.5 GB, 38.6 MB, 11.8 MB, 28.5 MB

| Filter | Task | Time | Share |
|---|---|---|---|
| R | read dataset | 0.64s | 4.3% |
| E | isosurface extraction | 1.64s | 11.2% |
| Ra | shade + rasterize | 11.67s | 79.5% |
| M | merge / view | 0.73s | 5.0% |

Total: 14.68s (sum), 12.65s (time)

Sample Isosurface Visualization

[Images: two isosurface renderings, V = 0.35 and V = 0.7.]

Transparent Copies: Replicate Raster

| | 1 node | 2 nodes | 4 nodes | 8 nodes |
|---|---|---|---|---|
| 1 copy of Raster | 12.18s | 7.32s | 4.17s | 3.00s |
| 2 copies of Raster | 8.16s (33%) | 5.70s (22%) | 3.88s (7%) | 3.24s (-8%) |

Setup: SPMD style, partitioned input dataset per node
Point: copies of bottleneck filter enough to balance flow

Experiment - Resource Heterogeneity

- Isosurface rendering on Red, Blue, Rogue Linux clusters at Maryland
  - Red: 16 2-processor PII-450, 256MB, 18GB SCSI disk
  - Blue: 8 2-processor PIII-550, 1GB, 2-8GB SCSI disk + 1 8-processor PIII-450, 4GB, 2-18GB SCSI disk
  - Rogue: 8 1-processor PIII-650, 128MB, 2-75GB IDE disks
  - Red, Blue connected via Gigabit Ethernet, Rogue via 100Mbit Ethernet
- Two implementations of Raster filter: z-buffer and active pixels (the one used in previous experiment)
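As a check on the isosurface figures, the percentages in the Replicate Raster results and the 14.68s total of the per-filter times can be recomputed from the reported timings. This is a worked-arithmetic sketch only; the variable names are illustrative, and the percentage is assumed to mean the reduction in response time relative to the one-copy run.

```python
# Response times (seconds) by node count, from the Replicate Raster results.
one_copy = {1: 12.18, 2: 7.32, 4: 4.17, 8: 3.00}
two_copies = {1: 8.16, 2: 5.70, 4: 3.88, 8: 3.24}

# Percent reduction in response time from adding a second Raster copy.
improvement = {
    nodes: round(100 * (one_copy[nodes] - two_copies[nodes]) / one_copy[nodes])
    for nodes in one_copy
}

# Per-filter times (seconds) from the Isosurface Rendering experiment.
filter_times = {"R": 0.64, "E": 1.64, "Ra": 11.67, "M": 0.73}
total = sum(filter_times.values())
bottleneck = max(filter_times, key=filter_times.get)

print(improvement)                  # {1: 33, 2: 22, 4: 7, 8: -8}
print(round(total, 2), bottleneck)  # 14.68 Ra
```

The negative value at 8 nodes matches the reported slowdown: once the input is partitioned across enough nodes, a second Raster copy per node no longer pays for itself.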
