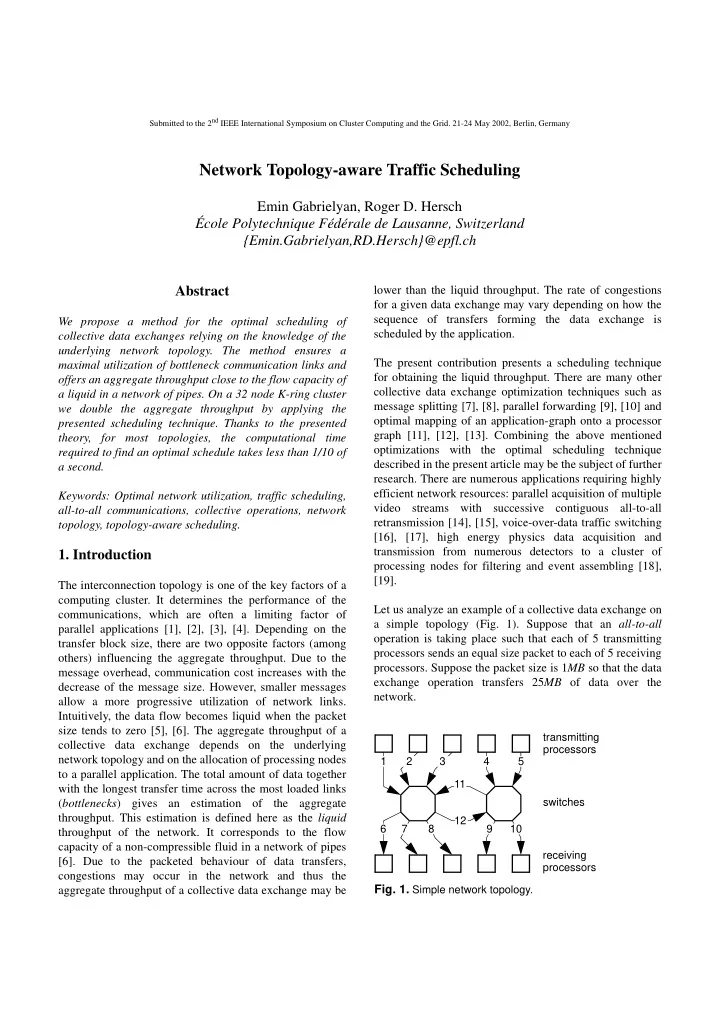

Submitted to the 2nd IEEE International Symposium on Cluster Computing and the Grid, 21-24 May 2002, Berlin, Germany

Network Topology-aware Traffic Scheduling

Emin Gabrielyan, Roger D. Hersch
École Polytechnique Fédérale de Lausanne, Switzerland
{Emin.Gabrielyan,RD.Hersch}@epfl.ch

Abstract

We propose a method for the optimal scheduling of collective data exchanges that relies on knowledge of the underlying network topology. The method ensures maximal utilization of the bottleneck communication links and offers an aggregate throughput close to the flow capacity of a liquid in a network of pipes. On a 32-node K-ring cluster, we double the aggregate throughput by applying the presented scheduling technique. Thanks to the presented theory, for most topologies the computation time required to find an optimal schedule is less than 1/10 of a second.

Keywords: Optimal network utilization, traffic scheduling, all-to-all communications, collective operations, network topology, topology-aware scheduling.

1. Introduction

The interconnection topology is one of the key factors of a computing cluster. It determines the performance of communications, which are often a limiting factor for parallel applications [1], [2], [3], [4]. Depending on the transfer block size, two opposing factors (among others) influence the aggregate throughput. Because of per-message overhead, communication cost increases as the message size decreases. However, smaller messages allow a more progressive utilization of network links. Intuitively, the data flow becomes liquid when the packet size tends to zero [5], [6]. The aggregate throughput of a collective data exchange depends on the underlying network topology and on the allocation of processing nodes to a parallel application. The total amount of data, together with the longest transfer time across the most loaded links (bottlenecks), gives an estimate of the aggregate throughput. This estimate is defined here as the liquid throughput of the network. It corresponds to the flow capacity of a non-compressible fluid in a network of pipes [6]. Because data are transferred in packets, congestion may occur in the network, and the aggregate throughput of a collective data exchange may therefore be lower than the liquid throughput. The rate of congestion for a given data exchange may vary depending on how the application schedules the sequence of transfers that forms the exchange.

This contribution presents a scheduling technique for obtaining the liquid throughput. There are many other optimization techniques for collective data exchanges, such as message splitting [7], [8], parallel forwarding [9], [10] and optimal mapping of an application graph onto a processor graph [11], [12], [13]. Combining these optimizations with the optimal scheduling technique described in the present article may be the subject of further research.

Numerous applications require highly efficient network resources: parallel acquisition of multiple video streams with successive contiguous all-to-all retransmission [14], [15], voice-over-data traffic switching [16], [17], and high energy physics data acquisition and transmission from numerous detectors to a cluster of processing nodes for filtering and event assembling [18], [19].

Let us analyze an example of a collective data exchange on a simple topology (Fig. 1). Suppose that an all-to-all operation takes place in which each of 5 transmitting processors sends an equal-size packet to each of 5 receiving processors. If the packet size is 1 MB, the data exchange operation transfers 25 MB of data over the network.

Fig. 1. Simple network topology (figure: transmitting processors, switches and receiving processors; links numbered 1 to 12).
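The liquid-throughput estimate defined above (total data divided by the transfer time of the most loaded link) can be sketched in a few lines of Python. This is a minimal model under a loudly stated assumption: Fig. 1 is reconstructed hypothetically as two switches A and B, with senders 1-2 and receivers 6-7 on A, senders 3-5 and receivers 8-10 on B, processor i attached through link i, and links 11 and 12 carrying A-to-B and B-to-A traffic respectively. This layout is not taken from the paper; it was chosen because it reproduces the link loads and the 100 MB/s link throughput used in the analysis that follows. The names `route`, `link_loads`, `liquid_throughput` and `round_robin_timeframes` are illustrative only.

```python
from collections import Counter

# HYPOTHETICAL reconstruction of the Fig. 1 topology (an assumption, chosen
# because it reproduces the link loads quoted in the text). Processor i is
# attached to its switch through link i; link 11 carries A->B traffic and
# link 12 carries B->A traffic.
SENDERS = [1, 2, 3, 4, 5]
RECEIVERS = [6, 7, 8, 9, 10]
SWITCH = {1: "A", 2: "A", 3: "B", 4: "B", 5: "B",
          6: "A", 7: "A", 8: "B", 9: "B", 10: "B"}
INTER_SWITCH = {("A", "B"): 11, ("B", "A"): 12}


def route(src, dst):
    """Links used by one transfer from sender src to receiver dst."""
    links = [src, dst]
    hop = (SWITCH[src], SWITCH[dst])
    if hop in INTER_SWITCH:  # the transfer has to cross between switches
        links.append(INTER_SWITCH[hop])
    return links


def link_loads(transfers, size_mb):
    """Amount of data (MB) carried by each link for a list of transfers."""
    loads = Counter()
    for src, dst in transfers:
        for link in route(src, dst):
            loads[link] += size_mb
    return loads


def liquid_throughput(transfers, size_mb, link_mb_s):
    """Total data divided by the transfer time of the most loaded link."""
    loads = link_loads(transfers, size_mb)
    total_mb = size_mb * len(transfers)
    longest_s = max(loads.values()) / link_mb_s
    return total_mb / longest_s


def round_robin_timeframes():
    """Timeframes used by a round-robin all-to-all schedule.

    At step k, the i-th sender sends to the (i + k)-th receiver (mod n).
    Transfers of one step that share a link are split into substeps; the
    number of substeps is taken as the highest per-link transfer count.
    """
    n = len(SENDERS)
    total = 0
    for k in range(n):
        step = [(SENDERS[i], RECEIVERS[(i + k) % n]) for i in range(n)]
        total += max(link_loads(step, 1).values())
    return total


all_to_all = [(s, r) for s in SENDERS for r in RECEIVERS]
print(round(liquid_throughput(all_to_all, 1.0, 100.0), 2))  # 416.67
print(round_robin_timeframes())  # 7
```

With 1 MB packets and 100 MB/s links, this assumed layout yields 5 MB on links 1 to 10, 6 MB on links 11 and 12, a liquid throughput of 416.67 MB/s, and the 7 timeframes of the round-robin schedule discussed below. Counting a step's substeps as its highest per-link transfer count is a simplification; it suffices here because, within one round-robin step, only transfers crossing the same inter-switch link conflict with each other.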

During the collective data exchange, links 1 to 10 each transfer 5 MB of data (Fig. 1). Links 11 and 12 are the bottlenecks and transfer 6 MB each. Suppose that the throughput of a link is 100 MB/s. Since links 11 and 12 are the bottlenecks, the longest transfer of the collective data exchange lasts

$$\frac{6\ \text{MB}}{100\ \text{MB/s}} = 0.06\ \text{s}.$$

Therefore the liquid throughput of the global operation is

$$\frac{25\ \text{MB}}{0.06\ \text{s}} = 416.67\ \text{MB/s}.$$

Let us now propose a schedule for successive data transfers and analyze its throughput.

Intuitively, a good schedule for an all-to-all exchange is a round-robin schedule in which, at each step, each sender's receiver is shifted by one position. Let us examine the round-robin schedule of an all-to-all data exchange on the network topology of Fig. 1. Fig. 2 shows that logical steps 1, 2 and 5 can each be processed in the timeframe of a single transfer. Logical steps 3 and 4, however, cannot be processed in a single timeframe, since two transfers try to use the same links 11 and 12 simultaneously, causing congestion. The conflicting transfers must therefore be split into two substeps of one timeframe each. Thus the round-robin schedule takes 7 timeframes instead of the expected 5, and the throughput of the round-robin all-to-all exchange is

$$\frac{25\ \text{MB}}{7 \times \dfrac{1\ \text{MB}}{100\ \text{MB/s}}} = 357.14\ \text{MB/s}.$$

This is less than the liquid throughput (416.67 MB/s). Can we propose an improved schedule for the all-to-all exchange such that the liquid throughput is reached?

Fig. 2. Round-robin schedule of transfers (figure: logical steps 1 to 5 spread over timeframes 1 to 7).

By ensuring that the bottleneck links are used at every step, we create an improved schedule that achieves the network's liquid throughput (Fig. 3). With this improved schedule, only 6 timeframes are needed to carry out the collective operation, i.e. the throughput is

$$\frac{25\ \text{MB}}{6 \times \dfrac{1\ \text{MB}}{100\ \text{MB/s}}} = 416.67\ \text{MB/s}.$$

Fig. 3. An optimal schedule (figure: transfers arranged in timeframes 1 to 6).

Section 2 shows how to describe the liquid throughput as a function of the number of contributing processing nodes and of their underlying network topologies. Section 3 gives an introduction to the formal theory of traffic scheduling. Section 4 presents measurements for the considered sub-topologies and draws conclusions.

2. Throughput as a function of sub-topology

In order to evaluate the throughput of collective data exchanges, we need to specify, along an independent axis, the number of processing nodes as well as significant variations of their underlying network topologies. To simplify the model, we limit the configuration to an identical number of receiving and transmitting processors forming successions of node pairs. The applications perform all-to-all data exchanges over the allocated nodes (each transmitting processor sends one packet to each receiving processor).

Let us demonstrate how to create variations of processing node allocations by considering the specific network of the Swiss-T1 cluster (henceforth called T1, see Fig. 4). The network of the T1 forms a K-ring [20] and has a static
