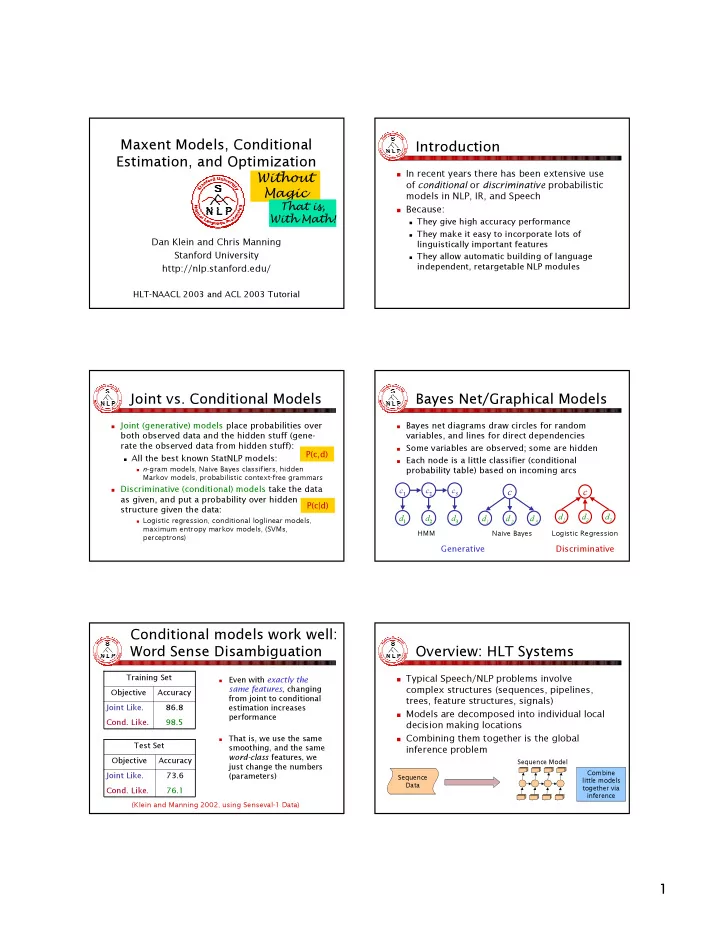

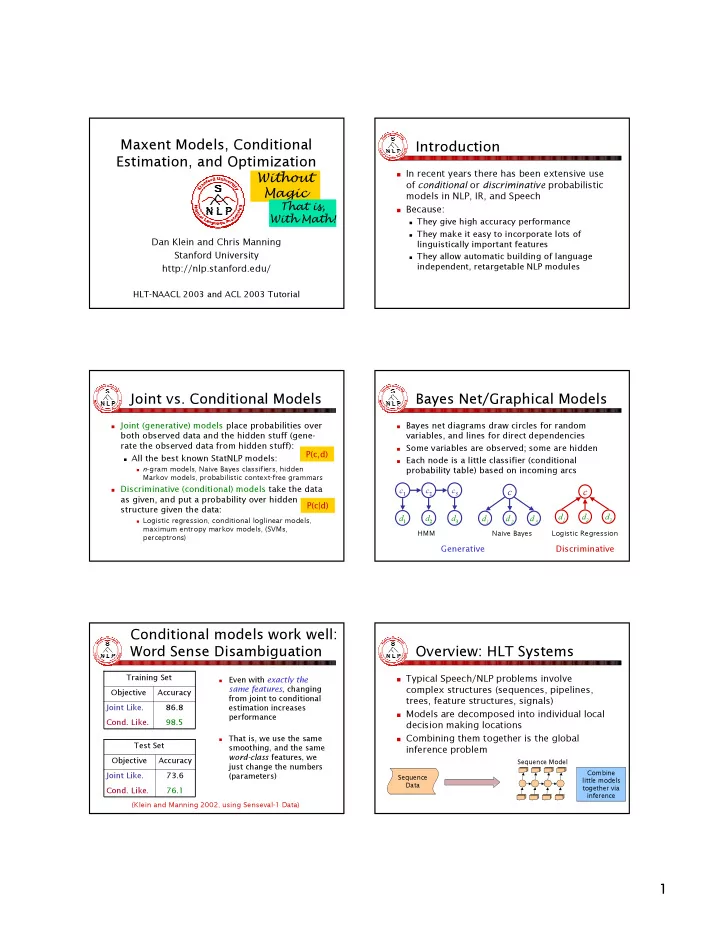

Maxent Models, Conditional Estimation, and Optimization Without Magic

That is, With Math!

Dan Klein and Chris Manning
Stanford University
http://nlp.stanford.edu/
HLT-NAACL 2003 and ACL 2003 Tutorial

Introduction

- In recent years there has been extensive use of conditional or discriminative probabilistic models in NLP, IR, and Speech
- Because:
  - They give high accuracy performance
  - They make it easy to incorporate lots of linguistically important features
  - They allow automatic building of language independent, retargetable NLP modules

Joint vs. Conditional Models

- Joint (generative) models place probabilities over both observed data and the hidden stuff (generate the observed data from hidden stuff): $P(c,d)$
  - All the best known StatNLP models: n-gram models, Naive Bayes classifiers, hidden Markov models, probabilistic context-free grammars
- Discriminative (conditional) models take the data as given, and put a probability over hidden structure given the data: $P(c \mid d)$
  - Logistic regression, conditional loglinear models, maximum entropy Markov models, (SVMs, perceptrons)

Bayes Net/Graphical Models

- Bayes net diagrams draw circles for random variables, and lines for direct dependencies
- Some variables are observed; some are hidden
- Each node is a little classifier (conditional probability table) based on incoming arcs

[Figure: three graphical models over class variables $c$ and data variables $d_1, d_2, d_3$: HMM and Naive Bayes (generative), Logistic Regression (discriminative)]

Conditional models work well: Word Sense Disambiguation

| Objective | Training Set Accuracy | Test Set Accuracy |
|---|---|---|
| Joint likelihood | 86.8 | 73.6 |
| Conditional likelihood | 98.5 | 76.1 |

- Even with exactly the same features, changing from joint to conditional estimation increases performance
- That is, we use the same smoothing, and the same word-class features, we just change the numbers (parameters)

(Klein and Manning 2002, using Senseval-1 Data)

Overview: HLT Systems

- Typical Speech/NLP problems involve complex structures (sequences, pipelines, trees, feature structures, signals)
- Models are decomposed into individual local decision making locations
- Combining them together is the global inference problem

[Figure: Sequence Data → combine little models together via inference → Sequence Model]
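As a worked statement of the joint/conditional distinction on the "Joint vs. Conditional Models" slide, the two training objectives can be written side by side. This is a sketch only: the parameter symbol $\theta$ is an assumption introduced here and does not appear in the slides.

$$
\text{joint: } \max_\theta \prod_{(c,d)\,\in\,\text{observed}} P_\theta(c,d)
\qquad
\text{conditional: } \max_\theta \prod_{(c,d)\,\in\,\text{observed}} P_\theta(c \mid d),
\qquad
P_\theta(c \mid d) = \frac{P_\theta(c,d)}{\sum_{c'} P_\theta(c',d)}
$$

Both objectives can be applied to the same model family and the same features; only the quantity being maximized, and therefore the fitted parameters, differs. That is the setup behind the word sense disambiguation comparison.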

Overview: The Local Level

[Figure: the sequence level (Sequence Data, Inference, Sequence Model) above the local level (Local Data, Feature Extraction, Features, Classifier Type, Optimization, Smoothing, Label), annotated with Maximum Entropy Models, Conjugate Gradient, Quadratic Penalties, and NLP Issues]

Tutorial Plan

1. Exponential/Maximum entropy models
2. Optimization methods
3. Linguistic issues in using these models

Part I: Maximum Entropy Models

a. Examples of Feature-Based Modeling
b. Exponential Models for Classification
c. Maximum Entropy Models
d. Smoothing

We will use the term “maxent” models, but will introduce them as loglinear or exponential models, deferring the interpretation as “maximum entropy models” until later.

Features

- In this tutorial and most maxent work: features are elementary pieces of evidence that link aspects of what we observe $d$ with a category $c$ that we want to predict.
- A feature has a real value: $f: C \times D \to \mathbb{R}$
- Usually features are indicator functions of properties of the input and a particular class (every one we present is). They pick out a subset.
  - $f_i(c,d) \equiv [\Phi(d) \wedge c = c_i]$ [Value is 0 or 1]
- We will freely say that $\Phi(d)$ is a feature of the data $d$, when, for each $c_i$, the conjunction $\Phi(d) \wedge c = c_i$ is a feature of the data-class pair $(c,d)$.

Features

- For example:
  - $f_1(c,d) \equiv [c = \text{“NN”} \wedge \text{islower}(w_0) \wedge \text{ends}(w_0, \text{“d”})]$
  - $f_2(c,d) \equiv [c = \text{“NN”} \wedge w_{-1} = \text{“to”} \wedge t_{-1} = \text{“TO”}]$
  - $f_3(c,d) \equiv [c = \text{“VB”} \wedge \text{islower}(w_0)]$
- Example data points (tags over words): IN NN “in bed”; TO NN “to aid”; TO VB “to aid”; IN JJ “in blue”
- Models will assign each feature a weight
- Empirical count (expectation) of a feature:

$$\text{empirical } E(f_i) = \sum_{(c,d)\,\in\,\text{observed}(C,D)} f_i(c,d)$$

- Model expectation of a feature:

$$E(f_i) = \sum_{(c,d)\,\in\,(C,D)} P(c,d)\, f_i(c,d)$$

Feature-Based Models

- The decision about a data point is based only on the features active at that point.

| | Text Categorization | Word-Sense Disambiguation | POS Tagging |
|---|---|---|---|
| Data | BUSINESS: Stocks hit a yearly low … | … to restructure bank:MONEY debt. | DT JJ NN … The previous fall … |
| Label | BUSINESS | MONEY | NN |
| Features | {…, stocks, hit, a, yearly, low, …} | {…, P=restructure, N=debt, L=12, …} | {W=fall, PT=JJ, PW=previous} |
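The indicator features $f_1$, $f_2$, $f_3$ and the two expectations defined on the "Features" slides can be sketched in a few lines of Python. This is a minimal illustration, not the tutorial's code: the dictionary layout for a datum (`w0`, `w-1`, `t-1`), the helper names, and the uniform stand-in model are all assumptions made here, and the model expectation is summed only over the four observed pairs rather than over all of $(C, D)$.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical representation: a datum d holds the current word, the previous
# word, and the previous tag. A data point is a (class, datum) pair.
Datum = Dict[str, str]
Pair = Tuple[str, Datum]
Feature = Callable[[str, Datum], int]


def f1(c: str, d: Datum) -> int:
    # [c = "NN" and islower(w0) and ends(w0, "d")]
    return int(c == "NN" and d["w0"].islower() and d["w0"].endswith("d"))


def f2(c: str, d: Datum) -> int:
    # [c = "NN" and w-1 = "to" and t-1 = "TO"]
    return int(c == "NN" and d["w-1"] == "to" and d["t-1"] == "TO")


def f3(c: str, d: Datum) -> int:
    # [c = "VB" and islower(w0)]
    return int(c == "VB" and d["w0"].islower())


def empirical_count(f: Feature, observed: List[Pair]) -> int:
    """Sum of f(c, d) over the observed (class, datum) pairs."""
    return sum(f(c, d) for c, d in observed)


def model_expectation(f: Feature,
                      prob: Callable[[str, Datum], float],
                      support: List[Pair]) -> float:
    """Sum of P(c, d) * f(c, d) over the given support of (c, d) pairs."""
    return sum(prob(c, d) * f(c, d) for c, d in support)


# The four example data points from the slide: "in bed", "to aid" (twice), "in blue".
observed: List[Pair] = [
    ("NN", {"w0": "bed", "w-1": "in", "t-1": "IN"}),
    ("NN", {"w0": "aid", "w-1": "to", "t-1": "TO"}),
    ("VB", {"w0": "aid", "w-1": "to", "t-1": "TO"}),
    ("JJ", {"w0": "blue", "w-1": "in", "t-1": "IN"}),
]


def uniform(c: str, d: Datum) -> float:
    # Stand-in model (an assumption): equal probability on each observed pair.
    return 1.0 / len(observed)


counts = {f.__name__: empirical_count(f, observed) for f in (f1, f2, f3)}
expected_f1 = model_expectation(f1, uniform, observed)
```

Each feature fires only when both the data property and the class match, which is why the two "to aid" points contribute to different features.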

Example: Text Categorization

(Zhang and Oles 2001)

- Features are a word in document and class (they do feature selection to use reliable indicators)
- Tests on classic Reuters data set (and others)
  - Naïve Bayes: 77.0% $F_1$
  - Linear regression: 86.0%
  - Logistic regression: 86.4%
  - Support vector machine: 86.5%
- Emphasizes the importance of regularization (smoothing) for successful use of discriminative methods (not used in most early NLP/IR work)

Example: NER

(Klein et al. 2003; also, Borthwick 1999, etc.)

- Sequence model across words
- Each word classified by local model
- Features include the word, previous and next words, previous classes, previous, next, and current POS tag, character n-gram features and shape of word
- Best model had > 800K features
- High performance (> 92% on English devtest set) comes from combining many informative features.
- With smoothing / regularization, more features never hurt!

Decision Point: State for Grace

| Local Context | Prev | Cur | Next |
|---|---|---|---|
| Class | Other | ??? | ??? |
| Word | at | Grace | Road |
| Tag | IN | NNP | NNP |
| Sig | x | Xx | Xx |

Example: NER Feature Weights

(Klein et al. 2003)

Decision Point: State for Grace (local context as above)

| Feature Type | Feature | PERS | LOC |
|---|---|---|---|
| Previous word | at | -0.73 | 0.94 |
| Current word | Grace | 0.03 | 0.00 |
| Beginning bigram | <G | 0.45 | -0.04 |
| Current POS tag | NNP | 0.47 | 0.45 |
| Prev and cur tags | IN NNP | -0.10 | 0.14 |
| Previous state | Other | -0.70 | -0.92 |
| Current signature | Xx | 0.80 | 0.46 |
| Prev state, cur sig | O-Xx | 0.68 | 0.37 |
| Prev-cur-next sig | x-Xx-Xx | -0.69 | 0.37 |
| P. state - p-cur sig | O-x-Xx | -0.20 | 0.82 |
| … | … | … | … |
| Total: | | -0.58 | 2.68 |

Example: Tagging

- Features can include:
  - Current, previous, next words in isolation or together.
  - Previous (or next) one, two, three tags.
  - Word-internal features: word types, suffixes, dashes, etc.

Decision Point

| Local Context | -3 | -2 | -1 | 0 | +1 |
|---|---|---|---|---|---|
| Tag | DT | NNP | VBD | ??? | ??? |
| Word | The | Dow | fell | 22.6 | % |

| Features | |
|---|---|
| $W_0$ | 22.6 |
| $W_{+1}$ | % |
| $W_{-1}$ | fell |
| $T_{-1}$ | VBD |
| $T_{-1}$-$T_{-2}$ | NNP-VBD |
| hasDigit? | true |
| … | … |

(Ratnaparkhi 1996; Toutanova et al. 2003, etc.)

Other Maxent Examples

- Sentence boundary detection (Mikheev 2000)
  - Is period end of sentence or abbreviation?
- PP attachment (Ratnaparkhi 1998)
  - Features of head noun, preposition, etc.
- Language models (Rosenfeld 1996)
  - $P(w_0 \mid w_{-n}, \ldots, w_{-1})$. Features are word n-gram features, and trigger features which model repetitions of the same word.
- Parsing (Ratnaparkhi 1997; Johnson et al. 1999, etc.)
  - Either: Local classifications decide parser actions or feature counts choose a parse.

Conditional vs. Joint Likelihood

- We have some data $\{(d, c)\}$ and we want to place probability distributions over it.
- A joint model gives probabilities $P(d,c)$ and tries to maximize this likelihood.
  - It turns out to be trivial to choose weights: just relative frequencies.
- A conditional model gives probabilities $P(c \mid d)$. It takes the data as given and models only the conditional probability of the class.
  - We seek to maximize conditional likelihood.
  - Harder to do (as we’ll see…)
  - More closely related to classification error.
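The remark on the "Conditional vs. Joint Likelihood" slide that joint weights are "just relative frequencies" can be illustrated with a small sketch. It assumes the simplest possible joint model, an unstructured table over atomic $(d, c)$ pairs, which is cruder than the factored models (Naive Bayes, HMMs) the tutorial discusses; the toy data and the function names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Pair = Tuple[str, str]  # (d, c): datum first, class second, as on the slide


def joint_relative_frequencies(data: List[Pair]) -> Dict[Pair, float]:
    """Estimate P(d, c) as count(d, c) / N, the relative-frequency estimate."""
    counts = Counter(data)
    total = len(data)
    return {pair: k / total for pair, k in counts.items()}


def conditional_from_joint(joint: Dict[Pair, float], d: str) -> Dict[str, float]:
    """Derive P(c | d) by renormalizing the joint table over classes for one d."""
    marginal = sum(p for (dd, _), p in joint.items() if dd == d)
    return {c: p / marginal for (dd, c), p in joint.items() if dd == d}


# Hypothetical toy data: the word "aid" tagged NN twice and VB once, "bed" tagged NN.
data: List[Pair] = [("aid", "NN"), ("aid", "NN"), ("aid", "VB"), ("bed", "NN")]

joint = joint_relative_frequencies(data)
cond = conditional_from_joint(joint, "aid")
```

Counting is all the joint estimate needs here. A conditional model with overlapping features generally has no such closed form, which is the sense in which the slide calls it harder to do.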
