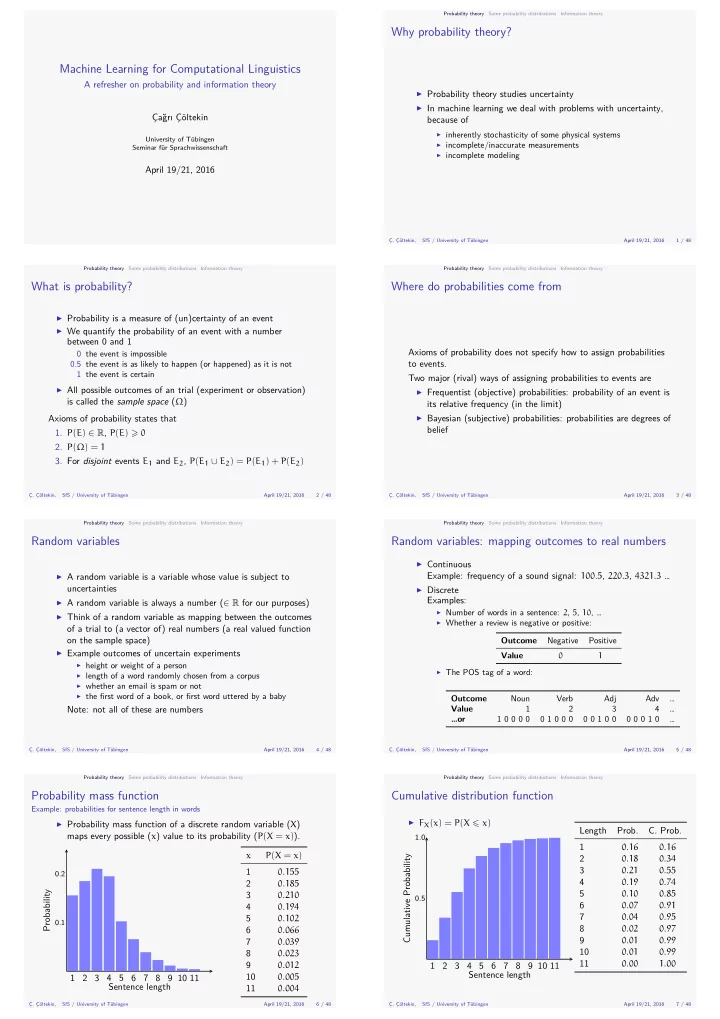

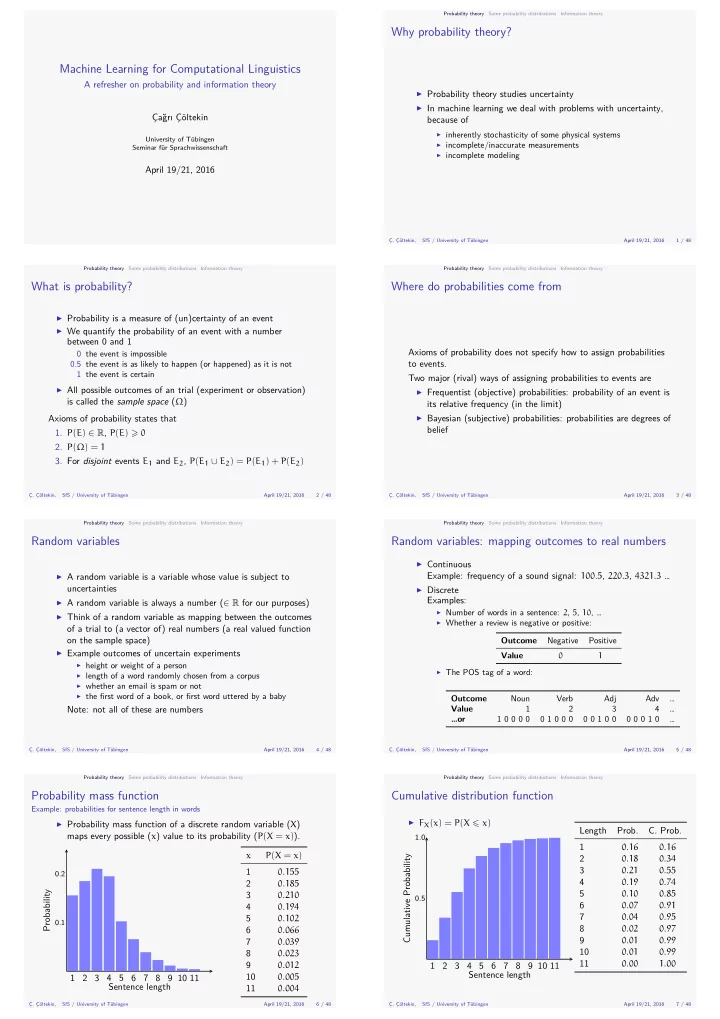

Machine Learning for Computational Linguistics Some probability distributions … Ç. Çöltekin, SfS / University of Tübingen April 19/21, 2016 5 / 48 Probability theory Information theory 0 0 1 0 0 Probability mass function Example: probabilities for sentence length in words Probability Sentence length 0.1 0.2 1 0 0 0 1 0 0 1 0 0 0 3 Adv Negative Positive Value Outcome Noun Verb Adj … 1 0 0 0 0 Value 1 2 3 A refresher on probability and information theory … …or 2 4 Examples: 6 C. Prob. 1 2 3 4 5 7 Length 8 9 10 11 Ç. Çöltekin, SfS / University of Tübingen April 19/21, 2016 Prob. 1.0 5 SfS / University of Tübingen 6 7 8 9 10 11 Ç. Çöltekin, April 19/21, 2016 0.5 6 / 48 Probability theory Some probability distributions Information theory Cumulative distribution function Cumulative Probability Sentence length Outcome 4 Random variables: mapping outcomes to real numbers April 19/21, 2016 0 the event is impossible 0.5 the event is as likely to happen (or happened) as it is not 1 the event is certain Axioms of probability states that Ç. Çöltekin, SfS / University of Tübingen 2 / 48 What is probability? Probability theory Some probability distributions Information theory Where do probabilities come from Axioms of probability does not specify how to assign probabilities to events. between 0 and 1 Information theory its relative frequency (in the limit) Information theory Çağrı Çöltekin University of Tübingen Seminar für Sprachwissenschaft April 19/21, 2016 Probability theory Some probability distributions Why probability theory? Some probability distributions because of Ç. Çöltekin, SfS / University of Tübingen April 19/21, 2016 1 / 48 Probability theory Two major (rival) ways of assigning probabilities to events are 7 / 48 belief Information theory Ç. Çöltekin, Note: not all of these are numbers on the sample space) of a trial to (a vector of) real numbers (a real valued function April 19/21, 2016 uncertainties 4 / 48 Probability theory Random variables Some probability distributions SfS / University of Tübingen Information theory Some probability distributions Probability theory SfS / University of Tübingen 3 / 48 April 19/21, 2016 Ç. Çöltekin, ▶ Probability theory studies uncertainty ▶ In machine learning we deal with problems with uncertainty, ▶ inherently stochasticity of some physical systems ▶ incomplete/inaccurate measurements ▶ incomplete modeling ▶ Probability is a measure of (un)certainty of an event ▶ We quantify the probability of an event with a number ▶ All possible outcomes of an trial (experiment or observation) ▶ Frequentist (objective) probabilities: probability of an event is is called the sample space ( Ω ) ▶ Bayesian (subjective) probabilities: probabilities are degrees of 1. P ( E ) ∈ R , P ( E ) ⩾ 0 2. P ( Ω ) = 1 3. For disjoint events E 1 and E 2 , P ( E 1 ∪ E 2 ) = P ( E 1 ) + P ( E 2 ) ▶ Continuous Example: frequency of a sound signal: 100 . 5 , 220 . 3 , 4321 . 3 … ▶ A random variable is a variable whose value is subject to ▶ Discrete ▶ A random variable is always a number ( ∈ R for our purposes) ▶ Number of words in a sentence: 2 , 5 , 10 , … ▶ Think of a random variable as mapping between the outcomes ▶ Whether a review is negative or positive: ▶ Example outcomes of uncertain experiments 0 1 ▶ height or weight of a person ▶ The POS tag of a word: ▶ length of a word randomly chosen from a corpus ▶ whether an email is spam or not ▶ the fjrst word of a book, or fjrst word uttered by a baby ▶ F X ( x ) = P ( X ⩽ x ) ▶ Probability mass function of a discrete random variable ( X ) maps every possible ( x ) value to its probability ( P ( X = x ) ). 0 . 16 0 . 16 x P ( X = x ) 0 . 18 0 . 34 0 . 21 0 . 55 0 . 155 0 . 19 0 . 74 0 . 185 0 . 10 0 . 85 0 . 210 0 . 07 0 . 91 0 . 194 0 . 04 0 . 95 0 . 102 0 . 02 0 . 97 0 . 066 0 . 01 0 . 99 0 . 039 0 . 01 0 . 99 0 . 023 0 . 00 1 . 00 0 . 012 1 2 3 4 5 6 7 8 9 10 11 0 . 005 1 2 3 4 5 6 7 8 9 10 11 0 . 004

Probability theory f Probability distribution of letters following probabilities Lett. a b c d e g Some probability distributions h Prob. Probability Letter Some probability distributions 0.2 e a Information theory Probability theory d Mode, median, mean, standard deviation functions Ç. Çöltekin, SfS / University of Tübingen April 19/21, 2016 11 / 48 Probability theory Some probability distributions Information theory Visualization on sentence length example 12 / 48 Probability Sentence length 0.1 0.2 mode = median = 3.0 Ç. Çöltekin, SfS / University of Tübingen April 19/21, 2016 h g Median and mode of a random variable SfS / University of Tübingen b c d e f g h Ç. Çöltekin, April 19/21, 2016 h 14 / 48 Probability theory Some probability distributions Information theory Expected values of joint distributions Ç. Çöltekin, SfS / University of Tübingen April 19/21, 2016 a g c Information theory b f Ç. Çöltekin, SfS / University of Tübingen April 19/21, 2016 13 / 48 Probability theory Some probability distributions Joint and marginal probability f Two random variables form a joint probability distribution . An examaple: consider the bigrams of letters in our earlier example. The joint distribution can be defjned with a table like the one below. a b c d e Mode is the value that occurs most often in the data. 0.1 Information theory random variable Probability Short divergence: Chebyshev’s inequality Information theory Some probability distributions Probability theory 9 / 48 April 19/21, 2016 SfS / University of Tübingen Ç. Çöltekin, Variance and standard deviation 0.11 Information theory Some probability distributions Probability theory 8 / 48 April 19/21, 2016 SfS / University of Tübingen Ç. Çöltekin, Expected value Information theory 0.25 15 / 48 0.04 Ç. Çöltekin, 0.0001 This also shows why standardizing values of random variables, 0.01 Some probability distributions Probability theory 10 / 48 April 19/21, 2016 SfS / University of Tübingen ▶ Expected value (mean) of a random variable X is, ▶ Variance of a random variable X is, n n ∑ ∑ Var ( X ) = σ 2 = P ( x i )( x i − µ ) 2 = E [ X 2 ] − ( E [ X ]) 2 E [ X ] = µ = P ( x i ) x i = P ( x 1 ) x 1 + P ( x 2 ) x 2 + . . . + P ( x n ) x n i = 1 i = 1 ▶ More generally, expected value of a function of X is ▶ It is a measure of spread, divergence from the central tendency n ▶ The square root of variance is called standard deviation ∑ E [ f ( X )] = P ( x i ) f ( x i ) � n i = 1 � ∑ � ( P ( x i ) x 2 ) σ = − µ 2 � i ▶ Expected value is an important measure of central tendency i = 1 ▶ Note: it is not the ‘most likely’ value ▶ Standard deviation is in the same units as the values of the ▶ Expected value is linear ▶ Variance is not linear: σ 2 X + Y ̸ = σ 2 X + σ 2 Y (neither the σ ) E [ aX + bY ] = aE [ X ] + bE [ Y ] For any probability distribution, and k > 1 , Median is the mid-point of a distribution. Median of a random variable is defjned as the number m that satisfjes P ( | x − µ | > kσ ) ⩽ 1 k 2 P ( X ⩽ m ) ⩾ 1 and P ( X ⩾ m ) ⩾ 1 2 2 ▶ Median of 1 , 4 , 5 , 8 , 10 is 5 Distance from µ 2σ 3σ 5σ 10σ 100σ ▶ Median of 1 , 4 , 5 , 7 , 8 , 10 is 6 ▶ Modes appear as peaks in probability mass (or density) z = x − µ σ ▶ Mode of 1 , 4 , 4 , 8 , 10 is 4 ▶ Modes of 1 , 4 , 4 , 8 , 9 , 9 are 4 and 9 makes sense (the normalized quantity is often called the z-score). ▶ We have a hypothetical language with 8 letters with the µ = 3 . 56 0 . 23 0 . 04 0 . 05 0 . 08 0 . 29 0 . 02 0 . 07 0 . 22 9 0 . 2 = σ 1 2 3 4 5 6 7 8 9 10 11 ∑ ∑ E [ f ( X , Y )] = P ( x , y ) f ( x , y ) x y ∑ ∑ µ X = E [ X ] = P ( x , y ) x x y ∑ ∑ µ Y = E [ Y ] = P ( x , y ) y 0 . 04 0 . 02 0 . 02 0 . 03 0 . 05 0 . 01 0 . 02 0 . 06 0 . 23 x y 0 . 01 0 . 00 0 . 00 0 . 00 0 . 01 0 . 00 0 . 00 0 . 01 0 . 04 We can simplify the notation by vector notation, for µ = ( µ x , µ y ) , 0 . 02 0 . 00 0 . 00 0 . 00 0 . 01 0 . 00 0 . 00 0 . 01 0 . 05 0 . 02 0 . 00 0 . 00 0 . 01 0 . 02 0 . 00 0 . 01 0 . 02 0 . 08 ∑ 0 . 06 0 . 02 0 . 01 0 . 03 0 . 08 0 . 01 0 . 01 0 . 07 0 . 29 µ = x P ( x ) 0 . 00 0 . 00 0 . 00 0 . 00 0 . 01 0 . 00 0 . 00 0 . 01 0 . 02 x ∈ XY 0 . 01 0 . 00 0 . 00 0 . 01 0 . 02 0 . 00 0 . 01 0 . 02 0 . 07 0 . 08 0 . 00 0 . 00 0 . 01 0 . 10 0 . 00 0 . 01 0 . 02 0 . 22 where vector x ranges over all possible combinations of the values of random variables X and Y . 0 . 23 0 . 04 0 . 05 0 . 08 0 . 29 0 . 02 0 . 07 0 . 22

Recommend

More recommend