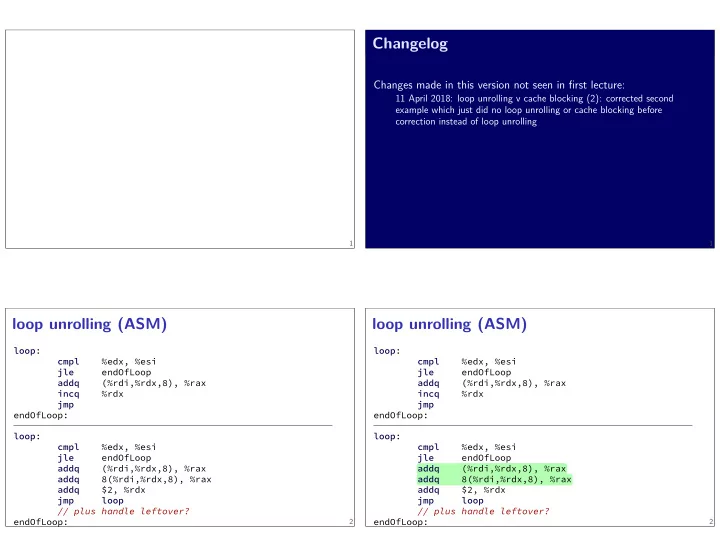

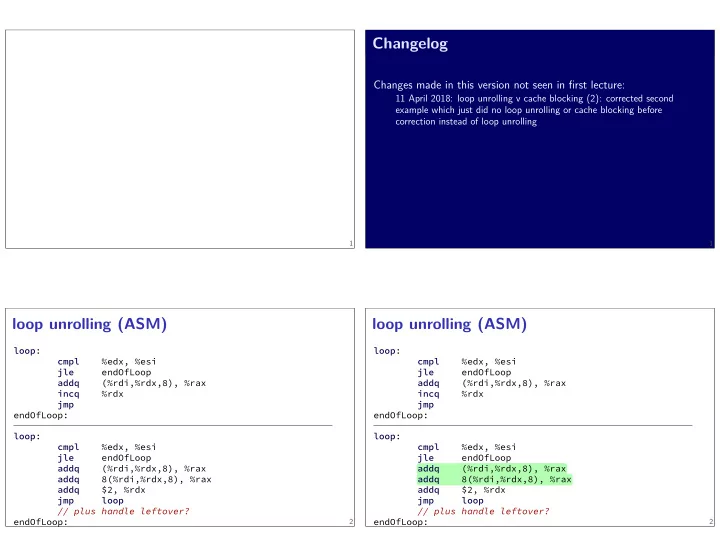

Changelog

Changes made in this version not seen in first lecture:
- 11 April 2018: loop unrolling v cache blocking (2): corrected the second example, which before the correction did no loop unrolling or cache blocking at all instead of loop unrolling

loop unrolling (ASM)

1 loop:

```asm
loop:
    cmpl %edx, %esi
    jle endOfLoop
    addq (%rdi,%rdx,8), %rax
    incq %rdx
    jmp loop
endOfLoop:
```

2 loop unrolling:

```asm
loop:
    cmpl %edx, %esi
    jle endOfLoop
    addq (%rdi,%rdx,8), %rax
    addq 8(%rdi,%rdx,8), %rax
    addq $2, %rdx
    jmp loop
    # plus handle leftover?
endOfLoop:
```

loop unrolling (C)

```c
for (int i = 0; i < N; ++i)
    sum += A[i];
```

```c
int i;
for (i = 0; i + 1 < N; i += 2) {
    sum += A[i];
    sum += A[i+1];
}
// handle leftover, if needed
if (i < N)
    sum += A[i];
```

more loop unrolling (C)

```c
int i;
for (i = 0; i + 4 <= N; i += 4) {
    sum += A[i];
    sum += A[i+1];
    sum += A[i+2];
    sum += A[i+3];
}
// handle leftover, if needed
for (; i < N; i += 1)
    sum += A[i];
```

loop unrolling performance

On my laptop with 992 elements (fits in L1 cache):

| times unrolled | cycles/element | instructions/element |
|---|---|---|
| 1 | 1.33 | 4.02 |
| 2 | 1.03 | 2.52 |
| 4 | 1.02 | 1.77 |
| 8 | 1.01 | 1.39 |
| 16 | 1.01 | 1.21 |
| 32 | 1.01 | 1.15 |

- 1.01 cycles/element: latency bound (each add into `sum` must wait for the previous one)
- more unrolling also means more instruction cache/etc. overhead

performance labs
- this week: loop optimizations
- next week: vector instructions (AKA SIMD)
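The "latency bound" note in the loop unrolling performance table means unrolling alone leaves every `sum +=` waiting on the one before it. Below is a minimal sketch of the multiple accumulators technique that the general advice pairs with loop unrolling, assuming `long` elements (8 bytes, matching the `addq` scale of 8 in the assembly) and two accumulators; the function names are hypothetical.

```c
#include <assert.h>

/* Unrolled 4 times with one accumulator: every add still waits for the
   previous add to sum, so the chain of adds limits speed (latency bound). */
long sum_unroll4(const long *A, int N) {
    long sum = 0;
    int i;
    for (i = 0; i + 4 <= N; i += 4) {
        sum += A[i];
        sum += A[i+1];
        sum += A[i+2];
        sum += A[i+3];
    }
    for (; i < N; i += 1)  /* leftover elements */
        sum += A[i];
    return sum;
}

/* Unrolled 4 times with two accumulators: the adds into sum0 and the adds
   into sum1 form two independent chains, so a CPU with several ALUs can
   work on both at once. */
long sum_unroll4_two_acc(const long *A, int N) {
    long sum0 = 0, sum1 = 0;
    int i;
    for (i = 0; i + 4 <= N; i += 4) {
        sum0 += A[i];
        sum1 += A[i+1];
        sum0 += A[i+2];
        sum1 += A[i+3];
    }
    for (; i < N; i += 1)  /* leftover elements */
        sum0 += A[i];
    return sum0 + sum1;  /* reassociation: combine the partial sums at the end */
}

/* Sanity check: both versions agree, including the leftover loop. */
void check_sums(void) {
    long A[7] = {1, 2, 3, 4, 5, 6, 7};
    assert(sum_unroll4(A, 7) == 28);
    assert(sum_unroll4_two_acc(A, 7) == 28);
}
```

With integers, regrouping the additions gives exactly the same sum; with floating-point values it can change the result slightly, because rounding depends on the order of additions. Whether two accumulators actually help depends on the compiler and CPU, so measure it.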

performance HWs
- partners or individual (your choice)
- assignment 1: rotate an image
- assignment 2: smooth (blur) an image

image representation

```c
typedef struct {
    unsigned char red, green, blue, alpha;
} pixel;

pixel *image = malloc(dim * dim * sizeof(pixel));
image[0]            // at (x=0, y=0)
image[4 * dim + 5]  // at (x=5, y=4)
...
```

rotate assignment

```c
void rotate(pixel *src, pixel *dst, int dim) {
    int i, j;
    for (i = 0; i < dim; i++)
        for (j = 0; j < dim; j++)
            dst[RIDX(dim - 1 - j, i, dim)] = src[RIDX(i, j, dim)];
}
```

preprocessor macros

```c
#define DOUBLE(x) x*2

int y = DOUBLE(100);
// expands to:
int y = 100*2;
```
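For large `dim`, the rotate loop writes `dst` with a stride of `dim` pixels, so consecutive stores usually land in different cache blocks. A minimal sketch of applying cache blocking (one of the techniques the general advice lists) to it follows; `BLOCK`, `rotate_blocked`, and `rotations_match` are hypothetical names, and the block size of 8 is an untested guess that should be varied. The check compares the blocked version against the naive one, including sizes that are not a multiple of `BLOCK`.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct {
    unsigned char red, green, blue, alpha;
} pixel;

#define RIDX(x, y, n) ((x) * (n) + (y))
#define BLOCK 8  /* hypothetical block size: try several values */

/* Naive version, as on the rotate assignment slide. */
void rotate(pixel *src, pixel *dst, int dim) {
    int i, j;
    for (i = 0; i < dim; i++)
        for (j = 0; j < dim; j++)
            dst[RIDX(dim - 1 - j, i, dim)] = src[RIDX(i, j, dim)];
}

/* Cache-blocked version: the same copies, done one BLOCK-by-BLOCK tile at
   a time so that, for a suitable BLOCK, the cache blocks of dst a tile
   touches are reused before being evicted. Edge tiles are smaller when
   dim is not a multiple of BLOCK. */
void rotate_blocked(pixel *src, pixel *dst, int dim) {
    for (int ii = 0; ii < dim; ii += BLOCK)
        for (int jj = 0; jj < dim; jj += BLOCK)
            for (int i = ii; i < ii + BLOCK && i < dim; i++)
                for (int j = jj; j < jj + BLOCK && j < dim; j++)
                    dst[RIDX(dim - 1 - j, i, dim)] = src[RIDX(i, j, dim)];
}

/* Returns 1 if both versions produce identical images for a dim-by-dim input. */
int rotations_match(int dim) {
    size_t count = (size_t) dim * dim;
    pixel *src = malloc(count * sizeof(pixel));
    pixel *a = malloc(count * sizeof(pixel));
    pixel *b = malloc(count * sizeof(pixel));
    assert(src && a && b);
    for (size_t k = 0; k < count; k++) {  /* give each pixel a distinct value */
        src[k].red = (unsigned char) (k % 256);
        src[k].green = (unsigned char) (k / 256 % 256);
        src[k].blue = src[k].alpha = 0;
    }
    rotate(src, a, dim);
    rotate_blocked(src, b, dim);
    int same = 1;
    for (size_t k = 0; k < count; k++)
        if (a[k].red != b[k].red || a[k].green != b[k].green ||
            a[k].blue != b[k].blue || a[k].alpha != b[k].alpha)
            same = 0;
    free(src);
    free(a);
    free(b);
    return same;
}
```

In the 2-by-2 case, red values `[0 1; 2 3]` come out as `[1 3; 0 2]`, i.e. the image turns 90 degrees counterclockwise.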

performance grading
- you can submit multiple variants in one file
- grade: best performance
- don't delete stuff that works!
- we will measure speedup on my machine
- web viewer for results (with some delay, since it has to run)
- grade: achieving certain speedup on my machine
- thresholds based on results with certain optimizations

macros are text substitution (1)

```c
#define BAD_DOUBLE(x) x*2

int y = BAD_DOUBLE(3 + 3);
// expands to:
int y = 3+3*2;
// y == 9, not 12
```

macros are text substitution (2)

```c
#define FIXED_DOUBLE(x) (x)*2

int y = FIXED_DOUBLE(3 + 3);
// expands to:
int y = (3+3)*2;
// y == 12
```

RIDX?

```c
#define RIDX(x, y, n) ((x) * (n) + (y))

dst[RIDX(dim - 1 - j, 1, dim)]
// becomes *at compile-time*:
dst[((dim - 1 - j) * (dim) + (1))]
```
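The same text-substitution rule explains why `RIDX` wraps every parameter, and the whole expression, in parentheses. This sketch collects the slides' macros plus two hypothetical ones, `SAFE_DOUBLE` (which also parenthesizes the result) and `BAD_RIDX` (an unparenthesized `RIDX`), and checks what each evaluates to; note that `FIXED_DOUBLE` still misbehaves when the caller divides by it.

```c
#include <assert.h>

#define BAD_DOUBLE(x) x*2          /* from the slides: no parentheses */
#define FIXED_DOUBLE(x) (x)*2      /* from the slides: argument parenthesized */
#define SAFE_DOUBLE(x) ((x)*2)     /* hypothetical: result parenthesized too */

#define RIDX(x, y, n) ((x) * (n) + (y))    /* from the slides */
#define BAD_RIDX(x, y, n) x * n + y        /* hypothetical: no parentheses */

int ridx_example(int dim, int j) {
    return RIDX(dim - 1 - j, 1, dim);      /* ((dim - 1 - j) * (dim) + (1)) */
}

int bad_ridx_example(int dim, int j) {
    return BAD_RIDX(dim - 1 - j, 1, dim);  /* dim - 1 - j * dim + 1 */
}

void check_macros(void) {
    assert(BAD_DOUBLE(3 + 3) == 9);      /* 3 + 3*2 */
    assert(FIXED_DOUBLE(3 + 3) == 12);   /* (3 + 3)*2 */
    /* FIXED_DOUBLE still goes wrong after a division: */
    assert(12 / FIXED_DOUBLE(3) == 8);   /* 12 / (3)*2 == (12 / 3)*2 */
    assert(12 / SAFE_DOUBLE(3) == 2);    /* 12 / ((3)*2) */
    assert(ridx_example(5, 1) == 16);    /* (5 - 1 - 1) * 5 + 1 */
    assert(bad_ridx_example(5, 1) == 0); /* 5 - 1 - 1*5 + 1 */
}
```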

general advice (for when we don't give specific advice)
- try techniques from book/lecture that seem applicable
- vary numbers (e.g. cache block size): often too big or too small is worse
- some techniques combine well:
  - loop unrolling and cache blocking
  - loop unrolling and reassociation/multiple accumulators

loop unrolling v cache blocking (1)

(assuming we started with kij loop order…)

loop unrolling:

```c
// assumes N is even (no leftover handling)
for (int k = 0; k < N; k++)
    for (int i = 0; i < N; ++i)
        for (int j = 0; j < N; j += 2) {
            B[i*N+j]   += A[i*N+k+0] * A[(k+0)*N+j];
            B[i*N+j+1] += A[i*N+k+0] * A[(k+0)*N+j+1];
        }
```

loop unrolling and cache blocking:

```c
// assumes N is even (no leftover handling)
for (int k = 0; k < N; k += 2)
    for (int i = 0; i < N; ++i)
        for (int j = 0; j < N; ++j) {
            B[i*N+j] += A[i*N+k+0] * A[(k+0)*N+j];
            B[i*N+j] += A[i*N+k+1] * A[(k+1)*N+j];
        }
```

loop unrolling v cache blocking (2)

(assuming we started with kij loop order…)

pretty useless loop unrolling:

```c
// assumes N is even (no leftover handling)
for (int k = 0; k < N; k += 2) {
    for (int i = 0; i < N; ++i)
        for (int j = 0; j < N; ++j)
            B[i*N+j] += A[i*N+k+0] * A[(k+0)*N+j];
    for (int i = 0; i < N; ++i)
        for (int j = 0; j < N; ++j)
            B[i*N+j] += A[i*N+k+1] * A[(k+1)*N+j];
}
```

loop unrolling and cache blocking: the second version in (1)

interlude: real CPUs
- modern CPUs:
  - execute multiple instructions at once
  - execute instructions out of order, whenever values are available
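Each of the loop unrolling v cache blocking rewrites changes only the order in which terms are added into each `B[i*N+j]`, so they should all compute the same matrix. The sketch below checks that, assuming `int` entries (so reordering the additions is exact), an even `N` (so no leftover iterations), and row-major storage; the function names and `MAXN` are hypothetical. It says nothing about speed, which has to be measured.

```c
#include <assert.h>
#include <string.h>

#define MAXN 16  /* hypothetical size limit for the check below */

/* kij order, no unrolling: B += A * A for N-by-N row-major matrices. */
void kij(const int *A, int *B, int N) {
    for (int k = 0; k < N; k++)
        for (int i = 0; i < N; ++i)
            for (int j = 0; j < N; ++j)
                B[i*N+j] += A[i*N+k] * A[k*N+j];
}

/* Loop unrolling of j by 2 (assumes N is even). */
void kij_unroll_j(const int *A, int *B, int N) {
    for (int k = 0; k < N; k++)
        for (int i = 0; i < N; ++i)
            for (int j = 0; j < N; j += 2) {
                B[i*N+j]   += A[i*N+k] * A[k*N+j];
                B[i*N+j+1] += A[i*N+k] * A[k*N+j+1];
            }
}

/* Loop unrolling and cache blocking of k by 2 (assumes N is even). */
void kij_unroll_block_k(const int *A, int *B, int N) {
    for (int k = 0; k < N; k += 2)
        for (int i = 0; i < N; ++i)
            for (int j = 0; j < N; ++j) {
                B[i*N+j] += A[i*N+k+0] * A[(k+0)*N+j];
                B[i*N+j] += A[i*N+k+1] * A[(k+1)*N+j];
            }
}

/* The "pretty useless" version: k unrolled by 2, but each k still makes
   its own full pass over B (assumes N is even). */
void kij_useless_unroll(const int *A, int *B, int N) {
    for (int k = 0; k < N; k += 2) {
        for (int i = 0; i < N; ++i)
            for (int j = 0; j < N; ++j)
                B[i*N+j] += A[i*N+k+0] * A[(k+0)*N+j];
        for (int i = 0; i < N; ++i)
            for (int j = 0; j < N; ++j)
                B[i*N+j] += A[i*N+k+1] * A[(k+1)*N+j];
    }
}

/* Returns 1 if all four versions compute the same B (N even, N <= MAXN). */
int variants_agree(int N) {
    assert(N % 2 == 0 && N <= MAXN);
    int A[MAXN*MAXN], B[4][MAXN*MAXN];
    for (int x = 0; x < N*N; x++)
        A[x] = x % 7 - 3;  /* small values, so no overflow */
    memset(B, 0, sizeof B);
    kij(A, B[0], N);
    kij_unroll_j(A, B[1], N);
    kij_unroll_block_k(A, B[2], N);
    kij_useless_unroll(A, B[3], N);
    for (int v = 1; v < 4; v++)
        if (memcmp(B[0], B[v], sizeof(int) * N * N) != 0)
            return 0;
    return 1;
}
```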

beyond pipelining: out-of-order
- find later instructions to do instead of stalling
- lists of available instructions in pipeline registers
  - take any instruction with available values
- provide illusion that work is still done in order
- much more complicated hazard handling logic

pipeline example (cycles 0 through 8):
- `mrmovq 0(%rbx), %r8`: F D E M M M W (the memory access takes three cycles)
- `subq %r8, %r9`: needs `%r8`, so it cannot execute until the load finishes
- `addq %r10, %r11`: F D E W, independent of the load, so it can run while `subq` waits
- `xorq %r12, %r13`: F D E W, also independent of the load

modern CPU design (instruction flow)

Fetch → Decode → Instr Queue → execution units → Reorder Buffer → Write-back

- Fetch, Decode: fetch multiple instructions/cycle
- Instr Queue: keep list of pending instructions; run instructions from list when operands available; forwarding handled here
- execution units: ALU 1, ALU 2, ALU 3 (stage 1, stage 2), load/store (stage 1, stage 2), …
  - multiple "execution units" to run instructions, e.g. possibly many ALUs
  - sometimes pipelined, sometimes not
- Reorder Buffer: collect results of finished instructions; helps with forwarding, squashing

instruction queue operation

| # | instruction | status |
|---|---|---|
| 1 | addq %rax, %rdx | running |
| 2 | addq %rbx, %rdx | waiting for 1 |
| 3 | addq %rcx, %rdx | waiting for 2 |
| 4 | cmpq %r8, %rdx | waiting for 3 |
| 5 | jne ... | waiting for 4 |
| 6 | addq %rax, %rdx | waiting for 3 |
| 7 | addq %rbx, %rdx | waiting for 6 |
| 8 | addq %rcx, %rdx | waiting for 7 |
| 9 | cmpq %r8, %rdx | waiting for 8 |
| … | … | … |

Instructions 4 and 5 only read `%rdx`, so 6 waits for 3, the last instruction that wrote `%rdx`. As each instruction finishes, the instructions waiting for it become ready (e.g. 2 becomes ready once 1 has run).

| execution unit | cycle 1 | cycle 2 | cycle 3 | cycle 4 | cycle 5 | cycle 6 | cycle 7 |
|---|---|---|---|---|---|---|---|
| ALU 1 | 1 | 2 | 3 | 4 | 5 | 8 | 9 |
| ALU 2 | - | - | - | 6 | 7 | - | … |

(- = idle)
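A toy simulation can reproduce the ALU schedule in the instruction queue operation tables from the "waiting for" column alone. This is a simplified sketch, not how real hardware is built: it assumes two identical ALUs, a one-cycle latency for every instruction (including `jne`), and oldest-first selection among ready instructions; `schedule` and `deps` are hypothetical names.

```c
#include <assert.h>

#define NUM_INSTRS 9
#define NUM_ALUS 2

/* deps[k] = number (1-based) of the instruction that instruction k+1 waits
   for, or 0 if none; copied from the "waiting for" column. */
static const int deps[NUM_INSTRS] = {0, 1, 2, 3, 4, 3, 6, 7, 8};

/* Fills issue_cycle[k] with the cycle (starting at 1) in which instruction
   k+1 runs, and alu[k] with the ALU (1 or 2) it runs on. Each cycle, the
   oldest ready instructions go to the free ALUs. */
void schedule(int issue_cycle[NUM_INSTRS], int alu[NUM_INSTRS]) {
    for (int k = 0; k < NUM_INSTRS; k++)
        issue_cycle[k] = 0;  /* 0 = not run yet */
    int done = 0;
    for (int cycle = 1; done < NUM_INSTRS; cycle++) {
        int used = 0;  /* ALUs already given an instruction this cycle */
        for (int k = 0; k < NUM_INSTRS && used < NUM_ALUS; k++) {
            if (issue_cycle[k] != 0)
                continue;  /* already ran */
            int d = deps[k];
            /* ready if no dependency, or the dependency ran in an earlier cycle */
            if (d == 0 || (issue_cycle[d-1] != 0 && issue_cycle[d-1] < cycle)) {
                issue_cycle[k] = cycle;
                alu[k] = ++used;
                done++;
            }
        }
    }
}
```

Running it gives cycles 1, 2, 3, 4, 5, 4, 5, 6, 7 for instructions 1 through 9, with only 6 and 7 on ALU 2, which matches the table. The long single chain through `%rdx` is why the second ALU sits idle most of the time.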
