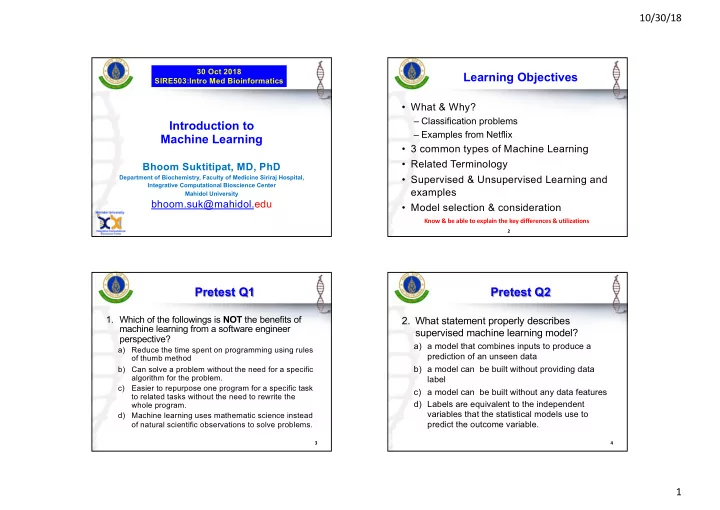

SIRE503: Intro Med Bioinformatics
Introduction to Machine Learning

Bhoom Suktitipat, MD, PhD
Department of Biochemistry, Faculty of Medicine Siriraj Hospital, and Integrative Computational Bioscience Center, Mahidol University
bhoom.suk@mahidol.edu
30 Oct 2018

Learning Objectives

• What & Why?
  – Classification problems
  – Examples from Netflix
• 3 common types of Machine Learning
• Related Terminology
• Supervised & Unsupervised Learning examples
• Model selection & consideration

Know & be able to explain the key differences & utilizations

Pretest Q1

1. Which of the following is NOT a benefit of machine learning from a software engineer's perspective?
a) Reduces the time spent on programming using rule-of-thumb methods.
b) Can solve a problem without the need for a specific algorithm for the problem.
c) Makes it easier to repurpose a program written for one specific task to related tasks without rewriting the whole program.
d) Machine learning uses mathematical science instead of natural scientific observations to solve problems.

Pretest Q2

2. Which statement properly describes a supervised machine learning model?
a) A model that combines inputs to produce a prediction on unseen data.
b) A model that can be built without providing data labels.
c) A model that can be built without any data features.
d) Labels are equivalent to the independent variables that statistical models use to predict the outcome variable.

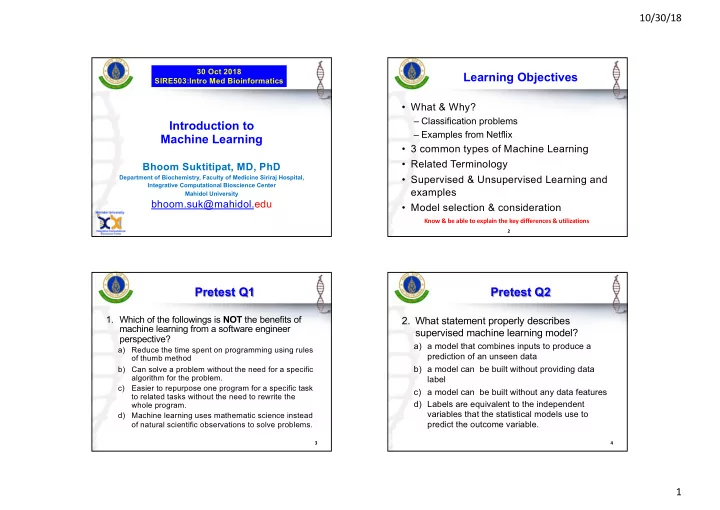

Pretest (Q3)

3. Suppose you want to develop a supervised machine learning model to predict whether a given email is "spam" or "not spam." Which of the following statements is NOT true?
a) The labels applied to some examples might be unreliable.
b) We’ll use unlabeled examples to train the model.
c) Emails not marked as “spam” or “not spam” are unlabeled examples.
d) Words in the subject header will make good features.

Pretest (Q4)

4. Suppose an online shoe store wants to create a supervised ML model that will provide personalized shoe recommendations to users. That is, the model will recommend certain pairs of shoes to Marty and different pairs of shoes to Janet. Which of the following statements are true?
a) “Shoe beauty” is a useful feature.
b) “The user added a star to the shoes” is a useful feature.
c) “Shoes that a user adores” is a useful label.
d) “The user clicked on the shoe’s description” is a useful label.

Pretest (Q5)

5. Which of the following does not describe a loss function?
a) Loss can be measured as the “mean square error”.
b) Loss is a number indicating how bad the model's prediction was.
c) Loss is the error associated with the prediction of each data point.
d) An ML model with a higher loss performs better than one with a lower loss.

Pretest (Q6)

6. Which model has lower Mean Squared Error (MSE)?
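For reference, the "mean square error" named in Q5 and Q6 can be computed as in the short sketch below. The observations and the two sets of predictions are made up for illustration and are not the models pictured on the Q6 slide; `mean_squared_error` is a hypothetical helper written here, not a library function.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between observed and predicted values."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up observations and predictions from two hypothetical models.
y_true = [1.0, 2.0, 3.0, 4.0]
model_a = [1.1, 1.9, 3.2, 3.8]
model_b = [2.0, 2.0, 2.0, 2.0]

mse_a = mean_squared_error(y_true, model_a)
mse_b = mean_squared_error(y_true, model_b)
print(f"MSE of model A: {mse_a:.3f}")
print(f"MSE of model B: {mse_b:.3f}")
```

Each squared difference is the loss for one data point; the MSE is their average over the whole data set.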

Pretest (Q7)

7. When performing gradient descent on a large data set, which of the following batch sizes will likely be more efficient?
a) a small batch or a single example batch
b) the full dataset

Pretest (Q8)

8. Which of the following does not describe TensorFlow?
a) a graph-based computation framework
b) a software library for high-performance numerical computation
c) a popular software for machine learning and deep learning
d) a type of machine learning model invented by Google

Stanford’s Machine Learning Course (CS229)

1. Supervised learning, discriminative algorithms
2. Linear regression
3. Weighted least squares, logistic regression, Newton’s method
4. Perceptron. Exponential Family. Generalized Linear Models.
5. Gaussian discriminant analysis. Naive Bayes
6. Laplace smoothing. Support vector machines.
7. Support Vector Machines. Kernels.
8. Bias-Variance tradeoff. Regularization and model/feature selection.
9. Tree Ensembles.
10. Neural Networks. Back propagation.
11. Error Analysis. Practical Advice on structuring ML projects.
12. K-Means. Expectation Maximization.
13. EM. Gaussian Mixture Model.
14. Factor analysis
15. PCA & independent component analysis
16. MDPs. Bellman Equations
17. Value iteration and policy iteration. LQR/LQG
18. Q-learning. Value function approximation
19. Policy search. REINFORCE. POMDPs

http://cs229.stanford.edu/syllabus.html

The Era of Big Data

[Figure labels: 360Kb, 1.2M, 700M, 25G, 4.7G]

Volume, Velocity, Variety

The Era of Big Data

"The competitor had made massive investments in its ability to collect, integrate, and analyze data from each store and every sales unit and had used this ability to run myriad real-world experiments."

The Era of Big Data

1. It’s not just for big companies
2. Data visualization may be the next big thing
3. Intuition isn’t dead
4. It isn’t a panacea. Big data can reduce uncertainty, not eliminate it!

Typical Size of One Genome Data

[Bar chart; x-axis: File size (Gb), log scale from 0.01 to 100]
• 70k variants from WES: 10 Mb
• 3.96M SNP data: 15 Mb
• 10M variants: 1.5 Gb
• RNASeq: 3 Gb
• WES (80x): 5 Gb
• WGS (30x): 100 Gb

What & Why

• Understanding big data
  – Netflix’s recommendation
• Machine learning:
  – a set of methods that can automatically detect patterns in data
  – use the patterns to predict the future or make decisions
• Uncertainty & probabilistic models

Machine Learning vs Statistics

| Machine Learning | Statistics |
| --- | --- |
| Network, graphs | Model |
| Weights | Parameters |
| Learning | Fitting |
| Generalization | Test set performance |
| Supervised learning | Regression/classification |
| Unsupervised learning | Density estimation, clustering |
| Large grant = $1,000,000 | Large grant = $50,000 |
| Nice place to have a meeting: Snowbird, Utah, French Alps | Nice place to have a meeting: Las Vegas in August |

http://statweb.stanford.edu/~tibs/stat315a/glossary.pdf

Cinematch – the Netflix Algorithm

Cinematch uses "straightforward statistical linear models with a lot of data conditioning". [Ref 7 on Wikipedia]

To make matches, a computer:
1. Searches the CineMatch database for people who have rated the same movie, for example "The Return of the Jedi"
2. Determines which of those people have also rated a second movie, such as "The Matrix"
3. Calculates the statistical likelihood that people who liked "Return of the Jedi" will also like "The Matrix"
4. Continues this process to establish a pattern of correlations between subscribers' ratings of many different films

https://electronics.howstuffworks.com/netflix2.htm
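The four matching steps can be sketched roughly as below. This is only an illustration, not Netflix's actual Cinematch code: the ratings are made up, the helper `like_given_like` is hypothetical, and "liked" is assumed to mean a rating of 4 stars or more.

```python
from itertools import permutations

# Made-up ratings: user -> {movie: stars}.
ratings = {
    "u1": {"Return of the Jedi": 5, "The Matrix": 4},
    "u2": {"Return of the Jedi": 4, "The Matrix": 5},
    "u3": {"Return of the Jedi": 5, "The Matrix": 2},
    "u4": {"Return of the Jedi": 2, "The Matrix": 5},
    "u5": {"Return of the Jedi": 4},
}
LIKED = 4  # assumed threshold for "liked"; not taken from the slides


def like_given_like(ratings, first, second, liked=LIKED):
    """Fraction of users who liked `first` that also liked `second`."""
    # Steps 1-2: keep only the users who rated both movies.
    both = [r for r in ratings.values() if first in r and second in r]
    # Step 3: among those who liked the first movie, count who liked the second.
    liked_first = [r for r in both if r[first] >= liked]
    if not liked_first:
        return None  # no evidence for this pair
    return sum(r[second] >= liked for r in liked_first) / len(liked_first)


p = like_given_like(ratings, "Return of the Jedi", "The Matrix")

# Step 4: repeat for every ordered pair of movies to build up a pattern.
movies = sorted({m for r in ratings.values() for m in r})
pattern = {(a, b): like_given_like(ratings, a, b) for a, b in permutations(movies, 2)}
print(pattern)
```

With real data the same loop would run over many films, and the resulting table of pairwise likelihoods is what step 4 calls a pattern of correlations.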

Problems

Netflix provided a training data set of 100,480,507 ratings that 480,189 users gave to 17,770 movies. Each training rating is a quadruplet of the form <user, movie, date of grade, grade>. The user and movie fields are integer IDs, while grades are from 1 to 5 (integral) stars.

The qualifying data set contains over 2,817,131 triplets of the form <user, movie, date of grade>, with grades known only to the jury. A participating team's algorithm must predict grades on the entire qualifying set, but they are only informed of the score for half of the data, the quiz set of 1,408,342 ratings. The other half is the test set of 1,408,789, and performance on this is used by the jury to determine potential prize winners. Only the judges know which ratings are in the quiz set and which are in the test set; this arrangement is intended to make it difficult to hill climb on the test set.

Submitted predictions are scored against the true grades in terms of root mean squared error (RMSE), and the goal is to reduce this error as much as possible. Note that while the actual grades are integers in the range 1 to 5, submitted predictions need not be.

Netflix also identified a probe subset of 1,408,395 ratings within the training data set. The probe, quiz, and test data sets were chosen to have similar statistical properties.

The Winner

• A blend of several predictive models
• Team BellKor’s Pragmatic Chaos, who produced an algorithm which apparently improved on Netflix's own rating predictions by 10.06%

Netflix Tech Blog, Apr 6, 2012
https://www.netflixprize.com/assets/GrandPrize2009_BPC_BellKor.pdf
https://www.netflixprize.com/assets/GrandPrize2009_BPC_BigChaos.pdf

Type of Machine Learning

• Predictive (supervised) learning
• Descriptive (unsupervised) learning
  – Knowledge Discovery
  – Finding patterns in the data

Type of Machine Learning

Input → Prediction → Output
• Input: features, attributes, covariates x_i
• Output: response variable y_i
• Common output:
  – Categorical/Nominal: Classification Problem, Pattern Recognition
  – Continuous: Regression
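To make the two common output types concrete, here is a minimal sketch of predictive (supervised) learning with one feature x_i and a response y_i. The data are made up, and both helpers are simple stand-ins chosen for illustration (a least-squares line for a continuous response, a one-nearest-neighbour rule for a categorical one); they are not methods prescribed by the slides.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for a single feature (regression)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def nearest_neighbor_label(xs, labels, x_new):
    """Label of the closest training example (classification)."""
    closest = min(range(len(xs)), key=lambda i: abs(xs[i] - x_new))
    return labels[closest]


# Made-up training data: one feature x_i per example.
xs = [1.0, 2.0, 3.0, 4.0]
y_continuous = [2.0, 4.0, 6.0, 8.0]              # continuous response: regression
y_categorical = ["low", "low", "high", "high"]   # categorical response: classification

slope, intercept = fit_line(xs, y_continuous)
predicted_value = slope * 5.0 + intercept                         # predict y for unseen x = 5.0
predicted_class = nearest_neighbor_label(xs, y_categorical, 3.6)  # predict label for x = 3.6
print(predicted_value, predicted_class)
```

In both cases the model is built from labeled pairs (x_i, y_i) and then applied to an input it has not seen; only the type of the response differs.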
