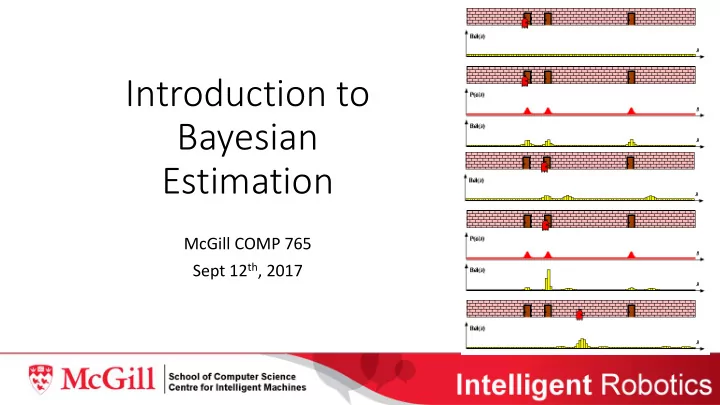

Introduction to Bayesian Estimation McGill COMP 765 Sept 12th, 2017

“Where am I?” – our first core problem
• Last class:
  • We can model a robot’s motions and the world as spatial quantities
  • These models are not perfect, so it is up to algorithms to compensate
• Today:
  • Representing motion and sensing probabilistically
  • Formulating localization as Bayesian inference
  • Describing and analyzing a first simple algorithm

Example: Landing on Mars

Complementary input sources
• A GPS tells us our global position, with constant noise (+/- 5 m): appears as jitter around the path
• An IMU tells us our relative motion, with unknown yaw drift: diverges over time
• A good algorithm will fuse these inputs intelligently and recover a path which best explains both:
  • Smoother than GPS
  • Less drift than IMU

Example: Self-driving Source: Dave Ferguson “Solve for X” talk, July 2013 http://www.youtube.com/watch?v=KA_C6OpL_Ao

Raw Sensor Data
[Figure: sonar and laser measured distances for an expected distance of 300 cm]
It is crucial that we measure the noise well in order to integrate these measurements.

Probabilistic Robotics
Key idea: explicit representation of uncertainty using the calculus of probability theory
• Perception = state estimation
• Action = utility optimization

Discrete Random Variables
• X denotes a random variable.
• X can take on a countable number of values in {x_1, x_2, …, x_n}.
• P(X = x_i), or P(x_i), is the probability that the random variable X takes on value x_i.
• P(·) is called the probability mass function.
• E.g. P(Room) = ⟨0.7, 0.2, 0.08, 0.02⟩

Continuous Random Variables
• X takes on values in the continuum.
• p(X = x), or p(x), is a probability density function:
$$\Pr(x \in (a, b)) = \int_a^b p(x)\,dx$$
• E.g. [Figure: plot of a density p(x) against x]

Joint and Conditional Probability
• P(X = x and Y = y) = P(x, y)
• If X and Y are independent, then P(x, y) = P(x) P(y)
• P(x | y) is the probability of x given y:
  P(x | y) = P(x, y) / P(y)
  P(x, y) = P(x | y) P(y)
• If X and Y are independent, then P(x | y) = P(x)

Law of Total Probability, Marginals
Discrete case:
$$\sum_x P(x) = 1, \qquad P(x) = \sum_y P(x, y), \qquad P(x) = \sum_y P(x \mid y)\,P(y)$$
Continuous case:
$$\int p(x)\,dx = 1, \qquad p(x) = \int p(x, y)\,dy, \qquad p(x) = \int p(x \mid y)\,p(y)\,dy$$
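The discrete identities above can be checked numerically on a small table. The sketch below is only an illustration: the joint distribution, the labels `x0`, `x1`, `y0`, `y1`, and the helper `marginal` are all made up for this example and do not come from the lecture.

```python
from fractions import Fraction as F

# Hypothetical joint distribution P(x, y) over two binary variables
# (values chosen only for illustration).
joint = {
    ("x0", "y0"): F(3, 10), ("x0", "y1"): F(1, 10),
    ("x1", "y0"): F(2, 10), ("x1", "y1"): F(4, 10),
}

def marginal(joint, axis):
    """Sum the joint over the other variable: axis=0 gives P(x), axis=1 gives P(y)."""
    out = {}
    for key, p in joint.items():
        out[key[axis]] = out.get(key[axis], 0) + p
    return out

p_x = marginal(joint, 0)  # P(x) = sum_y P(x, y)
p_y = marginal(joint, 1)  # P(y) = sum_x P(x, y)

# Conditional P(x | y) = P(x, y) / P(y)
cond = {(x, y): p / p_y[y] for (x, y), p in joint.items()}

# Law of total probability: P(x) = sum_y P(x | y) P(y)
p_x_total = {x: sum(cond[(x, y)] * p_y[y] for y in p_y) for x in p_x}
```

Exact fractions are used so that both routes to P(x) can be compared with `==` rather than with a floating-point tolerance.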

Bayes Formula
$$P(x, y) = P(x \mid y)\,P(y) = P(y \mid x)\,P(x)$$
$$\Rightarrow\quad P(x \mid y) = \frac{P(y \mid x)\,P(x)}{P(y)} = \frac{\text{likelihood} \cdot \text{prior}}{\text{evidence}}$$

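Normalization
$$P(x \mid y) = \frac{P(y \mid x)\,P(x)}{P(y)} = \eta\,P(y \mid x)\,P(x), \qquad \eta = P(y)^{-1} = \frac{1}{\sum_x P(y \mid x)\,P(x)}$$
Algorithm:
$$\forall x:\ \mathrm{aux}_{x \mid y} = P(y \mid x)\,P(x)$$
$$\eta = \frac{1}{\sum_x \mathrm{aux}_{x \mid y}}$$
$$\forall x:\ P(x \mid y) = \eta\,\mathrm{aux}_{x \mid y}$$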
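The three-step normalization algorithm maps directly onto a few lines of code. This is a minimal sketch, not the course's implementation: the function name `bayes_posterior`, the room labels, and the likelihood values are assumptions made for illustration; only the prior ⟨0.7, 0.2, 0.08, 0.02⟩ is taken from the earlier P(Room) example.

```python
def bayes_posterior(prior, likelihood):
    """Return P(x | y) for every x, given P(x) and P(y | x) as dicts keyed by x."""
    # Step 1: aux_{x|y} = P(y | x) P(x) for all x
    aux = {x: likelihood[x] * prior[x] for x in prior}
    # Step 2: eta = 1 / sum_x aux_{x|y}
    total = sum(aux.values())
    if total == 0:
        raise ValueError("observation has zero probability under the prior")
    eta = 1.0 / total
    # Step 3: P(x | y) = eta * aux_{x|y} for all x
    return {x: eta * a for x, a in aux.items()}

# Prior from the P(Room) example; the room labels are hypothetical.
prior = {"room_a": 0.7, "room_b": 0.2, "room_c": 0.08, "room_d": 0.02}
# Hypothetical sensor likelihoods P(y | room), chosen only for illustration.
likelihood = {"room_a": 0.1, "room_b": 0.5, "room_c": 0.9, "room_d": 0.9}

posterior = bayes_posterior(prior, likelihood)
```

Note that P(y) is never supplied: it is recovered as the sum of the unnormalized terms, which is the point of the η formulation.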

Conditioning
• Law of total probability:
$$P(x) = \int P(x, z)\,dz$$
$$P(x) = \int P(x \mid z)\,P(z)\,dz$$
$$P(x \mid y) = \int P(x \mid y, z)\,P(z \mid y)\,dz$$

Bayes Rule with Background Knowledge
$$P(x \mid y, z) = \frac{P(y \mid x, z)\,P(x \mid z)}{P(y \mid z)}$$

Conditioning
• Total probability:
$$P(x) = \int P(x, z)\,dz$$
$$P(x) = \int P(x \mid z)\,P(z)\,dz$$
$$P(x \mid y) = \int P(x \mid y, z)\,P(z \mid y)\,dz$$
The weight P(z | y) reduces to P(z) only when z is independent of y.

Conditional Independence
$$P(x, y \mid z) = P(x \mid z)\,P(y \mid z)$$
is equivalent to
$$P(x \mid z) = P(x \mid z, y) \quad\text{and}\quad P(y \mid z) = P(y \mid z, x)$$

Simple Example of State Estimation
• Suppose a robot obtains measurement z
• What is P(open|z)?

Causal vs. Diagnostic Reasoning
• P(open|z) is diagnostic.
• P(z|open) is causal.
• Often causal knowledge is easier to obtain (count frequencies!).
• Bayes rule allows us to use causal knowledge:
$$P(\text{open} \mid z) = \frac{P(z \mid \text{open})\,P(\text{open})}{P(z)}$$

Example
• P(z|open) = 0.6, P(z|¬open) = 0.3
• P(open) = P(¬open) = 0.5
$$P(\text{open} \mid z) = \frac{P(z \mid \text{open})\,P(\text{open})}{P(z \mid \text{open})\,P(\text{open}) + P(z \mid \neg\text{open})\,P(\neg\text{open})}$$
$$P(\text{open} \mid z) = \frac{0.6 \cdot 0.5}{0.6 \cdot 0.5 + 0.3 \cdot 0.5} = \frac{2}{3} \approx 0.67$$
• z raises the probability that the door is open.

Combining Evidence
• Suppose our robot obtains another observation z_2.
• How can we integrate this new information?
• More generally, how can we estimate P(x | z_1, …, z_n)?

Recursive Bayesian Updating
$$P(x \mid z_1, \dots, z_n) = \frac{P(z_n \mid x, z_1, \dots, z_{n-1})\,P(x \mid z_1, \dots, z_{n-1})}{P(z_n \mid z_1, \dots, z_{n-1})}$$
Assumption: z_n is independent of z_1, …, z_{n-1} if we know x.
$$\begin{aligned}
P(x \mid z_1, \dots, z_n) &= \frac{P(z_n \mid x)\,P(x \mid z_1, \dots, z_{n-1})}{P(z_n \mid z_1, \dots, z_{n-1})} \\
&= \eta\,P(z_n \mid x)\,P(x \mid z_1, \dots, z_{n-1}) \\
&= \eta_{1 \dots n} \left[\prod_{i = 1 \dots n} P(z_i \mid x)\right] P(x)
\end{aligned}$$

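Example: Second Measurement
• P(z_2|open) = 0.5, P(z_2|¬open) = 0.6
• P(open|z_1) = 2/3
$$P(\text{open} \mid z_2, z_1) = \frac{P(z_2 \mid \text{open})\,P(\text{open} \mid z_1)}{P(z_2 \mid \text{open})\,P(\text{open} \mid z_1) + P(z_2 \mid \neg\text{open})\,P(\neg\text{open} \mid z_1)} = \frac{\frac{1}{2} \cdot \frac{2}{3}}{\frac{1}{2} \cdot \frac{2}{3} + \frac{3}{5} \cdot \frac{1}{3}} = \frac{5}{8} = 0.625$$
• z_2 lowers the probability that the door is open.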
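The two door measurements can be chained in code, with each posterior serving as the prior for the next update. This is a sketch under the conditional-independence assumption stated for recursive updating. The function name `update` and the state label `closed` (standing in for ¬open) are choices made here for illustration.

```python
from fractions import Fraction as F

def update(belief, likelihood):
    """One recursive Bayes update: belief(x) <- eta * P(z | x) * belief(x)."""
    aux = {x: likelihood[x] * belief[x] for x in belief}
    eta = 1 / sum(aux.values())
    return {x: eta * a for x, a in aux.items()}

# Numbers from the door example; "closed" plays the role of "not open".
prior = {"open": F(1, 2), "closed": F(1, 2)}
p_z1 = {"open": F(6, 10), "closed": F(3, 10)}  # P(z_1 | x)
p_z2 = {"open": F(5, 10), "closed": F(6, 10)}  # P(z_2 | x)

belief_1 = update(prior, p_z1)     # P(x | z_1)
belief_2 = update(belief_1, p_z2)  # P(x | z_1, z_2)
```

Exact fractions make it easy to compare the results against the hand calculation: `belief_1["open"]` is 2/3 and `belief_2["open"]` is 5/8.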

A Typical Pitfall
• Two possible locations x_1 and x_2
• P(x_1) = 0.99
• P(z|x_2) = 0.09, P(z|x_1) = 0.07
• Integrate the same z repeatedly
• What are we doing wrong?
[Figure: p(x1 | d) and p(x2 | d), on an axis from 0 to 1, plotted against the number of integrations (0 to 50)]
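The effect in the plot can be reproduced with a short simulation. One reading of the question is that feeding the same reading z into the update many times treats it as many independent measurements, which violates the independence assumption behind recursive updating. The sketch below only illustrates that reading; the function name `update` and the variable names are assumptions.

```python
def update(belief, likelihood):
    """One Bayes update: belief(x) <- eta * P(z | x) * belief(x)."""
    aux = {x: likelihood[x] * belief[x] for x in belief}
    eta = 1.0 / sum(aux.values())
    return {x: eta * a for x, a in aux.items()}

# Values from the slide: strong prior on x1, measurement slightly favours x2.
belief = {"x1": 0.99, "x2": 0.01}
likelihood = {"x1": 0.07, "x2": 0.09}  # P(z | x)

# Integrate the *same* measurement 50 times and record every belief.
history = [belief]
for _ in range(50):
    belief = update(belief, likelihood)
    history.append(belief)
```

Each update multiplies the odds of x2 against x1 by 0.09 / 0.07, so a weak but repeated reading eventually overwhelms even a 0.99 prior. In this simulation the belief in x2 first exceeds 0.5 after 19 integrations.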

Actions
• Often the world is dynamic, since it is changed by
  • actions carried out by the robot,
  • actions carried out by other agents,
  • or just the passing of time.
• How can we incorporate such actions?

Typical Actions
• The robot turns its wheels to move
• The robot uses its manipulator to grasp an object
• Plants grow over time …
• Actions are never carried out with absolute certainty.
• In contrast to measurements, actions generally increase the uncertainty.

Modeling Actions
• To incorporate the outcome of an action u into the current “belief”, we use the conditional pdf P(x|u,x’)
• This term specifies the probability (density) that executing u changes the state from x’ to x.

Example: Closing the door

State Transitions
P(x|u,x’) for u = “close door”:
• P(closed | u, open) = 0.9
• P(open | u, open) = 0.1
• P(closed | u, closed) = 1
• P(open | u, closed) = 0
If the door is open, the action “close door” succeeds in 90% of all cases.

Integrating the Outcome of Actions
Continuous case:
$$P(x \mid u) = \int P(x \mid u, x')\,P(x')\,dx'$$
Discrete case:
$$P(x \mid u) = \sum_{x'} P(x \mid u, x')\,P(x')$$

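Example: The Resulting Belief
$$\begin{aligned}
P(\text{closed} \mid u) &= \sum_{x'} P(\text{closed} \mid u, x')\,P(x') \\
&= P(\text{closed} \mid u, \text{open})\,P(\text{open}) + P(\text{closed} \mid u, \text{closed})\,P(\text{closed}) \\
&= \frac{9}{10} \cdot \frac{5}{8} + \frac{1}{1} \cdot \frac{3}{8} = \frac{15}{16}
\end{aligned}$$
$$\begin{aligned}
P(\text{open} \mid u) &= \sum_{x'} P(\text{open} \mid u, x')\,P(x') \\
&= P(\text{open} \mid u, \text{open})\,P(\text{open}) + P(\text{open} \mid u, \text{closed})\,P(\text{closed}) \\
&= \frac{1}{10} \cdot \frac{5}{8} + \frac{0}{1} \cdot \frac{3}{8} = \frac{1}{16} = 1 - P(\text{closed} \mid u)
\end{aligned}$$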
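The discrete action update is a sum over previous states, which can be written as a short function. This sketch uses the "close door" transition probabilities and the prior belief P(open) = 5/8, P(closed) = 3/8 from the example. The function name `predict` and the dictionary layout are assumptions made for illustration.

```python
from fractions import Fraction as F

# transition[(x_new, x_old)] = P(x_new | u, x_old) for u = "close door"
transition = {
    ("closed", "open"): F(9, 10),
    ("open", "open"): F(1, 10),
    ("closed", "closed"): F(1),
    ("open", "closed"): F(0),
}

def predict(belief, transition):
    """Discrete action update: P(x | u) = sum_{x'} P(x | u, x') P(x')."""
    return {
        x: sum(transition[(x, x_prev)] * belief[x_prev] for x_prev in belief)
        for x in belief
    }

belief = {"open": F(5, 8), "closed": F(3, 8)}
predicted = predict(belief, transition)
```

No normalization step is needed here: because each column of the transition table sums to 1, the predicted belief already sums to 1.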

Bayes Filters: Framework
• Given:
  • Stream of observations z and action data u:
$$d_t = \{u_1, z_1, \dots, u_t, z_t\}$$
  • Sensor model P(z|x).
  • Action model P(x|u,x’).
  • Prior probability of the system state P(x).
• Wanted:
  • Estimate of the state X of a dynamical system.
  • The posterior of the state is also called Belief:
$$Bel(x_t) = P(x_t \mid u_1, z_1, \dots, u_t, z_t)$$

Markov Assumption
$$p(z_t \mid x_{0:t}, z_{1:t-1}, u_{1:t}) = p(z_t \mid x_t)$$
$$p(x_t \mid x_{1:t-1}, z_{1:t-1}, u_{1:t}) = p(x_t \mid x_{t-1}, u_t)$$
Underlying Assumptions
• Static world
• Independent noise
• Perfect model, no approximation errors
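Under the Markov assumption, the belief can be maintained by alternating an action update and a measurement update. The sketch below shows one such step for a discrete state space, reusing the door numbers from the earlier examples. It is a generic illustration rather than the algorithm as presented in the lecture; the function name `bayes_filter_step`, the action label `close`, the measurement label `sense_open`, and the nested-dictionary layout are all assumptions.

```python
from fractions import Fraction as F

def bayes_filter_step(belief, u, z, action_model, sensor_model):
    """One discrete filter step: predict with action u, then correct with measurement z."""
    # Prediction: Bel'(x) = sum_{x'} P(x | u, x') Bel(x')
    predicted = {
        x: sum(action_model[u][(x, x_prev)] * belief[x_prev] for x_prev in belief)
        for x in belief
    }
    # Correction: Bel(x) = eta * P(z | x) * Bel'(x)
    aux = {x: sensor_model[z][x] * predicted[x] for x in predicted}
    eta = 1 / sum(aux.values())
    return {x: eta * a for x, a in aux.items()}

# action_model[u][(x_new, x_old)] = P(x_new | u, x_old), from the "close door" example.
action_model = {
    "close": {
        ("closed", "open"): F(9, 10), ("open", "open"): F(1, 10),
        ("closed", "closed"): F(1), ("open", "closed"): F(0),
    }
}
# sensor_model[z][x] = P(z | x), from the first door measurement.
sensor_model = {"sense_open": {"open": F(6, 10), "closed": F(3, 10)}}

belief = {"open": F(5, 8), "closed": F(3, 8)}
new_belief = bayes_filter_step(belief, "close", "sense_open", action_model, sensor_model)
```

The step only ever touches the previous belief, the latest action and the latest measurement, which is what the two Markov equalities permit.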
