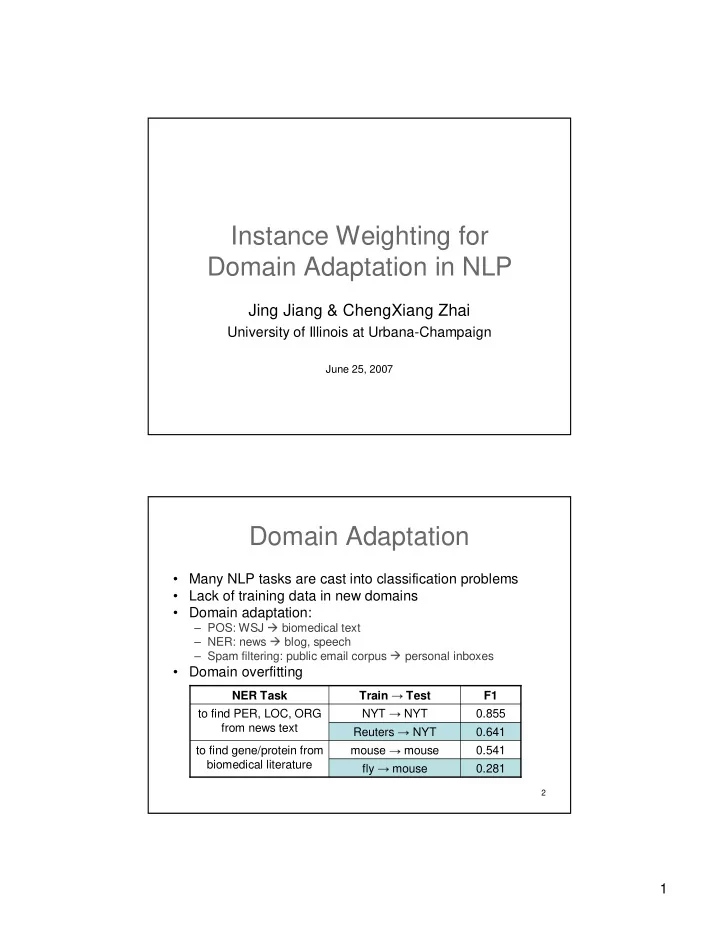

✁ ✁ ✁ ✁ � � � ✂ Instance Weighting for Domain Adaptation in NLP Jing Jiang & ChengXiang Zhai University of Illinois at Urbana-Champaign June 25, 2007 Domain Adaptation • Many NLP tasks are cast into classification problems • Lack of training data in new domains • Domain adaptation: – POS: WSJ biomedical text – NER: news blog, speech – Spam filtering: public email corpus personal inboxes • Domain overfitting NER Task Train Test F1 to find PER, LOC, ORG NYT NYT 0.855 from news text Reuters NYT 0.641 to find gene/protein from mouse mouse 0.541 biomedical literature fly mouse 0.281 2 1

Existing Work on Domain Adaptation • Existing work – Prior on model parameters [Chelba & Acero 04] – Mixture of general and domain-specific distributions [Daumé III & Marcu 06] – Analysis of representation [Ben-David et al. 07] • Our work – A fresh instance weighting perspective – A framework that incorporates both labeled and unlabeled instances 3 Outline • Analysis of domain adaptation • Instance weighting framework • Experiments • Conclusions 4 2

The Need for Domain Adaptation source domain target domain 5 The Need for Domain Adaptation source domain target domain 6 3

� Where Does the Difference Come from? p(x, y) p s (y | x) p t (y | x) p(x)p(y | x) � p t (x) p s (x) labeling difference instance difference ? labeling adaptation instance adaptation 7 An Instance Weighting Solution (Labeling Adaptation) source domain target domain p t (y | x) ✁ p s (y | x) remove/demote instances 8 4

An Instance Weighting Solution (Labeling Adaptation) source domain target domain p t (y | x) ✁ p s (y | x) remove/demote instances 9 An Instance Weighting Solution (Labeling Adaptation) source domain target domain p t (y | x) ✁ p s (y | x) remove/demote instances 10 5

An Instance Weighting Solution (Instance Adaptation: p t (x) < p s (x)) source domain target domain p t (x) < p s (x) remove/demote instances 11 An Instance Weighting Solution (Instance Adaptation: p t (x) < p s (x)) source domain target domain p t (x) < p s (x) remove/demote instances 12 6

An Instance Weighting Solution (Instance Adaptation: p t (x) < p s (x)) source domain target domain p t (x) < p s (x) remove/demote instances 13 An Instance Weighting Solution (Instance Adaptation: p t (x) > p s (x)) source domain target domain p t (x) > p s (x) promote instances 14 7

An Instance Weighting Solution (Instance Adaptation: p t (x) > p s (x)) source domain target domain p t (x) > p s (x) promote instances 15 An Instance Weighting Solution (Instance Adaptation: p t (x) > p s (x)) source domain target domain p t (x) > p s (x) promote instances 16 8

� ✁ An Instance Weighting Solution (Instance Adaptation: p t (x) > p s (x)) source domain target domain p t (x) > p s (x) • Labeled target domain instances are useful • Unlabeled target domain instances may also be useful 17 The Exact Objective Function true marginal and log likelihood (log conditional probabilities in loss function) the target domain θ = θ p x p y x p y x dx * arg max ( ) ( | ) log ( | ; ) t t t X θ ∈ y Y unknown 18 9

� ✂ ✁ ✂ ✁ � Three Sets of Instances D t, l D t, u D s θ = θ p x p y x p y x dx * arg max ( ) ( | ) log ( | ; ) t t t X θ ∈ y Y 19 Three Sets of Instances: Using D s D s D t, l D t, u θ = θ p x p y x p y x dx * arg max ( ) ( | ) log ( | ; ) t t t X θ y ∈ Y N 1 s ≈ α β p y s x s θ arg max log ( | ; ) i i i i N X ≈ D s α β s θ i = s 1 p x i i i = ( ) 1 β = t i i p x s ( ) p y s x s s i ( | ) α = t i i in principle, non-parametric density i s s p y x ( | ) estimation; in practice, high s i i dimensional data (future work) need labeled target data 20 10

☎ ✆ � ✁ ✄ ✆ ✂ Three Sets of Instances: Using D t,l D t, l D t, u D s θ = θ p x p y x p y x dx * arg max ( ) ( | ) log ( | ; ) t t t X θ ∈ y Y N t l 1 , ≈ t t θ p y x arg max log ( | ; ) j j X ≈ D t,l N θ j = t l 1 , small sample size, estimation not accurate 21 Three Sets of Instances: Using D t,u D s D t, l D t, u θ = θ p x p y x p y x dx * arg max ( ) ( | ) log ( | ; ) t t t X θ ∈ y Y N t u 1 , ≈ γ t θ y p y x arg max ( ) log ( | ; ) X ≈ D t,u k k C θ k = y ∈ t u 1 Y , γ y = t , u p y x ( ) ( | ) k t k pseudo labels (e.g. bootstrapping, EM) 22 11

✂ ✂ ✂ � ✁ ✂ Using All Three Sets of Instances D t, l D s D t, u X ≈ D s + D t,l + D t,u ? θ = p x p y x p y x θ dx * arg max ( ) ( | ) log ( | ; ) t t t X θ y ∈ Y ≈ ? 23 A Combined Framework N s 1 θ = λ α β s s θ ˆ p y x arg max [ log ( | ; ) s i i i i C θ = s i 1 N t l 1 , + λ t t θ p y x log ( | ; ) t l i i C , j = t l 1 , N t u 1 , + λ γ θ y p y x t ( ) log ( | ; ) t u k k , C k = y ∈ t u 1 Y , + θ p log ( )] λ + λ + λ = 1 s t l t u , , a flexible setup covering both standard methods and new domain adaptive methods 24 12

☎ ☎ ☎ ☎ ✁ � � � � Standard Supervised Learning using only D s N 1 s θ = λ α β s s θ ˆ p y x arg max [ log ( | ; ) s i i i i C θ = i s 1 N t l 1 , + λ t t θ p y x log ( | ; ) t l i i C , = j t l 1 , N t u 1 , + λ γ θ y p y x t ( ) log ( | ; ) t u k k , C k = y ∈ t u Y 1 , + θ p log ( )] i = ✂ i = 1, ✄ s = 1, ✄ t,l = ✄ t,u = 0 25 Standard Supervised Learning using only D t,l N 1 s θ = λ α β s s θ ˆ p y x arg max [ log ( | ; ) s i i i i C θ i = s 1 N t l 1 , + λ t t θ p y x log ( | ; ) t l i i , C j = t l , 1 N t u 1 , + λ γ t θ y p y x ( ) log ( | ; ) t u k k C , = ∈ k y t u Y 1 , + θ p log ( )] ✄ t,l = 1, ✄ s = ✄ t,u = 0 26 13

✁ � ✁ � � � ✁ ✁ ✁ ✁ Standard Supervised Learning using both D s and D t,l N 1 s θ ˆ = λ α β p y s x s θ arg max [ log ( | ; ) s i i i i C θ i = s 1 N t l 1 , + λ p y t x t θ log ( | ; ) t l i i C , j = t l 1 , N t u 1 , + λ γ y p y x t θ ( ) log ( | ; ) t u k k , C k = y ∈ t u 1 Y , + θ p log ( )] i = ✂ i = 1, ✄ s = N s /(N s +N t,l ), ✄ t,l = N t,l /(N s +N t,l ), ✄ t,u = 0 27 Domain Adaptive Heuristic: 1. Instance Pruning N 1 s θ ˆ = λ α β p y s x s θ arg max [ log ( | ; ) s i i i i C θ = i s 1 N t l 1 , + λ t t θ p y x log ( | ; ) t l i i C , j = t l 1 , N t u 1 , + λ γ y p y x t θ ( ) log ( | ; ) t u k k , C = ∈ k y t u Y 1 , + θ p log ( )] i = 0 if (x i , y i ) are predicted incorrectly by a model trained from D t,l ; 1 otherwise 28 14

✁ ✁ ✂ ✁ ✁ � � � � Domain Adaptive Heuristic: 2. D t,l with higher weights N 1 s θ ˆ = λ α β s s θ p y x arg max [ log ( | ; ) s i i i i C θ = i s 1 N t l 1 , + λ t t θ p y x log ( | ; ) t l i i , C j = t l 1 , N t u 1 , + λ γ t θ y p y x ( ) log ( | ; ) t u k k , C k = y ∈ t u 1 Y , + θ p log ( )] ✄ s < N s /(N s +N t,l ), ✄ t,l > N t,l /(N s +N t,l ) 29 Standard Bootstrapping N 1 s θ = λ α β s s θ ˆ p y x arg max [ log ( | ; ) s i i i i C θ i = s 1 N t l 1 , + λ t t θ p y x log ( | ; ) t l i i , C = j t l 1 , N t u 1 , + λ γ y p y x t θ ( ) log ( | ; ) t u k k C , k = y ∈ t u 1 Y , + θ p log ( )] k (y) = 1 if p(y | x k ) is large; 0 otherwise 30 15

✂ ✂ ✂ ✂ � � Domain Adaptive Heuristic: 3. Balanced Bootstrapping N 1 s θ = λ α β s s θ ˆ p y x arg max [ log ( | ; ) s i i i i C θ i = s 1 N t l 1 , + λ t t θ p y x log ( | ; ) t l i i , C j = t l 1 , N t u 1 , + λ γ θ y p y x t �✁� ( ) log ( | ; ) t u k k C , k = y ∈ t u 1 Y , + θ p log ( )] k (y) = 1 if p(y | x k ) is large; 0 otherwise ✄ s = ✄ t,u = 0.5 31 Experiments • Three NLP tasks: – POS tagging: WSJ (Penn TreeBank) Oncology (biomedical) text (Penn BioIE) – NE type classification: newswire conversational telephone speech (CTS) and web-log (WL) (ACE 2005) – Spam filtering: public email collection personal inboxes (u01, u02, u03) (ECML/PKDD 2006) 32 16

Recommend

More recommend