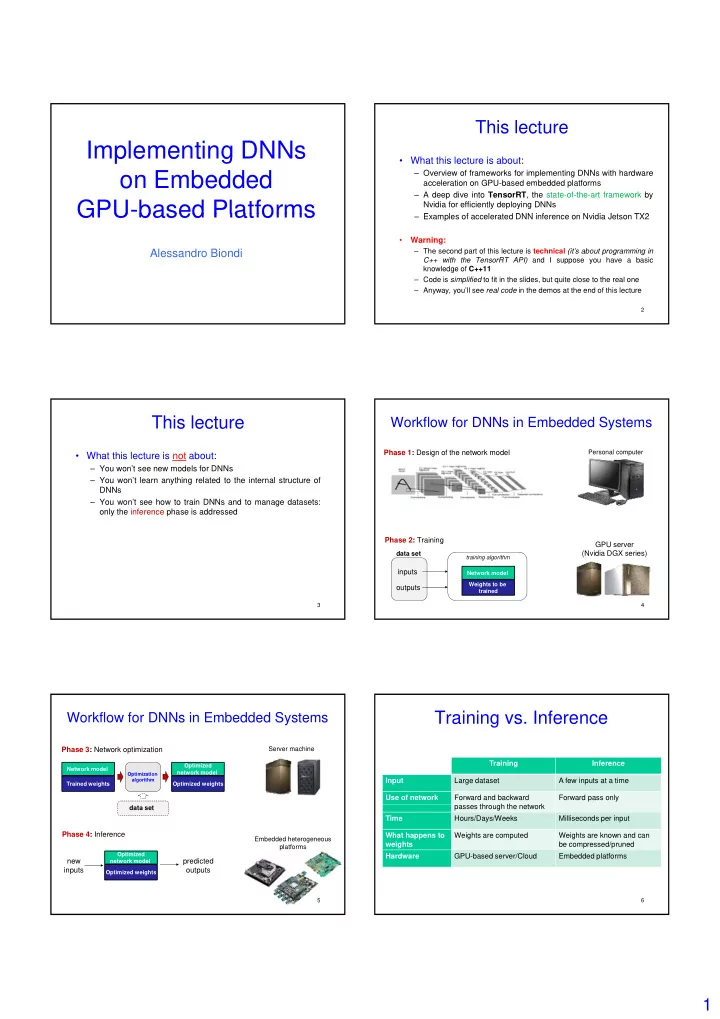

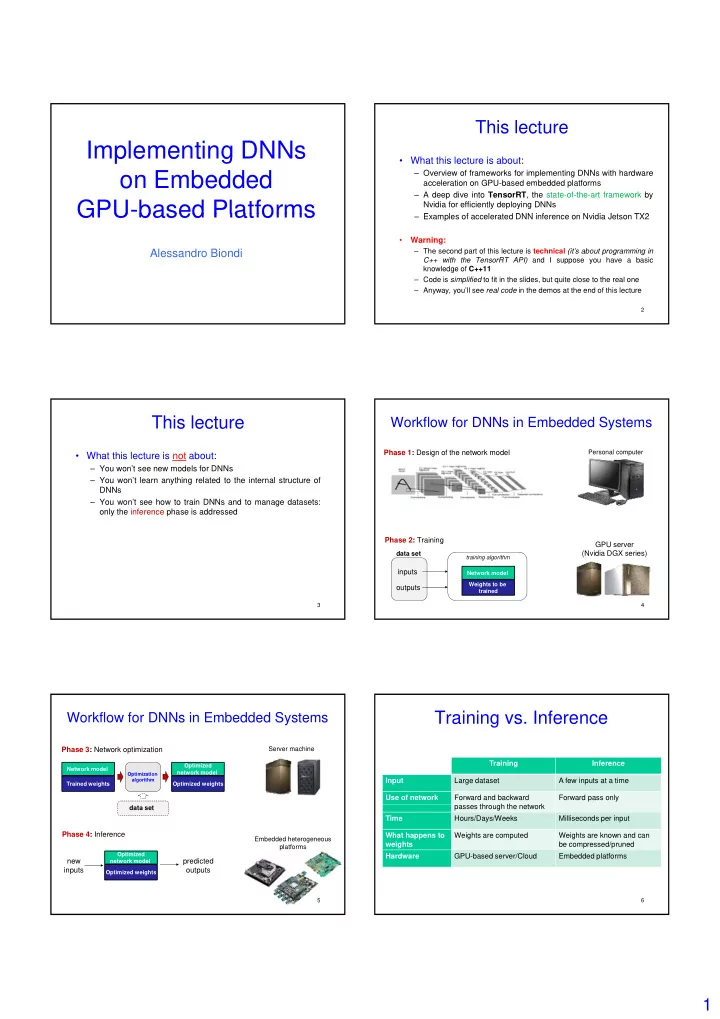

Implementing DNNs on Embedded GPU-based Platforms
Alessandro Biondi

This lecture
• What this lecture is about:
– Overview of frameworks for implementing DNNs with hardware acceleration on GPU-based embedded platforms
– A deep dive into TensorRT, the state-of-the-art framework by Nvidia for efficiently deploying DNNs
– Examples of accelerated DNN inference on Nvidia Jetson TX2
• Warning:
– The second part of this lecture is technical (it’s about programming in C++ with the TensorRT API) and I suppose you have a basic knowledge of C++11
– Code is simplified to fit in the slides, but quite close to the real one
– Anyway, you’ll see real code in the demos at the end of this lecture

This lecture
• What this lecture is not about:
– You won’t see new models for DNNs
– You won’t learn anything related to the internal structure of DNNs
– You won’t see how to train DNNs and to manage datasets: only the inference phase is addressed

Workflow for DNNs in Embedded Systems
• Phase 1: Design of the network model (personal computer)
• Phase 2: Training (GPU server, Nvidia DGX series)
– Diagram labels: data set, training algorithm, inputs, network model, weights to be trained, outputs

Training vs. Inference

| | Training | Inference |
|---|---|---|
| Input | Large dataset | A few inputs at a time |
| Use of network | Forward and backward passes through the network | Forward pass only |
| Time | Hours/Days/Weeks | Milliseconds per input |
| What happens to weights | Weights are computed | Weights are known and can be compressed/pruned |
| Hardware | GPU-based server/Cloud | Embedded platforms |

Workflow for DNNs in Embedded Systems
• Phase 3: Network optimization (server machine)
– Diagram labels: network model, trained weights, data set, optimization algorithm, optimized network model, optimized weights
• Phase 4: Inference (embedded heterogeneous platforms)
– Diagram labels: new inputs, optimized network model, optimized weights, predicted outputs
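The difference between the two columns of the Training vs. Inference comparison can be sketched on a toy model. The snippet below is only an illustration under stated assumptions: a one-input linear model, a squared-error loss, and plain SGD, none of which come from the slides, and all function names are hypothetical. Training runs a forward and a backward pass and updates the weights; inference runs the forward pass only, with the weights frozen.

```python
def forward(w, b, x):
    """Forward pass of a toy one-input linear model: y = w * x + b."""
    return w * x + b


def training_step(w, b, x, target, lr=0.1):
    """One SGD step: forward pass, backward pass, then weight update."""
    y = forward(w, b, x)
    error = y - target          # d(loss)/dy for loss = 0.5 * (y - target)^2
    grad_w = error * x          # backward pass: d(loss)/dw
    grad_b = error              # backward pass: d(loss)/db
    return w - lr * grad_w, b - lr * grad_b


def train(dataset, epochs=200, lr=0.1):
    """Training: many passes over the whole dataset; the weights are computed."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, target in dataset:
            w, b = training_step(w, b, x, target, lr)
    return w, b


def infer(w, b, x):
    """Inference: forward pass only; the weights are known and stay fixed."""
    return forward(w, b, x)


# Toy dataset sampled from y = 2x + 1 (an assumption made for this example).
dataset = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
w, b = train(dataset)
prediction = infer(w, b, 3.0)
print(w, b, prediction)
```

Only `infer` would need to run on the embedded platform; `train` corresponds to the server-side phase.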

Landscape of Embedded Heterogeneous Platforms
(Figure; labels: Embedded GPUs, "This lecture")

Embedded GPUs
(Figure; data from connecttech.com)

Nvidia Jetson TX2
(Figure; label: Jetson TX2 module)

Embedded GPUs: performance
(Figure; label: DNN; data from nvidia.com)

Jetson TX2 Module
(Figure from nvidia.com)

Tegra X2
(Figure from nvidia.com; labels: Main CPUs (ARMv8) - asymmetric, GPU)

JetPack
• Nvidia JetPack SDK is a comprehensive resource for building AI applications
• JetPack bundles all of the Jetson platform software
– accelerated software libraries, APIs, sample applications, developer tools, and documentation
• …and includes the Nvidia Jetson Linux Driver Package (L4T).
– L4T provides the Linux kernel, bootloader, NVIDIA drivers, flashing utilities, sample filesystem, and more for the Jetson platform.

DNNs on GPUs
• DNNs on GPUs can be deployed by:
– Implementing layers from scratch with CUDA (unrealistic for modern DNNs, it’s like re-inventing the wheel)
– Using cuBLAS
– Using cuDNN
– Using standard DNN frameworks (TensorFlow, Caffe, …) (internally, they leverage cuBLAS or cuDNN)
– Using TensorRT (state-of-the-art for high-performance inference)

CUDA
• CUDA is a parallel computing platform and programming model that makes using a GPU for general purpose computing simple and elegant.
• The developer still programs in C/C++ (also other widespread languages are supported).
• CUDA incorporates extensions of these languages in the form of a few basic keywords.

cuBLAS
• Basic Linear Algebra Subprograms (BLAS) is a specification that prescribes a set of low-level routines for performing common linear algebra operations
– De-facto standard low-level routines for linear algebra
• The NVIDIA cuBLAS library is a fast GPU-accelerated implementation of the standard basic linear algebra subroutines (BLAS).
• Using cuBLAS APIs, you can speed up your applications by deploying compute-intensive operations to a single GPU or scale up and distribute work across multi-GPU configurations efficiently.
https://developer.nvidia.com/cublas

cuDNN
• cuDNN by Nvidia is a GPU-accelerated library of primitives for deep neural networks.
• cuDNN provides highly tuned implementations for standard routines such as forward and backward convolution, pooling, normalization, and activation layers.
• Deep learning researchers and framework developers worldwide rely on cuDNN for high-performance GPU acceleration.
– It allows them to focus on training neural networks and developing software applications rather than spending time on low-level GPU performance tuning.
https://developer.nvidia.com/cudnn

cuDNN
(Figure: speed-up for training ImageNet with Caffe (on a standard dataset); source: gigaom.com)
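To make the routines listed for cuDNN concrete, here is a minimal CPU-only sketch of three of them: a forward convolution, a ReLU activation, and a max pooling layer. It is written in plain Python for readability and does not use or reproduce the cuDNN API; the function names, the 5x5 input, and the 2x2 filter are assumptions chosen for this example. A library such as cuDNN computes the same kind of result with heavily tuned GPU kernels.

```python
def conv2d_forward(image, kernel):
    """Forward 2D convolution as used in DNN layers (cross-correlation),
    with no padding and stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Element-wise ReLU activation: negative values become 0."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool_2x2(fmap):
    """2x2 max pooling with stride 2 (height and width assumed even)."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]), 2)]
        for i in range(0, len(fmap), 2)
    ]


# Hypothetical 5x5 single-channel input and 2x2 filter.
image = [
    [1, 2, 0, 1, 3],
    [0, 1, 2, 1, 0],
    [2, 1, 0, 0, 1],
    [1, 0, 1, 2, 2],
    [0, 3, 1, 0, 1],
]
kernel = [[1, 0], [0, -1]]

conv = conv2d_forward(image, kernel)       # 4x4 feature map
features = max_pool_2x2(relu(conv))        # 2x2 feature map
print(features)  # [[1, 2], [2, 1]]
```

The four nested loops of the convolution are the reason the operation dominates the cost of a DNN, and the reason hand-tuned GPU implementations matter.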

GPU Support in DNN Frameworks
• Most (if not all) DNN frameworks, such as TensorFlow and Caffe, have built-in support for Nvidia GPUs
• The support consists of implementations of DNN layers with cuBLAS and cuDNN
– TensorFlow (Python API): tf.device('/device:GPU:0')
– Caffe (Python API): caffe.set_mode_gpu()

TensorRT
Intro, generalities, etc.

TensorRT
• TensorRT is a framework that facilitates high performance inference on NVIDIA graphics processing units (GPUs).
• TensorRT takes a trained network, which consists of a network definition and a set of trained parameters, and produces a highly optimized runtime engine which performs inference for that network.
• It’s built upon cuDNN

TensorRT: performance
• Up to 140x in throughput (images per sec) on Nvidia Tesla V100 w.r.t. CPUs
(Image from nvidia.com)

Using TensorRT
• Step 1: Optimize trained networks with TensorRT Optimizer
(Image from nvidia.com)

Using TensorRT
• Step 2: Deploy optimized networks with TensorRT Runtime Engine
(Images from nvidia.com)
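The performance figure above is a throughput ratio, measured in images per second. As a hedged sketch of how such a number can be obtained, the snippet below times repeated calls to an inference function and derives images per second and a speed-up factor. It uses only the Python standard library; `dummy_infer_batch` is a hypothetical stand-in for a real inference call, and all names are assumptions made for this example, not part of TensorRT.

```python
import time


def throughput(num_images, elapsed_seconds):
    """Throughput in images per second."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return num_images / elapsed_seconds


def speedup(accelerated_ips, baseline_ips):
    """How many times faster the accelerated run is than the baseline."""
    return accelerated_ips / baseline_ips


def measure_throughput(infer_batch, batch, iterations=10):
    """Time `iterations` calls of `infer_batch(batch)` and return images/sec."""
    start = time.perf_counter()
    for _ in range(iterations):
        infer_batch(batch)
    elapsed = time.perf_counter() - start
    return throughput(len(batch) * iterations, elapsed)


def dummy_infer_batch(batch):
    # Hypothetical placeholder workload: one "prediction" per image.
    return [sum(image) for image in batch]


batch = [[0.5] * 1024 for _ in range(8)]   # 8 fake images of 1024 values each
images_per_second = measure_throughput(dummy_infer_batch, batch)
print(f"{images_per_second:.0f} images/sec")
```

On a real platform, a few warm-up iterations are usually run before timing so that one-time initialization costs do not distort the result.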

Using TensorRT
• TensorRT takes as input the DNN model and the corresponding weights (trained network)
• The input can be provided with a custom API or by loading files exported by DNN frameworks such as Caffe and TensorFlow
(Diagram: DNN Model and DNN Weights are fed to TensorRT, which internally uses CUDA (cuDNN), not exposed to users, to execute on GPUs (GPGPU). Image from nvidia.com)

TensorRT: optimizations
TensorRT performs four types of optimizations:
– Weight quantization and precision calibration
– Layer and tensor fusion
– Kernel auto-tuning
– Dynamic tensor memory

Quantized DNNs
• The performance of modern DNNs is impressive, but they require heavy computations that involve a huge set of parameters (weights, activations, etc.)
– In particular, the convolution operations are very computationally intensive!
• Such computations may be unsuitable for resource-constrained platforms such as those that are typically available in embedded systems
– Inference may be very slow on an embedded platform, violating timing constraints
– They also consume a lot of energy

Quantized DNNs
• Research on quantized DNNs has attracted a lot of attention from the deep learning community in recent years
• The goal of quantization is to contain the memory footprint of trained DNNs to achieve faster inference while not significantly penalizing the network accuracy
• Joint solutions from machine learning, numerical optimization, and computer architectures are required to achieve this goal
Y. Guo, “A Survey on Methods and Theories of Quantized Neural Networks”, arXiv:1808.04752v2

Quantized DNNs
• Standard DNNs come with 32-bit floating-point parameters and hence require floating-point units (FPU) to perform the corresponding computations
• Conversely, the operations required to infer a quantized neural network (e.g., with integer parameters) are typically bitwise operations that can be carried out by arithmetic logic units (ALU)
– ALUs are faster than FPUs and consume much less energy!
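As a rough illustration of the idea behind quantized DNNs, the sketch below maps floating-point values to 8-bit integers and computes a dot product with integer arithmetic only. It assumes a simple symmetric scheme with one scale per tensor, picked here for clarity; this is not necessarily the scheme or calibration procedure TensorRT applies, and the function names and sample values are made up for the example.

```python
def quantize_int8(values):
    """Symmetric linear quantization of floats to integers in [-127, 127].

    Returns the integer values and the scale such that value ~= q * scale.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map quantized integers back to approximate float values."""
    return [v * scale for v in q]


def int_dot(q_a, q_b):
    """Dot product that uses integer multiplications and additions only."""
    return sum(a * b for a, b in zip(q_a, q_b))


# Hypothetical weights and inputs of one neuron.
weights = [0.42, -1.30, 0.05, 0.90]
inputs = [0.80, 0.31, -0.25, 2.00]

q_w, s_w = quantize_int8(weights)
q_x, s_x = quantize_int8(inputs)

# Integer accumulation, then a single float rescale at the end.
approx = int_dot(q_w, q_x) * s_w * s_x
exact = sum(w * x for w, x in zip(weights, inputs))
print(q_w, q_x, approx, exact)
```

Each value is stored in 8 bits instead of 32, and the per-value rounding error is at most half a quantization step (`scale / 2`), which is the accuracy price paid for the smaller footprint and the integer arithmetic.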
