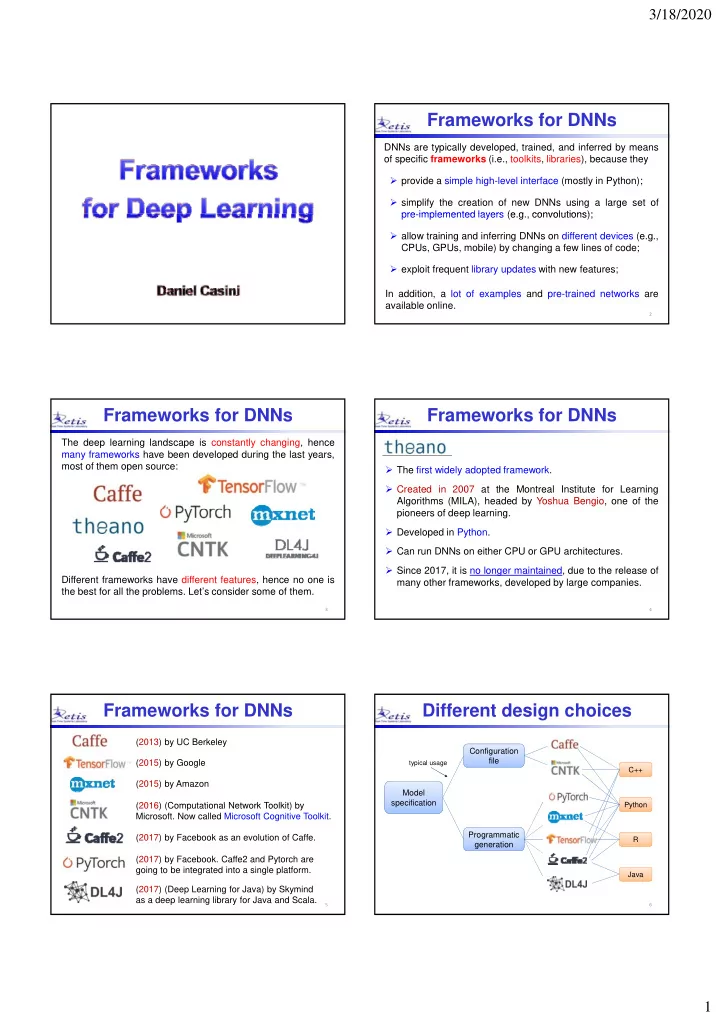

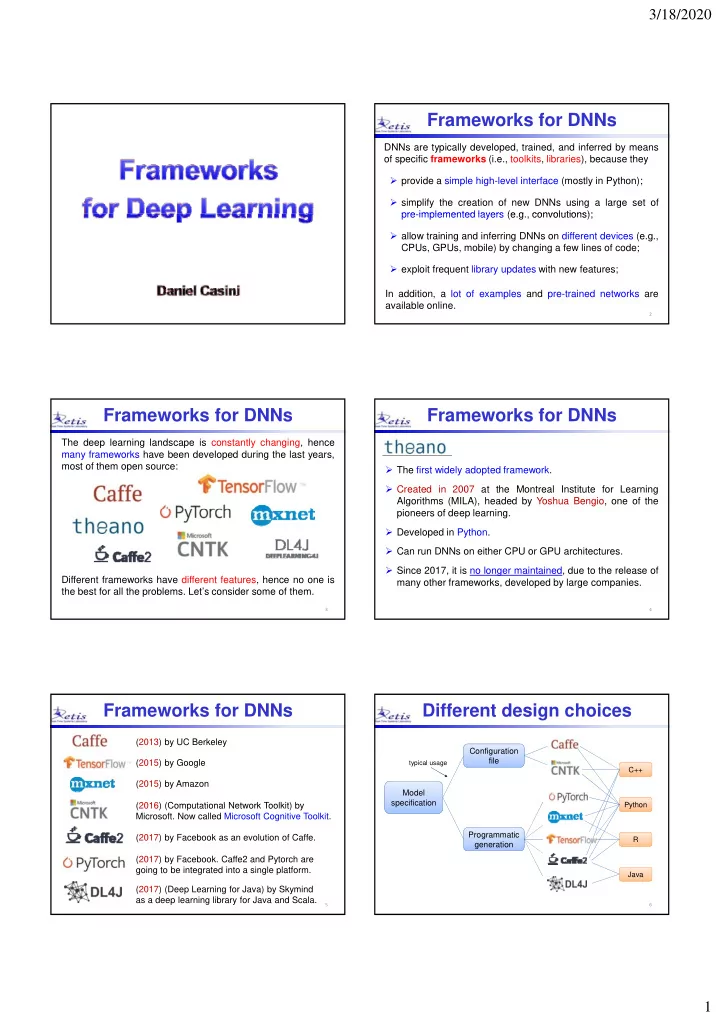

3/18/2020

Frameworks for DNNs

DNNs are typically developed, trained, and deployed for inference by means of specific frameworks (i.e., toolkits, libraries), because they:

- provide a simple high-level interface (mostly in Python);
- simplify the creation of new DNNs using a large set of pre-implemented layers (e.g., convolutions);
- allow training and running DNNs on different devices (e.g., CPUs, GPUs, mobile) by changing a few lines of code;
- benefit from frequent library updates with new features.

In addition, many examples and pre-trained networks are available online.

The deep learning landscape is constantly changing, hence many frameworks have been developed in recent years, most of them open source. Different frameworks have different features, hence no single one is the best for all problems. Let's consider some of them.

Theano

- The first widely adopted framework.
- Created in 2007 at the Montreal Institute for Learning Algorithms (MILA), headed by Yoshua Bengio, one of the pioneers of deep learning.
- Developed in Python.
- Can run DNNs on either CPU or GPU architectures.
- Since 2017, it is no longer maintained, due to the release of many other frameworks developed by large companies.

Other frameworks

- Caffe (2013) by UC Berkeley.
- TensorFlow (2015) by Google.
- MXNet (2015) by Amazon.
- CNTK (2016) (Computational Network Toolkit) by Microsoft. Now called Microsoft Cognitive Toolkit.
- Caffe2 (2017) by Facebook as an evolution of Caffe.
- PyTorch (2017) by Facebook. Caffe2 and PyTorch are going to be integrated into a single platform.
- DL4J (2017) (Deep Learning for Java) by Skymind as a deep learning library for Java and Scala.

Different design choices

[Figure: frameworks compared by model specification (configuration file or programmatic generation) and by typical usage language (C++, Python, R, Java)]
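The two model-specification styles named under "Different design choices" (configuration file and programmatic generation) can be contrasted with a minimal sketch. It uses only the Python standard library. The layer schema (`type`, `filters`, `kernel`, `size`, `units`) and the function names are hypothetical, invented for this illustration, and do not reproduce the file format or API of Caffe, TensorFlow, or any other framework.

```python
import json

# Hypothetical layer schema, used only for this illustration.
# It is NOT the configuration format of any real framework.
CONFIG_TEXT = """
{
  "layers": [
    {"type": "conv", "filters": 16, "kernel": 3},
    {"type": "pool", "size": 2},
    {"type": "dense", "units": 10}
  ]
}
"""


def model_from_config(text):
    """Style 1: the model is declared in a configuration file and parsed."""
    return json.loads(text)["layers"]


def model_from_program(num_blocks, base_filters=16):
    """Style 2: the model is generated by ordinary code (loops, arguments)."""
    layers = []
    for i in range(num_blocks):
        # Each block doubles the number of filters of the previous one.
        layers.append({"type": "conv", "filters": base_filters * 2 ** i, "kernel": 3})
        layers.append({"type": "pool", "size": 2})
    layers.append({"type": "dense", "units": 10})
    return layers


config_model = model_from_config(CONFIG_TEXT)
program_model = model_from_program(num_blocks=1)
deeper_model = model_from_program(num_blocks=3)
```

With one block, both styles describe the same three layers. The programmatic style can produce a deeper variant by changing a single argument, whereas the configuration style requires editing the file.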

High-level APIs

Besides these frameworks, there are also high-level interfaces that wrap one or more frameworks.

Keras

- Released in 2016 by François Chollet (Google).
- Python-based library for fast experimentation with DNNs.
- It runs on top of TensorFlow, CNTK, Theano, or PlaidML.
- User-friendly, modular, and extensible. Quote: "API designed for human beings, not machines".
- It allows creating a DNN by stacking layers, specifying only the layer types rather than the math operations.

Gluon

- Released in 2017 by Amazon and supported by Microsoft.
- High-level Python deep learning interface.
- It wraps MXNet and will soon also include CNTK.
- Gluon is a direct competitor of Keras.

Sonnet

- Released in 2017 by Google's DeepMind.
- It is built on top of TensorFlow.

Open Neural Network Exchange

- In 2017, Microsoft, Amazon, Facebook, and others launched ONNX, an Open Neural Network Exchange format to represent deep learning models and port them between different frameworks.
- ONNX enables models to be trained in one framework and transferred to another for inference.
- ONNX supports Caffe2, CNTK, MXNet, PyTorch, and TensorFlow.

Frameworks at a glance

[Figure: open source deep learning frameworks (2017), grouped by backing company: Google, Microsoft, Amazon, Facebook]

The complete stack

- Application: DNN
- ONNX
- High-level APIs: Keras, Gluon
- Mid-level APIs: TensorFlow, Theano, Caffe, PyTorch, CNTK, MXNet
- Language: Python, C++, Java
- OS: Windows, Linux, Android, iOS
- HW: CPU, DSP, GPU, TPU

Popularity

GitHub users can "star" a repository to indicate that they like it, so GitHub stars can be used as a measure of how popular a project is.

[Figure: GitHub stars for TensorFlow, Keras, Caffe, PyTorch, CNTK, and MXNet]
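The idea behind the high-level APIs described above, creating a DNN by stacking layers and naming only their types, can be sketched in plain Python. The class names `Sequential` and `Dense` echo Keras naming, but this is a toy stand-in written for this illustration, not Keras code: the weight initialization, the seed, and the lazy weight creation are all assumptions.

```python
import random


class Dense:
    """Toy fully connected layer: the math lives here, hidden from the user."""

    def __init__(self, units, activation=None):
        self.units = units
        self.activation = activation
        self.weights = None  # created lazily, once the input size is known

    def __call__(self, x):
        if self.weights is None:
            rng = random.Random(0)  # fixed seed, chosen only for repeatability
            self.weights = [[rng.uniform(-0.1, 0.1) for _ in x]
                            for _ in range(self.units)]
        # Matrix-vector product, written out explicitly.
        out = [sum(w * v for w, v in zip(row, x)) for row in self.weights]
        if self.activation == "relu":
            out = [max(0.0, v) for v in out]
        return out


class Sequential:
    """Toy container that applies the stacked layers in order."""

    def __init__(self, layers):
        self.layers = list(layers)

    def __call__(self, x):
        for layer in self.layers:
            x = layer(x)
        return x


# The user only stacks layer types and sizes; no math operation is written here.
model = Sequential([
    Dense(8, activation="relu"),
    Dense(4, activation="relu"),
    Dense(2),
])
output = model([0.5, -1.0, 2.0])
```

The model definition at the bottom contains no arithmetic at all, which is the point of a high-level interface: the operations are owned by the layer classes, and the input size of each layer is inferred from the data that flows through it.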

Combined metrics

A power score can be computed by mixing several criteria, such as articles, books, citations, GitHub activity, Google search volume, etc.

[Figure: power score 2018]

At the moment, TensorFlow is the most used framework, followed by Caffe and PyTorch.

TensorFlow

Released in 2015 by Google.

Main features:

- Large community and support.
- TensorBoard visualization tool.
- Scalability to many platforms.
- Good library management and updates.
- Not so intuitive interface.
- Slower than other frameworks.

The TensorFlow Stack

- High-level TF APIs: Estimators, Keras, TF learn, TF-Slim
- Mid-level TF APIs: Datasets, Layers, Metrics, Losses
- Language: Python frontend, C++
- TF runtime: TensorFlow Distributed Execution Engine
- Operating systems: Windows, Linux, Android, iOS
- Hardware: CPU, DSP, GPU, TPU

Caffe (Convolutional Architecture for Fast Feature Embedding)

Released in 2013 by UC Berkeley.

Main features:

- Excellent for CNNs for image processing.
- While in TF the network is created by programming, in Caffe layers are created by specifying a set of parameters.
- Quite fast compared to other frameworks.
- Command line, Python, C++, and MATLAB interfaces.
- Not so easy to learn.
- Not so good with recurrent neural networks and sequence models.

Comparing frameworks

| Framework | Languages | Tutorial material | CNN modeling | RNN modeling | Easy-to-use API | Speed | Multiple GPU support | Keras compatible |
|---|---|---|---|---|---|---|---|---|
| Theano | Python, C++ | ++ | ++ | ++ | + | ++ | NO | YES |
| TensorFlow | Python, C++ | +++ | +++ | +++ | ++ | ++ | YES | YES |
| PyTorch | Python, C++ | + | +++ | ++ | ++ | +++ | YES | NO |
| Caffe | Python, C++ | + | +++ | NO | + | + | YES | NO |
| MXNet | Python, R, Julia, Scala | ++ | ++ | + | ++ | ++ | YES | YES |
| CNTK | Python, C++ | + | + | +++ | + | ++ | YES | YES |
| DL4J | Java, Scala | ++ | +++ | +++ | +++ | ++ | YES | YES |

PyTorch

Released in 2017 by Facebook.

Main features:

- Support for dynamic graphs. This is efficient when the input varies, as in text processing.
- Interactive debugging.
- Easier to get started.
- Blend of high-level and low-level APIs.
- Limited documentation.
- No graphic visualization tools.
- Early releases ran only on Linux and macOS; official Windows support arrived with version 0.4 in 2018.

In 2017, TensorFlow introduced Eager Execution, to evaluate operations immediately, without building graphs.
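The dynamic graphs mentioned for PyTorch can be illustrated with a small sketch in plain Python. It records each operation at the moment it is executed, so the size of the recorded graph follows the length of the input sequence. The `Node` class, the `encode` recurrence, and `count_ops` are hypothetical names invented for this illustration; they mimic the define-by-run idea only and are not PyTorch code.

```python
class Node:
    """A value plus a record of the operation that produced it."""

    def __init__(self, value, op="input", parents=()):
        self.value = value
        self.op = op
        self.parents = parents

    def __add__(self, other):
        return Node(self.value + other.value, "add", (self, other))

    def __mul__(self, other):
        return Node(self.value * other.value, "mul", (self, other))


def count_ops(node):
    """Count the non-input nodes reachable from `node` (the graph size)."""
    seen, stack, ops = set(), [node], 0
    while stack:
        current = stack.pop()
        if id(current) in seen:
            continue
        seen.add(id(current))
        if current.op != "input":
            ops += 1
        stack.extend(current.parents)
    return ops


def encode(sequence, weight):
    """Toy recurrence h = h * w + x, unrolled once per input element."""
    h = Node(0.0)
    w = Node(weight)
    for x in sequence:  # an ordinary Python loop decides the graph shape
        h = h * w + Node(x)
    return h


short_seq = encode([1.0, 2.0], weight=0.5)
long_seq = encode([1.0, 2.0, 3.0, 4.0], weight=0.5)

print(short_seq.value, count_ops(short_seq))  # 2.5 4
print(long_seq.value, count_ops(long_seq))    # 6.125 8
```

A sequence of two elements yields a graph of four operations and a sequence of four elements yields eight, without declaring any graph in advance. This is why the approach suits inputs of varying length such as text.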

NVIDIA framework

NVIDIA also provides support for DNN development, but only on top of their GPU platforms:

- Application: DNN
- Mid-level APIs: Caffe, PyTorch, Theano
- Optimized libraries: cuDNN, cuBLAS
- Language: CUDA
- HW: GPU

Data Set Finders

There are a lot of data sets on the internet for training DNNs. The following are general data set repositories that allow searching for the one you need:

- Kaggle: www.kaggle.com
- UCI Machine Learning Repository: mlr.cs.umass.edu/ml
- VisualData: www.visualdata.io
- CMU Library: guides.library.cmu.edu/machine-learning/datasets

Data sets are usually divided into categories.

Data sets

Natural images

- MNIST: handwritten digits (yann.lecun.com/exdb/mnist/).
- CIFAR10 / CIFAR100: 32×32 image dataset with 10 / 100 categories (www.cs.utoronto.ca/~kriz/cifar.html).
- COCO: large dataset for object detection, segmentation, and captioning (cocodataset.org).
- ImageNet: large image database organized according to the WordNet hierarchy (www.image-net.org).
- Pascal VOC: dataset for image classification, object detection, and segmentation (https://pjreddie.com/projects/pascal-voc-dataset-mirror/).
- COIL 20: 128×128 images of 20 objects taken at different rotation angles (www.cs.columbia.edu/CAVE/software/softlib/coil-20.php).
- COIL100: 128×128 images of 100 objects taken at different rotation angles (www1.cs.columbia.edu/CAVE/software/softlib/coil-100.php).

Faces

- Labelled Faces in the Wild: 13,000 images of faces collected from the web, labelled with the person name (vis-www.cs.umass.edu/lfw).
- Olivetti: images of several people's faces at different angles (www.cs.nyu.edu/~roweis/data.html).
- Sheffield: 564 images of 20 individuals each shown in a range of poses (https://www.sheffield.ac.uk/eee/research/iel/research/face).

Text

- 20 newsgroups: classification task to map word occurrences into 20 newsgroup IDs (qwone.com/~jason/20Newsgroups).
- Penn Treebank: used for next word prediction or next character prediction (corochann.com/penn-tree-bank-ptb-dataset-introduction-1456.html).
- Broadcast News: large text dataset used for next word prediction (https://github.com/cyrta/broadcast-news-videos-dataset).

Speech

- TIMIT Speech Corpus: DARPA Acoustic-Phonetic Continuous Speech Corpus for phoneme classification (github.com/philipperemy/timit).
- Aurora: TIMIT with noise and additional information (aurora.hsnr.de).

Music

- Piano-midi.de: classical piano pieces (www.piano-midi.de).
- Nottingham: over 1000 folk tunes (abc.sourceforge.net/NMD).
- MuseData: a collection of classical music scores (musedata.stanford.edu).
- JSB Chorales: a dataset of four-part harmonized chorales (https://github.com/czhuang/JSB-Chorales-dataset).
- FMA: a dataset For Music Analysis (github.com/mdeff/fma).
