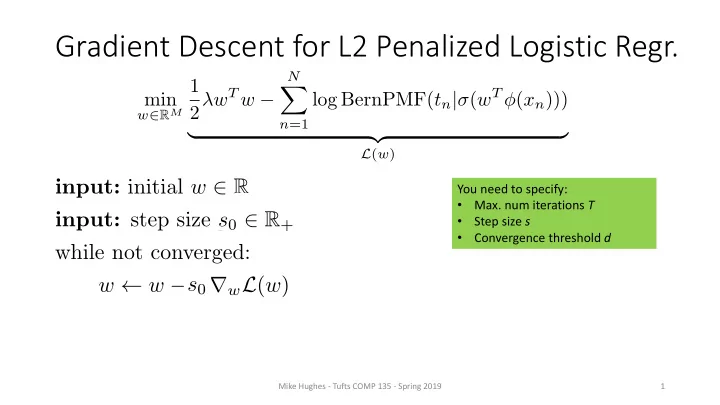

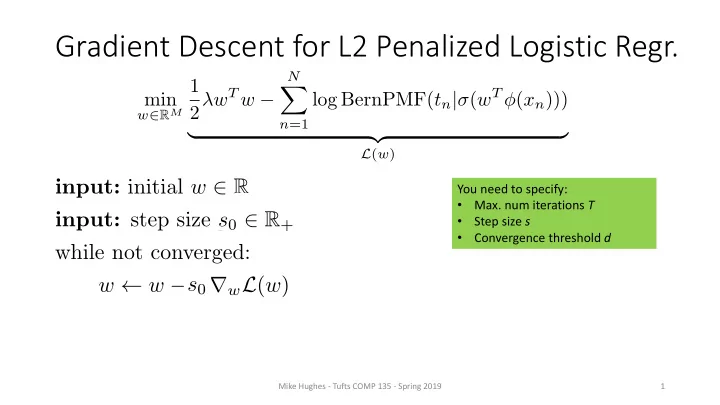

Gradient Descent for L2-Penalized Logistic Regression

$$\min_{w \in \mathbb{R}^M} \; \underbrace{-\sum_{n=1}^{N} \log \mathrm{BernPMF}\big(t_n \mid \sigma(w^T \phi(x_n))\big) + \frac{\lambda}{2} w^T w}_{L(w)}$$

input: initial $w \in \mathbb{R}^M$
input: initial step size $s_0 \in \mathbb{R}_+$
while not converged:
  $w \leftarrow w - s_t \nabla_w L(w)$
  $s_t \leftarrow \mathrm{decay}(s_0, t)$
  $t \leftarrow t + 1$

You need to specify:
• Max. number of iterations $T$
• Step size $s$
• Convergence threshold $d$

Mike Hughes - Tufts COMP 135 - Spring 2019
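A minimal NumPy sketch of the loop above, under stated assumptions: labels $t_n \in \{0, 1\}$, a constant step size (the decay function is taken to be the identity), and "converged" interpreted as $\|\nabla_w L(w)\| < d$. The feature map (raw features plus a bias column) and all function names are hypothetical, chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    # Logistic function written via tanh so large |z| does not overflow
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def loss_and_grad(w, Phi, t, lam):
    """Return L(w) and its gradient for L2-penalized logistic regression.

    L(w) = -sum_n log BernPMF(t_n | sigmoid(w^T phi(x_n))) + (lam / 2) w^T w
    """
    z = Phi @ w
    # -log BernPMF(t | sigmoid(z)) = t*log(1 + e^{-z}) + (1 - t)*log(1 + e^{z}), computed stably
    nll = np.sum(t * np.logaddexp(0.0, -z) + (1.0 - t) * np.logaddexp(0.0, z))
    loss = nll + 0.5 * lam * (w @ w)
    grad = Phi.T @ (sigmoid(z) - t) + lam * w
    return loss, grad

def gradient_descent(Phi, t, lam, s=0.01, T=5000, d=1e-6):
    """Fixed step size s; stop when ||grad|| < d or after T iterations."""
    w = np.zeros(Phi.shape[1])
    trace = []
    for _ in range(T):
        loss, grad = loss_and_grad(w, Phi, t, lam)
        trace.append(loss)
        if np.linalg.norm(grad) < d:
            break
        w = w - s * grad
    return w, trace

# Toy usage on synthetic data (hypothetical): phi(x) = [x, 1] appends a bias feature
rng = np.random.default_rng(0)
x = rng.normal(size=(100, 2))
t = (x[:, 0] + 0.5 * x[:, 1] + 0.3 * rng.normal(size=100) > 0).astype(float)
Phi = np.hstack([x, np.ones((100, 1))])
w, trace = gradient_descent(Phi, t, lam=1.0)
```

The returned `trace` holds the loss at every iteration, which is exactly what a trace plot needs.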


Will gradient descent always find the same solution? Yes, if the loss looks like this [Figure: a loss with a single minimum]. Not if multiple local minima exist.
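To make the "multiple local minima" case concrete, here is a small sketch on a hypothetical nonconvex 1D loss $g(y) = y^4 - 2y^2$, which has two separate minima at $y = -1$ and $y = +1$. The same fixed-step gradient descent lands in a different minimum depending only on where it starts; the step size and iteration count are illustrative choices.

```python
def grad_descent_1d(grad, y0, s=0.05, T=500):
    """Plain fixed-step gradient descent in 1D."""
    y = y0
    for _ in range(T):
        y = y - s * grad(y)
    return y

def grad_g(y):
    # Derivative of the hypothetical loss g(y) = y**4 - 2*y**2,
    # whose minima sit at y = -1 and y = +1
    return 4 * y**3 - 4 * y

y_right = grad_descent_1d(grad_g, y0=0.5)   # ends near +1
y_left = grad_descent_1d(grad_g, y0=-0.5)   # ends near -1
print(y_right, y_left)
```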

Loss for logistic regression is convex!
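One way to see what convexity buys in practice: with the L2 penalty ($\lambda > 0$) the loss is strictly convex, so gradient descent started from two very different initial weights should reach the same minimizer. The sketch below assumes a hypothetical toy dataset, a bias column in the features, and a fixed step size.

```python
import numpy as np

def grad_L(w, Phi, t, lam):
    # Gradient of the L2-penalized logistic loss: Phi^T (sigmoid(Phi w) - t) + lam * w
    p = 0.5 * (1.0 + np.tanh(0.5 * (Phi @ w)))
    return Phi.T @ (p - t) + lam * w

def run_gd(w0, Phi, t, lam, s=0.01, T=5000):
    """Fixed-step gradient descent from initial weights w0."""
    w = w0.copy()
    for _ in range(T):
        w = w - s * grad_L(w, Phi, t, lam)
    return w

rng = np.random.default_rng(1)
X = rng.normal(size=(50, 2))
t = (X[:, 0] - X[:, 1] > 0).astype(float)   # hypothetical toy labels
Phi = np.hstack([X, np.ones((50, 1))])       # raw features plus a bias column

w_a = run_gd(np.zeros(3), Phi, t, lam=1.0)
w_b = run_gd(np.array([5.0, -5.0, 5.0]), Phi, t, lam=1.0)
print(np.max(np.abs(w_a - w_b)))   # tiny: both runs reach the same minimizer
```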

Intuition: 1D gradient descent. Choosing a good step size matters! [Figure: gradient descent steps on a 1D function $g(y)$, plotted against $y$.]
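A quick way to build this intuition is to run gradient descent on the hypothetical 1D loss $g(y) = y^2$, where each update multiplies $y$ by $(1 - 2s)$. The four step sizes below are illustrative picks that show a crawl, fast convergence, oscillating convergence, and divergence.

```python
def gd_on_parabola(step, y0=1.0, T=20):
    """Run T steps of gradient descent on the hypothetical loss g(y) = y**2 (gradient 2*y)."""
    ys = [y0]
    for _ in range(T):
        ys.append(ys[-1] - step * 2 * ys[-1])
    return ys

# Per-step factor (1 - 2*step): 0.98 crawls, 0.2 converges fast,
# -0.8 oscillates but shrinks, -1.2 oscillates and blows up
for step in [0.01, 0.4, 0.9, 1.1]:
    ys = gd_on_parabola(step)
    print(f"step={step}: y after 20 steps = {ys[-1]:.3g}")
```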

Log likelihood vs. iterations. Figure credit: Emily Fox (UW). When maximizing likelihood, higher is better! (We could multiply by -1 and minimize instead.)

If step size is too small. Figure credit: Emily Fox (UW)

If step size is large. Figure credit: Emily Fox (UW)

If step size is too large. Figure credit: Emily Fox (UW)

If step size is way too large. Figure credit: Emily Fox (UW)

Rule for picking step sizes
• Never try just one!
• Usually: want the largest step size that doesn't diverge
• Try several values (exponentially spaced) until you:
  • find one clearly too small
  • find one clearly too large (unhelpful oscillation / divergence)
• Always make trace plots!
  • Show the loss, norm of the gradient, and parameter values versus epoch
• Smarter choices for step size:
  • Decaying methods
  • Search methods
  • Second-order methods
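A sketch of the sweep described above, assuming a hypothetical least-squares loss $f(w) = \tfrac12\|Aw - b\|^2$ as a stand-in objective. It tries exponentially spaced step sizes, records the quantities a trace plot would show (loss, gradient norm, parameters), flags runs whose final loss is non-finite or above the starting loss as diverged, and keeps the largest non-diverging step. Plotting (e.g. with matplotlib) is left out to keep the example self-contained.

```python
import numpy as np

def run_with_trace(step, A, b, T=100):
    """Fixed-step GD on the hypothetical loss f(w) = 0.5 * ||A w - b||^2, recording traces."""
    w = np.zeros(A.shape[1])
    trace = {"loss": [], "grad_norm": [], "w": []}
    for _ in range(T):
        r = A @ w - b
        g = A.T @ r
        trace["loss"].append(0.5 * r @ r)
        trace["grad_norm"].append(np.linalg.norm(g))
        trace["w"].append(w.copy())
        w = w - step * g
    return trace

rng = np.random.default_rng(0)
A = rng.normal(size=(50, 3))
b = rng.normal(size=50)

steps = 10.0 ** np.arange(-4, 1)   # 1e-4, 1e-3, 1e-2, 1e-1, 1e0: exponentially spaced
ok = []
with np.errstate(over="ignore", invalid="ignore"):   # diverging runs overflow; we detect that below
    for s in steps:
        tr = run_with_trace(s, A, b)
        final = tr["loss"][-1]
        diverged = (not np.isfinite(final)) or final > tr["loss"][0]
        print(f"step={s:g}  final loss={final:.3g}  diverged={diverged}")
        if not diverged:
            ok.append(s)
best = max(ok)   # largest step size that did not diverge
```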

Decaying step sizes

input: initial $w \in \mathbb{R}^M$
input: initial step size $s_0 \in \mathbb{R}_+$
while not converged:
  $w \leftarrow w - s_t \nabla_w L(w)$
  $s_t \leftarrow \mathrm{decay}(s_0, t)$
  $t \leftarrow t + 1$

Linear decay: $s_t = \dfrac{s_0}{kt}$
Exponential decay: $s_t = s_0 e^{-kt}$

Often helpful, but hard to get right!
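The two schedules can be sketched as plain functions and plugged into a gradient descent loop. The decay rates `k`, the starting step `s0 = 0.4`, and the hypothetical loss $g(w) = w^2$ are illustrative assumptions; $t$ starts at 1 so the linear schedule never divides by zero.

```python
import math

def linear_decay(s0, t, k=1.0):
    # The slide's "linear decay": s_t = s0 / (k t); assumes t >= 1
    return s0 / (k * t)

def exponential_decay(s0, t, k=0.01):
    # Exponential decay: s_t = s0 * exp(-k t)
    return s0 * math.exp(-k * t)

def gd_with_decay(grad, w0, s0, decay, T=100):
    """Gradient descent where step t uses s_t = decay(s0, t)."""
    w = w0
    for t in range(1, T + 1):
        s_t = decay(s0, t)
        w = w - s_t * grad(w)
    return w

def grad_g(w):
    return 2.0 * w   # gradient of the hypothetical loss g(w) = w**2

w_lin = gd_with_decay(grad_g, 1.0, s0=0.4, decay=linear_decay)
w_exp = gd_with_decay(grad_g, 1.0, s0=0.4, decay=exponential_decay)
print(w_lin, w_exp)
```

With these particular settings the linear schedule shrinks the step so fast that `w_lin` stays noticeably farther from the minimum at 0 than `w_exp`, one small illustration of why decay schedules are hard to get right.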

Searching for a good step size

Goal: $\min_x f(x)$
Step direction: $\Delta x = -\nabla_x f(x)$
[Figure: possible step lengths along the direction $\Delta x$.]

Exact line search: expensive, but the gold standard. Search for the best scalar $s \ge 0$ such that:
$$s^* = \arg\min_{s \ge 0} f(x + s\,\Delta x)$$
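For a quadratic objective the exact line search has a closed form, which makes a handy worked example. Assuming a hypothetical $f(x) = \tfrac12 x^T A x - b^T x$ with symmetric positive definite $A$, gradient $g = Ax - b$ and $\Delta x = -g$, setting $\frac{d}{ds} f(x + s\Delta x) = 0$ gives $s^* = \dfrac{g^T g}{g^T A g}$. The sketch checks this against a grid of step lengths.

```python
import numpy as np

A = np.array([[3.0, 1.0], [1.0, 2.0]])   # hypothetical SPD matrix
b = np.array([1.0, -1.0])

def f(x):
    return 0.5 * x @ A @ x - b @ x

def exact_step(x):
    g = A @ x - b                       # gradient of f at x
    dx = -g                             # steepest-descent direction
    s_star = (g @ g) / (dx @ A @ dx)    # argmin_{s >= 0} f(x + s dx), closed form for quadratics
    return s_star, dx

x = np.array([2.0, 2.0])
s_star, dx = exact_step(x)
grid = np.linspace(0.0, 2.0 * s_star, 201)   # candidate step lengths to compare against
print(s_star, min(grid, key=lambda s: f(x + s * dx)))
```

For general (non-quadratic) $f$ there is no such formula, and the inner 1D minimization is what makes exact line search expensive.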

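Searching for a good step size

Goal: $\min_x f(x)$
Step direction: $\Delta x = -\nabla_x f(x)$
[Figure: possible step lengths along the direction $\Delta x$.]

Backtracking line search: more efficient!
$s = 1$
while $\hat f(x + s\,\Delta x) < f(x + s\,\Delta x)$: $s \leftarrow 0.9 \cdot s$

Here $\hat f(x + s\,\Delta x) = f(x) + \alpha\, s\, \nabla_x f(x)^T \Delta x$ is the linear extrapolation from $x$ with its slope reduced by a factor $0 < \alpha < 1$.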
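A sketch of the backtracking loop, used inside plain gradient descent. The shrink factor 0.9 follows the slide; `alpha = 0.3`, the iteration caps, and the test function $f(x) = \sum_i \cosh(x_i)$ (minimized at $x = 0$) are hypothetical choices for illustration.

```python
import numpy as np

def backtracking_line_search(f, grad_f, x, alpha=0.3, shrink=0.9, max_iter=200):
    """Shrink s from 1 until f(x + s dx) lies on or below the reduced-slope line f_hat."""
    g = grad_f(x)
    dx = -g                  # steepest-descent direction
    fx = f(x)
    slope = g @ dx           # directional derivative at s = 0 (non-positive)
    s = 1.0
    # f_hat(x + s dx) = f(x) + alpha * s * slope
    for _ in range(max_iter):
        if f(x + s * dx) <= fx + alpha * s * slope:
            break            # sufficient decrease: accept s
        s = shrink * s       # f_hat < f: step too long, shrink it
    return s, dx

def f(x):
    return np.sum(np.cosh(x))   # hypothetical smooth convex test function

def grad_f(x):
    return np.sinh(x)

x = np.array([3.0, -2.0])
for _ in range(50):
    s, dx = backtracking_line_search(f, grad_f, x)
    x = x + s * dx
print(x)
```

Each trial only needs one function evaluation, which is why this is far cheaper than solving the exact 1D minimization.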

Backtracking line search

Python: `scipy.optimize.line_search` (this SciPy routine accepts a step only if it satisfies the strong Wolfe conditions: sufficient decrease plus a curvature condition)

[Figure: linear extrapolation with slope reduced by a factor $\alpha$, showing acceptable step sizes and rejected step sizes.]
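A usage sketch for `scipy.optimize.line_search`, which takes the objective, its gradient, the current point, and a search direction, and returns a 6-tuple whose first entry is the step length (or `None` if no acceptable step was found). The objective and starting point are hypothetical.

```python
import numpy as np
from scipy.optimize import line_search

def f(x):
    return np.sum(np.cosh(x))   # hypothetical smooth convex objective

def grad_f(x):
    return np.sinh(x)

x = np.array([1.0, -0.5])
p = -grad_f(x)                  # steepest-descent direction
alpha, fc, gc, new_fval, old_fval, new_slope = line_search(f, grad_f, x, p)
if alpha is None:
    print("line search did not find an acceptable step")
else:
    print(alpha, f(x), f(x + alpha * p))
```

Always check for `alpha is None` before taking the step; the routine signals failure that way rather than by raising an exception.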

More resources on step sizes! Online textbook: Convex Optimization, http://web.stanford.edu/~boyd/cvxbook/bv_cvxbook.pdf

2nd-order methods for gradient descent. Big idea: the 2nd derivative can help!

Newton's method: use the second derivative to rescale the step size!

Goal: $\min_x f(x)$
Step direction: $\Delta x = -H(x)^{-1} \nabla_x f(x)$

Will step directly to the minimum if $f$ is quadratic (with a positive definite Hessian)! In high dimensions, we need the Hessian matrix $H(x)$.
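To check the "steps directly to the minimum" claim, take a hypothetical quadratic $f(x) = \tfrac12 x^T A x - b^T x$, whose Hessian is the constant matrix $A$. One Newton step from any starting point lands on the minimizer $A^{-1}b$. The sketch solves the linear system $H\,\Delta x = -\nabla f$ rather than forming $H^{-1}$ explicitly, which is the usual numerically safer choice.

```python
import numpy as np

A = np.array([[4.0, 1.0], [1.0, 3.0]])   # hypothetical SPD Hessian
b = np.array([1.0, 2.0])

def grad_f(x):
    return A @ x - b       # gradient of f(x) = 0.5 x^T A x - b^T x

def hess_f(x):
    return A               # Hessian is constant for a quadratic

x0 = np.array([10.0, -7.0])
dx = -np.linalg.solve(hess_f(x0), grad_f(x0))   # Newton direction, no explicit inverse
x1 = x0 + dx
print(np.linalg.norm(grad_f(x1)))   # essentially zero, up to floating-point error
```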

Animation of Newton's method [Animation: Newton's method applied to $f'(x)$.] To optimize, we want to find zeros of the first derivative!
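In 1D this view gives the update $x \leftarrow x - f'(x)/f''(x)$: Newton's root-finding iteration applied to $f'$. A sketch on the hypothetical objective $f(x) = e^x - 2x$, whose derivative $e^x - 2$ vanishes at $x = \log 2$; the starting point and iteration count are illustrative.

```python
import math

def newton_minimize_1d(df, d2f, x0, T=20):
    """Newton's method on f'(x) = 0: x <- x - f'(x) / f''(x)."""
    x = x0
    for _ in range(T):
        x = x - df(x) / d2f(x)
    return x

# Hypothetical example: f(x) = exp(x) - 2x, so f'(x) = exp(x) - 2 and f''(x) = exp(x)
x_star = newton_minimize_1d(lambda x: math.exp(x) - 2.0, lambda x: math.exp(x), x0=0.0)
print(x_star, math.log(2.0))
```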

L-BFGS: gold-standard approximate 2nd-order GD

Python: `scipy.optimize.fmin_l_bfgs_b`

L-BFGS: Limited-memory Broyden–Fletcher–Goldfarb–Shanno (BFGS)
• Provide loss and gradient functions
• Approximates the Hessian via the recent history of gradient steps

Newton step: $\Delta x = -H(x)^{-1} \nabla_x f(x)$. In high dimensions this needs the Hessian matrix, but the Hessian is quadratic in the length of $x$, so it is expensive.
Instead, use a low-rank approximation $\hat H(x)$: $\Delta x = -\hat H(x)^{-1} \nabla_x f(x)$.
