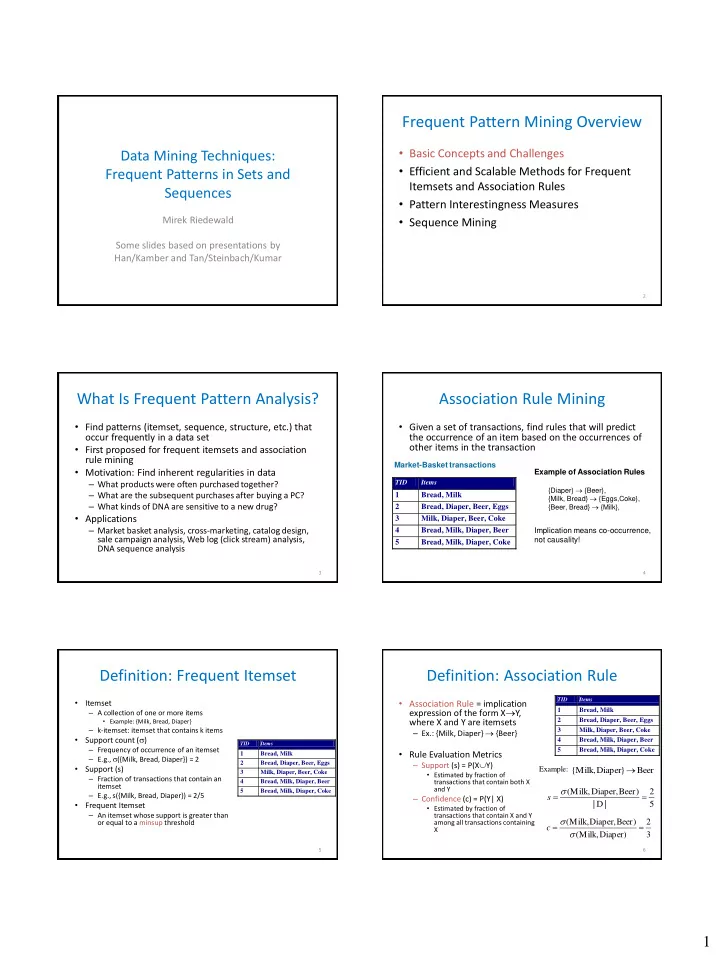

Frequent Pattern Mining Overview • Basic Concepts and Challenges Data Mining Techniques: • Efficient and Scalable Methods for Frequent Frequent Patterns in Sets and Itemsets and Association Rules Sequences • Pattern Interestingness Measures • Sequence Mining Mirek Riedewald Some slides based on presentations by Han/Kamber and Tan/Steinbach/Kumar 2 What Is Frequent Pattern Analysis? Association Rule Mining • Find patterns (itemset, sequence, structure, etc.) that • Given a set of transactions, find rules that will predict occur frequently in a data set the occurrence of an item based on the occurrences of • First proposed for frequent itemsets and association other items in the transaction rule mining Market-Basket transactions • Motivation: Find inherent regularities in data Example of Association Rules – What products were often purchased together? TID Items {Diaper} {Beer}, – What are the subsequent purchases after buying a PC? 1 Bread, Milk {Milk, Bread} {Eggs,Coke}, – What kinds of DNA are sensitive to a new drug? {Beer, Bread} {Milk}, 2 Bread, Diaper, Beer, Eggs • Applications 3 Milk, Diaper, Beer, Coke – Market basket analysis, cross-marketing, catalog design, 4 Bread, Milk, Diaper, Beer Implication means co-occurrence, sale campaign analysis, Web log (click stream) analysis, not causality! 5 Bread, Milk, Diaper, Coke DNA sequence analysis 3 4 Definition: Frequent Itemset Definition: Association Rule TID Items • • Association Rule = implication Itemset expression of the form X Y, – A collection of one or more items 1 Bread, Milk 2 Bread, Diaper, Beer, Eggs • Example: {Milk, Bread, Diaper} where X and Y are itemsets – k-itemset: itemset that contains k items – Ex.: {Milk, Diaper} {Beer} 3 Milk, Diaper, Beer, Coke Support count ( ) • 4 Bread, Milk, Diaper, Beer TID Items – Frequency of occurrence of an itemset 5 Bread, Milk, Diaper, Coke • Rule Evaluation Metrics 1 Bread, Milk – E.g., ({Milk, Bread, Diaper}) = 2 2 Bread, Diaper, Beer, Eggs – Support (s) = P(X Y) • Support (s) Example: { Milk , Diaper } Beer 3 Milk, Diaper, Beer, Coke • Estimated by fraction of – Fraction of transactions that contain an 4 Bread, Milk, Diaper, Beer transactions that contain both X itemset and Y ( Milk , Diaper, Beer ) 2 5 Bread, Milk, Diaper, Coke – E.g., s({Milk, Bread, Diaper}) = 2/5 – Confidence (c) = P(Y| X) s • | D | 5 Frequent Itemset • Estimated by fraction of – An itemset whose support is greater than transactions that contain X and Y ( Milk, Diaper, Beer ) 2 or equal to a minsup threshold among all transactions containing c X ( Milk , Diaper ) 3 5 6 1

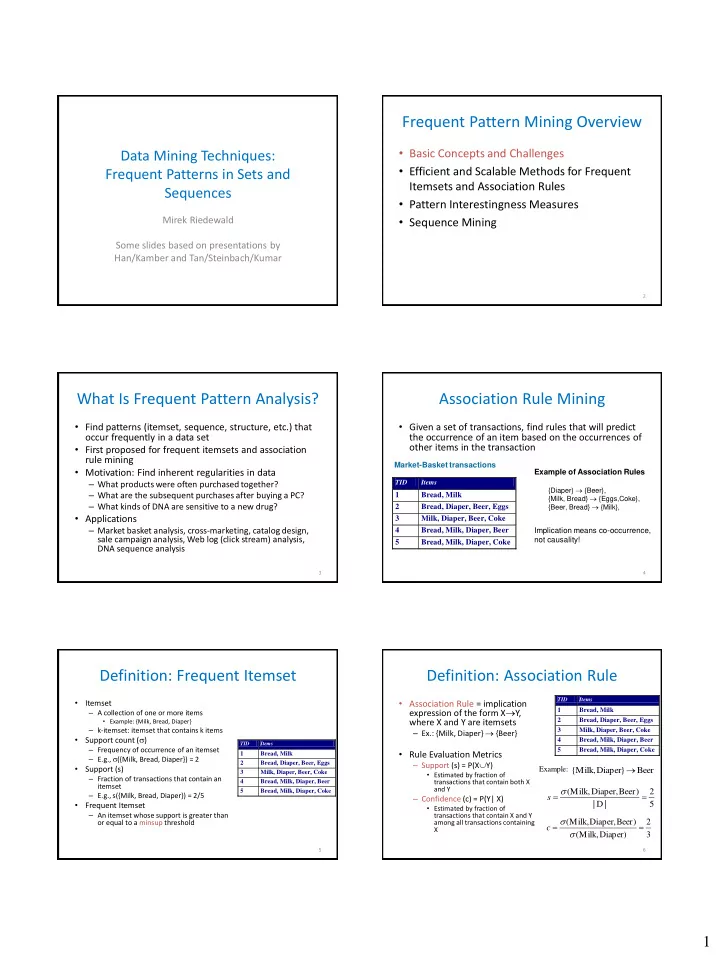

Association Rule Mining Task Mining Association Rules Example rules: • Given a transaction database DB, find all rules TID Items 1 Bread, Milk {Milk,Diaper} {Beer} (s=0.4, c=0.67) having support ≥ minsup and confidence ≥ {Milk,Beer} {Diaper} (s=0.4, c=1.0) 2 Bread, Diaper, Beer, Eggs {Diaper,Beer} {Milk} (s=0.4, c=0.67) minconf 3 Milk, Diaper, Beer, Coke {Beer} {Milk,Diaper} (s=0.4, c=0.67) 4 Bread, Milk, Diaper, Beer • Brute-force approach: {Diaper} {Milk,Beer} (s=0.4, c=0.5) 5 Bread, Milk, Diaper, Coke {Milk} {Diaper,Beer} (s=0.4, c=0.5) – List all possible association rules – Compute support and confidence for each rule Observations : • All the above rules are binary partitions of the same itemset – Remove rules that fail the minsup or minconf {Milk, Diaper, Beer} thresholds • Rules originating from the same itemset have identical support but can have different confidence – Computationally prohibitive! • Thus, we may decouple the support and confidence requirements 7 8 Mining Association Rules Frequent Itemset Generation null • Two-step approach: A B C D E 1. Frequent Itemset Generation • Generate all itemsets that have support minsup AB AC AD AE BC BD BE CD CE DE 2. Rule Generation • Generate high-confidence rules from each frequent itemset, where each rule is a binary partitioning of the ABC ABD ABE ACD ACE ADE BCD BCE BDE CDE frequent itemset • Frequent itemset generation is still Given d items, there computationally expensive ABCD ABCE ABDE ACDE BCDE are 2 d possible candidate itemsets ABCDE 9 10 Frequent Itemset Generation Computational Complexity • Brute-force approach: • Given d unique items, total number of itemsets = 2 d – Each itemset in the lattice is a candidate frequent itemset • Total number of possible association rules? – Count the support of each candidate by scanning the database – Match each transaction against every candidate 1 d d d k d k – Complexity O(N*M*w) => expensive since M=2 d R k j 1 1 List of k j Transactions Candidates d d 1 3 2 1 TID Items 1 Bread, Milk 2 Bread, Diaper, Beer, Eggs If d=6, R = 602 possible M 3 Milk, Diaper, Beer, Coke rules N Bread, Milk, Diaper, Beer 4 5 Bread, Milk, Diaper, Coke w 11 12 2

Frequent Pattern Mining Overview Reducing Number of Candidates • Apriori principle: • Basic Concepts and Challenges – If an itemset is frequent, then all of its subsets must • Efficient and Scalable Methods for Frequent also be frequent • Apriori principle holds due to the following Itemsets and Association Rules property of the support measure: • Pattern Interestingness Measures X , Y : ( X Y ) s ( X ) s ( Y ) • Sequence Mining – Support of an itemset never exceeds the support of its subsets – This is known as the anti-monotone property of support 13 14 Illustrating the Apriori Principle Illustrating the Apriori Principle null null Items (1-itemsets) Item Count A A B B C C D D E E Bread 4 Coke 2 Pairs (2-itemsets) Milk 4 Itemset Count Beer 3 {Bread,Milk} 3 Diaper 4 (No need to generate AB AB AC AC AD AD AE AE BC BC BD BD BE BE CD CD CE CE DE DE {Bread,Beer} 2 Eggs 1 candidates involving Coke {Bread,Diaper} 3 {Milk,Beer} 2 or Eggs) Found to be {Milk,Diaper} 3 infrequent {Beer,Diaper} 3 ABC ABC ABD ABD ABE ABE ACD ACD ACE ACE ADE ADE BCD BCD BCE BCE BDE BDE CDE CDE Minimum Support = 3 Triplets (3-itemsets) If every subset is considered, Itemset Count 6 C 1 + 6 C 2 + 6 C 3 = 41 {Bread,Milk,Diaper} 3 ABCD ABCD ABCE ABCE ABDE ABDE ACDE ACDE BCDE BCDE With support-based pruning, Pruned 6 + 6 + 1 = 13 ABCDE ABCDE supersets 15 16 Apriori Algorithm Important Details of Apriori • How to generate candidates? • Generate L 1 = frequent itemsets of length k=1 – Step 1: self-joining L k • Repeat until no new frequent itemsets are found – Step 2: pruning – Generate C k+1 , the length-(k+1) candidate itemsets, • Example of Candidate-generation for from L k L 3 ={ {a,b,c}, {a,b,d}, {a,c,d}, {a,c,e}, {b,c,d} } – Prune candidate itemsets in C k+1 containing subsets of – Self-joining L 3 • {a,b,c,d} from {a,b,c} and {a,b,d} length k that are not in L k (and hence infrequent) • {a,c,d,e} from {a,c,d} and {a,c,e} – Count support of each remaining candidate by – Pruning: scanning DB; eliminate infrequent ones from C k+1 • {a,c,d,e} is removed because {a,d,e} is not in L 3 – L k+1 =C k+1 ; k = k+1 – C 4 ={ {a,b,c,d} } 17 18 3

How to Generate Candidates? How to Count Supports of Candidates? • Step 1: self-joining L k-1 • Why is counting supports of candidates a problem? insert into C k – Total number of candidates can be very large select p.item 1 , p.item 2 ,…, p.item k-1 , q.item k-1 – One transaction may contain many candidates from L k-1 p, L k-1 q where p.item 1 =q.item 1 AND … AND p.item k-2 =q.item k-2 AND p.item k-1 < q.item k-1 • Method: – Candidate itemsets stored in a hash-tree • Step 2: pruning – Leaf node contains list of itemsets – forall itemsets c in C k do – Interior node contains a hash table • forall (k-1)-subsets s of c do – Subset function finds all candidates contained in a – if (s is not in L k-1 ) then delete c from C k transaction 19 20 Generate Hash Tree Subset Operation Using Hash Tree Hash Function 1 2 3 5 6 transaction • Suppose we have 15 candidate itemsets of length 3: – {1 4 5}, {1 2 4}, {4 5 7}, {1 2 5}, {4 5 8}, {1 5 9}, {1 3 6}, {2 3 4}, {5 6 7}, {3 1 + 2 3 5 6 4 5}, {3 5 6}, {3 5 7}, {6 8 9}, {3 6 7}, {3 6 8} 2 + 3 5 6 1,4,7 3,6,9 • We need: 2,5,8 – Hash function 3 + 5 6 – Max leaf size: max number of itemsets stored in a leaf node (if number of candidate itemsets exceeds max leaf size, split the node) 2 3 4 5 6 7 1 4 5 1 3 6 2 3 4 Hash function 3 4 5 3 5 6 3 6 7 5 6 7 3,6,9 1,4,7 3 6 7 3 5 7 3 6 8 1 4 5 3 5 6 3 4 5 1 3 6 3 6 8 6 8 9 2,5,8 3 5 7 1 2 4 1 5 9 1 2 5 6 8 9 1 2 4 4 5 7 4 5 8 1 2 5 1 5 9 4 5 7 4 5 8 21 22 Subset Operation Using Hash Tree Subset Operation Using Hash Tree Hash Function Hash Function transaction transaction 1 2 3 5 6 1 2 3 5 6 1 + 2 3 5 6 1 + 2 3 5 6 2 + 3 5 6 2 + 3 5 6 1,4,7 3,6,9 1,4,7 3,6,9 1 2 + 3 5 6 1 2 + 3 5 6 2,5,8 2,5,8 3 + 5 6 3 + 5 6 1 3 + 5 6 1 3 + 5 6 2 3 4 2 3 4 1 5 + 6 1 5 + 6 5 6 7 5 6 7 1 4 5 1 3 6 1 4 5 1 3 6 3 4 5 3 5 6 3 6 7 3 4 5 3 5 6 3 6 7 3 5 7 3 6 8 3 5 7 3 6 8 6 8 9 6 8 9 1 2 4 1 5 9 1 2 4 1 5 9 1 2 5 1 2 5 4 5 7 4 5 7 4 5 8 4 5 8 Match transaction against 9 out of 15 candidates 23 24 4

Recommend

More recommend