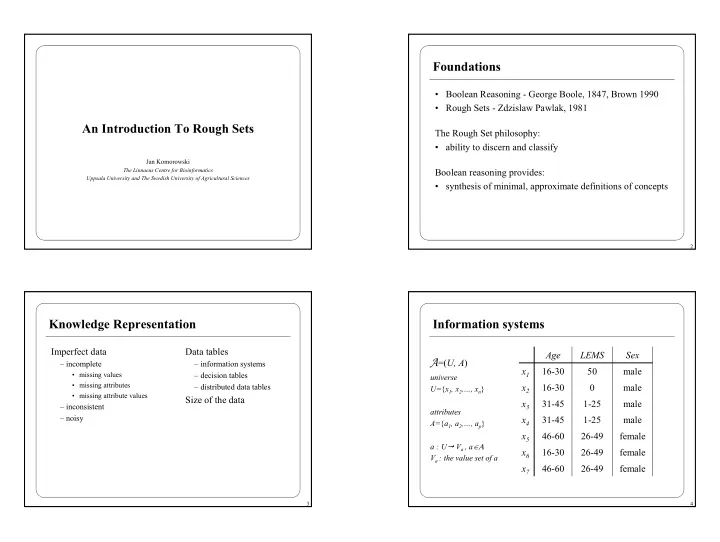

An Introduction To Rough Sets

Jan Komorowski, The Linnaeus Centre for Bioinformatics, Uppsala University and The Swedish University of Agricultural Sciences

Foundations

• Boolean Reasoning - George Boole, 1847, Brown 1990

• Rough Sets - Zdzislaw Pawlak, 1981

The Rough Set philosophy:

• ability to discern and classify

Boolean reasoning provides:

• synthesis of minimal, approximate definitions of concepts

Knowledge Representation

Data tables

– information systems

– decision tables

– distributed data tables

Imperfect data

– incomplete (missing values, missing attributes, missing attribute values)

– inconsistent attributes

– noisy

Size of the data

Information systems

$\mathcal{A} = (U, A)$

• universe: $U = \{x_1, x_2, \dots, x_n\}$

• attributes: $A = \{a_1, a_2, \dots, a_p\}$

• $a : U \to V_a$ for each $a \in A$, where $V_a$ is the value set of $a$

| | Age | LEMS | Sex |
|---|---|---|---|
| x1 | 16-30 | 50 | male |
| x2 | 16-30 | 0 | male |
| x3 | 31-45 | 1-25 | male |
| x4 | 31-45 | 1-25 | male |
| x5 | 46-60 | 26-49 | female |
| x6 | 16-30 | 26-49 | female |
| x7 | 46-60 | 26-49 | female |

Indiscernibility

$IND_{\mathcal{A}}(B) = \{(x, x') \in U^2 \mid \forall a \in B,\ a(x) = a(x')\}$, where $B \subseteq A$ is a subset of attributes.

Example (Age, LEMS, Sex table above):

• $IND_{\mathcal{A}}(\{Age\}) = \{\{x_1, x_2, x_6\}, \{x_3, x_4\}, \{x_5, x_7\}\}$

• $IND_{\mathcal{A}}(\{LEMS\}) = \{\{x_1\}, \{x_2\}, \{x_3, x_4\}, \{x_5, x_6, x_7\}\}$

• $IND_{\mathcal{A}}(\{Age, LEMS\}) = \{\{x_1\}, \{x_2\}, \{x_3, x_4\}, \{x_5, x_7\}, \{x_6\}\}$

Nota bene: $IND_{\mathcal{A}}(\{Age, LEMS, Sex\}) = IND_{\mathcal{A}}(\{Age, LEMS\})$

Decision system

$\mathcal{A} = (U, A \cup \{d\})$ is a decision system, where $d$ is the decision attribute.

| | Age | LEMS | Walk |
|---|---|---|---|
| x1 | 16-30 | 50 | Yes |
| x2 | 16-30 | 0 | No |
| x3 | 31-45 | 1-25 | No |
| x4 | 31-45 | 1-25 | Yes |
| x5 | 46-60 | 26-49 | No |
| x6 | 16-30 | 26-49 | Yes |
| x7 | 46-60 | 26-49 | No |

Objects x3 and x4, and x5 and x7, are pairwise indiscernible.

NB: x3 and x4 have different decisions (outcomes).

Decision rules, for example: "if Age is 16-30 and LEMS is 50 then Walk is Yes"
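The indiscernibility partitions in the example can be checked mechanically. The following is a minimal sketch, not part of the original slides: it groups objects by their values on the chosen attributes. The names `TABLE` and `ind_classes` are illustrative assumptions.

```python
from collections import defaultdict

# Information system from the slides: objects x1..x7, attributes Age, LEMS, Sex.
TABLE = {
    "x1": {"Age": "16-30", "LEMS": "50", "Sex": "male"},
    "x2": {"Age": "16-30", "LEMS": "0", "Sex": "male"},
    "x3": {"Age": "31-45", "LEMS": "1-25", "Sex": "male"},
    "x4": {"Age": "31-45", "LEMS": "1-25", "Sex": "male"},
    "x5": {"Age": "46-60", "LEMS": "26-49", "Sex": "female"},
    "x6": {"Age": "16-30", "LEMS": "26-49", "Sex": "female"},
    "x7": {"Age": "46-60", "LEMS": "26-49", "Sex": "female"},
}


def ind_classes(table, attrs):
    """Return the equivalence classes of IND(attrs) as a set of frozensets.

    `ind_classes` is a hypothetical helper name, not taken from the slides.
    """
    groups = defaultdict(set)
    for obj, row in table.items():
        # Objects with identical values on every attribute in attrs
        # share a key and therefore land in the same class.
        groups[tuple(row[a] for a in attrs)].add(obj)
    return {frozenset(g) for g in groups.values()}


for attrs in (["Age"], ["LEMS"], ["Age", "LEMS"], ["Age", "LEMS", "Sex"]):
    classes = sorted(sorted(c) for c in ind_classes(TABLE, attrs))
    print(attrs, classes)
```

Adding Sex to {Age, LEMS} leaves the partition unchanged, which is the "nota bene" on the slide.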

Set approximation

$\mathcal{A} = (U, A)$, $B \subseteq A$, $X \subseteq U$

• $B$-lower approximation of $X$: $\underline{B}X = \{x \mid [x]_B \subseteq X\}$

• $B$-upper approximation of $X$: $\overline{B}X = \{x \mid [x]_B \cap X \neq \emptyset\}$

• $B$-boundary region of $X$: $BN_B(X) = \overline{B}X - \underline{B}X$

A set is rough if $BN_B(X) \neq \emptyset$.

Accuracy of approximation: $\alpha_B(X) = \dfrac{|\underline{B}X|}{|\overline{B}X|}$, with $0 \le \alpha_B(X) \le 1$ (vagueness of $X$).

Set approximation

Example: approximating the set of Walk-ing patients, using the two condition attributes Age and LEMS.

[Figure: $\underline{B}Walk \subset Walk \subset \overline{B}Walk$. Equivalence classes contained in the corresponding regions are shown.]

Rough Membership

The rough membership function quantifies the degree of relative overlap between the set $X$ and the equivalence class to which $x$ belongs.

$\mu_X^B : U \to [0, 1]$ and $\mu_X^B(x) = \dfrac{|[x]_B \cap X|}{|[x]_B|}$

Interpretation of $\mu_X^B(x)$: a frequency-based estimate of $\Pr(x \in X \mid x, B)$.

Reducts

Towards approximate minimal definitions of concepts

A reduct is a minimal set of attributes $B \subseteq A$ such that $IND_{\mathcal{A}}(B) = IND_{\mathcal{A}}(A)$.

| | Diploma | Experience | French | Reference |
|---|---|---|---|---|
| x1 | MBA | Medium | Yes | Excellent |
| x2 | MBA | Low | Yes | Neutral |
| x3 | MCE | Low | Yes | Good |
| x4 | MSc | High | Yes | Neutral |
| x5 | MSc | Medium | Yes | Neutral |
| x6 | MSc | High | Yes | Excellent |
| x7 | MBA | High | No | Good |
| x8 | MCE | Low | No | Excellent |

Example of a reduct: $IND_{\mathcal{A}}(\{Experience, Reference\}) = IND_{\mathcal{A}}(A)$
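The set-approximation and rough-membership definitions can be worked through on the Walk decision table from the earlier slides, with $B = \{Age, LEMS\}$ and $X$ the set of patients with Walk = Yes. This is a minimal sketch under that reading; the helper names (`eq_class_of`, `approximations`, `rough_membership`) are assumptions introduced for illustration.

```python
from collections import defaultdict
from fractions import Fraction

# Decision table from the slides: condition attributes Age, LEMS; decision Walk.
TABLE = {
    "x1": {"Age": "16-30", "LEMS": "50", "Walk": "Yes"},
    "x2": {"Age": "16-30", "LEMS": "0", "Walk": "No"},
    "x3": {"Age": "31-45", "LEMS": "1-25", "Walk": "No"},
    "x4": {"Age": "31-45", "LEMS": "1-25", "Walk": "Yes"},
    "x5": {"Age": "46-60", "LEMS": "26-49", "Walk": "No"},
    "x6": {"Age": "16-30", "LEMS": "26-49", "Walk": "Yes"},
    "x7": {"Age": "46-60", "LEMS": "26-49", "Walk": "No"},
}


def eq_class_of(table, attrs):
    """Map each object x to its equivalence class [x]_B (hypothetical helper)."""
    groups = defaultdict(set)
    for obj, row in table.items():
        groups[tuple(row[a] for a in attrs)].add(obj)
    return {obj: frozenset(groups[tuple(row[a] for a in attrs)])
            for obj, row in table.items()}


def approximations(table, attrs, target):
    """Return (lower, upper) approximations of target with respect to attrs."""
    cls = eq_class_of(table, attrs)
    lower = {x for x, c in cls.items() if c <= target}  # [x]_B is a subset of X
    upper = {x for x, c in cls.items() if c & target}   # [x]_B intersects X
    return lower, upper


def rough_membership(table, attrs, target, x):
    """|[x]_B intersect X| / |[x]_B| as an exact fraction."""
    c = eq_class_of(table, attrs)[x]
    return Fraction(len(c & target), len(c))


B = ["Age", "LEMS"]
WALK = {x for x, row in TABLE.items() if row["Walk"] == "Yes"}

lower, upper = approximations(TABLE, B, WALK)
boundary = upper - lower
accuracy = Fraction(len(lower), len(upper))

print("lower:", sorted(lower))
print("upper:", sorted(upper))
print("boundary:", sorted(boundary))
print("accuracy:", accuracy)
print("membership of x3:", rough_membership(TABLE, B, WALK, "x3"))
```

The boundary region is non-empty because x3 and x4 are indiscernible on Age and LEMS but have different decisions, so the set of walking patients is rough with respect to $B$.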

Discernibility Matrices and Functions

$\mathcal{A} = (U, A)$

A discernibility matrix of $\mathcal{A}$ is a symmetric $n \times n$ matrix with entries $c_{ij} = \{a \in A \mid a(x_i) \neq a(x_j)\}$ for $i, j = 1, \dots, n$.

A discernibility function $f_{\mathcal{A}}$ for an information system $\mathcal{A}$ is a Boolean function of $m$ Boolean variables $a_1^*, \dots, a_m^*$ (corresponding to attributes $a_1, \dots, a_m$):

$f_{\mathcal{A}}(a_1^*, \dots, a_m^*) = \bigwedge \{ \bigvee c_{ij}^* \mid 1 \le j \le i \le n,\ c_{ij}^* \neq \emptyset \}$, where $c_{ij}^* = \{a^* \mid a \in c_{ij}\}$.

Note: we use $a$ instead of $a^*$ in the sequel.

Discernibility Matrices and Functions

An implicant of a Boolean function $f$ is any conjunction of literals (variables or their negations) such that, if the values of these literals are true under an arbitrary valuation $v$ of variables, then the value of the function $f$ under $v$ is also true.

A prime implicant is a minimal implicant.

The set of all prime implicants of $f_{\mathcal{A}}$ determines the set of all reducts of $\mathcal{A}$.

Discernibility Matrices and Functions

Example Hiring: unreduced table

| | Diploma | Experience | French | Reference |
|---|---|---|---|---|
| x1 | MBA | Medium | Yes | Excellent |
| x2 | MBA | Low | Yes | Neutral |
| x3 | MCE | Low | Yes | Good |
| x4 | MSc | High | Yes | Neutral |
| x5 | MSc | Medium | Yes | Neutral |
| x6 | MSc | High | Yes | Excellent |
| x7 | MBA | High | No | Good |
| x8 | MCE | Low | No | Excellent |

Discernibility Matrices and Functions

Example Hiring: discernibility matrix (d = Diploma, e = Experience, f = French, r = Reference)

| | [x1] | [x2] | [x3] | [x4] | [x5] | [x6] | [x7] | [x8] |
|---|---|---|---|---|---|---|---|---|
| [x1] | ∅ | | | | | | | |
| [x2] | e, r | ∅ | | | | | | |
| [x3] | d, e, r | d, r | ∅ | | | | | |
| [x4] | d, e, r | d, e | d, e, r | ∅ | | | | |
| [x5] | d, r | d, e | d, e, r | e | ∅ | | | |
| [x6] | d, e | d, e, r | d, e, r | r | e, r | ∅ | | |
| [x7] | e, f, r | e, f, r | d, e, f | d, f, r | d, e, f, r | d, f, r | ∅ | |
| [x8] | d, e, f | d, f, r | f, r | d, e, f, r | d, e, f, r | d, e, f | d, e, r | ∅ |
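The Hiring discernibility matrix can be recomputed from the unreduced table, and the reducts recovered from it. The sketch below is an illustration rather than the slides' method: instead of simplifying the Boolean function symbolically, it enumerates attribute subsets by increasing size and keeps the minimal ones that intersect every non-empty matrix entry (these correspond to the prime implicants of $f_{\mathcal{A}}$). The names `HIRING`, `discernibility_matrix` and `reducts` are assumptions.

```python
from itertools import combinations

ATTRS = ["Diploma", "Experience", "French", "Reference"]

# Hiring table from the slides; tuple positions follow ATTRS.
HIRING = {
    "x1": ("MBA", "Medium", "Yes", "Excellent"),
    "x2": ("MBA", "Low", "Yes", "Neutral"),
    "x3": ("MCE", "Low", "Yes", "Good"),
    "x4": ("MSc", "High", "Yes", "Neutral"),
    "x5": ("MSc", "Medium", "Yes", "Neutral"),
    "x6": ("MSc", "High", "Yes", "Excellent"),
    "x7": ("MBA", "High", "No", "Good"),
    "x8": ("MCE", "Low", "No", "Excellent"),
}


def discernibility_matrix(table, attrs):
    """Map each unordered object pair to the set of attributes that differ."""
    matrix = {}
    for xi, xj in combinations(table, 2):
        matrix[(xi, xj)] = {
            a for a, u, v in zip(attrs, table[xi], table[xj]) if u != v
        }
    return matrix


def reducts(table, attrs):
    """Brute-force reducts: minimal subsets hitting every non-empty entry."""
    entries = [c for c in discernibility_matrix(table, attrs).values() if c]
    found = []
    for size in range(1, len(attrs) + 1):
        for subset in combinations(attrs, size):
            candidate = set(subset)
            if any(r <= candidate for r in found):
                continue  # contains a smaller reduct, so it is not minimal
            if all(candidate & c for c in entries):
                found.append(candidate)
    return found


matrix = discernibility_matrix(HIRING, ATTRS)
print(sorted(matrix[("x3", "x8")]))
print([sorted(r) for r in reducts(HIRING, ATTRS)])
```

Brute force is exponential in the number of attributes, which is acceptable for four attributes but not for realistic tables.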

Discernibility Matrices and Functions

Example Hiring: discernibility function (juxtaposed factors are conjoined; one row per matrix column)

$$
\begin{aligned}
f_{\mathcal{A}}(d, e, f, r) ={} & (e \vee r)(d \vee e \vee r)(d \vee e \vee r)(d \vee r)(d \vee e)(e \vee f \vee r)(d \vee e \vee f) \\
& (d \vee r)(d \vee e)(d \vee e)(d \vee e \vee r)(e \vee f \vee r)(d \vee f \vee r) \\
& (d \vee e \vee r)(d \vee e \vee r)(d \vee e \vee r)(d \vee e \vee f)(f \vee r) \\
& (e)(r)(d \vee f \vee r)(d \vee e \vee f \vee r) \\
& (e \vee r)(d \vee e \vee f \vee r)(d \vee e \vee f \vee r) \\
& (d \vee f \vee r)(d \vee e \vee f) \\
& (d \vee e \vee r)
\end{aligned}
$$

After simplification: $f_{\mathcal{A}}(d, e, f, r) = e \wedge r$

$IND_{\mathcal{A}}(\{Experience, Reference\}) = IND_{\mathcal{A}}(A)$

k-relative Reducts

Towards approximate description of decision classes

Example Hiring: consider one complete column in the discernibility matrix (here column [x6], filled in by symmetry).

| | [x1] | [x2] | [x3] | [x4] | [x5] | [x6] | [x7] | [x8] |
|---|---|---|---|---|---|---|---|---|
| [x1] | ∅ | | | | | d, e | | |
| [x2] | e, r | ∅ | | | | d, e, r | | |
| [x3] | d, e, r | d, r | ∅ | | | d, e, r | | |
| [x4] | d, e, r | d, e | d, e, r | ∅ | | r | | |
| [x5] | d, r | d, e | d, e, r | e | ∅ | e, r | | |
| [x6] | d, e | d, e, r | d, e, r | r | e, r | ∅ | | |
| [x7] | e, f, r | e, f, r | d, e, f | d, f, r | d, e, f, r | d, f, r | ∅ | |
| [x8] | d, e, f | d, f, r | f, r | d, e, f, r | d, e, f, r | d, e, f | d, e, r | ∅ |

$f_{\mathcal{A}}^{[x_6]}(d, e, f, r) = (d \vee e)(d \vee e \vee r)(d \vee e \vee r)(r)(e \vee r)(d \vee f \vee r)(d \vee e \vee f)$

After simplification: $f_{\mathcal{A}}^{[x_6]} = r \wedge (d \vee e)$

k-relative Reducts

Towards approximate description of decision classes

Example: "The sixth equivalence class can be discerned from the other classes by the attributes Reference and Diploma or Reference and Experience".

A Boolean function restricted to the conjunction running only over column $k$ in the discernibility matrix (instead of over all columns) gives the k-relative discernibility function.

The set of all prime implicants of this function determines the set of all k-relative reducts of $\mathcal{A}$.

k-relative reducts reveal the minimum amount of information needed to discern $x_k \in U$ (or, more precisely, $[x_k] \subseteq U$) from all other objects.

The Classification Problem

$\mathcal{A} = (U, A \cup \{d\})$

Given a training set (a decision sub-table $U' \subset U$), find an approximation of decision $d$.

Example Hiring: the (reordered) decision table

| | Diploma | Experience | French | Reference | Decision |
|---|---|---|---|---|---|
| x1 | MBA | Medium | Yes | Excellent | Accept |
| x4 | MSc | High | Yes | Neutral | Accept |
| x6 | MSc | High | Yes | Excellent | Accept |
| x7 | MBA | High | No | Good | Accept |
| x2 | MBA | Low | Yes | Neutral | Reject |
| x3 | MCE | Low | Yes | Good | Reject |
| x5 | MSc | Medium | Yes | Neutral | Reject |
| x8 | MCE | Low | No | Excellent | Reject |
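Returning to the k-relative reducts defined on the preceding slides, the [x6] example can be reproduced with a short sketch. As an assumption for illustration, it replaces symbolic simplification with a brute-force search for minimal attribute subsets that intersect every entry in column $k$ of the discernibility matrix. The names `HIRING` and `k_relative_reducts` are hypothetical.

```python
from itertools import combinations

ATTRS = ["Diploma", "Experience", "French", "Reference"]

# Condition attributes of the Hiring table; tuple positions follow ATTRS.
HIRING = {
    "x1": ("MBA", "Medium", "Yes", "Excellent"),
    "x2": ("MBA", "Low", "Yes", "Neutral"),
    "x3": ("MCE", "Low", "Yes", "Good"),
    "x4": ("MSc", "High", "Yes", "Neutral"),
    "x5": ("MSc", "Medium", "Yes", "Neutral"),
    "x6": ("MSc", "High", "Yes", "Excellent"),
    "x7": ("MBA", "High", "No", "Good"),
    "x8": ("MCE", "Low", "No", "Excellent"),
}


def k_relative_reducts(table, attrs, k):
    """Minimal attribute subsets that discern object k from all other objects."""
    # Column k of the discernibility matrix: one entry per other object.
    column = []
    for other, row in table.items():
        if other == k:
            continue
        diff = {a for a, u, v in zip(attrs, table[k], row) if u != v}
        if diff:
            column.append(diff)

    found = []
    for size in range(1, len(attrs) + 1):
        for subset in combinations(attrs, size):
            candidate = set(subset)
            if any(r <= candidate for r in found):
                continue  # a smaller solution is contained, so not minimal
            if all(candidate & entry for entry in column):
                found.append(candidate)
    return found


for reduct in k_relative_reducts(HIRING, ATTRS, "x6"):
    print(sorted(reduct))
```

The two subsets found for x6 match the prime implicants of $r \wedge (d \vee e)$, namely $r \wedge d$ and $r \wedge e$.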
