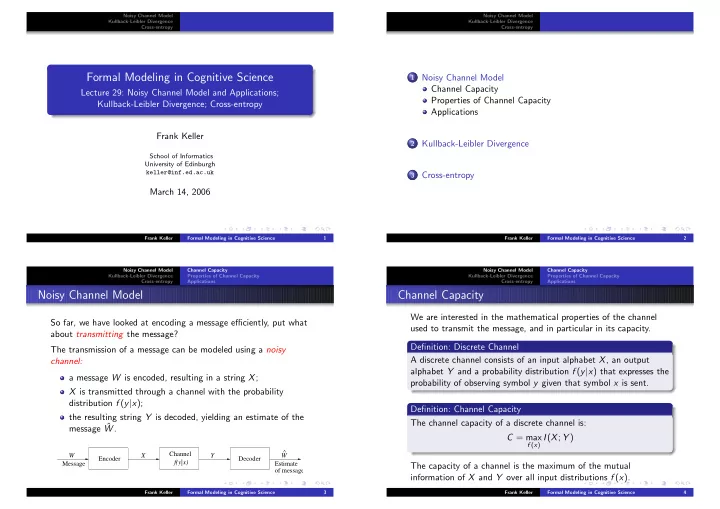

Formal Modeling in Cognitive Science

Lecture 29: Noisy Channel Model and Applications; Kullback-Leibler Divergence; Cross-entropy

Frank Keller, School of Informatics, University of Edinburgh, keller@inf.ed.ac.uk

March 14, 2006

Outline

1. Noisy Channel Model
   - Channel Capacity
   - Properties of Channel Capacity
   - Applications
2. Kullback-Leibler Divergence
3. Cross-entropy

Noisy Channel Model

So far, we have looked at encoding a message efficiently, but what about transmitting the message?

The transmission of a message can be modeled using a noisy channel:

- a message $W$ is encoded, resulting in a string $X$;
- $X$ is transmitted through a channel with the probability distribution $f(y \mid x)$;
- the resulting string $Y$ is decoded, yielding an estimate of the message $\hat{W}$.

[Diagram: $W$ (message) → Encoder → $X$ → Channel $f(y \mid x)$ → $Y$ → Decoder → $\hat{W}$ (estimate of message)]

Channel Capacity

We are interested in the mathematical properties of the channel used to transmit the message, and in particular in its capacity.

Definition: Discrete Channel

A discrete channel consists of an input alphabet $X$, an output alphabet $Y$ and a probability distribution $f(y \mid x)$ that expresses the probability of observing symbol $y$ given that symbol $x$ is sent.

Definition: Channel Capacity

The channel capacity of a discrete channel is:

$$C = \max_{f(x)} I(X;Y)$$

The capacity of a channel is the maximum of the mutual information of $X$ and $Y$ over all input distributions $f(x)$.
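The definition of channel capacity can be made concrete with a small numerical sketch. The code below is an illustration, not part of the lecture: it assumes a channel with a binary input alphabet, represents $f(y \mid x)$ as a nested list `channel[x][y]`, and approximates the maximization over $f(x)$ with a simple grid search. The function names `mutual_information` and `capacity_binary_input` are made up for this example.

```python
import math

def mutual_information(fx, channel):
    """I(X;Y) in bits, for input distribution fx[x] and channel[x][y] = f(y|x)."""
    n_out = len(channel[0])
    # Output marginal: f(y) = sum_x f(x) f(y|x)
    fy = [sum(fx[x] * channel[x][y] for x in range(len(fx))) for y in range(n_out)]
    total = 0.0
    for x, px in enumerate(fx):
        for y in range(n_out):
            joint = px * channel[x][y]  # f(x, y)
            if joint > 0:  # terms with zero probability contribute nothing
                total += joint * math.log2(channel[x][y] / fy[y])
    return total

def capacity_binary_input(channel, steps=1000):
    """Approximate C = max over f(x) of I(X;Y) by a grid search over q = f(0).

    Assumption: the input alphabet has exactly two symbols, so the input
    distribution is fully described by the single number q.
    """
    return max(
        (mutual_information([q, 1 - q], channel), q)
        for q in (i / steps for i in range(steps + 1))
    )  # (capacity estimate in bits, maximizing value of f(0))

# Usage: a channel that reproduces its input exactly.
noiseless = [[1.0, 0.0], [0.0, 1.0]]
capacity, best_q = capacity_binary_input(noiseless)
print(capacity, best_q)
```

A grid search is only practical for very small input alphabets; it is used here because it follows the definition directly.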

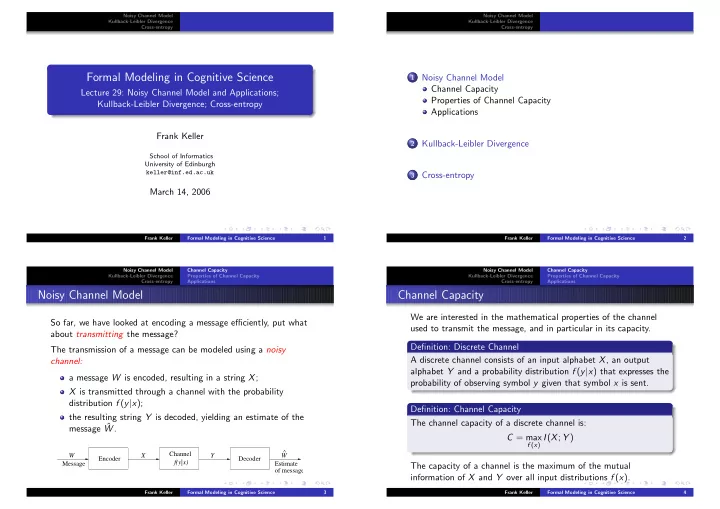

Channel Capacity

Example: Noiseless Binary Channel

Assume a binary channel whose input is reproduced exactly at the output. Each transmitted bit is received without error:

[Diagram: 0 → 0, 1 → 1]

The channel capacity of this channel is:

$$C = \max_{f(x)} I(X;Y) = 1 \text{ bit}$$

This maximum is achieved with $f(0) = \frac{1}{2}$ and $f(1) = \frac{1}{2}$.

Channel Capacity

Example: Binary Symmetric Channel

Assume a binary channel whose input is flipped (0 transmitted as 1 or 1 transmitted as 0) with probability $p$:

[Diagram: 0 → 0 and 1 → 1 with probability $1 - p$; 0 → 1 and 1 → 0 with probability $p$]

The mutual information of this channel is bounded by:

$$
\begin{aligned}
I(X;Y) &= H(Y) - H(Y \mid X) \\
       &= H(Y) - \sum_x f(x) H(Y \mid X = x) \\
       &= H(Y) - \sum_x f(x) H(p) \\
       &= H(Y) - H(p) \\
       &\leq 1 - H(p)
\end{aligned}
$$

Here $H(p)$ is the entropy of a binary variable with probabilities $p$ and $1 - p$, and the last step holds because $Y$ is binary, so $H(Y) \leq 1$. The channel capacity is therefore:

$$C = \max_{f(x)} I(X;Y) = 1 - H(p) \text{ bits}$$

Channel Capacity

A binary data sequence of length 10,000 transmitted over a binary symmetric channel with $p = 0.1$:

[Figure: binary symmetric channel with transition probabilities $1 - p$ and $p$]

Properties of Channel Capacity

Theorem: Properties of Channel Capacity

1. $C \geq 0$, since $I(X;Y) \geq 0$;
2. $C \leq \log |X|$, since $C = \max I(X;Y) \leq \max H(X) \leq \log |X|$;
3. $C \leq \log |Y|$ for the same reason.
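The binary symmetric channel result can be checked with a short sketch. The code below is an illustration under stated assumptions, not the lecture's own material: `binary_entropy`, `bsc_capacity` and `transmit` are hypothetical helper names, and the random seed is arbitrary. It evaluates $C = 1 - H(p)$ and simulates sending 10,000 random bits through a channel with $p = 0.1$.

```python
import math
import random

def binary_entropy(p):
    """H(p) in bits for a binary variable with probabilities p and 1 - p."""
    if p in (0.0, 1.0):
        return 0.0  # by convention, 0 * log 0 = 0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def bsc_capacity(p):
    """Capacity of a binary symmetric channel with flip probability p."""
    return 1.0 - binary_entropy(p)

def transmit(bits, p, rng):
    """Send bits through a binary symmetric channel: flip each bit with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]

# Usage: 10,000 random bits over a channel with p = 0.1.
rng = random.Random(0)  # arbitrary seed, only for reproducibility
sent = [rng.randint(0, 1) for _ in range(10_000)]
received = transmit(sent, 0.1, rng)
error_rate = sum(s != r for s, r in zip(sent, received)) / len(sent)
print(f"capacity: {bsc_capacity(0.1):.4f} bits, observed error rate: {error_rate:.4f}")
```

The observed error rate should be close to $p$. At $p = 0.5$ the capacity drops to 0, because the output is then independent of the input.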

Applications of the Noisy Channel Model

The noisy channel can be applied to decoding processes involving linguistic information. A typical formulation of such a problem is:

- we start with a linguistic input $I$;
- $I$ is transmitted through a noisy channel with the probability distribution $f(o \mid i)$;
- the resulting output $O$ is decoded, yielding an estimate of the input $\hat{I}$.

[Diagram: $I$ → Noisy Channel $f(o \mid i)$ → $O$ → Decoder → $\hat{I}$]

Applications of the Noisy Channel Model

| Application | Input | Output | $f(i)$ | $f(o \mid i)$ |
| --- | --- | --- | --- | --- |
| Machine translation | target language word sequences | source language word sequences | target language model | translation model |
| Optical character recognition | actual text | text with mistakes | language model | model of OCR errors |
| Part of speech tagging | POS sequences | word sequences | probability of POS sequences | $f(w \mid t)$ |
| Speech recognition | word sequences | speech signal | language model | acoustic model |

Applications of the Noisy Channel Model

Let's look at machine translation in more detail. Assume that the French text ($F$) passed through a noisy channel and came out as English ($E$). We decode it to estimate the original French ($\hat{F}$):

[Diagram: $F$ → Noisy Channel $f(e \mid f)$ → $E$ → Decoder → $\hat{F}$]

We compute $\hat{F}$ using Bayes' theorem:

$$\hat{F} = \arg\max_f f(f \mid e) = \arg\max_f \frac{f(f)\, f(e \mid f)}{f(e)} = \arg\max_f f(f)\, f(e \mid f)$$

Here $f(e \mid f)$ is the translation model, $f(f)$ is the French language model, and $f(e)$ is the English language model (constant).

Example Output: Spanish–English

we all know very well that the current treaties are insufficient and that , in the future , it will be necessary to develop a better structure and different for the european union , a structure more constitutional also make it clear what the competences of the member states and which belong to the union . messages of concern in the first place just before the economic and social problems for the present situation , and in spite of sustained growth , as a result of years of effort on the part of our citizens . the current situation , unsustainable above all for many self-employed drivers and in the area of agriculture , we must improve without doubt . in itself , it is good to reach an agreement on procedures , but we have to ensure that this system is not likely to be used as a weapon policy . now they are also clear rights to be respected . i agree with the signal warning against the return , which some are tempted to the intergovernmental methods . there are many of us that we want a federation of nation states .
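The Bayes decision rule for machine translation, choosing the French sentence $f$ that maximizes $f(f)\, f(e \mid f)$, can be sketched as a search over a fixed candidate list. Everything in the example below is an assumption made for illustration: the `decode` function, the two candidate sentences, and all probability values are invented toy data, not the output of any real language or translation model.

```python
import math

def decode(observed, candidates, language_model, channel_model):
    """Return the candidate f maximizing f(f) * f(e|f), computed in log space.

    Assumes every candidate has non-zero probability under both models.
    """
    def score(f):
        return math.log(language_model[f]) + math.log(channel_model[(observed, f)])
    return max(candidates, key=score)

# Toy, made-up probabilities (illustration only).
english = "the house"
candidates = ["la maison", "le maison"]
language_model = {"la maison": 0.009, "le maison": 0.001}   # f(f)
channel_model = {                                            # f(e|f)
    ("the house", "la maison"): 0.4,
    ("the house", "le maison"): 0.5,
}

print(decode(english, candidates, language_model, channel_model))
```

In this toy setup the translation model alone slightly prefers the ungrammatical candidate, and the language model term is what tips the decision towards the well-formed one. Real decoders search a far larger space than an explicit candidate list.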
