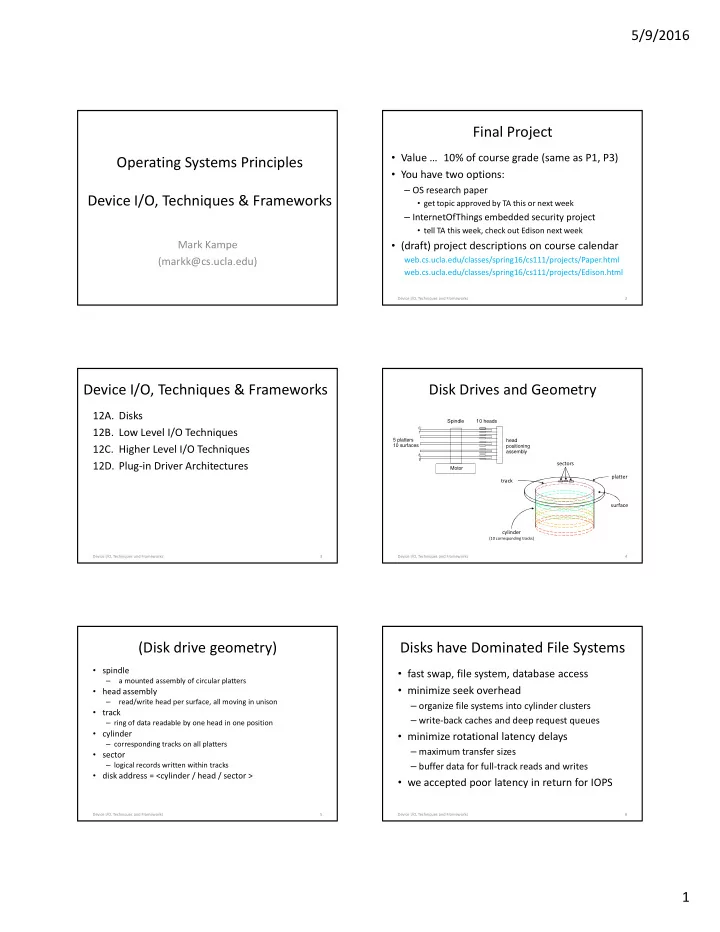

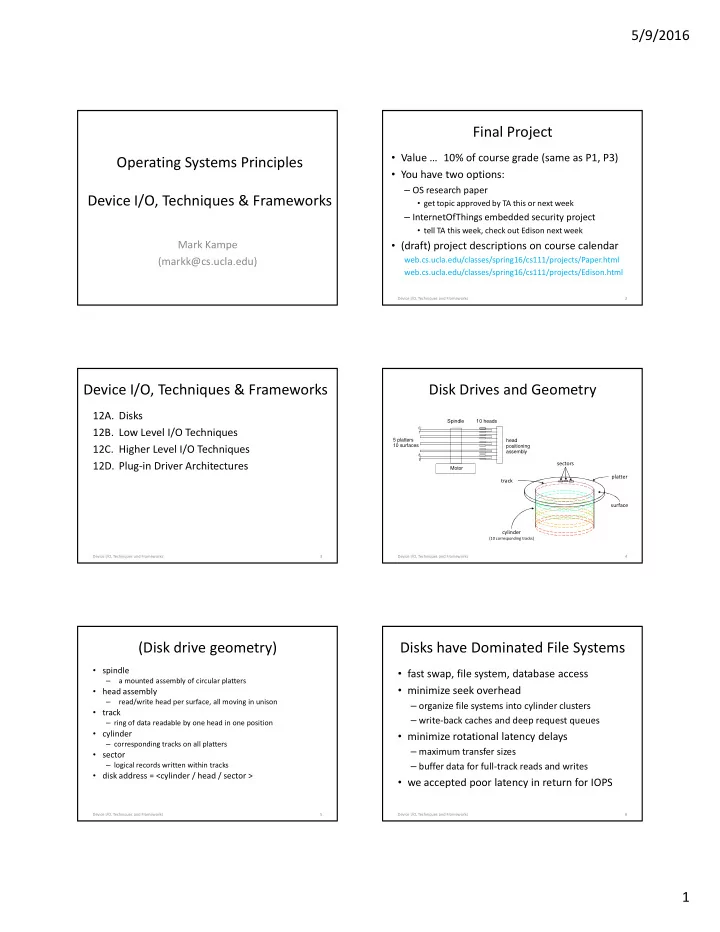

5/9/2016

Operating Systems Principles
Device I/O, Techniques & Frameworks
Mark Kampe (markk@cs.ucla.edu)

Final Project
• Value … 10% of course grade (same as P1, P3)
• You have two options:
  – OS research paper
    • get topic approved by TA this or next week
  – InternetOfThings embedded security project
    • tell TA this week, check out Edison next week
• (draft) project descriptions on course calendar
  web.cs.ucla.edu/classes/spring16/cs111/projects/Paper.html
  web.cs.ucla.edu/classes/spring16/cs111/projects/Edison.html

Device I/O, Techniques & Frameworks
12A. Disks
12B. Low Level I/O Techniques
12C. Higher Level I/O Techniques
12D. Plug-in Driver Architectures

Disk Drives and Geometry
[Figure: disk drive with spindle, motor, and head positioning assembly; 5 platters, 10 surfaces, 10 heads (numbered 0 through 9); labels for platter, surface, track, sectors, and cylinder (10 corresponding tracks)]

(Disk drive geometry)
• spindle
  – a mounted assembly of circular platters
• head assembly
  – read/write head per surface, all moving in unison
• track
  – ring of data readable by one head in one position
• cylinder
  – corresponding tracks on all platters
• sector
  – logical records written within tracks
• disk address = <cylinder / head / sector>

Disks have Dominated File Systems
• fast swap, file system, database access
• minimize seek overhead
  – organize file systems into cylinder clusters
  – write-back caches and deep request queues
• minimize rotational latency delays
  – maximum transfer sizes
  – buffer data for full-track reads and writes
• we accepted poor latency in return for IOPS
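The <cylinder / head / sector> disk address can be tied to a single linear block number. The sketch below is one common convention, not something the slides specify: the cylinder-major ordering, the 0-based sector numbers, and the geometry values (1000 cylinders, 63 sectors per track) are assumptions for illustration; only the 10 heads come from the figure.

```python
from typing import NamedTuple


class Geometry(NamedTuple):
    cylinders: int
    heads: int               # one read/write head per surface
    sectors_per_track: int


def chs_to_block(g, cylinder, head, sector):
    """Map <cylinder / head / sector> to a linear block number (all 0-based here)."""
    if not (0 <= cylinder < g.cylinders
            and 0 <= head < g.heads
            and 0 <= sector < g.sectors_per_track):
        raise ValueError("address outside drive geometry")
    # Fill a whole track, then the next head in the same cylinder, then seek.
    return (cylinder * g.heads + head) * g.sectors_per_track + sector


def block_to_chs(g, block):
    """Inverse mapping: linear block number back to (cylinder, head, sector)."""
    track, sector = divmod(block, g.sectors_per_track)
    cylinder, head = divmod(track, g.heads)
    return cylinder, head, sector


# Hypothetical drive: 10 heads as in the figure, other values made up.
geom = Geometry(cylinders=1000, heads=10, sectors_per_track=63)
print(chs_to_block(geom, 1, 0, 0))
print(block_to_chs(geom, 630))
```

With this ordering, consecutive block numbers stay within one cylinder as long as possible, so sequential access needs no seek until a whole cylinder has been used. That is the motivation for organizing file systems into cylinder clusters.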

Disk vs SSD Performance

| | Cheeta (archival) | Barracuda (high perf) | Extreme/Pro (SSD) |
|---|---|---|---|
| RPM | 7,000 | 15,000 | n/a |
| average latency | 4.3ms | 2ms | n/a |
| average seek | 9ms | 4ms | n/a |
| transfer speed | 105MB/s | 125MB/s | 540MB/s |
| sequential 4KB read | 39us | 33us | 10us |
| sequential 4KB write | 39us | 33us | 11us |
| random 4KB read | 13.2ms | 6ms | 10us |
| random 4KB write | 13.2ms | 6ms | 11us |

Random Access: Game Over
[Figure]

The Changing I/O Landscape
• Storage paradigms
  – old: swapping, paging, file systems, data bases
  – new: NAS, distributed object/key-value stores
• I/O traffic
  – old: most I/O was disk I/O
  – new: network and video dominate many systems
• Performance goals:
  – old: maximize throughput, IOPS
  – new: low latency, scalability, reliability, availability

importance of good device utilization
• key system devices limit system performance
  – file system I/O, swapping, network communication
• if device sits idle, its throughput drops
  – this may result in lower system throughput
  – longer service queues, slower response times
• delays can disrupt real-time data flows
  – resulting in unacceptable performance
  – possible loss of irreplaceable data
• it is very important to keep key devices busy
  – start request n+1 immediately when n finishes

Direct Memory Access
• bus facilitates data flow in all directions between
  – CPU, memory, and device controllers
• CPU can be the bus-master
  – initiating data transfers w/memory, device controllers
• device controllers can also master the bus
  – CPU instructs controller what transfer is desired
    • what data to move to/from what part of memory
  – controller does transfer w/o CPU assistance
  – controller generates interrupt at end of transfer

Poor I/O device Utilization
[Figure: timeline showing the I/O device alternating between IDLE and BUSY while a single process runs]
1. process waits to run
2. process does computation in preparation for I/O operation
3. process issues read system call, blocks awaiting completion
4. device performs requested operation
5. completion interrupt awakens blocked process
6. process runs again, finishes read system call
7. process does more computation
8. process issues read system call, blocks awaiting completion
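Returning to the Disk vs SSD Performance table: the disk figures can be roughly reproduced from RPM, average seek, and transfer speed. The sketch below assumes average rotational latency is half a revolution and that 1 MB is 10^6 bytes; the function names are made up for illustration, and the results only approximate the table's rounded values.

```python
def rotational_latency_ms(rpm):
    """Average rotational latency: half of one revolution, in milliseconds."""
    return 0.5 * 60_000.0 / rpm


def transfer_time_us(nbytes, mb_per_s):
    """Time to move nbytes at the media rate, in microseconds (1 MB = 10**6 bytes)."""
    return nbytes / mb_per_s


def random_access_ms(seek_ms, rpm, nbytes, mb_per_s):
    """Random access = average seek + average rotational latency + transfer time."""
    return (seek_ms
            + rotational_latency_ms(rpm)
            + transfer_time_us(nbytes, mb_per_s) / 1000.0)


# (label, RPM, average seek in ms, transfer speed in MB/s) from the two disk columns
for label, rpm, seek_ms, rate in (("archival", 7000, 9.0, 105.0),
                                  ("high perf", 15000, 4.0, 125.0)):
    print(label,
          f"latency={rotational_latency_ms(rpm):.1f}ms",
          f"sequential 4KB={transfer_time_us(4096, rate):.0f}us",
          f"random 4KB={random_access_ms(seek_ms, rpm, 4096, rate):.1f}ms")
```

Seek and rotation dominate a random 4KB access by more than two orders of magnitude over the transfer itself, which is why the SSD column, with no mechanical positioning, shows the same time for random and sequential access.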

I/O Interrupts
• device controllers, busses, and interrupts
  – busses have ability to send interrupts to the CPU
  – devices signal controller when they are done/ready
  – when device finishes, controller puts interrupt on bus
• CPUs and interrupts
  – interrupts look very much like traps
    • traps come from CPU, interrupts are caused externally
  – unlike traps, interrupts can be enabled/disabled
    • a device can be told it can or cannot generate interrupts
    • special instructions can enable/disable interrupts to CPU
    • interrupt may be held pending until s/w is ready for it

Interrupt Handling
[Figure: an application program (a stream of instructions in user mode) is interrupted when a device requests an interrupt; the PS/PC is saved and a new PS/PC is loaded from the interrupt vector table, entering the 1st level interrupt handler in supervisor mode; it consults the list of device interrupt handlers and calls the driver's 2nd level handler (device driver interrupt routine); then return to user mode]

Keeping Key Devices Busy
• allow multiple requests pending at a time
  – queue them, just like processes in the ready queue
  – requesters block to await eventual completions
• use DMA to perform the actual data transfers
  – data transferred, with no delay, at device speed
  – minimal overhead imposed on CPU
• when the currently active request completes
  – device controller generates a completion interrupt
  – interrupt handler posts completion to requester
  – interrupt handler selects and initiates next transfer

Interrupt Driven Chain Scheduled I/O

    xx_read/write() {
        allocate a new request descriptor
        fill in type, address, count, location
        insert request into service queue
        if (device is idle) {
            disable_device_interrupt();
            xx_start();
            enable_device_interrupt();
        }
        await completion of request
        extract completion info for caller
    }

    xx_start() {
        get next request from queue
        get address, count, disk address
        load request parameters into controller
        start the DMA operation
        mark device busy
    }

    xx_intr() {
        extract completion info from controller
        update completion info in current req
        wakeup current request
        if (more requests in queue)
            xx_start()
        else
            mark device idle
    }

Multi-Tasking & Interrupt Driven I/O
[Figure: timeline of device activity (requests 1A, 2A, 1B, 2B, ...) against the run periods of processes 1, 2, and 3]
1. P1 runs, requests a read, and blocks
2. P2 runs, requests a read, and blocks
3. P3 runs until interrupted
4. Awaken P1 and start next read operation
5. P1 runs, requests a read, and blocks
6. P3 runs until interrupted
7. Awaken P2 and start next read operation
8. P2 runs, requests a read, and blocks
9. P3 runs until interrupted
10. Awaken P1 and start next read operation
11. P1 runs, requests a read, and blocks

Bigger Transfers are Better
[Figure]
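The chain-scheduling pseudocode (xx_read/write, xx_start, xx_intr) can be exercised with a toy model. This is a minimal sketch, not a driver: the `Device` class and its method names are hypothetical, completion interrupts are delivered by calling `interrupt()` by hand, and it leaves out request descriptors, blocking and wakeup of requesters, interrupt masking, and DMA.

```python
from collections import deque


class Device:
    """Toy model of a driver that uses interrupt-driven chain scheduling."""

    def __init__(self):
        self.queue = deque()   # pending requests, in arrival order
        self.current = None    # request the controller is working on
        self.busy = False
        self.log = []          # order in which events happened

    def submit(self, name):
        """Plays the role of xx_read/write(): queue the request, kick an idle device."""
        self.queue.append(name)
        self.log.append(f"queue {name}")
        if not self.busy:
            self.start()

    def start(self):
        """Plays the role of xx_start(): hand the next request to the controller."""
        self.current = self.queue.popleft()
        self.busy = True
        self.log.append(f"start {self.current}")

    def interrupt(self):
        """Plays the role of xx_intr(): post completion, chain into the next request."""
        self.log.append(f"done {self.current}")
        self.current = None
        if self.queue:
            self.start()       # the device never waits for a process to run
        else:
            self.busy = False


dev = Device()
for name in ("1A", "2A", "1B"):
    dev.submit(name)
while dev.busy:
    dev.interrupt()            # simulate each completion interrupt
print(dev.log)
```

Only the first request is started from `submit()`; every later one is started from the completion handler. That is what lets request n+1 begin immediately when n finishes, without waiting for any process to be scheduled.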

(Bigger Transfers are Better)
• disks have high seek/rotation overheads
  – larger transfers amortize down the cost/byte
• all transfers have per-operation overhead
  – instructions to set up operation
  – device time to start new operation
  – time and cycles to service completion interrupt
• larger transfers have lower overhead/byte
  – this is not limited to s/w implementations

Input/Output Buffering
• Fewer/larger transfers are more efficient
  – they may not be convenient for applications
  – natural record sizes tend to be relatively small
• Operating system can buffer process I/O
  – maintain a cache of recently used disk blocks
  – accumulate small writes, flush out as blocks fill
  – read whole blocks, deliver data as requested
• Enables read-ahead
  – OS reads/caches blocks not yet requested

Deep Request Queues
• Having many I/O operations queued is good
  – maintains high device utilization (little idle time)
  – reduces mean seek distance/rotational delay
  – may be possible to combine adjacent requests
• Ways to achieve deep queues:
  – many processes making requests
  – individual processes making parallel requests
  – read-ahead for expected data requests
  – write-back cache flushing

Double-Buffered Output
[Figure: application fills buffer #1 and buffer #2; the device drains them]

(double-buffered output)
• multiple buffers queued up, ready to write
  – each write completion interrupt starts next write
• application and device I/O proceed in parallel
  – application queues successive writes
    • don't bother waiting for previous operation to finish
  – device picks up next buffer as soon as it is ready
• if we're CPU-bound (more CPU than output)
  – application speeds up because it doesn't wait for I/O
• if we're I/O-bound (more output than CPU)
  – device is kept busy, which improves throughput
  – but eventually we may have to block the process

Double-Buffered Input
[Figure: the device fills buffer #1 and buffer #2; the application consumes them]
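The "Bigger Transfers are Better" claim, that larger transfers amortize per-operation overhead, can be shown with a little arithmetic. The sketch below assumes a fixed overhead of 6 ms per operation (the 4 ms seek plus 2 ms latency from the high perf disk column) and a 125 MB/s media rate; the function name and the transfer sizes are chosen only for illustration.

```python
def effective_mb_per_s(nbytes, overhead_ms, media_mb_per_s):
    """Throughput actually delivered when every transfer pays a fixed overhead."""
    transfer_ms = nbytes / (media_mb_per_s * 1e6) * 1000.0
    total_s = (overhead_ms + transfer_ms) / 1000.0
    return (nbytes / 1e6) / total_s


sizes = (4096, 65536, 1048576, 16777216)   # 4 KiB up to 16 MiB
for size in sizes:
    rate = effective_mb_per_s(size, overhead_ms=6.0, media_mb_per_s=125.0)
    print(f"{size:>9} bytes: {rate:7.2f} MB/s")
```

Under these assumptions a 4 KiB transfer delivers under 1 MB/s, while a 16 MiB transfer approaches the media rate because the same 6 ms is spread over far more bytes.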

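The effect of double-buffered output can be estimated with a small timing model. This is a sketch under simplifying assumptions: every buffer takes the same `cpu` time to fill and the same `io` time to write, the application fills buffers strictly in order, and a buffer can be refilled only after its previous contents have been written. The function name and time units are hypothetical.

```python
def total_time(n_blocks, cpu, io, n_buffers):
    """Time until the last block is written, using n_buffers output buffers."""
    fill_done = []    # when the application finished filling block i
    write_done = []   # when the device finished writing block i
    for i in range(n_blocks):
        prev_fill = fill_done[i - 1] if i > 0 else 0.0
        # The buffer for block i was last used by block i - n_buffers.
        buf_free = write_done[i - n_buffers] if i >= n_buffers else 0.0
        fill_done.append(max(prev_fill, buf_free) + cpu)
        dev_free = write_done[i - 1] if i > 0 else 0.0
        write_done.append(max(fill_done[i], dev_free) + io)
    return write_done[-1]


for cpu, io in ((1, 1), (1, 3), (3, 1)):
    single = total_time(10, cpu, io, n_buffers=1)
    double = total_time(10, cpu, io, n_buffers=2)
    print(f"cpu={cpu} io={io}: single={single} double={double}")
```

With one buffer, computation and output strictly alternate, so the total is the sum of both. With two buffers the total approaches whichever of the two is larger: in the I/O-bound case (`cpu=1, io=3`) the device is kept continuously busy after the first fill, and the application ends up waiting for a free buffer, matching the last bullet of the slide.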