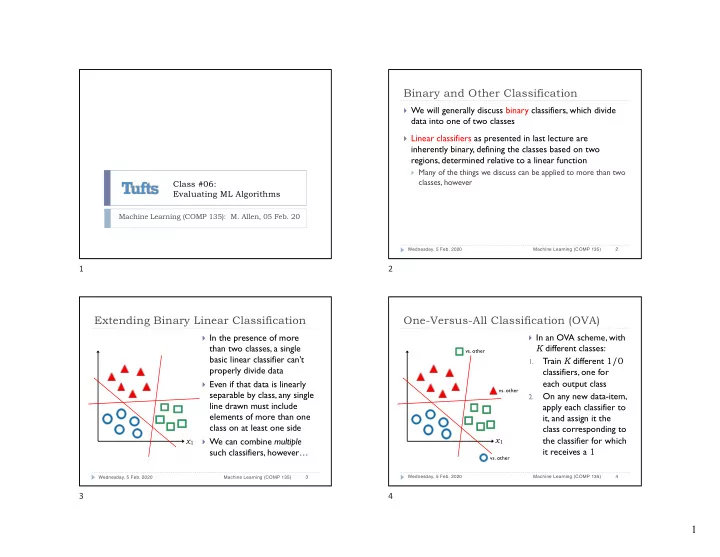

Class #06: Evaluating ML Algorithms
Machine Learning (COMP 135): M. Allen, Wednesday, 5 Feb. 2020

Binary and Other Classification
- We will generally discuss binary classifiers, which divide data into one of two classes
- Linear classifiers as presented in the last lecture are inherently binary: the two classes are the two regions on either side of a linear function
- Many of the things we discuss can be applied to more than two classes, however

Extending Binary Linear Classification
- In the presence of more than two classes, a single basic linear classifier can't properly divide the data
  - Even if that data is linearly separable by class, any single line drawn must include elements of more than one class on at least one side
- We can combine multiple such classifiers, however…

[Figure: multi-class data, axis x1]

One-Versus-All Classification (OVA)
- In an OVA scheme, with K different classes:
  1. Train K different 1/0 classifiers, one for each output class vs. other
  2. On any new data-item, apply each classifier to it, and assign it the class corresponding to the classifier for which it receives a 1

[Figure: one "vs. other" classifier per class, axis x1]
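The two OVA steps can be sketched in Python as follows. This is a minimal illustration that rests on assumptions not found in the slides:

- NumPy is available.
- The base 1/0 linear classifier is a plain perceptron. Any binary linear classifier would do.
- The tiny three-class data set is made up.
- The names `train_perceptron`, `train_ova` and `predict_ova` are hypothetical.

```python
import numpy as np


def train_perceptron(X, y, epochs=1000):
    """Fit a basic 1/0 linear classifier (a perceptron) to labels y in {0, 1}.

    Assumption: the perceptron stands in for whatever binary linear
    classifier is used; it converges only if the data are linearly separable.
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            pred = 1 if xi @ w + b > 0 else 0
            if pred != yi:
                # Shift the boundary toward the correct side of this point.
                w += (yi - pred) * xi
                b += yi - pred
                mistakes += 1
        if mistakes == 0:  # every training point is classified correctly
            break
    return w, b


def train_ova(X, y, classes):
    """Step 1: one 1/0 classifier per class, 'this class' (1) vs. 'other' (0)."""
    return {k: train_perceptron(X, (y == k).astype(int)) for k in classes}


def predict_ova(models, x):
    """Step 2: return every class whose classifier outputs 1 for x.

    Ideally exactly one class fires; an empty or multi-class result is ambiguous.
    """
    return [k for k, (w, b) in models.items() if x @ w + b > 0]


# Hypothetical toy data: three small clusters, one per class.
X = np.array([[0, 5], [1, 6], [-1, 6], [0, 7],         # class 0
              [-5, -3], [-6, -4], [-4, -4], [-5, -5],  # class 1
              [5, -3], [6, -4], [4, -4], [5, -5]],     # class 2
             dtype=float)
y = np.array([0] * 4 + [1] * 4 + [2] * 4)

models = train_ova(X, y, classes=[0, 1, 2])
print(predict_ova(models, np.array([0.0, 6.0])))  # → [0]
```

Returning the list of classes that fire, rather than a single label, keeps OVA's weak spot visible. A point that falls between the clusters may trigger no classifier or several. Each extra class also adds another "one vs. all the rest" separability requirement.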

Issues with OVA Classification
- The basic OVA idea requires that each linear classifier separate one class from all the others
- As the number of classes increases, this added linear-separability constraint gets harder to satisfy

[Figure: multi-class data, axis x1]

One-Versus-One Classification (OVO)
- Another idea is to train a separate classifier for each possible pair of output classes
- This only requires each such pair to be individually separable, which is a somewhat more reasonable assumption
- For K classes, it requires a larger number of classifiers: $\binom{K}{2} = \frac{K(K-1)}{2} = O(K^2)$
- Relative to the size of data sets, this is generally manageable, and each classifier is often simpler than in an OVA setting
- A new data-item is again tested against all of the classifiers. Each classifier gives a positive (1) output to one class of its pair, and the item is given the class that receives the most of these outputs
- May still suffer from some ambiguities (for example, ties in the vote)
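OVO pairwise training and voting can be sketched in Python in the same style. The sketch makes the same assumptions as before:

- A perceptron stands in for the linear classifier.
- The toy data are made up. They are repeated here so the block runs on its own.
- The names `train_ovo` and `predict_ovo` are hypothetical.

The sketch also confirms the $K(K-1)/2$ classifier count.

```python
from collections import Counter
from itertools import combinations

import numpy as np


def train_perceptron(X, y, epochs=1000):
    """Fit a basic 1/0 linear classifier (a perceptron); a stand-in assumption."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            pred = 1 if xi @ w + b > 0 else 0
            if pred != yi:
                w += (yi - pred) * xi
                b += yi - pred
                mistakes += 1
        if mistakes == 0:
            break
    return w, b


def train_ovo(X, y, classes):
    """One classifier per unordered pair (i, j): output 1 means i, 0 means j."""
    models = {}
    for i, j in combinations(classes, 2):
        mask = (y == i) | (y == j)  # use only the data from these two classes
        models[(i, j)] = train_perceptron(X[mask], (y[mask] == i).astype(int))
    return models


def predict_ovo(models, x):
    """Each pairwise classifier votes for one of its two classes; most votes wins."""
    votes = Counter()
    for (i, j), (w, b) in models.items():
        votes[i if x @ w + b > 0 else j] += 1
    # A tie (one possible ambiguity) goes to whichever tied class
    # Counter.most_common lists first, which is an arbitrary choice.
    return votes.most_common(1)[0][0]


X = np.array([[0, 5], [1, 6], [-1, 6], [0, 7],
              [-5, -3], [-6, -4], [-4, -4], [-5, -5],
              [5, -3], [6, -4], [4, -4], [5, -5]], dtype=float)
y = np.array([0] * 4 + [1] * 4 + [2] * 4)

classes = [0, 1, 2]
models = train_ovo(X, y, classes)
K = len(classes)
print(len(models), K * (K - 1) // 2)               # → 3 3
print(predict_ovo(models, np.array([5.0, -4.0])))  # → 2
```

Each pairwise perceptron only ever sees two classes' data. This is the "simpler classifier" trade-off the slide mentions, paid for with more classifiers as K grows.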
Evaluating a Classifier
- It is often useful to separate the results generated by a classifier according to what it gets right or not:
  - True Positives (TP): those that it identifies correctly as relevant
  - False Positives (FP): those that it identifies wrongly as relevant
  - False Negatives (FN): those that are relevant, but missed
  - True Negatives (TN): those it correctly labels as non-relevant
- These categories make sense when we are interested in separating out one relevant class from another (again, we return to binary classification for simplicity)
- Of course, relevance depends upon what we care about:
  - Picking out the actual earthquakes in seismic data (earthquakes are relevant; explosions are not)
  - Picking out the explosions in seismic data (explosions are relevant; earthquakes are not)

| Ground Truth | Classifier Output: Negative (0) | Classifier Output: Positive (1) |
|---|---|---|
| Negative (0) | TN | FP |
| Positive (1) | FN | TP |
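The four categories can be counted with a short Python function. It is a sketch in which the function name `confusion_counts` and the "quake"/"blast" labels are hypothetical and the five data points are made up. The example also shows the point about relevance: the same predictions give different counts depending on which class we call positive.

```python
def confusion_counts(truth, predicted, positive):
    """Return (TP, FP, FN, TN), treating the label `positive` as the relevant class."""
    tp = fp = fn = tn = 0
    for t, p in zip(truth, predicted):
        if p == positive and t == positive:
            tp += 1  # identified as relevant, and it is
        elif p == positive:
            fp += 1  # identified as relevant, but it is not
        elif t == positive:
            fn += 1  # relevant, but missed
        else:
            tn += 1  # correctly labeled as non-relevant
    return tp, fp, fn, tn


# Hypothetical seismic labels: ground truth vs. a classifier's output.
truth = ["quake", "quake", "blast", "blast", "quake"]
predicted = ["quake", "blast", "blast", "quake", "quake"]

print(confusion_counts(truth, predicted, positive="quake"))  # → (2, 1, 1, 1)
print(confusion_counts(truth, predicted, positive="blast"))  # → (1, 1, 1, 2)
```

Switching the relevant class from earthquakes to explosions swaps TP with TN and FP with FN.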

Basic Accuracy
- The simplest measure of accuracy is just the fraction of correct classifications:

$$\text{Accuracy} = \frac{\#\,\text{Correct}}{|\text{Data-set}|} = \frac{TP + TN}{TP + TN + FP + FN}$$

- Basic accuracy treats both types of correctness (and therefore both types of error) as the same
- This isn't always what we want, however; sometimes false positives and false negatives are quite different things
- The simplest measure of accuracy can also be misleading, depending upon the data-set itself:
  - In a data-set of 100 examples, with 99 positive and only a single negative example, any classifier that simply says positive (1) for everything would have 99% "accuracy"
  - Such a classifier might be entirely useless for real-world classification problems, however!
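The 99-positive/1-negative arithmetic can be checked directly in Python. This is a small sketch: the label lists are constructed to match the slide's counts, and the helper name `accuracy` is hypothetical. Accuracy on the negative examples alone makes the problem explicit.

```python
def accuracy(tp, tn, fp, fn):
    """Basic accuracy: (TP + TN) / (TP + TN + FP + FN)."""
    return (tp + tn) / (tp + tn + fp + fn)


# 99 positive examples and one negative, scored against a classifier
# that says positive (1) for everything.
truth = [1] * 99 + [0]
predicted = [1] * 100

pairs = list(zip(truth, predicted))
tp = pairs.count((1, 1))
tn = pairs.count((0, 0))
fp = pairs.count((0, 1))
fn = pairs.count((1, 0))

print(tp, tn, fp, fn)            # → 99 0 1 0
print(accuracy(tp, tn, fp, fn))  # → 0.99
print(tn / (tn + fp))            # → 0.0 (the one negative example is never recognized)
```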
Confusion Matrices
- One way to separate out positive and negative examples, and better analyze the behavior of a classifier, is to break down the overall success/failure case by case
- For 100 data-points, 50 of each type, we might have behavior as shown in the following table:

| Ground Truth | Classifier Output: Negative (0) | Classifier Output: Positive (1) |
|---|---|---|
| Negative (0) | 40 | 10 |
| Positive (1) | 1 | 49 |

- In this data, the overall accuracy is 89/100 = 89%
- However, we see that the accuracy over the two types of data is quite different:
  1. For negative data, accuracy is just 40/50 = 80%, with a 20% rate of false positives
  2. For positive data, accuracy is 49/50 = 98%, with only a 2% rate of false negatives
- What can this tell us?
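The per-class figures in the confusion-matrix example follow from row-wise division. The Python sketch below reproduces them under two assumptions. First, the 2x2 list layout matches the table: rows are ground truth, columns are classifier output, each ordered Negative (0), Positive (1). Second, the function name `summarize` and its dictionary keys are hypothetical.

```python
def summarize(matrix):
    """Overall and per-class rates from a 2x2 confusion matrix.

    Assumed layout: rows = ground truth, columns = classifier output,
    both ordered Negative (0), Positive (1).
    """
    (tn, fp), (fn, tp) = matrix
    negatives, positives = tn + fp, fn + tp
    return {
        "accuracy": (tp + tn) / (negatives + positives),
        "negative_accuracy": tn / negatives,  # correct among true negatives
        "false_positive_rate": fp / negatives,
        "positive_accuracy": tp / positives,  # correct among true positives
        "false_negative_rate": fn / positives,
    }


matrix = [[40, 10],  # ground truth negative: 40 TN, 10 FP
          [1, 49]]   # ground truth positive:  1 FN, 49 TP

for name, value in summarize(matrix).items():
    print(f"{name}: {value:.0%}")
# Prints 89%, 80%, 20%, 98% and 2% for the five entries, in that order.
```

Dividing within each ground-truth row separates the two error types that the single 89% figure blends together.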
