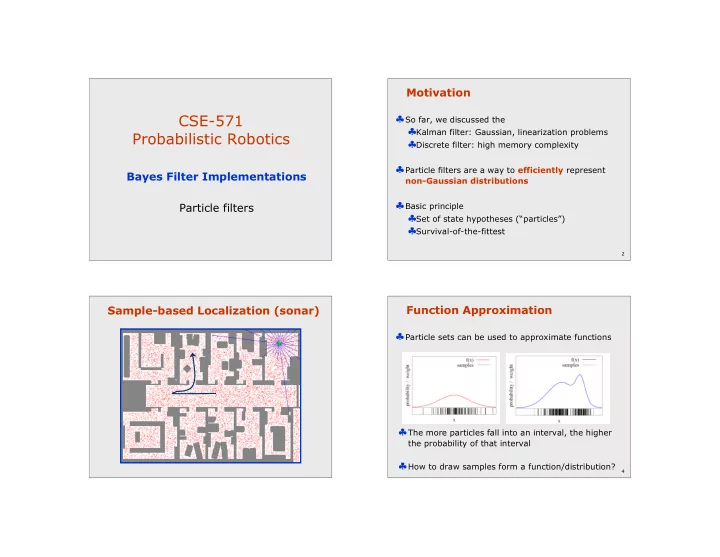

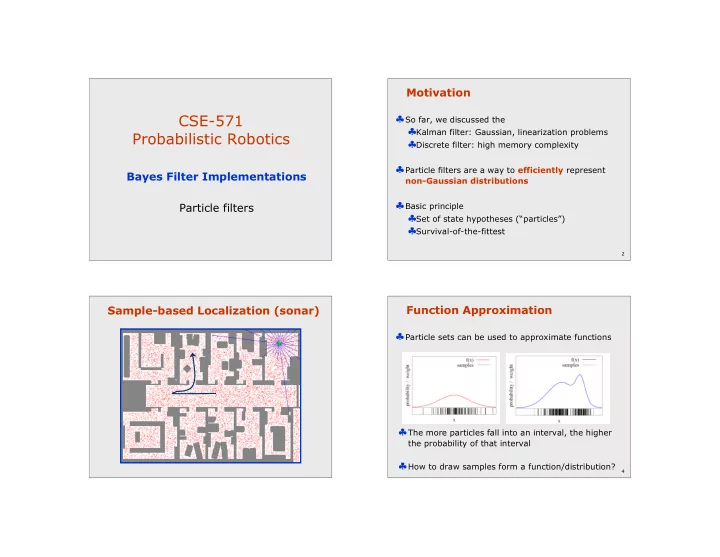

CSE-571 Probabilistic Robotics
Bayes Filter Implementations
Particle filters

Motivation
♣ So far, we discussed the
  ♣ Kalman filter: Gaussian, linearization problems
  ♣ Discrete filter: high memory complexity
♣ Particle filters are a way to efficiently represent non-Gaussian distributions
♣ Basic principle
  ♣ Set of state hypotheses (“particles”)
  ♣ Survival-of-the-fittest

Sample-based Localization (sonar)

Function Approximation
♣ Particle sets can be used to approximate functions
♣ The more particles fall into an interval, the higher the probability of that interval
♣ How to draw samples from a function/distribution?

Rejection Sampling
♣ Let us assume that f(x) < 1 for all x
♣ Sample x from a uniform distribution
♣ Sample c from [0,1]
♣ If f(x) > c keep the sample, otherwise reject the sample

[Figure: function f with candidate samples x, x′ and thresholds c, c′; the accepted sample is marked OK]

Importance Sampling Principle
♣ We can even use a different distribution g to generate samples from f
♣ By introducing an importance weight w, we can account for the “differences between g and f”
♣ w = f / g
♣ f is often called target
♣ g is often called proposal

Importance Sampling with Resampling: Landmark Detection Example

Distributions
Wanted: samples distributed according to $p(x \mid z_1, z_2, z_3)$
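The rejection sampling steps above can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's own code: the target `bump`, the interval [0, 4], and the fixed random seed are assumptions chosen only for the example.

```python
import math
import random

def rejection_sample(f, lo, hi, n, rng=random):
    """Draw n samples on [lo, hi] distributed proportionally to f, assuming 0 <= f(x) < 1."""
    samples = []
    while len(samples) < n:
        x = rng.uniform(lo, hi)    # sample x from a uniform distribution
        c = rng.uniform(0.0, 1.0)  # sample c from [0, 1]
        if f(x) > c:               # keep the sample, otherwise reject it
            samples.append(x)
    return samples

def bump(x):
    # Hypothetical target: an unnormalized Gaussian bump, scaled to stay below 1.
    return 0.9 * math.exp(-0.5 * ((x - 2.0) / 0.5) ** 2)

rng = random.Random(0)
samples = rejection_sample(bump, 0.0, 4.0, 2000, rng)
mean = sum(samples) / len(samples)
```

The accepted samples cluster where `bump` is large, so their mean lands close to the peak at 2. Most candidates far from the peak are rejected, which is the inefficiency that importance sampling avoids.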

This is Easy!
We can draw samples from $p(x \mid z_l)$ by adding noise to the detection parameters.

Importance Sampling with Resampling

Target distribution f:
$$p(x \mid z_1, z_2, \ldots, z_n) = \frac{\prod_k p(z_k \mid x)\; p(x)}{p(z_1, z_2, \ldots, z_n)}$$

Sampling distribution g:
$$p(x \mid z_l) = \frac{p(z_l \mid x)\; p(x)}{p(z_l)}$$

Importance weights w:
$$\frac{f}{g} = \frac{p(x \mid z_1, z_2, \ldots, z_n)}{p(x \mid z_l)} = \frac{p(z_l) \prod_{k \neq l} p(z_k \mid x)}{p(z_1, z_2, \ldots, z_n)}$$

[Figures: weighted samples; samples after resampling]
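A one-dimensional version of this landmark example can be sketched as follows. The Gaussian detection model, the flat prior p(x), and the detection values in `zs` are assumptions made for illustration. With a flat prior, the proposal $p(x \mid z_l)$ reduces to the detection $z_l$ plus noise, and the weight is proportional to the product of the remaining likelihoods.

```python
import math
import random

def gauss(z, x, sigma):
    """Gaussian likelihood p(z | x) with standard deviation sigma (assumed sensor model)."""
    return math.exp(-0.5 * ((z - x) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def sample_posterior(zs, l, sigma, n, rng):
    # Proposal g = p(x | z_l): add noise to detection z_l (flat prior assumed).
    xs = [rng.gauss(zs[l], sigma) for _ in range(n)]
    # Weight w = f / g, proportional to the product of p(z_k | x) over all k != l.
    ws = [math.prod(gauss(z, x, sigma) for k, z in enumerate(zs) if k != l) for x in xs]
    total = sum(ws)
    ws = [w / total for w in ws]
    # Resample n times with replacement; x_i is drawn with probability w_i.
    return xs, ws, rng.choices(xs, weights=ws, k=n)

rng = random.Random(1)
zs = [1.8, 2.0, 2.5]  # hypothetical detections z_1, z_2, z_3
xs, ws, resampled = sample_posterior(zs, l=0, sigma=0.5, n=5000, rng=rng)
posterior_mean = sum(resampled) / len(resampled)
```

Under these assumptions the exact posterior is Gaussian with mean equal to the average of the detections (2.1), so `posterior_mean` gives a quick sanity check on the weights.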

Particle Filter Projection

Density Extraction

Sampling Variance

Particle Filters

Sensor Information: Importance Sampling

$$Bel(x) \leftarrow \alpha\, p(z \mid x)\, Bel^{-}(x)$$

$$w \leftarrow \frac{\alpha\, p(z \mid x)\, Bel^{-}(x)}{Bel^{-}(x)} = \alpha\, p(z \mid x)$$

Robot Motion

$$Bel^{-}(x) \leftarrow \int p(x \mid u, x')\, Bel(x')\, dx'$$

Particle Filter Algorithm

$$Bel(x_t) = \eta\, p(z_t \mid x_t) \int p(x_t \mid x_{t-1}, u_{t-1})\, Bel(x_{t-1})\, dx_{t-1}$$

♣ draw $x^i_{t-1}$ from $Bel(x_{t-1})$
♣ draw $x^i_t$ from $p(x_t \mid x^i_{t-1}, u_{t-1})$
♣ Importance factor for $x^i_t$:

$$w^i_t = \frac{\text{target distribution}}{\text{proposal distribution}} = \frac{\eta\, p(z_t \mid x_t)\, p(x_t \mid x_{t-1}, u_{t-1})\, Bel(x_{t-1})}{p(x_t \mid x_{t-1}, u_{t-1})\, Bel(x_{t-1})} \propto p(z_t \mid x_t)$$

Particle Filter Algorithm
1. Algorithm particle_filter($S_{t-1}$, $u_{t-1}$, $z_t$):
2. $S_t = \emptyset,\ \eta = 0$
3. For $i = 1 \ldots n$ (generate new samples)
4.   Sample index $j(i)$ from the discrete distribution given by $w_{t-1}$
5.   Sample $x^i_t$ from $p(x_t \mid x_{t-1}, u_{t-1})$ using $x^{j(i)}_{t-1}$ and $u_{t-1}$
6.   $w^i_t = p(z_t \mid x^i_t)$ (compute importance weight)
7.   $\eta = \eta + w^i_t$ (update normalization factor)
8.   $S_t = S_t \cup \{\langle x^i_t, w^i_t \rangle\}$ (insert)
9. For $i = 1 \ldots n$
10.   $w^i_t = w^i_t / \eta$ (normalize weights)

Resampling
• Given: Set S of weighted samples.
• Wanted: Random sample, where the probability of drawing $x_i$ is given by $w_i$.
• Typically done n times with replacement to generate new sample set S’.

Resampling
[Figure: two wheels divided into segments $w_1, w_2, w_3, \ldots, w_{n-1}, w_n$]
• Roulette wheel
  • Binary search, n log n
• Stochastic universal sampling
  • Systematic resampling
  • Linear time complexity
  • Easy to implement, low variance
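The particle_filter listing can be turned into a small runnable sketch for a robot moving along a line. The additive Gaussian motion model, the Gaussian sensor model, and all numeric values below are assumptions for illustration; the slides leave both models abstract.

```python
import math
import random

def particle_filter(particles, weights, u, z, motion_sigma, sensor_sigma, rng):
    """One update of a 1-D particle filter, following the numbered steps of the listing."""
    n = len(particles)
    # Step 4: sample indices j(i) from the discrete distribution given by w_{t-1}.
    indices = rng.choices(range(n), weights=weights, k=n)
    new_particles, new_weights, eta = [], [], 0.0
    for j in indices:
        # Step 5: sample x_t from p(x_t | x_{t-1}, u_{t-1}) (assumed: additive Gaussian noise).
        x = particles[j] + u + rng.gauss(0.0, motion_sigma)
        # Step 6: importance weight w = p(z_t | x_t), up to a constant (assumed Gaussian sensor).
        w = math.exp(-0.5 * ((z - x) / sensor_sigma) ** 2)
        eta += w                   # Step 7: update normalization factor
        new_particles.append(x)    # Step 8: insert
        new_weights.append(w)
    # Steps 9-10: normalize weights.
    return new_particles, [w / eta for w in new_weights]

rng = random.Random(0)
n = 500
particles = [rng.uniform(-10.0, 10.0) for _ in range(n)]  # global uncertainty at the start
weights = [1.0 / n] * n
true_x = 0.0
for _ in range(10):
    true_x += 1.0                              # the robot moves one unit per step
    z = true_x + rng.gauss(0.0, 0.5)           # simulated noisy position measurement
    particles, weights = particle_filter(particles, weights, 1.0, z, 0.2, 0.5, rng)
estimate = sum(x * w for x, w in zip(particles, weights))
```

Here `rng.choices` plays the role of the roulette wheel. Swapping it for systematic resampling would change only the index-sampling line.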

Resampling Algorithm
1. Algorithm systematic_resampling($S$, $n$):
2. $S' = \emptyset,\ c_1 = w^1$
3. For $i = 2 \ldots n$ (generate cdf)
4.   $c_i = c_{i-1} + w^i$
5. $u_1 \sim U[0, n^{-1}],\ i = 1$ (initialize threshold)
6. For $j = 1 \ldots n$ (draw samples)
7.   While ($u_j > c_i$) (skip until next threshold reached)
8.     $i = i + 1$
9.   $S' = S' \cup \{\langle x^i, n^{-1} \rangle\}$ (insert)
10.   $u_{j+1} = u_j + n^{-1}$ (increment threshold)
11. Return S’
Also called stochastic universal sampling

Motion Model Reminder
[Figure: motion model samples spreading from the start pose]

Proximity Sensor Model Reminder
[Figures: sonar sensor; laser sensor]
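A direct Python transcription of the systematic_resampling listing might look like the sketch below. The bounds check on `i` is an added guard against floating-point round-off in the cdf, and the four-sample demo set is hypothetical.

```python
import random

def systematic_resampling(samples, weights, rng=random):
    """Resample n weighted samples in linear time; weights are assumed to sum to 1."""
    n = len(samples)
    # Generate the cdf c_1 ... c_n.
    cdf, c = [], 0.0
    for w in weights:
        c += w
        cdf.append(c)
    u = rng.uniform(0.0, 1.0 / n)  # initialize threshold u_1 ~ U[0, 1/n]
    i = 0
    resampled = []
    for _ in range(n):
        # Skip until the next threshold is reached (the bounds check is an added guard).
        while i < n - 1 and u > cdf[i]:
            i += 1
        resampled.append((samples[i], 1.0 / n))  # insert with uniform weight 1/n
        u += 1.0 / n                             # increment threshold
    return resampled

rng = random.Random(0)
demo = systematic_resampling(["a", "b", "c", "d"], [0.5, 0.25, 0.125, 0.125], rng)
counts = {s: sum(1 for x, _ in demo if x == s) for s in "abcd"}
```

Because a single random offset drives all n thresholds, each sample is drawn either ⌊n·w⌋ or ⌈n·w⌉ times. In the demo, "a" is drawn twice and "b" once, and the last slot goes to either "c" or "d", which illustrates the low variance mentioned above.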


Sample-based Localization (sonar)

Using Ceiling Maps for Localization [Dellaert et al. 99]

Vision-based Localization
[Figure: measurement z, expected measurement h(x), and likelihood P(z|x)]

Under a Light
[Figures: measurement z; P(z|x)]

Next to a Light
[Figures: measurement z; P(z|x)]

Elsewhere
[Figures: measurement z; P(z|x)]

Global Localization Using Vision

Recovery from Failure

Localization for AIBO robots

Adaptive Sampling

KLD-sampling
• Idea:
  • Assume we know the true belief.
  • Represent this belief as a multinomial distribution.
  • Determine number of samples such that we can guarantee that, with probability (1 − δ), the KL-distance between the true posterior and the sample-based approximation is less than ε.
• Observation:
  • For fixed δ and ε, number of samples only depends on number k of bins with support:

$$n = \frac{1}{2\varepsilon}\, \chi^2(k-1,\, 1-\delta) \cong \frac{k-1}{2\varepsilon} \left\{ 1 - \frac{2}{9(k-1)} + \sqrt{\frac{2}{9(k-1)}}\; z_{1-\delta} \right\}^3$$
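The right-hand side of the KLD-sampling bound is easy to evaluate numerically. The sketch below assumes that $z_{1-\delta}$ is the upper (1 − δ) quantile of the standard normal distribution. The function name `kld_sample_bound` and the fallback for k < 2, where the formula is undefined, are illustrative choices rather than part of the slides.

```python
import math
from statistics import NormalDist

def kld_sample_bound(k, epsilon, delta):
    """Approximate number of samples needed when k bins have support."""
    if k < 2:
        return 1  # assumption: the formula is undefined for k = 1, so fall back to one sample
    z = NormalDist().inv_cdf(1.0 - delta)  # z_{1-delta}, standard normal quantile
    a = 2.0 / (9.0 * (k - 1))
    n = (k - 1) / (2.0 * epsilon) * (1.0 - a + math.sqrt(a) * z) ** 3
    return math.ceil(n)

few_bins = kld_sample_bound(11, epsilon=0.05, delta=0.01)
many_bins = kld_sample_bound(101, epsilon=0.05, delta=0.01)
```

For fixed ε and δ the bound grows roughly linearly in k, so a belief spread over many bins (for example during global localization) calls for far more samples than a tightly focused one.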

Adaptive Particle Filter Algorithm
1. Algorithm adaptive_particle_filter($S_{t-1}$, $u_{t-1}$, $z_t$, $\Delta$, $\varepsilon$, $\delta$):
2. $S_t = \emptyset,\ \eta = 0,\ n = 0,\ k = 0,\ b = \emptyset$
3. Do (generate new samples)
4.   Sample index $j(n)$ from the discrete distribution given by $w_{t-1}$
5.   Sample $x^n_t$ from $p(x_t \mid x_{t-1}, u_{t-1})$ using $x^{j(n)}_{t-1}$ and $u_{t-1}$
6.   $w^n_t = p(z_t \mid x^n_t)$ (compute importance weight)
7.   $\eta = \eta + w^n_t$ (update normalization factor)
8.   $S_t = S_t \cup \{\langle x^n_t, w^n_t \rangle\}$ (insert)
9.   If ($x^n_t$ falls into an empty bin $b$) (update bins with support)
10.     $k = k + 1$, $b$ = non-empty
11.   $n = n + 1$
12. While ($n < \frac{1}{2\varepsilon}\, \chi^2(k-1,\, 1-\delta)$)
13. For $i = 1 \ldots n$
14.   $w^i_t = w^i_t / \eta$ (normalize weights)

Evaluation

Example Run Sonar

Example Run Laser
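The adaptive_particle_filter listing can be sketched for a one-dimensional state as follows. Several pieces are assumptions added for illustration: the Gaussian motion and sensor models, the approximate form of the chi-square bound, and the `n_min` / `n_max` safeguards, which keep the loop from stopping while only one bin has support and from running without limit.

```python
import itertools
import math
import random
from statistics import NormalDist

def kld_bound(k, epsilon, delta):
    """Approximate (1 / 2 epsilon) * chi-square quantile for k bins with support."""
    if k < 2:
        return 1.0  # assumption: bound undefined for a single bin
    z = NormalDist().inv_cdf(1.0 - delta)
    a = 2.0 / (9.0 * (k - 1))
    return (k - 1) / (2.0 * epsilon) * (1.0 - a + math.sqrt(a) * z) ** 3

def adaptive_particle_filter(particles, weights, u, z, bin_size, epsilon, delta, rng,
                             motion_sigma=0.2, sensor_sigma=0.5, n_min=10, n_max=10000):
    cum = list(itertools.accumulate(weights))  # cumulative weights for fast index sampling
    new_particles, new_weights = [], []
    eta, n, k = 0.0, 0, 0
    bins = set()                               # bins with support
    while True:
        j = rng.choices(range(len(particles)), cum_weights=cum, k=1)[0]
        x = particles[j] + u + rng.gauss(0.0, motion_sigma)  # assumed motion model
        w = math.exp(-0.5 * ((z - x) / sensor_sigma) ** 2)   # assumed sensor model
        eta += w
        new_particles.append(x)
        new_weights.append(w)
        b = math.floor(x / bin_size)
        if b not in bins:                      # x falls into an empty bin
            bins.add(b)
            k += 1
        n += 1
        if n >= n_max or (n >= n_min and n >= kld_bound(k, epsilon, delta)):
            break
    return new_particles, [w / eta for w in new_weights]

rng = random.Random(0)
narrow = [rng.gauss(0.0, 0.1) for _ in range(1000)]    # focused belief
wide = [rng.uniform(-10.0, 10.0) for _ in range(1000)]  # spread-out belief
uniform = [1.0 / 1000] * 1000
narrow_out, narrow_w = adaptive_particle_filter(narrow, uniform, 0.0, 0.0, 0.5, 0.05, 0.01, rng)
wide_out, wide_w = adaptive_particle_filter(wide, uniform, 0.0, 0.0, 0.5, 0.05, 0.01, rng)
```

The focused belief touches only a handful of bins and the loop stops early, while the spread-out belief keeps discovering new bins and ends up with several times as many samples.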

Localization Algorithms - Comparison

| | Kalman filter | Multi-hypothesis tracking | Topological maps | Grid-based (fixed/variable) | Particle filter |
|---|---|---|---|---|---|
| Sensors | Gaussian | Gaussian | Features | Non-Gaussian | Non-Gaussian |
| Posterior | Gaussian | Multi-modal | Piecewise constant | Piecewise constant | Samples |
| Efficiency (memory) | ++ | ++ | ++ | -/o | +/++ |
| Efficiency (time) | ++ | ++ | ++ | o/+ | +/++ |
| Implementation | + | o | + | +/o | ++ |
| Accuracy | ++ | ++ | - | +/++ | ++ |
| Robustness | - | + | + | ++ | +/++ |
| Global localization | No | Yes | Yes | Yes | Yes |
