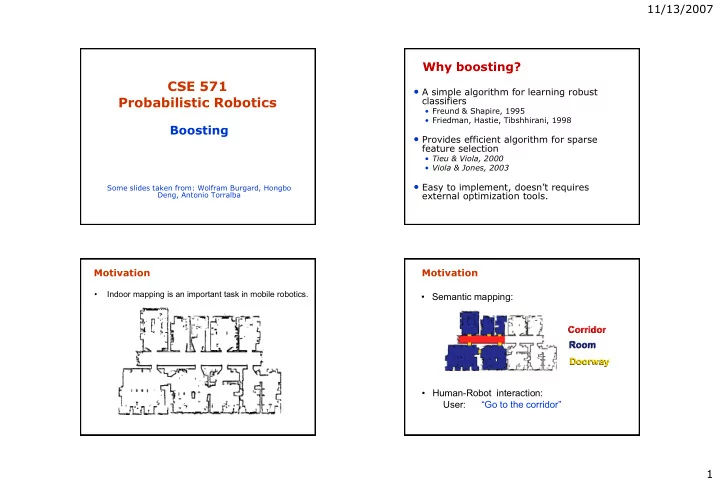

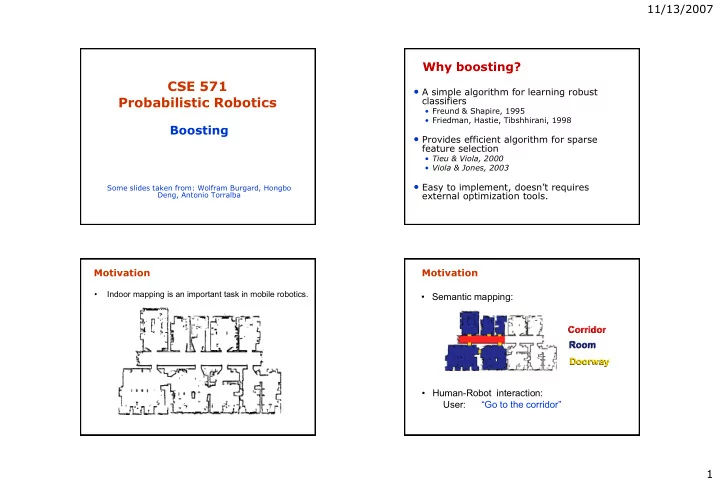

11/13/2007

CSE 571 Probabilistic Robotics
Boosting
Some slides taken from: Wolfram Burgard, Hongbo Deng, Antonio Torralba

Why boosting?
• A simple algorithm for learning robust classifiers
  • Freund & Schapire, 1995
  • Friedman, Hastie, Tibshirani, 1998
• Provides an efficient algorithm for sparse feature selection
  • Tieu & Viola, 2000
  • Viola & Jones, 2003
• Easy to implement; doesn't require external optimization tools

Motivation
• Indoor mapping is an important task in mobile robotics.
• Semantic mapping: labeling places as Corridor, Room, or Doorway
• Human-robot interaction: User: "Go to the corridor"

Goal
• Classify the position of the robot using a single observation: a 360° laser range scan.
[Figure: robot positions labeled Room]

Observations
[Figures: example 360° laser scans taken in a Room, a Doorway, and a Corridor]

Simple Features
Each observation is a scan of N beams with lengths d_i. Examples of simple features f:
• Gap: d > θ; f = number of gaps
• Minimum: f = d, the length of the shortest beam
• Average beam length: f = (1/N) Σ d_i
• f = area of the polygon formed by the scan
• f = perimeter of that polygon

Combining Features
• Observation: There are many simple features f_i.
• Problem: Each single feature f_i gives poor classification rates.
• Solution: Combine multiple simple features to form a final classifier using AdaBoost.

Idea
[Figure only]

Example
[Figure only]
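The simple features listed above can all be computed directly from the beam lengths. The following is a minimal Python sketch, not the implementation used in the slides. It assumes the scan is a list of N ranges d_i at equally spaced angles over 360°. It also assumes that a gap means two consecutive beams whose lengths differ by more than θ (an assumed reading of "Gap = d > θ"). The function name `scan_features`, its dictionary keys, and the default `gap_threshold=0.5` are hypothetical.

```python
import math
from typing import Dict, List


def scan_features(ranges: List[float], gap_threshold: float = 0.5) -> Dict[str, float]:
    """Compute a few simple features of a 360-degree laser scan.

    `ranges` holds N beam lengths d_i at equally spaced angles covering the
    full circle; `gap_threshold` plays the role of theta (hypothetical default).
    """
    n = len(ranges)
    if n < 3:
        raise ValueError("need at least 3 beams to form a polygon")

    # Beam end points in the robot frame; they form a closed polygon.
    pts = [(d * math.cos(2 * math.pi * i / n), d * math.sin(2 * math.pi * i / n))
           for i, d in enumerate(ranges)]

    # Assumed gap definition: consecutive beams (wrapping around) whose
    # lengths differ by more than theta.
    gaps = sum(1 for i in range(n)
               if abs(ranges[i] - ranges[(i + 1) % n]) > gap_threshold)

    # Shoelace formula for the area; perimeter = sum of edge lengths.
    twice_area = 0.0
    perimeter = 0.0
    for i in range(n):
        x0, y0 = pts[i]
        x1, y1 = pts[(i + 1) % n]
        twice_area += x0 * y1 - x1 * y0
        perimeter += math.hypot(x1 - x0, y1 - y0)

    return {
        "num_gaps": float(gaps),
        "min_range": min(ranges),
        "mean_range": sum(ranges) / n,
        "area": abs(twice_area) / 2.0,
        "perimeter": perimeter,
    }
```

For example, `scan_features([1.0, 1.0, 3.0, 1.0])["num_gaps"]` is `2.0`, because the long third beam differs from both of its neighbours by 2 > 0.5. Each value in the returned dictionary is one candidate weak feature f_i for AdaBoost.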

Example (continued)
[Figures only]

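Example (continued)
[Figures only]

AdaBoost Algorithm
[Figure only]

Sequential Optimization
• Original motivation in learning theory
• Can be derived as sequential optimization of the exponential error function
  $$E = \sum_{n=1}^{N} \exp\{-t_n f_m(\mathbf{x}_n)\}$$
  with
  $$f_m(\mathbf{x}) = \frac{1}{2} \sum_{l=1}^{m} \alpha_l y_l(\mathbf{x})$$
  where t_n ∈ {−1, +1} are the target labels, y_l(x) ∈ {−1, +1} are the weak classifiers, and α_l are their weights.
• Fixing the first m−1 classifiers and minimizing with respect to the m-th classifier gives the AdaBoost equations!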
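The following is a minimal Python sketch of discrete AdaBoost. Decision stumps serve as the weak classifiers y_m(x) ∈ {−1, +1}. The sketch uses the standard updates that follow from minimizing E one classifier at a time:

- weighted error ε_m
- weight α_m = ln((1 − ε_m)/ε_m)
- reweighting w_n ← w_n exp{α_m I(y_m(x_n) ≠ t_n)}
- final decision sign(Σ_m α_m y_m(x))

The stump learner, the function names, and breaking ties toward +1 are assumptions made for illustration. This is not the exact algorithm on the slide.

```python
import math
from typing import List, Sequence, Tuple

# A decision stump: (feature index, threshold, polarity); outputs +1 or -1.
Stump = Tuple[int, float, int]


def stump_predict(stump: Stump, x: Sequence[float]) -> int:
    j, thr, pol = stump
    return pol if x[j] > thr else -pol


def fit_stump(X: List[Sequence[float]], t: List[int], w: List[float]) -> Tuple[Stump, float]:
    """Exhaustively choose the stump with the lowest weighted error."""
    best: Stump = (0, 0.0, 1)
    best_err = float("inf")
    for j in range(len(X[0])):
        values = sorted(set(x[j] for x in X))
        # Candidate thresholds: one below every value, then each observed value.
        for thr in [values[0] - 1.0] + values:
            for pol in (1, -1):
                err = sum(wn for xn, tn, wn in zip(X, t, w)
                          if stump_predict((j, thr, pol), xn) != tn)
                if err < best_err:
                    best, best_err = (j, float(thr), pol), err
    return best, best_err


def adaboost(X: List[Sequence[float]], t: List[int], rounds: int = 10) -> List[Tuple[float, Stump]]:
    """Train discrete AdaBoost; every label t_n must be -1 or +1."""
    n = len(X)
    w = [1.0 / n] * n  # uniform initial weights
    model: List[Tuple[float, Stump]] = []
    for _ in range(rounds):
        stump, err = fit_stump(X, t, w)
        eps = err / sum(w)  # weighted error rate epsilon_m
        eps_safe = min(max(eps, 1e-10), 1 - 1e-10)  # keep the log finite
        alpha = math.log((1 - eps_safe) / eps_safe)
        model.append((alpha, stump))
        if eps == 0.0:
            break  # perfect weak classifier: nothing left to reweight
        # Multiply the weights of misclassified points by exp(alpha), then renormalize.
        w = [wn * math.exp(alpha) if stump_predict(stump, xn) != tn else wn
             for xn, tn, wn in zip(X, t, w)]
        total = sum(w)
        w = [wn / total for wn in w]
    return model


def adaboost_predict(model: List[Tuple[float, Stump]], x: Sequence[float]) -> int:
    """Sign of the weighted vote sum_m alpha_m * y_m(x); ties go to +1."""
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in model)
    return 1 if score >= 0 else -1
```

On the 1-D points [1], [2], [3] with labels +1, −1, +1, no single stump is correct on all three. After three rounds, however, the weighted vote of three stumps classifies all three points correctly. In the place-classification setting, each x would be a vector of simple scan features, and t_n would mark one class against the others.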

Exponential loss
[Figure: loss as a function of the margin (from −1.5 to 2) for misclassification error, squared error, and exponential loss]

Further Observations
• Test error often decreases even when the training error is already zero
• Many other boosting algorithms are possible by changing the error function
  • Regression
  • Multiclass

Multiple Classes
• Sequential AdaBoost for K different classes: a chain of K−1 binary classifiers. Binary Classifier k assigns Class k when H(x) = 1 and passes the observation to the next classifier when H(x) = 0. If Binary Classifier K−1 also outputs H(x) = 0, the observation is assigned Class K.
[Figure: features → Binary Classifier 1 → . . . → Binary Classifier K−1, with H(x)=1 branching to Class 1, . . ., Class K−1]

Summary of the Approach (1)
• Training: learn a strong classifier from previously labeled observations (Room, Corridor, Doorway): features → AdaBoost → Multi-class Classifier
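The Multiple Classes slide chains K−1 binary classifiers. The following is a minimal Python sketch of that decision chain. It assumes each binary classifier is any callable that returns 1 or 0, standing in for a trained binary AdaBoost classifier H(x). The class order, the feature names, and the thresholds inside `is_doorway` and `is_corridor` are hypothetical and were not learned from data.

```python
from typing import Callable, Dict, Sequence, TypeVar

T = TypeVar("T")


def sequential_classify(x: T,
                        binary_classifiers: Sequence[Callable[[T], int]],
                        labels: Sequence[str]) -> str:
    """Run K-1 binary classifiers in order; H_k(x) == 1 assigns labels[k].

    If every classifier answers 0, the observation gets the last label (class K).
    """
    if len(labels) != len(binary_classifiers) + 1:
        raise ValueError("need exactly one more label than binary classifiers")
    for h, label in zip(binary_classifiers, labels):
        if h(x) == 1:
            return label
    return labels[-1]


# Hypothetical stand-ins for trained binary AdaBoost classifiers that read
# a dict of scan features; the thresholds are made up for illustration.
def is_doorway(f: Dict[str, float]) -> int:
    return int(f["min_range"] < 0.5)


def is_corridor(f: Dict[str, float]) -> int:
    return int(f["mean_range"] > 3.0)


labels = ["Doorway", "Corridor", "Room"]
print(sequential_classify({"min_range": 1.0, "mean_range": 4.2},
                          [is_doorway, is_corridor], labels))  # prints Corridor
```

An observation stops at the first classifier that answers 1, so the order of the chain matters. This sketch simply follows the order given in `labels`.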

Summary of the Approach (2)
• Test: use the classifier to classify new observations: new observation → features → Multi-class Classifier → Corridor

Experiments: Building 079, Uni. Freiburg
• Training (top): 16,045 examples
• Test (bottom): 18,726 examples
• Classification: 93.94%
[Maps labeled Corridor, Room, Doorway]

Experiments: Building 101, Uni. Freiburg
• Training (left): 13,906 examples
• Test (right): 10,445 examples
• Classification: 89.52%
[Maps labeled Corridor, Room, Doorway, Hallway]

Application to a New Environment
[Figure: training map and the Intel Research Lab in Seattle, labeled Corridor, Room, Doorway]

Application to a New Environment (continued)
[Figure: training map and the Intel Research Lab in Seattle, labeled Corridor, Room, Doorway]

Sequential AdaBoost vs AdaBoost.M2
• Sequential AdaBoost classification: 92.10%
• AdaBoost.M2 classification: 91.83%
[Maps labeled Corridor, Room, Doorway]

Boosting
• Extremely flexible framework
• Handles high-dimensional continuous data
• Easy to implement
• Limitation:
  • Only models local classification problems
