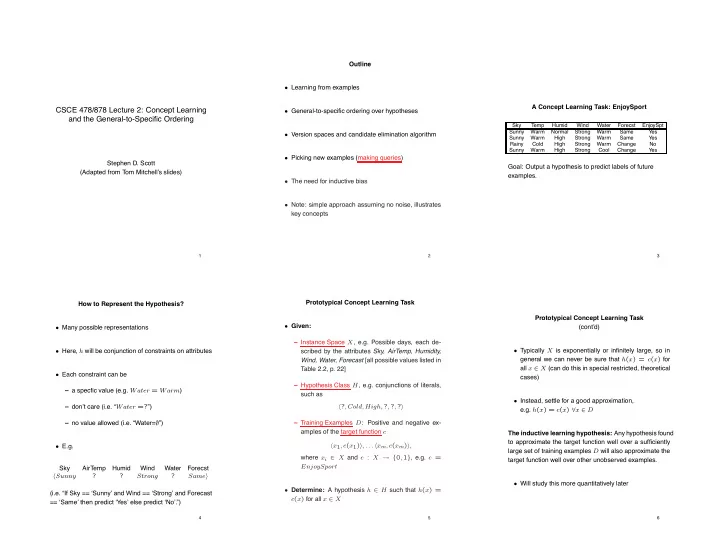

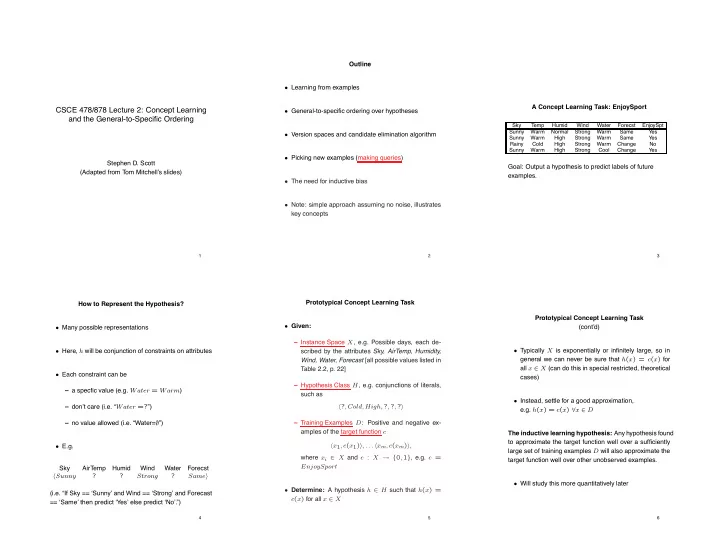

CSCE 478/878 Lecture 2: Concept Learning and the General-to-Specific Ordering

Stephen D. Scott (Adapted from Tom Mitchell's slides)

Outline

- Learning from examples
- General-to-specific ordering over hypotheses
- Version spaces and candidate elimination algorithm
- Picking new examples (making queries)
- The need for inductive bias
- Note: this is a simple approach that assumes no noise; it illustrates the key concepts

A Concept Learning Task: EnjoySport

| Sky | AirTemp | Humidity | Wind | Water | Forecast | EnjoySport |
|-------|------|--------|--------|------|--------|-----|
| Sunny | Warm | Normal | Strong | Warm | Same | Yes |
| Sunny | Warm | High | Strong | Warm | Same | Yes |
| Rainy | Cold | High | Strong | Warm | Change | No |
| Sunny | Warm | High | Strong | Cool | Change | Yes |

Goal: Output a hypothesis to predict labels of future examples.

Prototypical Concept Learning Task

- Given:
  - Instance space $X$, e.g. possible days, each described by the attributes Sky, AirTemp, Humidity, Wind, Water, Forecast [all possible values listed in Table 2.2, p. 22]
  - Hypothesis class $H$, e.g. conjunctions of literals, such as ⟨?, Cold, High, ?, ?, ?⟩
  - Training examples $D$: positive and negative examples of the target function $c$, $\langle x_1, c(x_1)\rangle, \ldots, \langle x_m, c(x_m)\rangle$, where $x_i \in X$ and $c : X \to \{0, 1\}$, e.g. $c$ = EnjoySport
- Determine: a hypothesis $h \in H$ such that $h(x) = c(x)$ for all $x \in X$

How to Represent the Hypothesis?

- Many representations are possible
- Here, $h$ will be a conjunction of constraints on attributes
- Each constraint can be
  - a specific value (e.g. "Water = Warm")
  - don't care (i.e. "Water = ?")
  - no value allowed (i.e. "Water = ∅")
- E.g., with attributes in the order Sky, AirTemp, Humidity, Wind, Water, Forecast:

  ⟨Sunny, ?, ?, Strong, ?, Same⟩

  (i.e. "If Sky == 'Sunny' and Wind == 'Strong' and Forecast == 'Same' then predict 'Yes' else predict 'No'.")

Prototypical Concept Learning Task (cont'd)

- Typically $X$ is exponentially or infinitely large, so in general we can never be sure that $h(x) = c(x)$ for all $x \in X$ (we can do this only in special, restricted, theoretical cases)
- Instead, settle for a good approximation, e.g. $h(x) = c(x)\ \forall x \in D$

The inductive learning hypothesis: Any hypothesis found to approximate the target function well over a sufficiently large set of training examples $D$ will also approximate the target function well over other unobserved examples.

- We will study this more quantitatively later
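As a minimal sketch of the conjunctive representation above, the Python below encodes a hypothesis as a tuple in the attribute order Sky, AirTemp, Humidity, Wind, Water, Forecast. The encoding is an assumption for illustration only: the string "?" stands for don't care, `None` stands for ∅, and the function name `h_of_x` is hypothetical. It evaluates the example hypothesis ⟨Sunny, ?, ?, Strong, ?, Same⟩ on the four EnjoySport days and checks whether $h(x) = c(x)$ for every $x \in D$.

```python
from typing import Optional, Tuple

# Assumed encoding (not from the slides): "?" = don't care, None = ∅.
ANY = "?"
EMPTY = None

Instance = Tuple[str, ...]
Hypothesis = Tuple[Optional[str], ...]


def h_of_x(h: Hypothesis, x: Instance) -> int:
    """Return 1 if x satisfies every attribute constraint in h, else 0."""
    for constraint, value in zip(h, x):
        if constraint is EMPTY:          # ∅: no value is allowed
            return 0
        if constraint != ANY and constraint != value:
            return 0                     # specific value that x does not have
    return 1


# EnjoySport training examples D as (x, c(x)) pairs, 1 = Yes, 0 = No.
D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), 1),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), 1),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), 0),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), 1),
]

# "If Sky == Sunny and Wind == Strong and Forecast == Same then Yes else No"
h = ("Sunny", ANY, ANY, "Strong", ANY, "Same")

predictions = [h_of_x(h, x) for x, _ in D]
agrees_on_D = all(h_of_x(h, x) == c for x, c in D)
print(predictions, agrees_on_D)  # [1, 1, 0, 0] False
```

This particular hypothesis misclassifies the fourth day (Forecast = Change), so it does not satisfy $h(x) = c(x)$ on all of $D$.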

The More-General-Than Relation

[Figure: instances X and hypotheses H, with hypotheses ordered from specific to general]

- $x_1$ = ⟨Sunny, Warm, High, Strong, Cool, Same⟩
- $x_2$ = ⟨Sunny, Warm, High, Light, Warm, Same⟩
- $h_1$ = ⟨Sunny, ?, ?, Strong, ?, ?⟩
- $h_2$ = ⟨Sunny, ?, ?, ?, ?, ?⟩
- $h_3$ = ⟨Sunny, ?, ?, ?, Cool, ?⟩

$h_j \ge_g h_k$ iff $(h_k(x) = 1) \Rightarrow (h_j(x) = 1)\ \forall x \in X$

$h_2 \ge_g h_1$, $h_2 \ge_g h_3$, $h_1 \not\ge_g h_3$, $h_3 \not\ge_g h_1$

- So $\ge_g$ induces a partial order on hyps from $H$
- Can define $>_g$ similarly

Find-S Algorithm (Find Maximally Specific Hypothesis)

1. Initialize $h$ to ⟨∅, ∅, ∅, ∅, ∅, ∅⟩, the most specific hypothesis in $H$
2. For each positive training instance $x$
   - For each attribute constraint $a_i$ in $h$
     - If the constraint $a_i$ in $h$ is satisfied by $x$, then do nothing
     - Else replace $a_i$ in $h$ by the next more general constraint that is satisfied by $x$
3. Output hypothesis $h$

Why can we ignore negative examples?

Hypothesis Space Search by Find-S

[Figure: instances X and hypotheses H, ordered from specific to general, showing the hypotheses visited by Find-S]

- $h_0$ = ⟨∅, ∅, ∅, ∅, ∅, ∅⟩
- $x_1$ = ⟨Sunny, Warm, Normal, Strong, Warm, Same⟩ (+), $h_1$ = ⟨Sunny, Warm, Normal, Strong, Warm, Same⟩
- $x_2$ = ⟨Sunny, Warm, High, Strong, Warm, Same⟩ (+), $h_2$ = ⟨Sunny, Warm, ?, Strong, Warm, Same⟩
- $x_3$ = ⟨Rainy, Cold, High, Strong, Warm, Change⟩ (−), $h_3$ = ⟨Sunny, Warm, ?, Strong, Warm, Same⟩
- $x_4$ = ⟨Sunny, Warm, High, Strong, Cool, Change⟩ (+), $h_4$ = ⟨Sunny, Warm, ?, Strong, ?, ?⟩

Complaints about Find-S

- Assuming there exists some function in $H$ consistent with $D$, Find-S will find one
- But Find-S cannot detect whether there are other consistent hypotheses, or how many there are. In other words, if $c \in H$, has Find-S found it?
- Is a maximally specific hypothesis really the best one?
- Depending on $H$, there might be several maximally specific hyps, and Find-S doesn't backtrack
- Not robust against errors or noise, ignores negative examples
- Can address many of these concerns by tracking the entire set of consistent hyps.

The List-Then-Eliminate Algorithm

1. VersionSpace ← a list containing every hypothesis in $H$
2. For each training example $\langle x, c(x)\rangle$
   - Remove from VersionSpace any hypothesis $h$ for which $h(x) \ne c(x)$
3. Output the list of hypotheses in VersionSpace

- Problem: Requires $\Omega(|H|)$ time to enumerate all hyps.

Version Spaces

- A hypothesis $h$ is consistent with a set of training examples $D$ of target concept $c$ if and only if $h(x) = c(x)$ for each training example $\langle x, c(x)\rangle$ in $D$:

  $\mathrm{Consistent}(h, D) \equiv (\forall \langle x, c(x)\rangle \in D)\ h(x) = c(x)$

- The version space, $VS_{H,D}$, with respect to hypothesis space $H$ and training examples $D$, is the subset of hypotheses from $H$ consistent with all training examples in $D$:

  $VS_{H,D} \equiv \{h \in H : \mathrm{Consistent}(h, D)\}$
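When $X$ is small enough to enumerate, the more-general-than relation can be checked straight from its definition. The sketch below assumes the attribute values in `DOMAINS` (a stand-in for Table 2.2, not copied from it) and a hypothetical encoding ("?" for don't care, `None` for ∅); all function names are illustrative. It compares the brute-force definition with an attribute-by-attribute shortcut for conjunctions and reproduces the relations among $h_1$, $h_2$, $h_3$ stated on the More-General-Than slide.

```python
from itertools import product

ANY, EMPTY = "?", None   # assumed encoding: "?" = don't care, None = ∅

# Assumed attribute values, in the order Sky, AirTemp, Humidity, Wind, Water, Forecast.
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"),
    ("Warm", "Cold"),
    ("Normal", "High"),
    ("Strong", "Weak"),
    ("Warm", "Cool"),
    ("Same", "Change"),
]
X = list(product(*DOMAINS))          # the whole (small) instance space


def covers(h, x) -> bool:
    """h(x) = 1 iff x satisfies every constraint in h."""
    return all(c is not EMPTY and (c == ANY or c == v) for c, v in zip(h, x))


def semantic_ge(hj, hk) -> bool:
    """Definition from the slide: every x with h_k(x) = 1 also has h_j(x) = 1."""
    return all(covers(hj, x) for x in X if covers(hk, x))


def more_general_or_equal(hj, hk) -> bool:
    """Attribute-wise shortcut for conjunctions (each attribute has >= 2 values)."""
    if EMPTY in hk:                  # h_k covers nothing, so h_j >=_g h_k
        return True
    return all(a == ANY or a == b for a, b in zip(hj, hk))


h1 = ("Sunny", ANY, ANY, "Strong", ANY, ANY)
h2 = ("Sunny", ANY, ANY, ANY, ANY, ANY)
h3 = ("Sunny", ANY, ANY, ANY, "Cool", ANY)

relations = {
    "h2>=h1": more_general_or_equal(h2, h1),
    "h2>=h3": more_general_or_equal(h2, h3),
    "h1>=h3": more_general_or_equal(h1, h3),
    "h3>=h1": more_general_or_equal(h3, h1),
}
hs = (h1, h2, h3)
agree = all(more_general_or_equal(a, b) == semantic_ge(a, b) for a in hs for b in hs)
print(relations, agree)
```

The shortcut never touches $X$, which matters because $X$ is typically exponentially large; it relies on every attribute having at least two possible values.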

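The Find-S steps and the trace on the "Hypothesis Space Search by Find-S" slide can be reproduced with the short Python sketch below. It assumes the same hypothetical encoding ("?" for don't care, `None` for ∅), and the function name `find_s` is illustrative. In this attribute language the "next more general constraint" goes ∅ → the observed value → ?, so each constraint is generalized at most twice.

```python
ANY, EMPTY = "?", None   # assumed encoding: "?" = don't care, None = ∅


def find_s(examples, n_attrs=6):
    """Find-S for conjunctive hypotheses; returns the trace h_0, h_1, ..., h_m."""
    h = [EMPTY] * n_attrs                 # step 1: most specific hypothesis
    trace = [tuple(h)]
    for x, label in examples:
        if label == 1:                    # step 2: only positive instances
            for i, value in enumerate(x):
                if h[i] is EMPTY:
                    h[i] = value          # ∅ -> the observed value
                elif h[i] != ANY and h[i] != value:
                    h[i] = ANY            # conflicting value -> "?"
                # otherwise a_i is already satisfied by x: do nothing
        trace.append(tuple(h))            # negatives leave h unchanged
    return trace                          # step 3: trace[-1] is the output h


# EnjoySport training examples as (x, c(x)) pairs, 1 = Yes, 0 = No.
D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), 1),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), 1),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), 0),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), 1),
]

trace = find_s(D)
for step, h in enumerate(trace):
    print(f"h{step} = {h}")
```

Negative examples leave $h$ unchanged, which is why $h_3 = h_2$ in the trace. Under the slides' no-noise assumption and if $c \in H$, $h$ never becomes more general than $c$, so it cannot cover a negative example; this is one way to see why Find-S can skip them.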
Representing Version Spaces

- The General boundary, $G$, of version space $VS_{H,D}$ is the set of its maximally general members
- The Specific boundary, $S$, of version space $VS_{H,D}$ is the set of its maximally specific members
- Every member of the version space lies between these boundaries:

  $VS_{H,D} = \{h \in H : (\exists s \in S)(\exists g \in G)(g \ge_g h \ge_g s)\}$

Candidate Elimination Algorithm

$G$ ← set of maximally general hypotheses in $H$

$S$ ← set of maximally specific hypotheses in $H$

For each training example $d \in D$, do

- If $d$ is a positive example
  - Remove from $G$ any hyp. inconsistent with $d$
  - For each hypothesis $s \in S$ that is not consistent with $d$
    - Remove $s$ from $S$
    - Add to $S$ all minimal generalizations $h$ of $s$ such that
      1. $h$ is consistent with $d$, and
      2. some member of $G$ is more general than $h$
    - Remove from $S$ any hypothesis that is more general than another hypothesis in $S$

Example Version Space

S: {⟨Sunny, Warm, ?, Strong, ?, ?⟩}

Between S and G: ⟨Sunny, ?, ?, Strong, ?, ?⟩, ⟨Sunny, Warm, ?, ?, ?, ?⟩, ⟨?, Warm, ?, Strong, ?, ?⟩

G: {⟨Sunny, ?, ?, ?, ?, ?⟩, ⟨?, Warm, ?, ?, ?, ?⟩}

Candidate Elimination Algorithm (cont'd)

- If $d$ is a negative example
  - Remove from $S$ any hyp. inconsistent with $d$
  - For each hypothesis $g \in G$ that is not consistent with $d$
    - Remove $g$ from $G$
    - Add to $G$ all minimal specializations $h$ of $g$ such that
      1. $h$ is consistent with $d$, and
      2. some member of $S$ is more specific than $h$
    - Remove from $G$ any hypothesis that is less general than another hypothesis in $G$

Example Trace

$S_0$: {⟨∅, ∅, ∅, ∅, ∅, ∅⟩}

$G_0$: {⟨?, ?, ?, ?, ?, ?⟩}

Example Trace (cont'd)

$S_0$: {⟨∅, ∅, ∅, ∅, ∅, ∅⟩}

$S_1$: {⟨Sunny, Warm, Normal, Strong, Warm, Same⟩}

$S_2$: {⟨Sunny, Warm, ?, Strong, Warm, Same⟩}

$G_0$, $G_1$, $G_2$: {⟨?, ?, ?, ?, ?, ?⟩}

Training examples:

1. ⟨Sunny, Warm, Normal, Strong, Warm, Same⟩, EnjoySport = Yes
2. ⟨Sunny, Warm, High, Strong, Warm, Same⟩, EnjoySport = Yes
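A compact Python sketch of Candidate Elimination for conjunctive hypotheses follows. It assumes the attribute values in `DOMAINS` (a stand-in for Table 2.2) and the hypothetical encoding "?" = don't care, `None` = ∅; all function names are illustrative. For conjunctions, the minimal generalization of $s$ that covers a positive $x$ is unique, and the minimal specializations of $g$ that exclude a negative $x$ replace one "?" with any value other than $x$'s value for that attribute. Running all four EnjoySport examples should end with the $S$ and $G$ shown on the Example Version Space slide, and the first steps match the Example Trace slides.

```python
ANY, EMPTY = "?", None   # assumed encoding: "?" = don't care, None = ∅

# Assumed attribute values, in the order Sky, AirTemp, Humidity, Wind, Water, Forecast.
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"),
    ("Warm", "Cold"),
    ("Normal", "High"),
    ("Strong", "Weak"),
    ("Warm", "Cool"),
    ("Same", "Change"),
]


def covers(h, x):
    """h(x) = 1 iff x satisfies every constraint in h."""
    return all(c is not EMPTY and (c == ANY or c == v) for c, v in zip(h, x))


def more_general_or_equal(hj, hk):
    """h_j >=_g h_k for conjunctions (each attribute has >= 2 values)."""
    if EMPTY in hk:
        return True
    return all(a == ANY or a == b for a, b in zip(hj, hk))


def min_generalization(s, x):
    """The unique minimal generalization of conjunction s that covers x."""
    return tuple(v if c is EMPTY else (c if c in (ANY, v) else ANY)
                 for c, v in zip(s, x))


def min_specializations(g, x):
    """Minimal specializations of g excluding x: fix one '?' to a value != x's."""
    return [g[:i] + (value,) + g[i + 1:]
            for i, c in enumerate(g) if c == ANY
            for value in DOMAINS[i] if value != x[i]]


def candidate_elimination(examples, n_attrs=6):
    G = {(ANY,) * n_attrs}       # maximally general hypothesis
    S = {(EMPTY,) * n_attrs}     # maximally specific hypothesis
    for x, label in examples:
        if label == 1:                                   # positive example
            G = {g for g in G if covers(g, x)}
            new_S = set()
            for s in S:
                if covers(s, x):
                    new_S.add(s)
                    continue
                h = min_generalization(s, x)
                if any(more_general_or_equal(g, h) for g in G):
                    new_S.add(h)
            # drop members more general than another member of S
            S = {s for s in new_S
                 if not any(t != s and more_general_or_equal(s, t) for t in new_S)}
        else:                                            # negative example
            S = {s for s in S if not covers(s, x)}
            new_G = set()
            for g in G:
                if not covers(g, x):
                    new_G.add(g)
                    continue
                for h in min_specializations(g, x):
                    if any(more_general_or_equal(h, s) for s in S):
                        new_G.add(h)
            # drop members less general than another member of G
            G = {g for g in new_G
                 if not any(t != g and more_general_or_equal(t, g) for t in new_G)}
    return S, G


D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), 1),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), 1),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), 0),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), 1),
]

for k in range(len(D) + 1):          # boundaries after the first k examples
    S_k, G_k = candidate_elimination(D[:k])
    print(f"S{k}: {S_k}\nG{k}: {G_k}")

S, G = candidate_elimination(D)
```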

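For a hypothesis space this small, List-Then-Eliminate is feasible and gives an independent check of the boundaries on the Example Version Space slide. The sketch below enumerates every conjunction of specific values and "?" over the assumed `DOMAINS` (a stand-in for Table 2.2), plus a single all-∅ hypothesis; any hypothesis containing ∅ labels every instance negative, so one representative suffices. It then keeps the hypotheses consistent with $D$ and confirms that they are exactly the members of $H$ lying between the slide's $S$ and $G$. The encoding and function names are hypothetical.

```python
from itertools import product

ANY, EMPTY = "?", None   # assumed encoding: "?" = don't care, None = ∅

# Assumed attribute values, in the order Sky, AirTemp, Humidity, Wind, Water, Forecast.
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"),
    ("Warm", "Cold"),
    ("Normal", "High"),
    ("Strong", "Weak"),
    ("Warm", "Cool"),
    ("Same", "Change"),
]

D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), 1),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), 1),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), 0),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), 1),
]


def covers(h, x):
    """h(x) = 1 iff x satisfies every constraint in h."""
    return all(c is not EMPTY and (c == ANY or c == v) for c, v in zip(h, x))


def more_general_or_equal(hj, hk):
    """h_j >=_g h_k for conjunctions (each attribute has >= 2 values)."""
    if EMPTY in hk:
        return True
    return all(a == ANY or a == b for a, b in zip(hj, hk))


def enumerate_H():
    """All value/'?' conjunctions plus one all-∅ hypothesis."""
    hyps = list(product(*[(ANY,) + values for values in DOMAINS]))
    hyps.append((EMPTY,) * len(DOMAINS))
    return hyps


def list_then_eliminate(H, examples):
    version_space = list(H)                           # step 1
    for x, label in examples:                         # step 2
        version_space = [h for h in version_space if covers(h, x) == (label == 1)]
    return version_space                              # step 3


H = enumerate_H()
VS = list_then_eliminate(H, D)

# Boundaries from the Example Version Space slide.
S = [("Sunny", "Warm", ANY, "Strong", ANY, ANY)]
G = [("Sunny", ANY, ANY, ANY, ANY, ANY), (ANY, "Warm", ANY, ANY, ANY, ANY)]
between = [h for h in H
           if any(more_general_or_equal(g, h) for g in G)
           and any(more_general_or_equal(h, s) for s in S)]

print(len(H), len(VS), set(VS) == set(between))   # 973 6 True
```

Even here the filter visits all 973 hypotheses for each example, which illustrates the $\Omega(|H|)$ cost noted on the List-Then-Eliminate slide.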