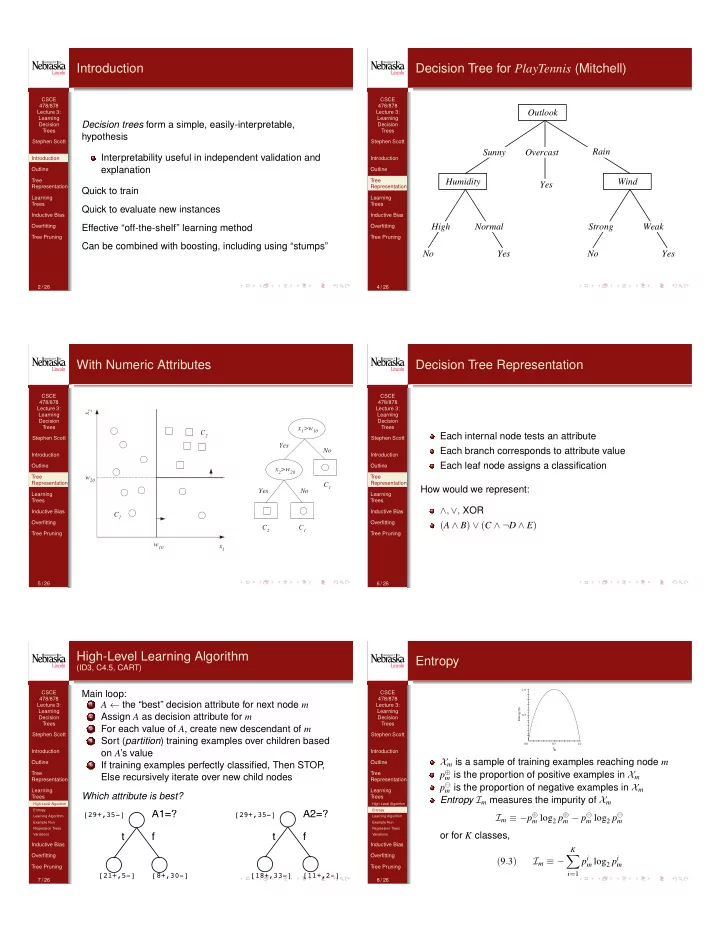

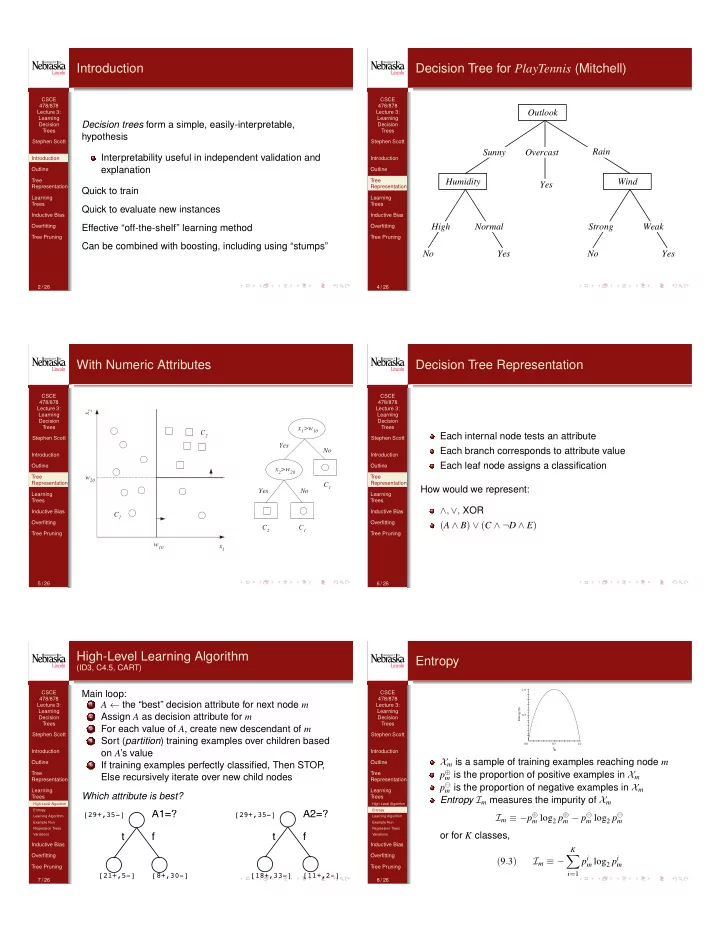

CSCE 478/878 Lecture 3: Learning Decision Trees

Stephen Scott

Outline

- Introduction
- Tree Representation
- Learning Trees
  - High-Level Algorithm
  - Entropy
  - Learning Algorithm
  - Example Run
  - Regression Trees
  - Variations
- Inductive Bias
- Overfitting
- Tree Pruning

Introduction

- Decision trees form a simple, easily interpretable hypothesis
- Interpretability is useful in independent validation and explanation
- Quick to train
- Quick to evaluate new instances
- Effective "off-the-shelf" learning method
- Can be combined with boosting, including using "stumps"

Decision Tree for PlayTennis (Mitchell)

- Outlook
  - Sunny → Humidity
    - High → No
    - Normal → Yes
  - Overcast → Yes
  - Rain → Wind
    - Strong → No
    - Weak → Yes

With Numeric Attributes

[Figure: a decision tree over numeric attributes that tests $x_1 > w_{10}$ and $x_2 > w_{20}$, with Yes/No branches leading to leaves labeled $C_1$ and $C_2$, shown next to the corresponding partition of the $(x_1, x_2)$ plane at the thresholds $w_{10}$ and $w_{20}$.]

Decision Tree Representation

- Each internal node tests an attribute
- Each branch corresponds to an attribute value
- Each leaf node assigns a classification

How would we represent:

- $\wedge$, $\vee$, XOR
- $(A \wedge B) \vee (C \wedge \neg D \wedge E)$

High-Level Learning Algorithm (ID3, C4.5, CART)

Main loop:

1. $A \leftarrow$ the "best" decision attribute for the next node $m$
2. Assign $A$ as the decision attribute for $m$
3. For each value of $A$, create a new descendant of $m$
4. Sort (partition) the training examples over the children based on $A$'s value
5. If the training examples are perfectly classified, then STOP; else recursively iterate over the new child nodes

Which attribute is best?

[Figure: two candidate splits of the same sample [29+,35-]. A1: t → [21+,5-], f → [8+,30-]. A2: t → [18+,33-], f → [11+,2-].]

Entropy

[Figure: Entropy(S) plotted against $p_{\oplus}$, with both axes running from 0.0 to 1.0.]

- $\mathcal{X}_m$ is a sample of training examples reaching node $m$
- $p_m^{\oplus}$ is the proportion of positive examples in $\mathcal{X}_m$
- $p_m^{\ominus}$ is the proportion of negative examples in $\mathcal{X}_m$
- Entropy $I_m$ measures the impurity of $\mathcal{X}_m$

$$I_m \equiv -p_m^{\oplus} \log_2 p_m^{\oplus} - p_m^{\ominus} \log_2 p_m^{\ominus}$$

or, for $K$ classes,

$$I_m \equiv -\sum_{i=1}^{K} p_m^i \log_2 p_m^i \qquad (9.3)$$
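As a minimal sketch of the entropy definition above, the following Python function computes $I_m$ from per-class example counts. The function name `entropy` and the choice to pass counts rather than proportions are assumptions made for illustration; the convention $0 \log_2 0 = 0$ is the usual one and is applied explicitly.

```python
import math


def entropy(class_counts):
    """Entropy in bits of a node, given the number of examples in each class."""
    total = sum(class_counts)
    if total == 0:
        return 0.0  # an empty node is treated as pure (illustrative choice)
    result = 0.0
    for count in class_counts:
        if count > 0:  # treat 0 * log2(0) as 0
            p = count / total
            result -= p * math.log2(p)
    return result


if __name__ == "__main__":
    # [29+,35-] is the sample from the "Which attribute is best?" figure.
    print(round(entropy([29, 35]), 3))
    # A pure node has zero impurity.
    print(entropy([4, 0]))
    # The same function covers the K-class case.
    print(entropy([1, 1, 1, 1]))
```

For two classes the value is largest when the classes are evenly split and falls to zero for a pure node; for a [9+,5-] sample it gives about 0.940.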

Total Impurity

- Now we can look for an attribute $A$ that, when used to partition $\mathcal{X}_m$ by value, produces the purest (lowest-entropy) subsets
  - Weight each subset by its relative size
  - E.g., size-3 subsets should carry less influence than size-300 ones
- Let $N_m = |\mathcal{X}_m|$ = number of instances reaching node $m$
- Let $N_{mj}$ = number of these instances with value $j \in \{1, \ldots, n\}$ for attribute $A$
- Let $N_{mj}^i$ = number of those $N_{mj}$ instances with label $i \in \{1, \ldots, K\}$
- Let $p_{mj}^i = N_{mj}^i / N_{mj}$
- Then the total impurity is

$$I'_m(A) \equiv -\sum_{j=1}^{n} \frac{N_{mj}}{N_m} \sum_{i=1}^{K} p_{mj}^i \log_2 p_{mj}^i \qquad (9.8)$$

Learning Algorithm

[Figure not preserved]

Example Run: Training Examples

| Day | Outlook | Temperature | Humidity | Wind | PlayTennis |
|-----|----------|-------------|----------|--------|------------|
| D1 | Sunny | Hot | High | Weak | No |
| D2 | Sunny | Hot | High | Strong | No |
| D3 | Overcast | Hot | High | Weak | Yes |
| D4 | Rain | Mild | High | Weak | Yes |
| D5 | Rain | Cool | Normal | Weak | Yes |
| D6 | Rain | Cool | Normal | Strong | No |
| D7 | Overcast | Cool | Normal | Strong | Yes |
| D8 | Sunny | Mild | High | Weak | No |
| D9 | Sunny | Cool | Normal | Weak | Yes |
| D10 | Rain | Mild | Normal | Weak | Yes |
| D11 | Sunny | Mild | Normal | Strong | Yes |
| D12 | Overcast | Mild | High | Strong | Yes |
| D13 | Overcast | Hot | Normal | Weak | Yes |
| D14 | Rain | Mild | High | Strong | No |

Example Run: Selecting the First Attribute

Comparing Humidity to Wind, starting from S: [9+,5-] with E = 0.940:

- Humidity
  - High → [3+,4-], E = 0.985
  - Normal → [6+,1-], E = 0.592
- Wind
  - Weak → [6+,2-], E = 0.811
  - Strong → [3+,3-], E = 1.00

$$
\begin{aligned}
I'_m(\text{Humidity}) &= (7/14)\,0.985 + (7/14)\,0.592 = 0.789 \\
I'_m(\text{Wind}) &= (8/14)\,0.811 + (6/14)\,1.000 = 0.892 \\
I'_m(\text{Outlook}) &= (5/14)\,0.971 + (4/14)\,0.0 + (5/14)\,0.971 = 0.694 \\
I'_m(\text{Temp}) &= (4/14)\,1.000 + (6/14)\,0.918 + (4/14)\,0.811 = 0.911
\end{aligned}
$$

Outlook has the lowest total impurity, so it is tested at the root.

Example Run: Selecting the Next Attribute

- {D1, D2, ..., D14} [9+,5-]: Outlook
  - Sunny → {D1,D2,D8,D9,D11} [2+,3-] → ?
  - Overcast → {D3,D7,D12,D13} [4+,0-] → Yes
  - Rain → {D4,D5,D6,D10,D14} [3+,2-] → ?

Which attribute should be tested here?

$\mathcal{X}_m = \{D1, D2, D8, D9, D11\}$

$$
\begin{aligned}
I'_m(\text{Humidity}) &= (3/5)\,0.0 + (2/5)\,0.0 = 0.0 \\
I'_m(\text{Wind}) &= (2/5)\,1.0 + (3/5)\,0.918 = 0.951 \\
I'_m(\text{Temp}) &= (2/5)\,0.0 + (2/5)\,1.0 + (1/5)\,0.0 = 0.400
\end{aligned}
$$

Regression Trees

- A regression tree is similar to a decision tree, but with real-valued labels at the leaves
- To measure impurity at a node $m$, replace entropy with the variance of the labels:

$$E_m \equiv \frac{1}{N_m} \sum_{(x^t, r^t) \in \mathcal{X}_m} (r^t - g_m)^2$$

where $g_m$ is the mean (or median) label in $\mathcal{X}_m$
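The total-impurity figures in the example run can be reproduced with a short Python sketch. The names `ROWS`, `ATTRIBUTES`, `total_impurity`, and `best_attribute` are hypothetical helpers introduced for illustration; the data are the fourteen PlayTennis training examples from the slides, and the selection rule is simply "pick the attribute with the lowest total impurity" as in equation (9.8).

```python
import math
from collections import Counter, defaultdict

ATTRIBUTES = ("Outlook", "Temperature", "Humidity", "Wind")

# (Outlook, Temperature, Humidity, Wind, PlayTennis) for D1..D14
ROWS = [
    ("Sunny", "Hot", "High", "Weak", "No"),
    ("Sunny", "Hot", "High", "Strong", "No"),
    ("Overcast", "Hot", "High", "Weak", "Yes"),
    ("Rain", "Mild", "High", "Weak", "Yes"),
    ("Rain", "Cool", "Normal", "Weak", "Yes"),
    ("Rain", "Cool", "Normal", "Strong", "No"),
    ("Overcast", "Cool", "Normal", "Strong", "Yes"),
    ("Sunny", "Mild", "High", "Weak", "No"),
    ("Sunny", "Cool", "Normal", "Weak", "Yes"),
    ("Rain", "Mild", "Normal", "Weak", "Yes"),
    ("Sunny", "Mild", "Normal", "Strong", "Yes"),
    ("Overcast", "Mild", "High", "Strong", "Yes"),
    ("Overcast", "Hot", "Normal", "Weak", "Yes"),
    ("Rain", "Mild", "High", "Strong", "No"),
]


def entropy(labels):
    """Entropy in bits of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def total_impurity(rows, attr_index):
    """Size-weighted entropy of the subsets produced by splitting on one attribute."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[attr_index]].append(row[-1])  # collect labels per attribute value
    n = len(rows)
    return sum(len(g) / n * entropy(g) for g in groups.values())


def best_attribute(rows):
    """Return the lowest-impurity attribute and the score of every attribute."""
    scores = {name: total_impurity(rows, i) for i, name in enumerate(ATTRIBUTES)}
    return min(scores, key=scores.get), scores


if __name__ == "__main__":
    root, root_scores = best_attribute(ROWS)
    print(root, {k: round(v, 3) for k, v in root_scores.items()})

    sunny = [r for r in ROWS if r[0] == "Sunny"]  # D1, D2, D8, D9, D11
    nxt, sunny_scores = best_attribute(sunny)
    print(nxt, {k: round(v, 3) for k, v in sunny_scores.items()})
```

Under these assumptions the sketch selects Outlook at the root and Humidity for the Sunny branch. The scores agree with the slides to within rounding; the slides round each subset entropy to three decimals before weighting, so the last digit can differ by one.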
