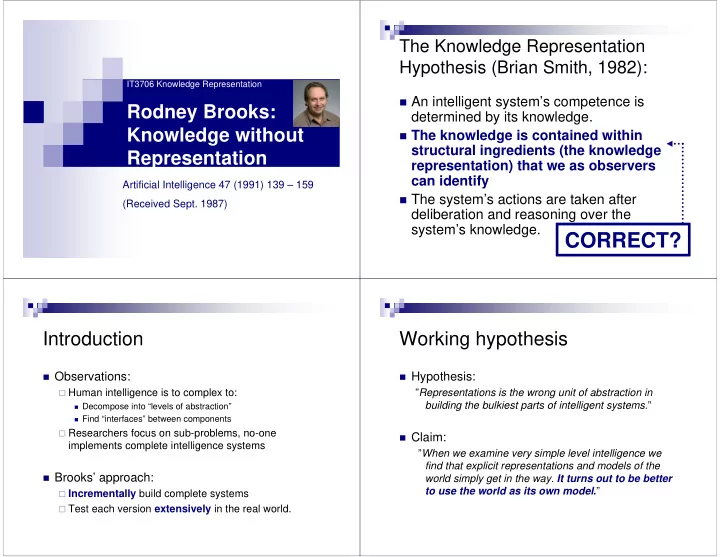

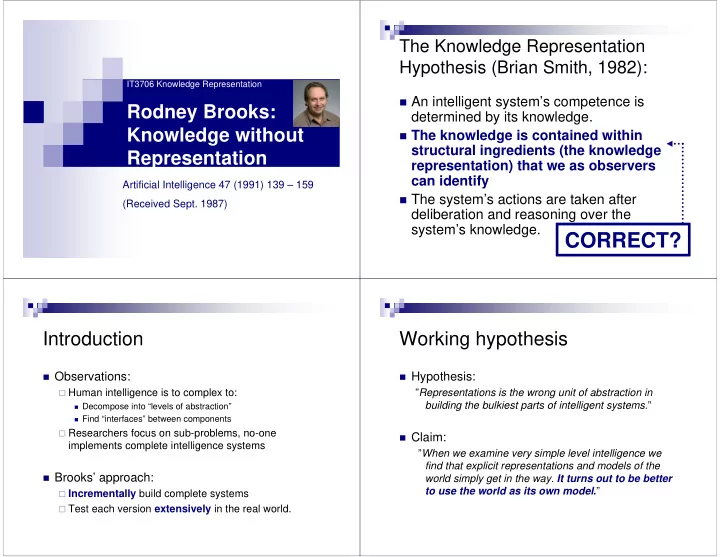

IT3706 Knowledge Representation

Rodney Brooks: Intelligence without Representation
Artificial Intelligence 47 (1991) 139–159 (Received Sept. 1987)

The Knowledge Representation Hypothesis (Brian Smith, 1982):

- An intelligent system's competence is determined by its knowledge.
- The knowledge is contained within structural ingredients (the knowledge representation) that we as observers can identify.
- The system's actions are taken after deliberation and reasoning over the system's knowledge.

CORRECT?

Introduction

- Observations:
  - Human intelligence is too complex to:
    - Decompose into "levels of abstraction"
    - Find "interfaces" between components
  - Researchers focus on sub-problems; no one implements complete intelligent systems.
- Brooks' approach:
  - Incrementally build complete systems.
  - Test each version extensively in the real world.

Working hypothesis

- Hypothesis: "Representation is the wrong unit of abstraction in building the bulkiest parts of intelligent systems."
- Claim: "When we examine very simple level intelligence we find that explicit representations and models of the world simply get in the way. It turns out to be better to use the world as its own model."

The evolution of intelligence

Our evolution (oldest first):

- Single-cell organisms: 3.5 billion years ago
- First fish and vertebrates: 550 million years ago
- Insects: 450 million years ago
- Reptiles: 370 million years ago
- Primates: 120 million years ago
- Man: 2.5 million years ago
- Agriculture: 19,000 years ago
- Writing: 5,000 years ago
- "Expert" knowledge: the last few hundred years

What can we learn from this?

- Sensory and motor skills in a dynamic world are the difficult parts.
- The rest is "pretty simple" (!)

A story about artificial flight

In Brooks' parable, artificial-flight researchers from the 1890s spend a few hours in the passenger cabin of a Boeing 747 and then go home to reproduce what they saw:

- Looks fancy: must be important!
- No passenger understands it: waste of time!

Abstraction is a dangerous weapon

- We can't use the simplified solution to solve the general problem.
- The process of abstraction is part of the intelligence.
- Block worlds are dangerous and, at best, useless!
- We don't know how we represent things in our own minds.
- Even if we knew, it is not known that the same representation is right for AI.
- Brooks: Don't try to make the computer use the same abstractions as we (think we) do.

Intelligent Creatures

Brooks defines a "Creature" and wants to make it intelligent. A Creature:

- Must cope with changes in its dynamic environment.
- Should be robust with respect to its environment.
- Maintains multiple goals, depending on the circumstances.
- Should have a purpose; it should do something in the world.

Decomposition of intelligence

Decomposition by function:

- A central system with perceptual modules as input and actions as output.
- The central system is decomposed into learning, reasoning, planning, etc.
- DON'T DO IT!!

Decomposition by activity:

- No distinction between peripheral and central systems.
- Divided into activity-producing subsystems (layers).
- Each subsystem connects sensing to action.
- THE WAY TO PROCEED!!

Who has the representation?

- No central representation: this helps the Creature respond quickly to dangers.
- No identifiable place where the "output" of the representation can be found: all layers contribute.
- Totally different processing of sensory data proceeds independently and in parallel.
- Competition between the layers replaces the need for centrally figuring out what to do.
- Intelligence emerges from the combination of layer goals.
- Hypothesis: "… much of human level activity is similarly a reflection of the world through very simple mechanisms without detailed representation."

Creating Creatures

1. Expand the Creature with one layer.
2. Test and debug it fully in the real world.
3. Go to 1.

Sources of error:

- The current layer
- The connection between the current and former layers
- Former layers (should not really happen ☺)

So there is only one place to tweak when looking for errors: the current layer.

Creating Creatures (cont'd)

Each layer:

- Consists of multiple finite state machines.
- Communicates with the layers below through inhibiting and suppressing signals there.
- Has access to sensory input and can activate outputs.

Their simplest robot contains three layers:

- Avoid: avoid both static and dynamic obstacles.
- Wander: walk in a given (random) direction.
- Explore: walk to interesting spaces the robot can see.
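The three-layer Creature above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Brooks' implementation: the `Sensors` and `MotorCommand` types, the distance thresholds, the speeds and all function names are hypothetical, and each layer is a plain function rather than a network of finite state machines. What it tries to show is the layering: every layer reads the sensors directly, a higher layer suppresses the heading signal flowing into the layer below, and the lowest layer (Avoid) still produces the motor command, so adding Explore never removes obstacle avoidance. The `top_level` parameter mimics building the Creature one layer at a time.

```python
import math
import random
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds in metres (not values from Brooks' robots).
HALT_DISTANCE = 0.2
AVOID_DISTANCE = 1.0


@dataclass
class Sensors:
    """Hypothetical sensor snapshot; every layer reads it directly."""
    obstacle_distance: float                    # nearest obstacle (m)
    obstacle_bearing: float                     # its direction, robot frame (rad)
    open_space_bearing: Optional[float] = None  # a visible open space, if any


@dataclass
class MotorCommand:
    heading: float  # rad, robot frame
    speed: float    # m/s; 0.0 means stand still


def avoid(s: Sensors, desired_heading: Optional[float]) -> MotorCommand:
    """Level 0: never hit anything; otherwise follow the heading on its input."""
    if s.obstacle_distance < HALT_DISTANCE:
        return MotorCommand(heading=0.0, speed=0.0)  # collision imminent: halt
    if s.obstacle_distance < AVOID_DISTANCE:
        away = s.obstacle_bearing + math.pi          # turn directly away
        return MotorCommand(math.atan2(math.sin(away), math.cos(away)), 0.3)
    if desired_heading is None:
        return MotorCommand(heading=0.0, speed=0.0)  # level 0 alone: sit still
    return MotorCommand(heading=desired_heading, speed=0.5)


def wander(rng: random.Random) -> float:
    """Level 1: propose a random heading."""
    return rng.uniform(-math.pi, math.pi)


def explore(s: Sensors) -> Optional[float]:
    """Level 2: head for a visible open space, if there is one."""
    return s.open_space_bearing


def step(s: Sensors, top_level: int, rng: random.Random) -> MotorCommand:
    """One control tick with levels 0..top_level installed.

    A higher level suppresses the heading signal that feeds the level below;
    level 0 (avoid) always produces the final motor command.
    """
    desired: Optional[float] = None
    if top_level >= 1:
        desired = wander(rng)      # suppresses level 0's empty heading input
    if top_level >= 2:
        target = explore(s)
        if target is not None:
            desired = target       # suppresses wander while a space is visible
    return avoid(s, desired)


if __name__ == "__main__":
    rng = random.Random(0)
    clear = Sensors(obstacle_distance=5.0, obstacle_bearing=0.0,
                    open_space_bearing=0.7)
    print(step(clear, 0, rng))  # MotorCommand(heading=0.0, speed=0.0)
    print(step(clear, 2, rng))  # MotorCommand(heading=0.7, speed=0.5)
```

Calling `step` with `top_level=0`, `1` and `2` on the same readings is the software analogue of the build loop: each new layer only adds behavior on top of a layer that already works. A real Wander layer would also hold a heading for several seconds instead of drawing a new one on every tick.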

How it works: finite state machines…

What this is not

- Connectionism: subsumption uses fixed connections and non-uniform processing nodes.
- Neural networks: no claim of biological plausibility for the finite state machines.
- Production rules: a subsumption system lacks, e.g., working memory.
- Blackboard: subsumption systems use forced locations for collecting knowledge.

Limits to growth

Problems to solve before world domination is assured:

- Complexity increases with the number of layers: does this bound usefulness?
- Are there limits to how complex the behavior emerging from such a system can be?
- Do we need a central reasoning layer on top after all?
- Can higher-level functions (e.g., learning) occur in a layered system?

The future

"Only experiments with real Creatures in real worlds can answer the natural doubts about our approach. Time will tell."

Cardea: the new robot
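The finite state machines and the inhibiting and suppressing signals described under "How it works" can also be sketched. In Brooks' subsumption architecture, a suppressor on a module's input lets a higher layer's message replace the normal input for a fixed time period, and an inhibitor on an output blocks messages for a fixed period. The Python below is a simplified illustration of those two wire types plus a table-driven state machine; the class names, the tick-based timing and the `COLLIDE` transition table are hypothetical, and Brooks' augmented finite state machines (with registers and timers) are richer than this.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional, Tuple


@dataclass
class FSM:
    """A plain finite state machine driven by a transition table.

    transitions maps (state, event) -> (next_state, output). Pairs missing
    from the table leave the state unchanged and emit nothing.
    """
    transitions: Dict[Tuple[str, str], Tuple[str, Optional[str]]]
    state: str

    def step(self, event: str) -> Optional[str]:
        self.state, output = self.transitions.get(
            (self.state, event), (self.state, None)
        )
        return output


@dataclass
class Suppressor:
    """On an input line: a higher-layer message replaces the lower-layer
    message for `period` ticks."""
    period: int
    _remaining: int = field(default=0, init=False)
    _held: Any = field(default=None, init=False)

    def tick(self, lower: Any, higher: Any = None) -> Any:
        if higher is not None:
            self._held, self._remaining = higher, self.period
        if self._remaining > 0:
            self._remaining -= 1
            return self._held
        return lower


@dataclass
class Inhibitor:
    """On an output line: a higher-layer signal blocks the output for
    `period` ticks (None means 'no message sent')."""
    period: int
    _remaining: int = field(default=0, init=False)

    def tick(self, output: Any, inhibit: bool = False) -> Optional[Any]:
        if inhibit:
            self._remaining = self.period
        if self._remaining > 0:
            self._remaining -= 1
            return None
        return output


# Hypothetical level-0 "collide" machine: emits "halt" while an obstacle is near.
COLLIDE = {
    ("clear", "near"): ("blocked", "halt"),
    ("blocked", "near"): ("blocked", "halt"),
    ("blocked", "far"): ("clear", None),
}

if __name__ == "__main__":
    collide = FSM(dict(COLLIDE), state="clear")
    print([collide.step(e) for e in ["far", "near", "near", "far"]])
    # [None, 'halt', 'halt', None]
    heading_in = Suppressor(period=3)  # a higher layer overrides a heading input
    print([heading_in.tick(0.0, 1.0)] + [heading_in.tick(0.0) for _ in range(3)])
    # [1.0, 1.0, 1.0, 0.0]
```

Because the wiring is fixed in advance, a higher layer can only influence a lower one at these predefined points; this mirrors the "fixed connections" and "forced locations" contrasted above with connectionism and blackboard systems.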

Summary

- Representation is not required for intelligent behavior!
  - Insofar as Brooks' system is "intelligent", it contradicts the Knowledge Representation Hypothesis.
- Start simple: make an insect-like intelligence first.
  - If not: the artificial flight story.
- Always make things that operate in the real world; no simulated environments!
- The Subsumption Architecture:
  - Layers, where higher layers implement higher-level functions.
  - All layers work in parallel.
  - All layers receive sensory input.
  - No representation: only signals and state machines.
