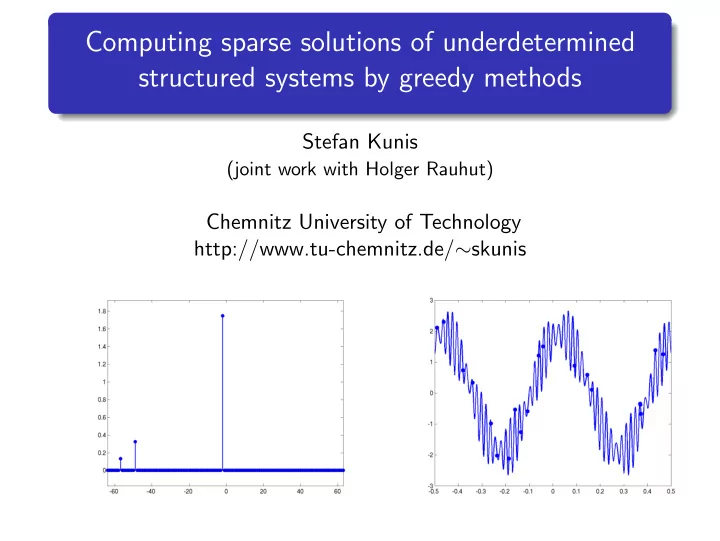

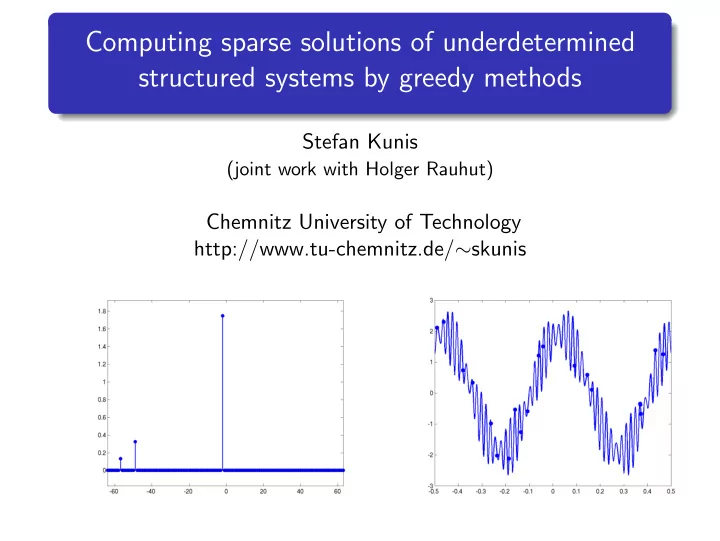

Computing sparse solutions of underdetermined structured systems by greedy methods Stefan Kunis (joint work with Holger Rauhut) Chemnitz University of Technology http://www.tu-chemnitz.de/ ∼ skunis

Outline Sparse reconstruction 1 Nonequispaced FFT 2 Reconstruction from samples 3 Algorithms and analysis 4 Numerical results 5

Sparse reconstruction compressed sensing, compressive sampling, sparse reconstruction, ... a sparse reconstruction problem, M ≪ N , A ∈ C M × N , b ∈ C M given, find c ∈ C N | supp( c ) | min s.t. Ac = b (1) denote sparsity S = | supp( c ) | , interesting case S ≈ M ≪ N structured matrices A with fast matrix-vector arithemtic

Sparse reconstruction basis pursuit principle, (Donoho, Stark [1989]; Candes, Romberg, Tao [2004-]; Donoho, Tanner [2004-]; Rauhut [2005-]; Rudelson, Vershynin [2006-]) c ∈ C N � c � 1 min s.t. Ac = b (2) smallest number δ S such that for all c ∈ C N , supp( c ) ≤ S : 1 − δ S ≤ c ⊢ ⊣ A ⊢ ⊣ Ac ≤ 1 + δ S (3) c ⊢ ⊣ c Theorem. [Candes, Tao] if A satisfies δ S + δ 2 S + δ 3 S < 1 then (2) solves (1) condition (3) is satisfied with probabilty 1 − ǫ for random matrices with Gaussian entries for M ≥ C δ · S · log( N /ǫ )

Sparse reconstruction general purpose schemes that solve basis pursuit (2) are slow theoretical computer science, computational time sublinear in N , (Mansour [1995]; Daubechies, Gilbert, Muthukrishnan, Strauss, Zou [2005-]) greedy methods for random matrices with Gaussian or Bernoulli entries, (Tropp, Gilbert [2005-]) connection to approximation theory, (Temlyakov [2003-]; Cohen, Dahmen, DeVore [2006])

Nonequispaced FFT trigonometric polynomials f : T → C , N / 2 − 1 � ˆ f k e − 2 π i kx f ( x ) = k = − N / 2 discrete Fourier transform - DFT ( O ( N 2 ) or by FFT O ( N log N )) N / 2 − 1 � ˆ f k e − 2 π i kj / N , f j = j = − N / 2 , . . . , N / 2 − 1 k = − N / 2 for a finite sampling set X ⊂ T - nonequispaced DFT ( O ( MN )) e − 2 π i kx j � ∈ C M × N f = Aˆ � f , ( a j , k ) =

Nonequispaced FFT fast Fourier tranform - FFT (Cooley, Tukey 1965; Frigo, Johnson 1997-) N d log N � � O nonequispaced FFT (Dutt, Rokhlin 1993; Beylkin 1995-; Potts, Steidl, Tasche 1997-; Greengard, Lee 2004; Potts, K. 2002-) N d log N + | log ε | d M � � O software NFFT3.0 (Keiner, Potts, K.) http://www.tu-chemnitz.de/ ∼ potts/nfft

Nonequispaced FFT building block for computation with curvelets, ridgelets sparse FFTs (Gilbert, Muthukrishnan, Strauss 2005) computation with RBF kernels (fast Gauss transform) reconstruction schemes in computerised tomography, magnetic resonance imaging typical example from CT phantom Fourier transform (log) polar grid

Reconstruction from samples reconstruction of signals from their Fourier transform, common practice (Shannon sampling theorem): “sampling rate” ∼ “bandwidth” (Nyquist criteria) T = [ − 1 2 , 1 2 ), N ∈ 2 N , ˆ f k ∈ C ; consider the trigonometric polynomial N / 2 − 1 � ˆ f k e − 2 π i kx f : T → C , f ( x ) = k = − N / 2 f can be reconstructed from M ≥ N samples y j = f ( x j ) conditions for d > 1 ...

Reconstruction from samples least squares approximation of ( x j , y j ) ∈ T d × C M − 1 � | f ( x j ) − y j | 2 min f j =0 well conditioned if max j | x j − x j +1 | < 1 / N (Gr¨ ochenig 1992) minimal norm interpolation of ( x j , y j ) ∈ T d × C min � f � 2 s.t. f ( x j ) = y j f well conditioned if min j | x j − x j +1 | > 1 . 6 / N (Potts, K. 2006)

Reconstruction from samples for support T ⊂ I N = {− N 2 , . . . , N 2 − 1 } , S = | T | ≪ N , we consider sparse trigonometric polynomials � f k e − 2 π i kx ˆ f : T → C , f ( x ) = k ∈ T (non)linear spaces of trigonometric polynomials � Π T ⊂ Π I N , vs. Π I N ( S ) = Π T T ⊂ I N : | T |≤ S reconstruct f ∈ Π I N ( S ) from samples y j at nodes x j ∈ T , i.e., � ˆ f k e − 2 π i kx j , y j = f ( x j ) = j = 0 , . . . , M − 1 k ∈ I N

Reconstruction from samples dimension N , Fourier coefficients ˆ f ∈ C N sparsity S = | T | , support T = supp( ˆ f ) number of samples M , samples ( x j , y j ) ∈ T × C interesting case S ∼ M ≪ N nonequispaced Fourier matrix and its T s -restriction A = ( e − 2 π i kx j ) j =0 ,..., M − 1; k ∈ I N = ( . . . φ k | φ k +1 . . . ) ∈ C M × N A T s = ( φ k ) k ∈ T s ∈ C M ×| T s | sampling a trigonometric polynomial y = Aˆ f

Algorithms and analysis - Thresholding Input: y ∈ C M , maximum sparsity S ∈ N 1: find T ⊂ I N to the S largest inner products {|� y , φ l �|} l ∈ I N c 2: solve � A T c − y � 2 → min 3: (ˆ f k ) k ∈ T = c Output: ˆ f ∈ C N , T ⊂ I N Remark: we might hope that M − 1 � y , φ l � = M − 1 � M − 1 j =0 f ( x j ) e 2 π i lx j ≈ T f ( x ) e 2 π i lx d x = ˆ � f l computation of the inner products by ( � y , φ l � ) l ∈ I N = A ⊢ ⊣ y in O ( N log N + M ) floating point operations

Algorithms and analysis - Orthogonal Matching Pursuit Input: y ∈ C M , ε > 0 1: s = 0, r 0 = y , T 0 = ∅ 2: repeat s = s + 1 3: T s = T s − 1 ∪ { arg max k ∈ I N |� r s − 1 , φ k �|} 4: d s solve � A T s d s − y � 2 → min 5: r s = y − A T s d s 6: 7: until s = M or � r s � ≤ ε 8: T = T s , (ˆ f k ) k ∈ T = d s Output: ˆ f ∈ C N , T ⊂ I N

Algorithms and analysis - Thresholding Theorem 1. [Rauhut,K.] fix f ∈ Π I N ( S ) define its dynamic range by R = max k ∈ T | ˆ f k | min k ∈ T | ˆ f k | choose sampling nodes x 0 , . . . , x M − 1 independently and uniformly at random on T or on the grid 1 N I N if for some ǫ > 0 M ≥ CR 2 · S · log( N /ǫ ) then with probability at least 1 − ǫ thresholding recovers T and ˆ f

Algorithms and analysis - OMP Theorem 2. [Rauhut,K.] fix f ∈ Π I N ( S ), choose sampling nodes as before if for some ǫ > 0 M ≥ C · S · log( N /ǫ ) , then with probability at least 1 − ǫ orthogonal matching pursuit selects k 1 ∈ supp( ˆ f ) Theorem 3. [Rauhut,K.] choose sampling nodes as before if for some ǫ > 0 M ≥ C · S 2 · log( N /ǫ ) then with probability at least 1 − ǫ OMP recovers every f ∈ Π I N ( S )

Algorithms and analysis- recent results Rauhut: If M ≤ C · S 2 /σ, then with probability exceeding 1 − c 1 / S − c 2 /σ 2 there exists an f ∈ Π I N ( S ) on which tresholding fails. Similar result for OMP with S iterations. Needell, Vershynin: If M ≥ C · S · log ∗ ( N ) log(1 /ǫ ) , then with probability at least 1 − ǫ a slighly more expensive greedy method recovers every f ∈ Π I N ( S ). Donoho, Tsaig: greedy methods for basis pursuit (2).

Numerical results fixed dimension N = 1000, fixed number of samples M = 40, normalised Fourier coefficients | ˆ f k | = 1 reconstruction rate vs. sparsity S computation time vs. sparsity S

Numerical results fixed reconstruction rate 90%, fixed sparsity S Thresholding ( | ˆ f k | = 1) OMP generalised oversampling factor M / S vs. dimension N

Numerical results - Implementation fast matrix vector arithmetic with A least squares solver QR factorisation with insert LSQR (uniformly bounded condition number, Rauhut) available Random sampling of sparse trigonometric polynomials II - orthogonal matching pursuit versus basis pursuit. (with Holger Rauhut, Found. Comput. Math., to appear) MATLAB toolbox OMP4NFFT (with Holger Rauhut) C subroutine library NFFT3 (with Jens Keiner, Daniel Potts) www.tu-chemnitz.de/ ∼ skunis

... - Matching Pursuit (Pure Greedy) Input: y ∈ C M , ε > 0, maximum number of iterations L ∈ N 1: s = 0, r 0 = y , T 0 = ∅ , ˆ f = 0 2: repeat s = s + 1 3: k s = arg max k ∈ I N |� r s − 1 , φ k �| 4: f k s = ˆ ˆ f k s + � r s − 1 , φ k s � 5: r s = r s − 1 − � r s − 1 , φ k s � φ k s 6: T s = T s − 1 ∪ { k s } 7: 8: until s = L or � r s � ≤ ε 9: T = T s Output: ˆ f ∈ C N , T ⊂ I N

... - Sketch of proof fix T ⊂ I N , c ∈ C S , and choose M sampling nodes independently and uniformly at random on T or on 1 N I N ∈ T and δ > 0 holds for k / � � M δ 2 P ( |� A T c , φ k �| ≥ M δ ) ≤ 4 exp − 4 4 � c � 2 2 + 2 � c � 1 δ √ 3 Remark: this quantifies the“quadrature rule” M − 1 � f ( x j ) e 2 π i kx j � A T c , φ k � = � y , φ k � = j =0 � f ( x ) e 2 π i kx d x = 0 ≈ M · T

... - Sketch of proof thresholding recovers the correct support if min j ∈ T |� φ j , A T c �| > max ∈ T |� φ k , A T c �| k / for l ∈ T , the triangle inequality yields | M − 1 � φ l , A T c �| = | c l + M − 1 � φ l , A T \{ l } c T \{ l } �| j ∈ T | M − 1 � φ j , A T \{ j } c T \{ j } �| ≥ min j ∈ T | c j | − max hence, thresholding succeeds if j ∈ T | M − 1 � φ j , A T \{ j } c T \{ j } �| max < min j ∈ T | c j | / 2 ∈ T | M − 1 � φ k , A T c �| j ∈ T | c j | / 2 max < min k /

Recommend

More recommend