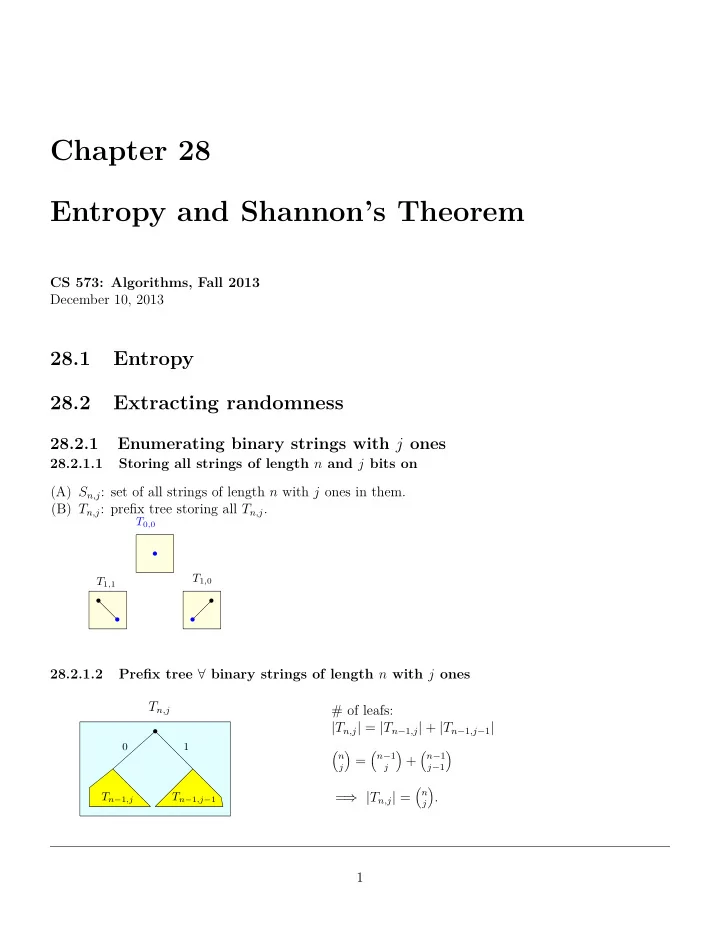

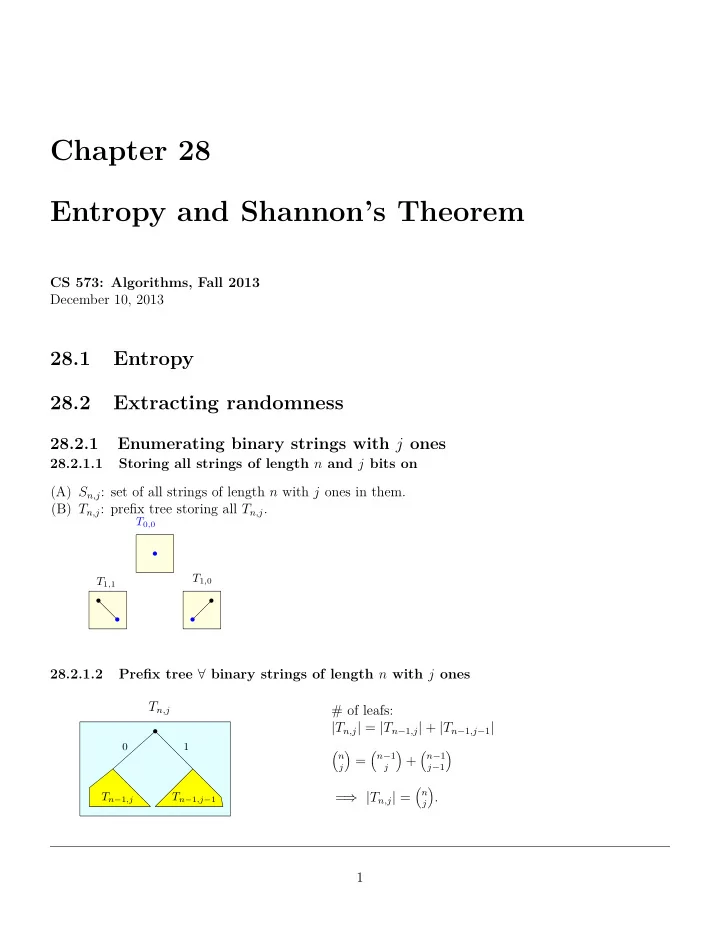

Chapter 28
Entropy and Shannon’s Theorem
CS 573: Algorithms, Fall 2013
December 10, 2013

28.1 Entropy

28.2 Extracting randomness

28.2.1 Enumerating binary strings with j ones

28.2.1.1 Storing all strings of length n with j bits on
(A) $S_{n,j}$: set of all strings of length $n$ with $j$ ones in them.
(B) $T_{n,j}$: prefix tree storing all strings of $S_{n,j}$.

[Figure: the prefix trees $T_{0,0}$, $T_{1,0}$ and $T_{1,1}$.]

28.2.1.2 Prefix tree for all binary strings of length n with j ones

[Figure: $T_{n,j}$, with the 0-edge from the root leading to $T_{n-1,j}$ and the 1-edge leading to $T_{n-1,j-1}$.]

Number of leaves:

$$|T_{n,j}| = |T_{n-1,j}| + |T_{n-1,j-1}|.$$

Since

$$\binom{n}{j} = \binom{n-1}{j} + \binom{n-1}{j-1},$$

it follows that $|T_{n,j}| = \binom{n}{j}$.
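The recurrence above can be checked on small cases by walking the tree directly. The following is only an illustrative sketch: the function name `strings_with_j_ones` is hypothetical, and the recursion simply follows the 0-edge into $T_{n-1,j}$ before the 1-edge into $T_{n-1,j-1}$, so strings come out in left-to-right leaf order.

```python
from math import comb


def strings_with_j_ones(n, j):
    """Yield the strings of S_{n,j} by walking the prefix tree T_{n,j}.

    The 0-edge is explored before the 1-edge, so the leaves are visited
    from left to right.
    """
    if j < 0 or j > n:
        return  # empty tree: no string of length n has j ones
    if n == 0:
        yield ""  # T_{0,0} is a single leaf
        return
    for rest in strings_with_j_ones(n - 1, j):      # 0-edge: T_{n-1,j}
        yield "0" + rest
    for rest in strings_with_j_ones(n - 1, j - 1):  # 1-edge: T_{n-1,j-1}
        yield "1" + rest


if __name__ == "__main__":
    leaves = list(strings_with_j_ones(4, 2))
    print(leaves)
    # The number of leaves should match the binomial coefficient.
    print(len(leaves) == comb(4, 2))
```

Enumerating all leaves takes time proportional to $\binom{n}{j}$, so this is useful only for sanity checks on small $n$.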

[Figure: the boundary cases $T_{n,0}$ (a single 0-edge to $T_{n-1,0}$) and $T_{n,n}$ (a single 1-edge to $T_{n-1,n-1}$).]

28.2.1.3 Encoding a string in $S_{n,j}$
(A) The leaves of $T_{n,j}$ correspond to the strings of $S_{n,j}$.
(B) Order all strings of $S_{n,j}$ lexicographically
(C) ≡ ordering the leaves of $T_{n,j}$ from left to right.
(D) Input: $s \in S_{n,j}$: compute the index of $s$ in the sorted set $S_{n,j}$.
(E) EncodeBinomCoeff($s$) denotes this polytime procedure.

[Figure: $T_{n,j}$, with the 0-edge leading to $T_{n-1,j}$ and the 1-edge leading to $T_{n-1,j-1}$.]

28.2.1.4 Decoding a string in $S_{n,j}$
(A) The leaves of $T_{n,j}$ correspond to the strings of $S_{n,j}$.
(B) Order all strings of $S_{n,j}$ lexicographically
(C) ≡ ordering the leaves of $T_{n,j}$ from left to right.
(D) Input: $x \in \left\{1, \ldots, \binom{n}{j}\right\}$: compute the $x$th string in $S_{n,j}$ in polytime.
(E) DecodeBinomCoeff($x$) denotes this procedure.

28.2.1.5 Encoding/decoding strings of $S_{n,j}$
Lemma 28.2.1. $S_{n,j}$: set of binary strings of length $n$ with $j$ ones, sorted lexicographically.
(A) EncodeBinomCoeff($\alpha$): Input is a string $\alpha \in S_{n,j}$; compute the index $x$ of $\alpha$ in $S_{n,j}$ in time polynomial in $n$.
(B) DecodeBinomCoeff($x$): Input is an index $x \in \left\{1, \ldots, \binom{n}{j}\right\}$. Output the $x$th string $\alpha$ in $S_{n,j}$, in time $O(\mathrm{polylog}\, n + n)$.
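One way to realize the two procedures of Lemma 28.2.1 is to descend the prefix tree and count how many leaves are skipped whenever a 1-edge is taken. The sketch below is an assumption about how such procedures might look, not the course's implementation: the names `encode_binom_coeff` and `decode_binom_coeff` and their signatures are hypothetical, indices are 1-based as in the lemma, and it relies on Python's `math.comb` rather than trying to match the running time stated in the lemma.

```python
from math import comb


def encode_binom_coeff(s):
    """Return the 1-based index of the bit string s within S_{n,j},
    where n = len(s), j = number of ones, in lexicographic order."""
    n = len(s)
    ones_left = s.count("1")
    rank = 0
    for i, bit in enumerate(s):
        if bit == "1":
            # Taking the 1-edge skips every leaf under the 0-edge:
            # strings with ones_left ones in the remaining n - i - 1 bits.
            rank += comb(n - i - 1, ones_left)
            ones_left -= 1
    return rank + 1


def decode_binom_coeff(x, n, j):
    """Return the x-th (1-based) string of S_{n,j} in lexicographic order."""
    if not 1 <= x <= comb(n, j):
        raise ValueError("index out of range")
    x -= 1  # switch to a 0-based offset among the leaves
    bits = []
    for i in range(n):
        zero_branch = comb(n - i - 1, j)  # leaves under the 0-edge
        if x < zero_branch:
            bits.append("0")
        else:
            bits.append("1")
            x -= zero_branch
            j -= 1
    return "".join(bits)


if __name__ == "__main__":
    print(encode_binom_coeff("0110"))   # index of 0110 in S_{4,2}
    print(decode_binom_coeff(3, 4, 2))  # third string of S_{4,2}
```

The two functions are inverses of each other, which is exactly what the extraction argument needs: a string with $j$ ones is mapped bijectively to a number in $\{1, \ldots, \binom{n}{j}\}$.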

28.2.2 Extracting randomness

28.2.2.1 Extracting randomness
Theorem 28.2.2. Consider a coin that comes up heads with probability $p > 1/2$. For any constant $\delta > 0$ and for $n$ sufficiently large:
(A) One can extract, from an input sequence of $n$ flips, an output sequence of $(1-\delta)\, n H(p)$ (unbiased) independent random bits.
(B) One cannot extract more than $n H(p)$ bits from such a sequence.

28.2.2.2 Proof...
(A) There are $\binom{n}{j}$ input strings with exactly $j$ heads.
(B) Each has probability $p^j (1-p)^{n-j}$.
(C) Map each such string $s$ to an index in the set $S_j = \left\{1, \ldots, \binom{n}{j}\right\}$.
(D) Conditioning on the input string $s$ having $j$ ones (out of $n$ bits) defines a uniform distribution on $S_{n,j}$.
(E) $x \leftarrow$ EncodeBinomCoeff($s$)
(F) $x$ is uniformly distributed in $\{1, \ldots, N\}$, $N = \binom{n}{j}$.
(G) Seen in previous lecture...
(H) ... extract, in expectation, $\lfloor \lg N \rfloor - 1$ bits from a uniform random variable in the range $1, \ldots, N$.
(I) Extract bits using ExtractRandomness($x, N$).

28.2.2.3 Exciting proof continued...
(A) $Z$: random variable: number of heads in input string $s$.
(B) $B$: number of random bits extracted.

$$\mathbf{E}[B] = \sum_{k=0}^{n} \Pr[Z = k]\, \mathbf{E}\bigl[B \bigm| Z = k\bigr].$$

(C) Know: $\mathbf{E}\bigl[B \bigm| Z = k\bigr] \ge \left\lfloor \lg \binom{n}{k} \right\rfloor - 1$.
(D) $\varepsilon < p - 1/2$: sufficiently small constant.
(E) For $n(p - \varepsilon) \le k \le n(p + \varepsilon)$:

$$\binom{n}{k} \ge \binom{n}{\lfloor n(p+\varepsilon) \rfloor} \ge \frac{2^{n H(p+\varepsilon)}}{n+1},$$

(F) ... since $2^{n H(p)}$ is a good approximation to $\binom{n}{np}$, as proved in previous lecture.

28.2.2.4 Super exciting proof continued...

$$\begin{aligned}
\mathbf{E}[B] &= \sum_{k=0}^{n} \Pr[Z = k]\, \mathbf{E}\bigl[B \bigm| Z = k\bigr] \\
&\ge \sum_{k=\lfloor n(p-\varepsilon) \rfloor}^{\lceil n(p+\varepsilon) \rceil} \Pr[Z = k]\, \mathbf{E}\bigl[B \bigm| Z = k\bigr] \\
&\ge \sum_{k=\lfloor n(p-\varepsilon) \rfloor}^{\lceil n(p+\varepsilon) \rceil} \Pr[Z = k] \left( \left\lfloor \lg \binom{n}{k} \right\rfloor - 1 \right) \\
&\ge \sum_{k=\lfloor n(p-\varepsilon) \rfloor}^{\lceil n(p+\varepsilon) \rceil} \Pr[Z = k] \left( \lg \frac{2^{n H(p+\varepsilon)}}{n+1} - 2 \right) \\
&= \bigl( n H(p+\varepsilon) - \lg(n+1) - 2 \bigr) \Pr\bigl[\, |Z - np| \le \varepsilon n \,\bigr]
\end{aligned}$$

$$\ge \bigl( n H(p+\varepsilon) - \lg(n+1) - 2 \bigr) \left( 1 - 2 \exp\left( -\frac{n \varepsilon^2}{4p} \right) \right),$$

since $\mu = \mathbf{E}[Z] = np$ and

$$\Pr\left[\, |Z - np| \ge \frac{\varepsilon}{p}\, pn \right] \le 2 \exp\left( -\frac{np}{4} \left( \frac{\varepsilon}{p} \right)^{2} \right) = 2 \exp\left( -\frac{n \varepsilon^2}{4p} \right),$$

by the Chernoff inequality.

28.2.2.5 Hyper super exciting proof continued...
(A) Fix $\varepsilon > 0$ such that $H(p+\varepsilon) > (1 - \delta/4)\, H(p)$; $p$ is fixed.
(B) $\implies n H(p) = \Omega(n)$,
(C) For $n$ sufficiently large: $-\lg(n+1) \ge -\frac{\delta}{10}\, n H(p)$.
(D) ... also $2 \exp\left( -\frac{n \varepsilon^2}{4p} \right) \le \frac{\delta}{10}$.
(E) For $n$ large enough:

$$\mathbf{E}[B] \ge \left( 1 - \frac{\delta}{4} - \frac{\delta}{10} \right) n H(p) \left( 1 - \frac{\delta}{10} \right) \ge (1 - \delta)\, n H(p).$$

28.2.2.6 Hyper super duper exciting proof continued...
(A) Need to prove the upper bound.
(B) If input sequence $x$ has probability $\Pr[X = x]$, then $y = \mathrm{Ext}(x)$ is generated with probability $\ge \Pr[X = x]$.
(C) All sequences of length $|y|$ have equal probability of being generated (by definition).
(D) $2^{|\mathrm{Ext}(x)|} \Pr[X = x] \le 2^{|\mathrm{Ext}(x)|} \Pr[y = \mathrm{Ext}(x)] \le 1$.
(E) $\implies |\mathrm{Ext}(x)| \le \lg\bigl(1 / \Pr[X = x]\bigr)$
(F) $\mathbf{E}[B] = \sum_x \Pr[X = x]\, |\mathrm{Ext}(x)| \le \sum_x \Pr[X = x] \lg \frac{1}{\Pr[X = x]} = H(X)$.

28.3 Coding: Shannon’s Theorem

28.3.0.7 Shannon’s Theorem
Definition 28.3.1. The input to a binary symmetric channel with parameter $p$ is a sequence of bits $x_1, x_2, \ldots$, and the output is a sequence of bits $y_1, y_2, \ldots$, such that $\Pr[x_i = y_i] = 1 - p$ independently for each $i$.

28.3.0.8 Encoding/decoding with noise
Definition 28.3.2. A $(k, n)$ encoding function $\mathrm{Enc} : \{0,1\}^k \to \{0,1\}^n$ takes as input a sequence of $k$ bits and outputs a sequence of $n$ bits. A $(k, n)$ decoding function $\mathrm{Dec} : \{0,1\}^n \to \{0,1\}^k$ takes as input a sequence of $n$ bits and outputs a sequence of $k$ bits.

28.3.0.9 Claude Elwood Shannon
Claude Elwood Shannon (April 30, 1916 - February 24, 2001), an American electrical engineer and mathematician, has been called “the father of information theory”. His master’s thesis showed how to build boolean circuits for any boolean function.
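To make Definitions 28.3.1 and 28.3.2 concrete, here is a small sketch of a binary symmetric channel together with one very simple $(k, 3k)$ encoding/decoding pair. The 3-fold repetition code is chosen purely for illustration and is not the construction behind Shannon’s theorem; all function names are hypothetical.

```python
import random


def binary_symmetric_channel(bits, p, rng):
    """Flip each bit independently with probability p (Definition 28.3.1)."""
    return [b ^ int(rng.random() < p) for b in bits]


def enc_repetition(message):
    """A (k, 3k) encoding function: repeat every message bit three times."""
    return [b for b in message for _ in range(3)]


def dec_repetition(received):
    """A (k, 3k) decoding function: majority vote within each block of 3."""
    return [int(sum(received[i:i + 3]) >= 2)
            for i in range(0, len(received), 3)]


if __name__ == "__main__":
    rng = random.Random(573)  # fixed seed so the run is reproducible
    message = [1, 0, 1, 1, 0, 0, 1, 0]
    sent = enc_repetition(message)
    got = binary_symmetric_channel(sent, 0.05, rng)
    print(dec_repetition(got) == message)
```

This code transmits at rate $k/n = 1/3$ regardless of $p$, and a block is decoded wrongly whenever two or more of its three bits are flipped. It illustrates the definitions only, not the rate discussed in this chapter.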

28.3.0.10 Shannon’s theorem (1948)
Theorem 28.3.3 (Shannon’s theorem). For a binary symmetric channel with parameter $p < 1/2$ and for any constants $\delta, \gamma > 0$, where $n$ is sufficiently large, the following holds:
(i) For any $k \le n(1 - H(p) - \delta)$ there exist $(k, n)$ encoding and decoding functions such that the probability the receiver fails to obtain the correct message is at most $\gamma$ for every possible $k$-bit input message.
(ii) There are no $(k, n)$ encoding and decoding functions with $k \ge n(1 - H(p) + \delta)$ such that the probability of decoding correctly is at least $\gamma$ for a $k$-bit input message chosen uniformly at random.

28.3.0.11 Intuition behind Shannon’s theorem

28.3.0.12 When the sender sends a string...
$S = s_1 s_2 \ldots s_n$

[Figure: a ring centered at $S$ with inner radius $(1-\delta) np$ and outer radius $(1+\delta) np$. Caption: One ring to rule them all!]

28.3.0.13 Some intuition...
(A) The sender sent the string $S = s_1 s_2 \ldots s_n$.
(B) The receiver got the string $T = t_1 t_2 \ldots t_n$.
(C) $p = \Pr[t_i \ne s_i]$, for all $i$.
(D) $U$: Hamming distance between $S$ and $T$: $U = \sum_i [s_i \ne t_i]$.
(E) By assumption: $\mathbf{E}[U] = pn$, and $U$ is a binomial variable.
(F) By the Chernoff inequality: $U \in \bigl[ (1-\delta) np,\ (1+\delta) np \bigr]$ with high probability, where $\delta$ is a tiny constant.
(G) $T$ is in a ring $R$ centered at $S$, with inner radius $(1-\delta) np$ and outer radius $(1+\delta) np$.
(H) This ring has

$$\sum_{i=(1-\delta) np}^{(1+\delta) np} \binom{n}{i} \le 2 \binom{n}{(1+\delta) np} \le \alpha = 2 \cdot 2^{n H((1+\delta) p)}$$

strings in it.
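For small parameters, the number of strings in such a ring can be computed exactly and compared with the bound $\alpha$. The sketch below assumes the inner and outer radii are passed as integers (the rounding of $(1 \pm \delta) np$ is left to the caller), and the names `binary_entropy`, `ring_size` and `ring_bound` are hypothetical.

```python
from math import comb, log2


def binary_entropy(q):
    """H(q) = -q lg q - (1 - q) lg(1 - q), with H(0) = H(1) = 0."""
    if q in (0.0, 1.0):
        return 0.0
    return -q * log2(q) - (1 - q) * log2(1 - q)


def ring_size(n, inner, outer):
    """Exact number of n-bit strings at Hamming distance in [inner, outer]
    from a fixed center string."""
    return sum(comb(n, i) for i in range(inner, outer + 1))


def ring_bound(n, outer):
    """alpha = 2 * 2^{n H(outer / n)}, the bound from item (H);
    meant for outer <= n / 2."""
    return 2 * 2 ** (n * binary_entropy(outer / n))


if __name__ == "__main__":
    # n = 100, p = 0.1, delta = 0.1 gives radii 9 and 11.
    n, inner, outer = 100, 9, 11
    print(ring_size(n, inner, outer))
    print(ring_bound(n, outer))
    print(ring_size(n, inner, outer) <= ring_bound(n, outer))
```

The exact count uses Python's arbitrary-precision integers, while the bound is a floating-point value, so this is only practical for moderate $n$.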

28.3.0.14 Many rings for many codewords...

28.3.0.15 Some more intuition...
(A) Pick as many disjoint rings as possible: $R_1, \ldots, R_\kappa$.
(B) If every word in the hypercube would be covered...
(C) ... use $2^n$ codewords $\implies$

$$\kappa \ge \frac{2^n}{|R|} \ge \frac{2^n}{2 \cdot 2^{n H((1+\delta) p)}} \approx 2^{n (1 - H((1+\delta) p))}.$$

(D) Consider all possible strings of length $k$ such that $2^k \le \kappa$.
(E) Map the $i$th string in $\{0,1\}^k$ to the center $C_i$ of the $i$th ring $R_i$.
(F) If $C_i$ is sent $\implies$ the receiver gets a string in $R_i$.
(G) Decoding is easy: find the ring $R_i$ containing the received string, take its center string $C_i$, and output the original string it was mapped to.
(H) How many bits? $k = \lfloor \log \kappa \rfloor = n \bigl( 1 - H((1+\delta) p) \bigr) \approx n (1 - H(p))$.

28.3.0.16 What is wrong with the above?

28.3.0.17 What is wrong with the above?
(A) Cannot find such a large set of disjoint rings.
(B) The reason is that when you pack rings (or balls), there is going to be wasted space around them.
(C) To overcome this: allow rings to overlap somewhat.
(D) This makes things considerably more involved.
(E) Details in class notes.
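As a numeric illustration of the heuristic count in 28.3.0.15 (which, as noted above, overestimates what disjoint rings can achieve), the following sketch evaluates $k = \lfloor \lg(2^n / \alpha) \rfloor$ with $\alpha = 2 \cdot 2^{n H((1+\delta) p)}$ and compares it with $n(1 - H(p))$. The function names and the sample parameters are assumptions made for this example.

```python
from math import floor, log2


def binary_entropy(q):
    """H(q) = -q lg q - (1 - q) lg(1 - q), with H(0) = H(1) = 0."""
    if q in (0.0, 1.0):
        return 0.0
    return -q * log2(q) - (1 - q) * log2(1 - q)


def heuristic_message_bits(n, p, delta):
    """k = floor(lg(2^n / alpha)) for alpha = 2 * 2^{n H((1 + delta) p)}.

    The extra -1 comes from the factor 2 in alpha, which the text drops
    when it writes the approximate value of k.
    """
    return floor(n - 1 - n * binary_entropy((1 + delta) * p))


if __name__ == "__main__":
    n, p, delta = 1000, 0.1, 0.05
    k = heuristic_message_bits(n, p, delta)
    print(k, n * (1 - binary_entropy(p)))
```

Because $H$ is increasing on $[0, 1/2]$, the value returned is always below $n(1 - H(p))$ when $(1+\delta) p \le 1/2$, and the gap shrinks relative to $n$ as $\delta$ goes to zero.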
