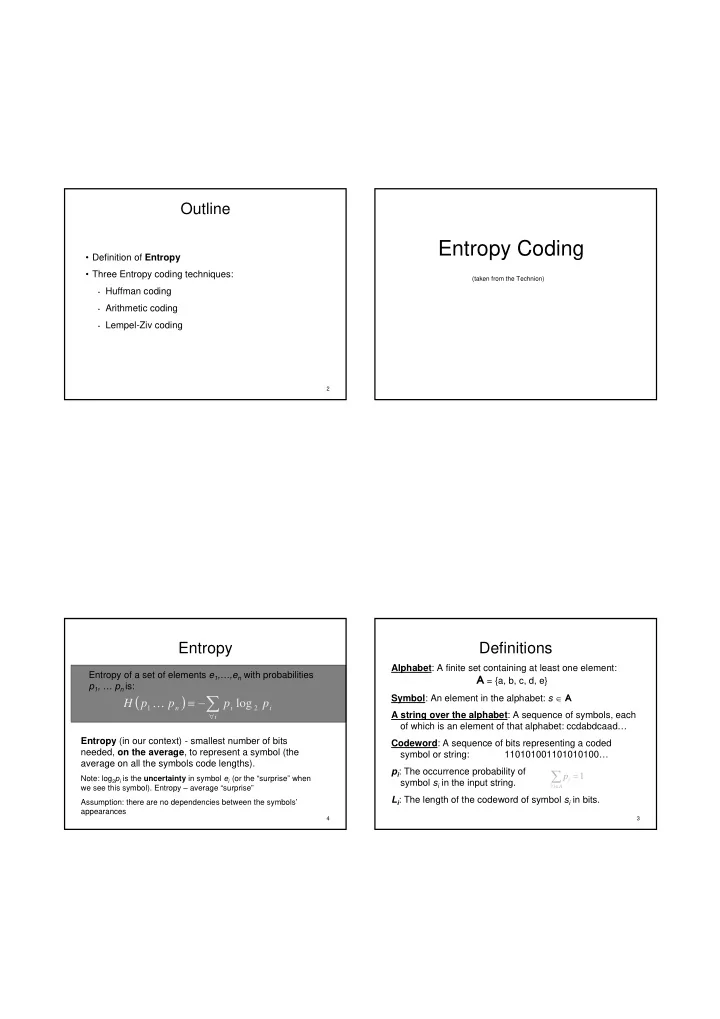

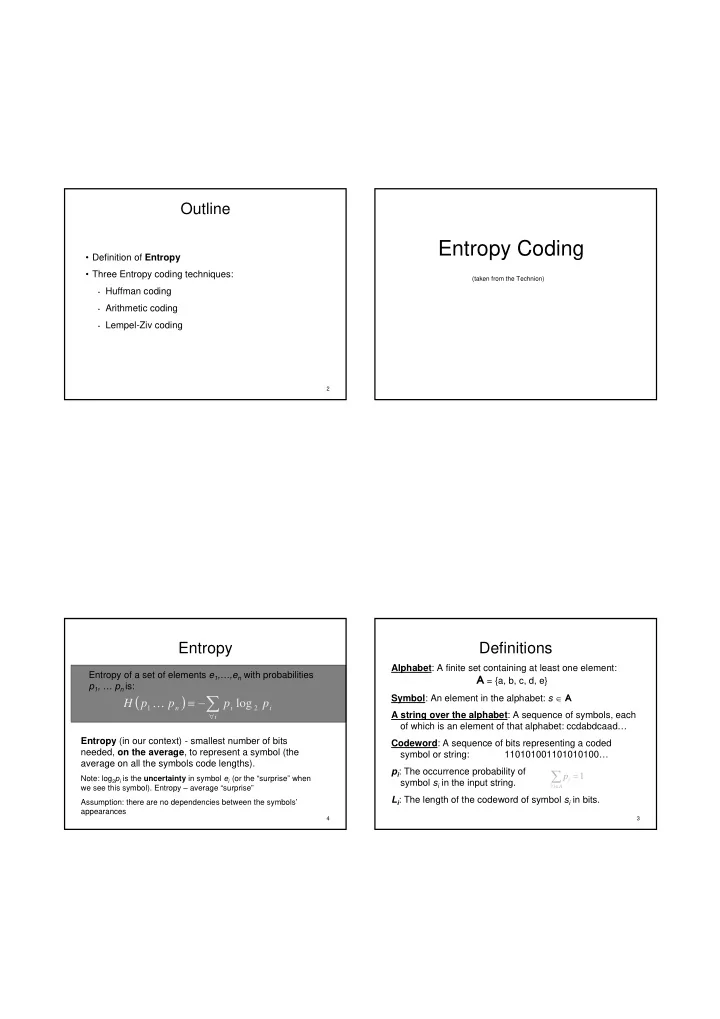

Entropy Coding (taken from the Technion)

Outline
• Definition of Entropy
• Three Entropy coding techniques:
  • Huffman coding
  • Arithmetic coding
  • Lempel-Ziv coding

Definitions
• Alphabet: A finite set containing at least one element: A = {a, b, c, d, e}
• Symbol: An element in the alphabet: $s \in A$
• A string over the alphabet: A sequence of symbols, each of which is an element of that alphabet: ccdabdcaad…
• Codeword: A sequence of bits representing a coded symbol or string: 110101001101010100…
• $p_i$: The occurrence probability of symbol $s_i$ in the input string, with $\sum_{i=1}^{|A|} p_i = 1$.
• $L_i$: The length of the codeword of symbol $s_i$ in bits.

Entropy
Entropy of a set of elements $e_1, \dots, e_n$ with probabilities $p_1, \dots, p_n$ is:

$$H(p_1, \dots, p_n) = -\sum_{i=1}^{n} p_i \log_2 p_i$$

• Entropy (in our context): the smallest number of bits needed, on average, to represent a symbol (the average over all the symbols' code lengths).
• Note: $-\log_2 p_i$ is the uncertainty in symbol $e_i$ (or the "surprise" when we see this symbol). Entropy is the average "surprise".
• Assumption: there are no dependencies between the symbols' appearances.
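The entropy definition can be sketched in a few lines of Python. This is a minimal illustration, not part of the original slides; the function name `entropy` is an assumption, and terms with zero probability are skipped because they contribute nothing to the sum.

```python
import math

def entropy(probabilities):
    """Return H = -sum(p_i * log2(p_i)) in bits per symbol.

    Symbols with probability 0 are skipped: they contribute 0 to the sum.
    """
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

# Two equally likely symbols need one bit per symbol on average.
print(entropy([0.5, 0.5]))

# A skewed two-symbol source needs less than one bit per symbol on average.
print(round(entropy([0.8, 0.2]), 4))
```

For a uniform distribution over n symbols the function returns $\log_2 n$, which is the maximum possible value for n symbols.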

Entropy examples
• Entropy of $e_1, \dots, e_n$ is maximized when $p_1 = p_2 = \dots = p_n = 1/n$ ⇒ $H(e_1, \dots, e_n) = \log_2 n$
• $2^k$ symbols may be represented by $k$ bits
• Entropy of $e_1, \dots, e_n$ is minimized when $p_1 = 1$, $p_2 = \dots = p_n = 0$ ⇒ $H(e_1, \dots, e_n) = 0$

Entropy example
Entropy calculation for a two symbol alphabet.

Example 1: $p_A = 0.5$, $p_B = 0.5$

$$H(A, B) = -p_A \log_2 p_A - p_B \log_2 p_B = -0.5 \log_2 0.5 - 0.5 \log_2 0.5 = 1$$

It requires one bit per symbol on average to represent the data.

Example 2: $p_A = 0.8$, $p_B = 0.2$

$$H(A, B) = -p_A \log_2 p_A - p_B \log_2 p_B = -0.8 \log_2 0.8 - 0.2 \log_2 0.2 = 0.7219$$

It requires less than one bit per symbol on average to represent the data. How can we code this?

Entropy coding
• Entropy is a lower bound on the average number of bits needed to represent the symbols (the data compression limit).
• Entropy coding methods:
  • Aspire to achieve the entropy for a given alphabet, BPS → Entropy
  • A code achieving the entropy limit is optimal

BPS: bits per symbol

$$\text{BPS} = \frac{\text{encoded message (bits)}}{\text{original message (symbols)}}$$

Code types
• Fixed-length codes: all codewords have the same length (number of bits)
  A = 000, B = 001, C = 010, D = 011, E = 100, F = 101
• Variable-length codes: may give different lengths to codewords
  A = 0, B = 00, C = 110, D = 111, E = 1000, F = 1011

Code types (cont.)
• Prefix code: No codeword is a prefix of any other codeword.
  A = 0; B = 10; C = 110; D = 111
• Uniquely decodable code: Has only one possible source string producing it.
  • Unambiguously decoded
  • Examples:
    • Prefix code: the end of a codeword is immediately recognized without ambiguity: 010011001110 ⇒ 0 | 10 | 0 | 110 | 0 | 111 | 0
    • Fixed-length code

Huffman coding
• Each symbol is assigned a variable-length code, depending on its frequency. The higher its frequency, the shorter the codeword
• Number of bits for each codeword is an integral number
• A prefix code
• A variable-length code
• Huffman code is the optimal prefix and variable-length code, given the symbols' probabilities of occurrence
• Codewords are generated by building a Huffman Tree

Huffman tree example
Each codeword is determined according to the path from the root to the symbol. When decoding, a tree traversal is performed, starting from the root. Example: decoding input "110" (D).

| Symbol | Probability | Codeword |
|---|---|---|
| A | 0.25 | 01 |
| C | 0.2 | 00 |
| B | 0.25 | 10 |
| D | 0.15 | 110 |
| E | 0.15 | 111 |

Tree (branch labels are the codeword bits, node weights in parentheses):
• Root (1.0)
  • 0: internal node (0.45)
    • 0: C (0.2)
    • 1: A (0.25)
  • 1: internal node (0.55)
    • 0: B (0.25)
    • 1: internal node (0.3)
      • 0: D (0.15)
      • 1: E (0.15)

Huffman encoding
Use the codewords from the Huffman tree example to encode the string "BCAE":

| String | B | C | A | E |
|---|---|---|---|---|
| Encoded | 10 | 00 | 01 | 111 |

• Number of bits used: 9
• The BPS is (9 bits / 4 symbols) = 2.25
• Entropy: $-0.25 \log_2 0.25 - 0.25 \log_2 0.25 - 0.2 \log_2 0.2 - 0.15 \log_2 0.15 - 0.15 \log_2 0.15 = 2.2854$
• BPS is lower than the entropy. WHY? The entropy bounds the average over strings that follow the given probabilities, and this short string does not follow them. The expected codeword length of this code is $0.25 \cdot 2 + 0.25 \cdot 2 + 0.2 \cdot 2 + 0.15 \cdot 3 + 0.15 \cdot 3 = 2.3$ bits per symbol, which is above the entropy.
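Encoding and decoding with a prefix code can be sketched as follows. This is a minimal illustration under stated assumptions: the function names `encode` and `decode` and the two codebook dictionaries are hypothetical, and the decoder matches codewords greedily, which is only valid because no codeword is a prefix of another.

```python
def encode(message, codebook):
    """Concatenate the codeword of every symbol in the message."""
    return "".join(codebook[symbol] for symbol in message)

def decode(bits, codebook):
    """Decode a bit string produced with a prefix code.

    Bits are accumulated until they match a codeword; with a prefix code
    the first match is the only possible one.
    """
    inverse = {codeword: symbol for symbol, codeword in codebook.items()}
    decoded, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:
            decoded.append(inverse[current])
            current = ""
    if current:
        raise ValueError("trailing bits do not form a codeword")
    return "".join(decoded)

# Codewords from the Huffman tree example.
HUFFMAN = {"A": "01", "B": "10", "C": "00", "D": "110", "E": "111"}
# Codewords from the prefix code example.
PREFIX = {"A": "0", "B": "10", "C": "110", "D": "111"}

bits = encode("BCAE", HUFFMAN)
print(bits, len(bits) / 4)             # encoded string and its bits per symbol
print(decode("010011001110", PREFIX))  # splits as 0 | 10 | 0 | 110 | 0 | 111 | 0
```

A tree traversal, as described on the slide, performs the same matching one bit at a time; the dictionary lookup is used here only to keep the sketch short.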

Huffman tree construction
• Initialization:
  • Leaf for each symbol x of alphabet A with weight = $p_x$.
  • Note: One can work with integer weights in the leaves (for example, number of symbol occurrences) instead of probabilities.
• while (tree not fully connected) do begin
  • Y, Z ← lowest_root_weights_tree()
  • r ← new_root
  • r->attachSons(Y, Z) // attach one via a 0, the other via a 1 (order not significant)
  • weight(r) = weight(Y) + weight(Z)
• end

Huffman encoding
• Build a table of per-symbol encodings (generated by the Huffman tree).
  • Globally known to both encoder and decoder
  • Sent by encoder, read by decoder
• Encode one symbol after the other, using the encoding table.
• Encode the pseudo-eof symbol.

Huffman decoding
• Construct decoding tree based on encoding table
• Read coded message bit-by-bit:
  • Traverse the tree top to bottom accordingly.
  • When a leaf is reached, a codeword was found ⇒ corresponding symbol is decoded
• Repeat until the pseudo-eof symbol is reached.

No ambiguities when decoding codewords (prefix code)

Symbol probabilities
• How are the probabilities known?
• Counting symbols in input string
  o Data must be given in advance
  o Requires an extra pass on the input string
• Data source's distribution is known
  o Data not necessarily known in advance, but we know its distribution
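The tree construction loop can be sketched with Python's `heapq` module as the priority queue. This is one possible implementation, not the one from the slides: the function name `huffman_code`, the tuple representation of internal nodes, and the tie-breaking counter are all assumptions. Symbols are assumed to be strings, since tuples are reserved for internal nodes. The pseudo-eof symbol is left out for brevity.

```python
import heapq
from itertools import count

def huffman_code(weights):
    """Build a Huffman code from a dict mapping symbol -> weight.

    Returns a dict mapping symbol -> codeword (string of '0'/'1').
    """
    tie = count()  # unique counter so the heap never compares tree nodes
    heap = [(w, next(tie), symbol) for symbol, w in weights.items()]
    heapq.heapify(heap)
    if len(heap) == 1:  # degenerate alphabet: give the only symbol one bit
        return {heap[0][2]: "0"}
    while len(heap) > 1:
        # Y, Z <- the two roots with the lowest weights
        w_y, _, y = heapq.heappop(heap)
        w_z, _, z = heapq.heappop(heap)
        # New root r with sons Y (via 0) and Z (via 1), weight(r) = w_y + w_z
        heapq.heappush(heap, (w_y + w_z, next(tie), (y, z)))

    codes = {}

    def walk(node, prefix):
        if isinstance(node, tuple):  # internal node: recurse into both sons
            walk(node[0], prefix + "0")
            walk(node[1], prefix + "1")
        else:                        # leaf: the path from the root is the codeword
            codes[node] = prefix

    walk(heap[0][2], "")
    return codes

# Integer weights (occurrence counts) instead of probabilities.
codes = huffman_code({"A": 25, "B": 25, "C": 20, "D": 15, "E": 15})
print(codes)
```

Because ties between equal weights are broken arbitrarily, a different tie-breaking rule can yield different codewords with the same lengths, which is why a Huffman code is not unique.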

Huffman Entropy analysis
Best results (entropy wise): only when symbols have occurrence probabilities which are negative powers of 2 (i.e. ½, ¼, …). Otherwise, BPS > entropy bound.

Example:

| Symbol | Probability | Codeword |
|---|---|---|
| A | 0.5 | 1 |
| B | 0.25 | 01 |
| C | 0.125 | 001 |
| D | 0.125 | 000 |

• Entropy = 1.75
• An input stream that is representative of the probabilities: AAAABBCD
• Code: 11110101001000
• BPS = (14 bits / 8 symbols) = 1.75

Example
"Global" English frequencies table:

| Letter | Prob. | Letter | Prob. |
|---|---|---|---|
| A | 0.0721 | N | 0.0638 |
| B | 0.0240 | O | 0.0681 |
| C | 0.0390 | P | 0.0290 |
| D | 0.0372 | Q | 0.0023 |
| E | 0.1224 | R | 0.0638 |
| F | 0.0272 | S | 0.0728 |
| G | 0.0178 | T | 0.0908 |
| H | 0.0449 | U | 0.0235 |
| I | 0.0779 | V | 0.0094 |
| J | 0.0013 | W | 0.0130 |
| K | 0.0054 | X | 0.0077 |
| L | 0.0426 | Y | 0.0126 |
| M | 0.0282 | Z | 0.0026 |

Total: 1.0000

Huffman summary
• Achieves entropy when occurrence probabilities are negative powers of 2
• Alphabet and its distribution must be known in advance
• Given the Huffman tree, very easy (and fast) to encode and decode
• Huffman code is not unique (because of some arbitrary decisions in the tree construction)

Huffman tree construction complexity
• Simple implementation: $O(n^2)$.
• Using a Priority Queue: $O(n \log n)$:
  • Inserting a new node: $O(\log n)$
  • n node insertions: $O(n \log n)$
  • Retrieving the 2 smallest node weights: $O(\log n)$
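The equality in the A, B, C, D example can be checked term by term. When every probability is a negative power of 2, each codeword length $L_i$ equals $-\log_2 p_i$, so the entropy and the average codeword length coincide. The symbol $\bar{L}$ for the average codeword length is notation introduced here, not taken from the slides.

$$
\begin{aligned}
H &= -\sum_i p_i \log_2 p_i = 0.5 \cdot 1 + 0.25 \cdot 2 + 0.125 \cdot 3 + 0.125 \cdot 3 = 1.75 \\
\bar{L} &= \sum_i p_i L_i = 0.5 \cdot 1 + 0.25 \cdot 2 + 0.125 \cdot 3 + 0.125 \cdot 3 = 1.75
\end{aligned}
$$

With other probabilities, $-\log_2 p_i$ is not an integer while $L_i$ must be, so some codewords are longer than their ideal length and the average rises above the entropy.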

Arithmetic coding
• Assigns one (normally long) codeword to entire input stream
• Reads the input stream symbol by symbol, appending more bits to the codeword each time
• Codeword is a number, representing a segmental sub-section based on the symbols' probabilities
• Encodes symbols using a non-integer number of bits ⇒ very good results (entropy wise)

Mathematical definitions
• L: The smallest binary value consistent with a code representing the symbols processed so far.
• R: The product of the probabilities of those symbols.

(Figure labels: $L_i$, $R_i$, $L_{i+1}$, $R_{i+1}$.)

Example
Coding of BCAE, with $p_A = p_B = 0.25$, $p_C = 0.2$, $p_D = p_E = 0.15$.

Each column lists the sub-interval boundaries of the current interval, from the top of E down to the bottom of A:

| Boundary | Start | After B | After C | After A |
|---|---|---|---|---|
| Top of E | 1.0 | 0.50 | 0.425 | 0.3875 |
| D / E | 0.85 | 0.4625 | 0.4175 | 0.385625 |
| C / D | 0.70 | 0.425 | 0.41 | 0.38375 |
| B / C | 0.50 | 0.375 | 0.4 | 0.38125 |
| A / B | 0.25 | 0.3125 | 0.3875 | 0.378125 |
| Bottom of A | 0.0 | 0.25 | 0.375 | 0.375 |

Encoding the final E selects the range from 0.385625 to 0.3875. Any number in this range represents BCAE.
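The interval narrowing in the BCAE example can be sketched with exact fractions. This is an illustrative sketch, not the slides' implementation: the names `PROBS` and `narrow` are assumptions, and the symbol order is taken to be the dictionary order A, B, C, D, E. For each symbol, L moves up by the share of R that belongs to the symbols preceding it, and R shrinks by the symbol's probability.

```python
from fractions import Fraction

# Probabilities from the example, kept exact to avoid rounding noise.
PROBS = {
    "A": Fraction(25, 100),
    "B": Fraction(25, 100),
    "C": Fraction(20, 100),
    "D": Fraction(15, 100),
    "E": Fraction(15, 100),
}

def narrow(message, probs):
    """Return (L, R) after processing the message; the final range is L to L + R."""
    symbols = list(probs)  # fixed symbol order, shared by encoder and decoder
    low, width = Fraction(0), Fraction(1)
    for symbol in message:
        # Total probability of the symbols that come before this one.
        preceding = symbols[:symbols.index(symbol)]
        cumulative = sum((probs[s] for s in preceding), Fraction(0))
        low += width * cumulative   # uses the width from before this symbol
        width *= probs[symbol]
    return low, width

low, width = narrow("BCAE", PROBS)
print(float(low), float(low + width))
```

The final width equals the product of the probabilities of B, C, A and E, matching the definition of R.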

Arithmetic encoding
Initially L = 0, R = 1. When encoding the next symbol, L and R are refined (here $j$ is the index of that symbol in the alphabet order, and the update of L uses the value of R from before the update):

$$L \leftarrow L + R \sum_{i=1}^{j-1} p_i, \qquad R \leftarrow R \cdot p_j$$

At the end of the message, a binary value between L and L+R will unambiguously specify the input message. The shortest such binary string is transmitted.

In the previous example:
• Any number between 385625 and 3875 (discard the '0.').
• Shortest number: 386, in binary: 110000010
• BPS = (9 bits / 4 symbols) = 2.25

Arithmetic encoding (cont.)
Two possibilities for the encoder to signal the end of the transmission to the decoder:
1. Send initially the number of symbols encoded.
2. Assign a new EOF symbol in the alphabet, with a very small probability, and encode it at the end of the message.

Note: The order of the symbols in the alphabet must remain consistent throughout the algorithm.

Arithmetic decoding
In order to decode the message, the symbols' order and probabilities must be passed to the decoder. The decoding process is identical to the encoding. Given the codeword (the final number), at each iteration the corresponding sub-range is entered, decoding the symbol representing the specific range.

Arithmetic decoding example
Decoding of 0.386:

| Step | Current range | Sub-range containing 0.386 | Decoded symbol |
|---|---|---|---|
| 1 | 0.0 to 1.0 | 0.25 to 0.50 | B |
| 2 | 0.25 to 0.50 | 0.375 to 0.425 | C |
| 3 | 0.375 to 0.425 | 0.375 to 0.3875 | A |
| 4 | 0.375 to 0.3875 | 0.385625 to 0.3875 | E |

Decoding: B C A E
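The decoding steps can be sketched in the same style. This is a sketch under stated assumptions: the names `PROBS` and `decode_value` are hypothetical, sub-ranges are treated as half-open (lower bound included, upper bound excluded), and the end of the message is signalled with the first option, a known symbol count. The decoder receives the decimal value 0.386 directly rather than its binary form.

```python
from fractions import Fraction

# Same symbol order and probabilities as the encoder (assumed shared in advance).
PROBS = {
    "A": Fraction(25, 100),
    "B": Fraction(25, 100),
    "C": Fraction(20, 100),
    "D": Fraction(15, 100),
    "E": Fraction(15, 100),
}

def decode_value(value, probs, n_symbols):
    """Decode n_symbols symbols from a number inside the final range."""
    low, width = Fraction(0), Fraction(1)
    decoded = []
    for _ in range(n_symbols):
        start = low
        for symbol, p in probs.items():
            end = start + width * p
            if start <= value < end:
                # Enter the sub-range of this symbol and continue from there.
                decoded.append(symbol)
                low, width = start, width * p
                break
            start = end
        else:
            raise ValueError("value lies outside the current range")
    return "".join(decoded)

print(decode_value(Fraction(386, 1000), PROBS, 4))
```

Without the symbol count (or an EOF symbol) the loop would have no stopping point, since any number inside the range keeps selecting further sub-ranges.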
