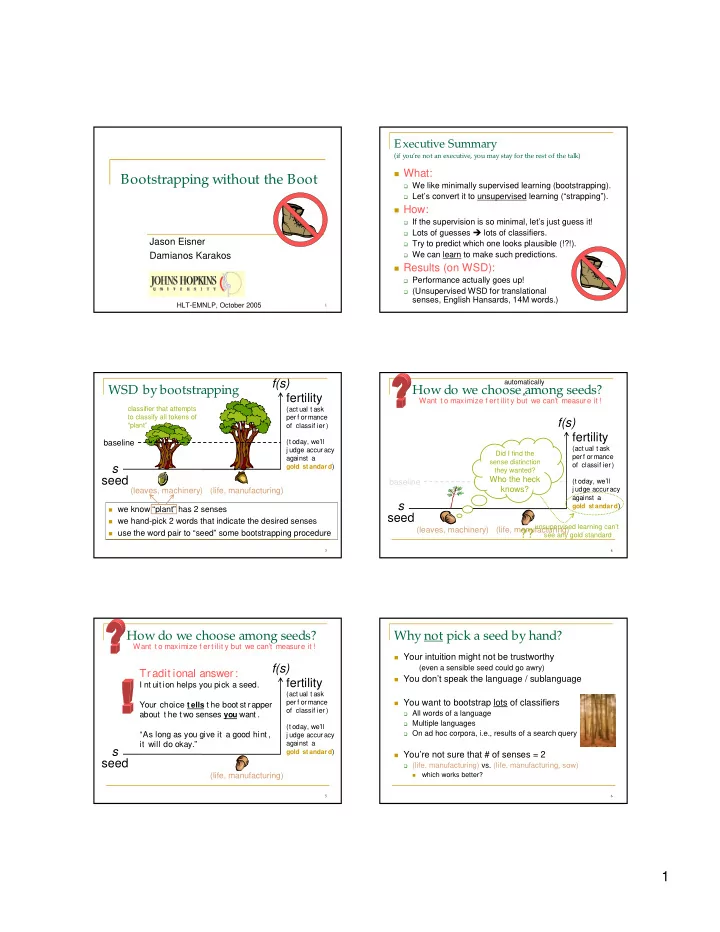

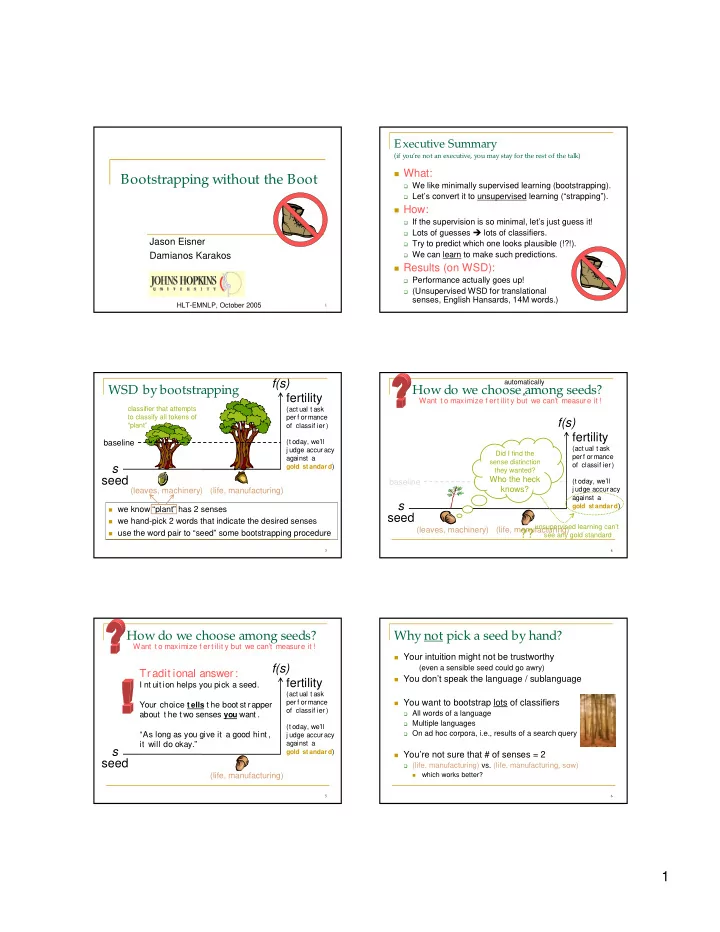

Bootstrapping without the Boot

Jason Eisner and Damianos Karakos
HLT-EMNLP, October 2005

Executive Summary (if you’re not an executive, you may stay for the rest of the talk)

- What:
  - We like minimally supervised learning (bootstrapping).
  - Let’s convert it to unsupervised learning (“strapping”).
- How:
  - If the supervision is so minimal, let’s just guess it!
  - Lots of guesses → lots of classifiers.
  - Try to predict which one looks plausible (!?!).
  - We can learn to make such predictions.
- Results (on WSD):
  - Performance actually goes up!
  - (Unsupervised WSD for translational senses, English Hansards, 14M words.)

WSD by bootstrapping

- We know “plant” has 2 senses.
- We hand-pick 2 words that indicate the desired senses.
- We use the word pair to “seed” some bootstrapping procedure.
- The result is a classifier that attempts to classify all tokens of “plant” automatically.

[Figure: fertility f(s), the actual task performance of the classifier grown from seed s, plotted against the seed s, with a baseline. Example seeds: (leaves, machinery) and (life, manufacturing). Today, we’ll judge accuracy against a gold standard.]

How do we choose among seeds?

Want to maximize fertility, but we can’t measure it!

- Did I find the sense distinction they wanted? Who the heck knows?
- Unsupervised learning can’t see any gold standard.

Traditional answer:

- Intuition helps you pick a seed.
- Your choice tells the bootstrapper about the two senses you want.
- “As long as you give it a good hint, it will do okay.”

Why not pick a seed by hand?

- Your intuition might not be trustworthy (even a sensible seed could go awry).
- You don’t speak the language / sublanguage.
- You want to bootstrap lots of classifiers:
  - All words of a language
  - Multiple languages
  - On ad hoc corpora, i.e., results of a search query
- You’re not sure that # of senses = 2:
  - (life, manufacturing) vs. (life, manufacturing, sow): which works better?

How do we choose among seeds?

Want to maximize fertility, but we can’t measure it!

Our answer:

- Bad classifiers smell funny.
- Stick with the ones that smell like real classifiers.

[Figure: predicted fertility h(s) plotted alongside true fertility f(s), against the seed s.]

“Strapping”

This name is supposed to remind you of bagging and boosting, which also train many classifiers. (But those methods are supervised, and have theorems …)

1. Quickly pick a bunch of candidate seeds.
2. For each candidate seed s:
   - grow a classifier C_s
   - compute h(s) (i.e., guess whether s was fertile)
3. Return C_s where s maximizes h(s). This is the single classifier that we guess to be best. Future work: return a combination of classifiers?

Review: Yarowsky’s bootstrapping algorithm

To test the idea, we chose to work on word-sense disambiguation and bootstrap decision-list classifiers using the method of Yarowsky (1995).

Possible future work: other tasks? other classifiers? other bootstrappers?

[Table taken from Yarowsky (1995): target word “plant” with seed (life, manufacturing). Initially about 1% of the tokens are labeled by “life”, about 1% by “manufacturing”, and the remaining 98% are unlabeled.]

[Figures taken from Yarowsky (1995), seed (life, manufacturing).]

- Learn a classifier that distinguishes A from B. It will notice features like “animal” → A, “automate” → B.
- That classifier confidently classifies some of the remaining examples.
- Now learn a new classifier and repeat … and repeat … and repeat …
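The three-step strapping recipe can be sketched in a few lines of Python. This is only an illustrative outline, not the authors' implementation: `strap`, `grow`, and `h` are hypothetical names, and here `h` is assumed to receive the grown classifier as well as the seed, since it has to inspect the classifier to guess fertility.

```python
from typing import Any, Callable, Iterable, Tuple

Seed = Tuple[str, str]


def strap(
    candidate_seeds: Iterable[Seed],
    grow: Callable[[Seed], Any],
    h: Callable[[Seed, Any], float],
) -> Tuple[Seed, Any]:
    """Grow one classifier per seed and return the pair with the highest h.

    grow(seed) -> classifier   (hypothetical bootstrapping routine)
    h(seed, classifier) -> predicted fertility, computed without gold labels
    """
    best = None  # (score, seed, classifier) of the best candidate so far
    for seed in candidate_seeds:
        classifier = grow(seed)       # step 2a: grow C_s
        score = h(seed, classifier)   # step 2b: guess whether s was fertile
        if best is None or score > best[0]:
            best = (score, seed, classifier)
    if best is None:
        raise ValueError("need at least one candidate seed")
    return best[1], best[2]           # step 3: the classifier we guess is best


# Toy usage with stand-in functions (assumptions, for illustration only).
toy_seeds = [("leaves", "machinery"), ("life", "manufacturing")]
toy_grow = lambda seed: {"seed": seed}                 # pretend classifier
toy_h = lambda seed, clf: len(seed[0]) + len(seed[1])  # pretend fertility guess
chosen_seed, chosen_classifier = strap(toy_seeds, toy_grow, toy_h)
```

Keeping `grow` and `h` as parameters mirrors the open questions on the slides: a different bootstrapper or a different fertility predictor could be swapped in without changing the outer loop.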

Review: Yarowsky’s bootstrapping algorithm

[Figure and table taken from Yarowsky (1995), seed (life, manufacturing).]

The result should be a good classifier, unless we accidentally learned some bad cues along the way that polluted the original sense distinction.

Data for this talk

(Ambiguous words from Gale, Church, & Yarowsky (1992).)

- Unsupervised learning from 14M English words (transcribed formal speech): Canadian parliamentary proceedings (Hansards).
- Focus on 6 ambiguous word types:
  - drug, duty, land, language, position, sentence
  - each has from 300 to 3000 tokens
- To learn an English → French MT model, we would first hope to discover the 2 translational senses of each word, for example:
  - drug 1 = medicament, drug 2 = drogue
  - sentence 1 = peine, sentence 2 = phrase
- Try to learn these distinctions monolingually (assume insufficient bilingual data to learn when to use each translation).
- But evaluate bilingually: for this corpus, we happen to have a French translation, which gives a gold standard for the senses we want.

Strapping word-sense classifiers

1. Quickly pick a bunch of candidate seeds.
2. For each candidate seed s:
   - grow a classifier C_s
   - compute h(s) (i.e., guess whether s was fertile)
3. Return C_s where s maximizes h(s).

Step 1 in detail: automatically generate 200 seeds (x, y).

- Get x, y to select distinct senses of target t:
  - x and y each have high MI with t
  - but x and y never co-occur
- Also, for safety:
  - x and y are not too rare
  - x isn’t far more frequent than y
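The seed-generation criteria (high MI with the target, never co-occurring, not too rare, not too unbalanced in frequency) can be illustrated with a small sketch. Everything specific here is an assumption rather than the talk's actual procedure: the function name `candidate_seeds`, the use of pointwise mutual information over context windows as the MI measure, the thresholds `top_k`, `min_count`, and `max_ratio`, and the symmetric form of the frequency-balance check.

```python
import math
from collections import Counter
from itertools import combinations


def candidate_seeds(windows, target, top_k=20, min_count=2, max_ratio=5.0):
    """Return word pairs (x, y) that might pick out distinct senses of target.

    windows: list of token lists, each treated as one context window.
    All thresholds are illustrative assumptions.
    """
    window_sets = [set(w) for w in windows]
    n = len(window_sets)
    word_count = Counter()    # number of windows containing each word
    with_target = Counter()   # number of windows containing word and target
    for ws in window_sets:
        word_count.update(ws)
        if target in ws:
            with_target.update(ws - {target})
    n_target = word_count[target]

    def pmi(x):
        # Pointwise MI between "window contains x" and "window contains target".
        return math.log((with_target[x] * n) / (word_count[x] * n_target))

    # "Not too rare": require a minimum number of co-occurrences with target.
    words = [x for x in with_target if with_target[x] >= min_count]
    # "High MI with t": keep the top_k words by PMI (ties broken alphabetically).
    words.sort(key=lambda x: (-pmi(x), x))
    top = words[:top_k]

    target_windows = [ws for ws in window_sets if target in ws]
    seeds = []
    for x, y in combinations(top, 2):
        # "x and y never co-occur" (checked within the target's windows).
        if any(x in ws and y in ws for ws in target_windows):
            continue
        # Frequency balance: neither word is far more frequent than the other.
        hi = max(with_target[x], with_target[y])
        lo = min(with_target[x], with_target[y])
        if hi / lo > max_ratio:
            continue
        seeds.append((x, y))
    return seeds


# Toy corpus (invented for illustration).
toy_windows = [
    ["drug", "alcohol", "addiction"],
    ["drug", "alcohol", "street"],
    ["drug", "medical", "doctor"],
    ["drug", "medical", "prescription"],
    ["house", "garden"],
    ["house", "street"],
]
toy_seed_list = candidate_seeds(toy_windows, "drug")
```

On the toy corpus only "alcohol" and "medical" pass the rarity filter, and they never share a window, so they form the single candidate seed.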

Strapping word-sense classifiers

1. Quickly pick a bunch of candidate seeds. Example candidates:

| target | best | good | lousy |
| --- | --- | --- | --- |
| drug | (alcohol, medical) | (abuse, information) | (traffickers, trafficking) |
| sentence | (reads, served) | (quote, death) | (length, life) |

2. For each candidate seed s:
   - grow a classifier C_s: replicate Yarowsky (1995), with fewer kinds of features and some small algorithmic differences
   - compute h(s) (i.e., guess whether s was fertile)
3. Return C_s where s maximizes h(s).

h(s) is the interesting part. How can you possibly tell, without supervision, whether a classifier is any good?

For comparison, we hand-picked 2 seeds:

- Casually selected (< 2 min.): one author picked a reasonable (x, y) from the 200 candidates.
- Carefully constructed (< 10 min.): the other author studied the gold standard, then separately picked high-MI x and y that retrieved appropriate initial examples.

Unsupervised WSD as clustering

[Figure: three example clusterings of + and – tokens, labeled bad, “skewed”, and good.]

Easy to tell which clustering is “best”. A good unsupervised clustering has high:

- p(data | label): minimum-variance clustering
- p(data): EM clustering
- MI(data, label): information bottleneck clustering

Clue #1: Confidence of the classifier

(Oversimplified slide.)

- “Yes! These tokens are sense A! And these are B!” Though this could be overconfidence: it may have found the wrong senses.
- “Um, maybe I found some senses, but I’m not sure.” Though maybe the senses are truly hard to distinguish.

Look at the final decision list for C_s:

- Does it confidently classify the training tokens, on average?
- This opens the “black box” classifier to assess confidence (but so does bootstrapping itself).
- Possible variants: e.g., is the label overdetermined by many features?
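As a rough illustration of the confidence clue, the sketch below averages the confidence with which a decision list labels its own training tokens. It is a simplified stand-in, not the talk's actual h(s): the rule format `(feature, label, score)`, the function names, and the choice to use the first matching rule's score as the confidence are all assumptions.

```python
from typing import List, Optional, Set, Tuple

Rule = Tuple[str, str, float]  # (feature, sense label, confidence score)


def classify(decision_list: List[Rule], features: Set[str]) -> Tuple[Optional[str], float]:
    """Apply the first rule whose feature is present; rules are assumed to be
    sorted from most to least confident. Unmatched tokens get confidence 0."""
    for feature, label, score in decision_list:
        if feature in features:
            return label, score
    return None, 0.0


def mean_confidence(decision_list: List[Rule], tokens: List[Set[str]]) -> float:
    """Average confidence over the training tokens: one possible clue for h(s)."""
    if not tokens:
        return 0.0
    total = sum(classify(decision_list, feats)[1] for feats in tokens)
    return total / len(tokens)


# Toy decision list and tokens (invented for illustration).
toy_rules = [("animal", "A", 3.0), ("automate", "B", 2.0)]
toy_tokens = [{"animal", "leaf"}, {"automate"}, {"green"}]
toy_confidence = mean_confidence(toy_rules, toy_tokens)
```

A high average does not prove the seed was fertile: as the slide warns, a classifier can be confidently wrong about which senses it found, which is why this is only one clue.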
