Basic Statistics and Probability Theory Based on Foundations of Statistical NLP

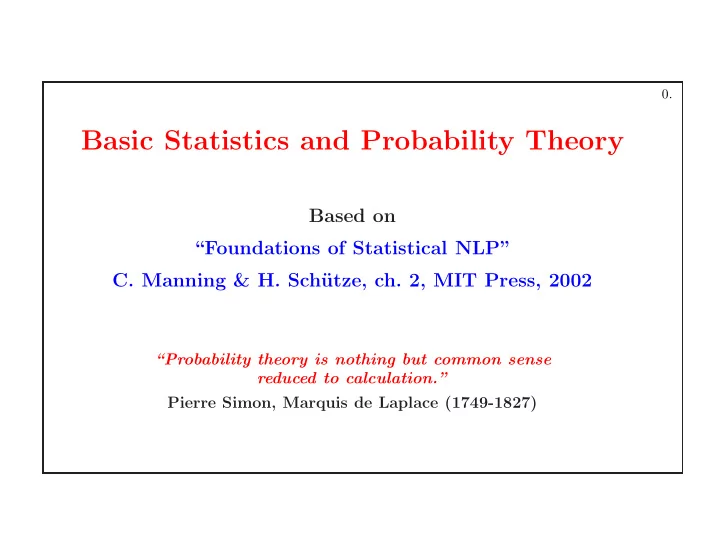

0. Basic Statistics and Probability Theory
Based on “Foundations of Statistical NLP”, C. Manning & H. Schütze, ch. 2, MIT Press, 2002

“Probability theory is nothing but common sense reduced to calculation.”
Pierre Simon, Marquis de Laplace (1749-1827)

1. PLAN
1. Elementary Probability Notions:
• Sample Space, Event Space, and Probability Function
• Conditional Probability
• Bayes’ Theorem
• Independence of Probabilistic Events
2. Random Variables:
• Discrete Variables and Continuous Variables
• Mean, Variance and Standard Deviation
• Standard Distributions
• Joint, Marginal and Conditional Distributions
• Independence of Random Variables

2. PLAN (cont’d)
3. Limit Theorems
• Laws of Large Numbers
• Central Limit Theorems
4. Estimating the parameters of probabilistic models from data
• Maximum Likelihood Estimation (MLE)
• Maximum A Posteriori (MAP) Estimation
5. Elementary Information Theory
• Entropy; Conditional Entropy; Joint Entropy
• Information Gain / Mutual Information
• Cross-Entropy
• Relative Entropy / Kullback-Leibler (KL) Divergence
• Properties: bounds, chain rules, (non-)symmetries, properties pertaining to independence

3. 1. Elementary Probability Notions
• sample space: $\Omega$ (either discrete or continuous)
• event: $A \subseteq \Omega$
– the certain event: $\Omega$
– the impossible event: $\emptyset$
– elementary event: any $\{\omega\}$, where $\omega \in \Omega$
• event space: $\mathcal{F} = 2^{\Omega}$ (or a subset of $2^{\Omega}$ that contains $\emptyset$ and is closed under complement and countable union)
• probability function/distribution: $P : \mathcal{F} \to [0, 1]$ such that:
– $P(\Omega) = 1$
– the “countable additivity” property: for every countable sequence $A_1, A_2, \ldots$ of pairwise disjoint events, $P(\bigcup_i A_i) = \sum_i P(A_i)$
Consequence: for a uniform distribution on a finite sample space,
$P(A) = \dfrac{\#\text{favorable elementary events}}{\#\text{all elementary events}}$
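
As a small illustration of the uniform-distribution consequence, the sketch below assumes a hypothetical experiment (rolling two fair dice, so $\Omega$ has 36 equally likely elementary events) and computes probabilities by counting favorable outcomes with exact fractions. The helper name `prob` is illustrative only.

```python
from fractions import Fraction
from itertools import product

# Hypothetical experiment: two fair dice, so Omega holds 36 equally likely ordered pairs.
omega = list(product(range(1, 7), repeat=2))


def prob(event):
    """Uniform P: #favorable elementary events / #all elementary events."""
    favorable = sum(1 for w in omega if event(w))
    return Fraction(favorable, len(omega))


p_sum_7 = prob(lambda w: w[0] + w[1] == 7)   # 6 favorable pairs out of 36
p_certain = prob(lambda w: True)             # the certain event Omega
p_impossible = prob(lambda w: False)         # the impossible event (empty set)

# Additivity on two disjoint events: {sum is 2} and {sum is 12}.
p_2_or_12 = prob(lambda w: w[0] + w[1] in (2, 12))
assert p_2_or_12 == prob(lambda w: w[0] + w[1] == 2) + prob(lambda w: w[0] + w[1] == 12)

print(p_sum_7, p_certain, p_impossible)  # 1/6 1 0
```

Using `Fraction` keeps the results exact, so the additivity check is an equality rather than a floating-point comparison.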

4. Conditional Probability
• $P(A \mid B) = \dfrac{P(A \cap B)}{P(B)}$, defined when $P(B) > 0$.
Note: $P(A \mid B)$ is called the a posteriori probability of $A$, given $B$.
• The “multiplication” rule: $P(A \cap B) = P(A \mid B)\,P(B) = P(B \mid A)\,P(A)$
• The “chain” rule: $P(A_1 \cap A_2 \cap \ldots \cap A_n) = P(A_1)\,P(A_2 \mid A_1)\,P(A_3 \mid A_1, A_2) \cdots P(A_n \mid A_1, A_2, \ldots, A_{n-1})$
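
The chain rule can be checked on a hypothetical example: drawing three cards without replacement from a 52-card deck and asking for the probability that all three are aces. The sketch compares the chain-rule product with a brute-force count over all equally likely ordered draws; encoding the aces as cards 0 to 3 is an arbitrary assumption.

```python
from fractions import Fraction
from itertools import permutations

# Hypothetical deck: cards 0..51, where cards 0..3 stand for the four aces.
aces = set(range(4))

# Chain rule with A_i = "the i-th card drawn is an ace":
# P(A1 ∩ A2 ∩ A3) = P(A1) P(A2 | A1) P(A3 | A1, A2)
chain = Fraction(4, 52) * Fraction(3, 51) * Fraction(2, 50)

# Brute force: every ordered draw of 3 distinct cards is equally likely.
favorable = total = 0
for draw in permutations(range(52), 3):
    total += 1
    if all(card in aces for card in draw):
        favorable += 1
brute = Fraction(favorable, total)

print(chain, brute)  # 1/5525 1/5525
```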

5.
• The “total probability” formula: $P(A) = P(A \mid B)\,P(B) + P(A \mid \neg B)\,P(\neg B)$
More generally: if $A \subseteq \bigcup_i B_i$ and $B_i \cap B_j = \emptyset$ for all $i \neq j$, then $P(A) = \sum_i P(A \mid B_i)\,P(B_i)$.
• Bayes’ Theorem: $P(B \mid A) = \dfrac{P(A \mid B)\,P(B)}{P(A)}$
or $P(B \mid A) = \dfrac{P(A \mid B)\,P(B)}{P(A \mid B)\,P(B) + P(A \mid \neg B)\,P(\neg B)}$
or ...
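
A common way to see Bayes’ Theorem at work is a diagnostic test. The numbers below are purely hypothetical: a condition $B$ with prior $P(B) = 0.01$, and a positive test result $A$ with $P(A \mid B) = 0.95$ and $P(A \mid \neg B) = 0.05$. The denominator $P(A)$ comes from the total probability formula.

```python
from fractions import Fraction

# Hypothetical inputs (illustrative only).
p_b = Fraction(1, 100)               # prior P(B)
p_a_given_b = Fraction(95, 100)      # P(A | B)
p_a_given_not_b = Fraction(5, 100)   # P(A | not B)

# Total probability: P(A) = P(A | B) P(B) + P(A | not B) P(not B)
p_a = p_a_given_b * p_b + p_a_given_not_b * (1 - p_b)

# Bayes' Theorem: P(B | A) = P(A | B) P(B) / P(A)
p_b_given_a = p_a_given_b * p_b / p_a

print(p_a, p_b_given_a)  # 59/1000 19/118
```

With these assumed numbers the posterior $P(B \mid A) = 19/118 \approx 0.16$ stays small because the prior $P(B)$ is small.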

6. Independence of Probabilistic Events
• Independent events: $P(A \cap B) = P(A)\,P(B)$
Note: When $P(B) \neq 0$, the above definition is equivalent to $P(A \mid B) = P(A)$.
• Conditionally independent events: $P(A \cap B \mid C) = P(A \mid C)\,P(B \mid C)$, assuming, of course, that $P(C) \neq 0$.
Note: When $P(B \cap C) \neq 0$, the above definition is equivalent to $P(A \mid B, C) = P(A \mid C)$.
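
The definition can be tested directly on the hypothetical two-dice sample space. In this sketch, “the first die is even” turns out to be independent of “the sum is 7” ($3/36 = \frac{1}{2} \cdot \frac{1}{6}$) but not of “the sum is 8” ($3/36 \neq \frac{1}{2} \cdot \frac{5}{36}$). The event names are illustrative.

```python
from fractions import Fraction
from itertools import product

omega = list(product(range(1, 7), repeat=2))  # two fair dice, uniform P


def prob(event):
    return Fraction(sum(1 for w in omega if event(w)), len(omega))


def independent(a, b):
    """Check the definition P(A ∩ B) = P(A) P(B)."""
    return prob(lambda w: a(w) and b(w)) == prob(a) * prob(b)


def first_even(w):
    return w[0] % 2 == 0


def sum_is_7(w):
    return w[0] + w[1] == 7


def sum_is_8(w):
    return w[0] + w[1] == 8


print(independent(first_even, sum_is_7))  # True
print(independent(first_even, sum_is_8))  # False
```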

7. 2. Random Variables
2.1 Basic Definitions
Let $\Omega$ be a sample space, and $P : 2^{\Omega} \to [0, 1]$ a probability function.
• A random variable of distribution $P$ is a function $X : \Omega \to \mathbb{R}^n$.
◦ For now, let us consider $n = 1$.
◦ The cumulative distribution function of $X$ is $F : \mathbb{R} \to [0, 1]$, defined by $F(x) = P(X \le x) = P(\{\omega \in \Omega \mid X(\omega) \le x\})$.
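
On a finite sample space, $F$ can be computed straight from its definition by collecting the elementary events with $X(\omega) \le x$. The sketch assumes a hypothetical random variable $X$ = sum of two fair dice; it shows that $F$ is defined for every real $x$, not only for the values $X$ takes, and stays within $[0, 1]$.

```python
from fractions import Fraction
from itertools import product

omega = list(product(range(1, 7), repeat=2))  # uniform P over 36 outcomes


def X(w):
    """Hypothetical random variable: the sum of the two dice."""
    return w[0] + w[1]


def cdf(x):
    """F(x) = P(X <= x) = P({w in Omega | X(w) <= x})."""
    return Fraction(sum(1 for w in omega if X(w) <= x), len(omega))


for x in [1.5, 2, 6.5, 7, 12, 100]:
    print(x, cdf(x))
```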

8. 2.2 Discrete Random Variables
Definition: Let $P : 2^{\Omega} \to [0, 1]$ be a probability function, and $X$ be a random variable of distribution $P$.
• If $\mathrm{Val}(X)$ is either finite or countably infinite, then $X$ is called a discrete random variable.
◦ For such a variable we define the probability mass function (pmf) $p : \mathbb{R} \to [0, 1]$ as $p(x) \stackrel{\text{not.}}{=} p(X = x) \stackrel{\text{def.}}{=} P(\{\omega \in \Omega \mid X(\omega) = x\})$.
(Obviously, it follows that $\sum_{x_i \in \mathrm{Val}(X)} p(x_i) = 1$.)
Mean, Variance, and Standard Deviation:
• Expectation / mean of $X$: $E(X) \stackrel{\text{not.}}{=} E[X] = \sum_x x\,p(x)$, if $X$ is a discrete random variable.
• Variance of $X$: $\mathrm{Var}(X) \stackrel{\text{not.}}{=} \mathrm{Var}[X] = E((X - E(X))^2)$.
• Standard deviation: $\sigma = \sqrt{\mathrm{Var}(X)}$.
Covariance of $X$ and $Y$, two random variables of distribution $P$:
• $\mathrm{Cov}(X, Y) = E[(X - E[X])(Y - E[Y])]$
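
The definitions can be evaluated exactly for a hypothetical fair die, where they give $E[X] = 7/2$ and $\mathrm{Var}(X) = 35/12$. The expectation here sums over elementary events, which for $X$ gives the same value as $\sum_x x\,p(x)$. For the covariance, the sketch assumes a second variable $Y = 7 - X$ (the opposite face of a standard die), so that $\mathrm{Cov}(X, Y) = -\mathrm{Var}(X)$.

```python
import math
from fractions import Fraction

# Hypothetical example: one roll of a fair die, Omega = {1, ..., 6}, uniform P.
omega = range(1, 7)
P = {w: Fraction(1, 6) for w in omega}


def expect(f):
    """E[f] = sum over elementary events w of f(w) P({w})."""
    return sum(f(w) * P[w] for w in omega)


def X(w):
    return w          # the face shown


def Y(w):
    return 7 - w      # the opposite face of a standard die


mean_x = expect(X)                                  # E[X]
var_x = expect(lambda w: (X(w) - mean_x) ** 2)      # E[(X - E[X])^2]
std_x = math.sqrt(var_x)                            # sigma = sqrt(Var(X))
mean_y = expect(Y)
cov_xy = expect(lambda w: (X(w) - mean_x) * (Y(w) - mean_y))

print(mean_x, var_x, round(std_x, 4), cov_xy)
```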

9. Exemplification:
• the Binomial distribution: $b(r; n, p) = \binom{n}{r} p^r (1 - p)^{n - r}$ for $r = 0, \ldots, n$
mean: $np$, variance: $np(1 - p)$
◦ the Bernoulli distribution: $b(r; 1, p)$
mean: $p$, variance: $p(1 - p)$, entropy: $-p \log_2 p - (1 - p) \log_2 (1 - p)$
[Figure: Binomial probability mass function $b(r; n, p)$ and Binomial cumulative distribution function $F(r)$, plotted for $r = 0, \ldots, 40$ with $(p = 0.5, n = 20)$, $(p = 0.7, n = 20)$ and $(p = 0.5, n = 40)$.]
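
A quick numerical check of these formulas: the sketch builds the pmf for one of the plotted parameter settings ($n = 20$, $p = 0.7$) and recovers the mean $np = 14$ and variance $np(1 - p) = 4.2$ up to floating-point error. The Bernoulli entropy helper uses the usual convention $0 \log 0 = 0$; function names are illustrative.

```python
import math


def binom_pmf(r, n, p):
    """b(r; n, p) = C(n, r) p^r (1 - p)^(n - r), for r = 0, ..., n."""
    return math.comb(n, r) * p ** r * (1 - p) ** (n - r)


def bernoulli_entropy(p):
    """-p log2 p - (1 - p) log2 (1 - p), with the convention 0 log 0 = 0."""
    return -sum(q * math.log2(q) for q in (p, 1 - p) if q > 0)


n, p = 20, 0.7
pmf = [binom_pmf(r, n, p) for r in range(n + 1)]
mean = sum(r * pr for r, pr in enumerate(pmf))
var = sum((r - mean) ** 2 * pr for r, pr in enumerate(pmf))

# Expected (up to rounding): total mass 1, mean n*p = 14, variance n*p*(1-p) = 4.2
print(round(sum(pmf), 10), round(mean, 10), round(var, 10))
print(bernoulli_entropy(0.5))  # 1.0 (one bit for a fair coin)
```

`math.comb` requires Python 3.8 or later.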

10. 2.3 Continuous Random Variables
Definitions: Let $P : 2^{\Omega} \to [0, 1]$ be a probability function, and $X : \Omega \to \mathbb{R}$ be a random variable of distribution $P$.
• If $\mathrm{Val}(X)$ is an uncountably infinite set, and $F$, the cumulative distribution function of $X$, is continuous, then $X$ is called a continuous random variable.
(It follows, naturally, that $P(X = x) = 0$ for all $x \in \mathbb{R}$.)
• If there exists $p : \mathbb{R} \to [0, \infty)$ such that $F(x) = \int_{-\infty}^{x} p(t)\,dt$, then $X$ is called absolutely continuous. In such a case, $p$ is called the probability density function (pdf) of $X$.
◦ For $B \subseteq \mathbb{R}$ for which $\int_B p(x)\,dx$ exists, $P(X^{-1}(B)) = \int_B p(x)\,dx$, where $X^{-1}(B) \stackrel{\text{not.}}{=} \{\omega \in \Omega \mid X(\omega) \in B\}$.
In particular, $\int_{-\infty}^{+\infty} p(x)\,dx = 1$.
• Expectation / mean of $X$: $E(X) \stackrel{\text{not.}}{=} E[X] = \int x\,p(x)\,dx$.
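
The integral formulas can be checked numerically. The sketch assumes an exponential density $p(x) = \lambda e^{-\lambda x}$ for $x \ge 0$ with $\lambda = 2$ (an illustrative choice, not taken from the slides), and uses a simple midpoint rule to approximate $\int p = 1$, $E(X) = 1/\lambda = 0.5$ and $F(1) = 1 - e^{-2}$.

```python
import math


def integrate(f, a, b, n=100_000):
    """Composite midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))


LAM = 2.0  # assumed rate parameter of the illustrative exponential density


def pdf(x):
    """Exponential density: LAM * exp(-LAM * x) for x >= 0, and 0 otherwise."""
    return LAM * math.exp(-LAM * x) if x >= 0 else 0.0


# The density has negligible mass beyond x = 40, so [0, 40] stands in for [0, +inf).
total = integrate(pdf, 0.0, 40.0)                      # should be close to 1
mean = integrate(lambda x: x * pdf(x), 0.0, 40.0)      # E(X), close to 1 / LAM
cdf_at_1 = integrate(pdf, 0.0, 1.0)                    # F(1) = P(X <= 1)

print(total, mean, cdf_at_1, 1 - math.exp(-LAM))
```

The midpoint rule is used only for transparency; a production sketch would more likely use a library quadrature routine.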

11. Exemplification:
• Normal (Gaussian) distribution: $N(x; \mu, \sigma) = \dfrac{1}{\sqrt{2\pi}\,\sigma}\, e^{-\frac{(x - \mu)^2}{2\sigma^2}}$
mean: $\mu$, variance: $\sigma^2$
◦ Standard Normal distribution: $N(x; 0, 1)$
• Remark: For $n, p$ such that $np(1 - p) > 5$, the Binomial distribution $b(r; n, p)$ can be approximated by the Normal distribution with mean $np$ and variance $np(1 - p)$.
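
To illustrate the remark, the sketch compares $b(r; 40, 0.5)$ (one of the settings plotted for the Binomial distribution, with $np(1 - p) = 10 > 5$) against the Normal density $N(r; np, \sqrt{np(1 - p)})$ at every integer $r$. It is a pointwise comparison only, without a continuity correction.

```python
import math


def normal_pdf(x, mu, sigma):
    """N(x; mu, sigma) = exp(-(x - mu)^2 / (2 sigma^2)) / (sqrt(2 pi) sigma)."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (math.sqrt(2 * math.pi) * sigma)


def binom_pmf(r, n, p):
    """b(r; n, p) = C(n, r) p^r (1 - p)^(n - r)."""
    return math.comb(n, r) * p ** r * (1 - p) ** (n - r)


n, p = 40, 0.5                                  # np(1 - p) = 10 > 5
mu, sigma = n * p, math.sqrt(n * p * (1 - p))   # matching mean and standard deviation

max_gap = max(abs(binom_pmf(r, n, p) - normal_pdf(r, mu, sigma)) for r in range(n + 1))
print(binom_pmf(20, n, p), normal_pdf(20, mu, sigma), max_gap)
```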

12. [Figure: Gaussian probability density function and Gaussian cumulative distribution function, plotted for $x \in [-4, 4]$ with $(\mu = 0, \sigma = 0.2)$, $(\mu = 0, \sigma = 1.0)$, $(\mu = 0, \sigma = 5.0)$ and $(\mu = -2, \sigma = 0.5)$.]

13. 2.4 Basic Properties of Random Variables
Let $P : 2^{\Omega} \to [0, 1]$ be a probability function, and $X : \Omega \to \mathbb{R}^n$ be a discrete/continuous random variable of distribution $P$.
• If $g : \mathbb{R}^n \to \mathbb{R}^m$ is a function, then $g(X)$ is a random variable.
If $X$ is discrete, then $E(g(X)) = \sum_x g(x)\,p(x)$.
If $X$ is continuous, then $E(g(X)) = \int g(x)\,p(x)\,dx$.
◦ If $g$ is non-linear, then in general $E(g(X)) \neq g(E(X))$.
• $E(aX) = a\,E(X)$.
• $E(X + Y) = E(X) + E(Y)$, therefore $E[\sum_{i=1}^{n} a_i X_i] = \sum_{i=1}^{n} a_i E[X_i]$.
◦ $\mathrm{Var}(aX) = a^2\,\mathrm{Var}(X)$.
◦ $\mathrm{Var}(X + a) = \mathrm{Var}(X)$.
• $\mathrm{Var}(X) = E(X^2) - E^2(X)$.
• $\mathrm{Cov}(X, Y) = E[XY] - E[X]\,E[Y]$.
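
Several of these identities can be verified exactly on a hypothetical discrete $X$ (a fair die) with an arbitrary constant $a = 3$. The last line shows that for the non-linear $g(x) = x^2$, $E(g(X)) = 91/6$ while $g(E(X)) = 49/4$.

```python
from fractions import Fraction

# Hypothetical discrete X: a fair die with pmf p(x) = 1/6 for x = 1, ..., 6.
pmf = {x: Fraction(1, 6) for x in range(1, 7)}


def expect(g):
    """E(g(X)) = sum over x of g(x) p(x), for discrete X."""
    return sum(g(x) * px for x, px in pmf.items())


def var(g):
    """Var(g(X)) = E((g(X) - E(g(X)))^2)."""
    m = expect(g)
    return expect(lambda x: (g(x) - m) ** 2)


def identity(x):
    return x


a = 3
mean = expect(identity)

assert var(identity) == expect(lambda x: x ** 2) - mean ** 2   # Var(X) = E(X^2) - E^2(X)
assert expect(lambda x: a * x) == a * mean                      # E(aX) = aE(X)
assert var(lambda x: a * x) == a ** 2 * var(identity)           # Var(aX) = a^2 Var(X)
assert var(lambda x: x + a) == var(identity)                    # Var(X + a) = Var(X)

# A non-linear g (here g(x) = x^2): E(g(X)) and g(E(X)) differ.
print(expect(lambda x: x ** 2), mean ** 2)  # 91/6 49/4
```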

14. 2.5 Joint, Marginal and Conditional Distributions
Exemplification for the bi-variate case:
Let $\Omega$ be a sample space, $P : 2^{\Omega} \to [0, 1]$ a probability function, and $V : \Omega \to \mathbb{R}^2$ be a random variable of distribution $P$. One could naturally see $V$ as a pair of two random variables $X : \Omega \to \mathbb{R}$ and $Y : \Omega \to \mathbb{R}$. (More precisely, $V(\omega) = (x, y) = (X(\omega), Y(\omega))$.)
• the joint pmf/pdf of $X$ and $Y$ is denoted $p(x, y) \stackrel{\text{not.}}{=} p_{X,Y}(x, y)$; in the discrete case it is defined by $p(x, y) = P(X = x, Y = y) = P(\{\omega \in \Omega \mid X(\omega) = x, Y(\omega) = y\})$, while in the continuous case $p$ is a density on $\mathbb{R}^2$.
• the marginal pmf/pdf functions of $X$ and $Y$ are:
for the discrete case: $p_X(x) = \sum_y p(x, y)$, $p_Y(y) = \sum_x p(x, y)$
for the continuous case: $p_X(x) = \int_y p(x, y)\,dy$, $p_Y(y) = \int_x p(x, y)\,dx$
• the conditional pmf/pdf of $X$ given $Y$ is: $p_{X|Y}(x \mid y) = \dfrac{p_{X,Y}(x, y)}{p_Y(y)}$, for $p_Y(y) > 0$
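
For the discrete case, marginals and conditionals are just row/column sums and ratios of a joint table. The joint pmf below, over two binary variables, is entirely hypothetical.

```python
from collections import defaultdict
from fractions import Fraction

# Hypothetical joint pmf p(x, y) of two binary random variables X and Y.
joint = {
    (0, 0): Fraction(3, 10), (0, 1): Fraction(1, 10),
    (1, 0): Fraction(2, 10), (1, 1): Fraction(4, 10),
}

# Marginals: p_X(x) = sum_y p(x, y) and p_Y(y) = sum_x p(x, y).
p_x = defaultdict(Fraction)
p_y = defaultdict(Fraction)
for (x, y), pxy in joint.items():
    p_x[x] += pxy
    p_y[y] += pxy


def cond_x_given_y(x, y):
    """p_{X|Y}(x | y) = p_{X,Y}(x, y) / p_Y(y), defined when p_Y(y) > 0."""
    return joint[(x, y)] / p_y[y]


print(dict(p_x), dict(p_y))
print(cond_x_given_y(0, 1), cond_x_given_y(1, 1))  # 1/5 4/5
```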

15. 2.6 Independence of Random Variables
Definitions:
• Let $X, Y$ be random variables of the same type (i.e. either discrete or continuous), and $p_{X,Y}$ their joint pmf/pdf. $X$ and $Y$ are said to be independent if $p_{X,Y}(x, y) = p_X(x) \cdot p_Y(y)$ for all possible values $x$ and $y$ of $X$ and $Y$ respectively.
• Similarly, let $X, Y$ and $Z$ be random variables of the same type, and $p$ their joint pmf/pdf. $X$ and $Y$ are conditionally independent given $Z$ if $p_{X,Y|Z}(x, y \mid z) = p_{X|Z}(x \mid z) \cdot p_{Y|Z}(y \mid z)$ for all possible values $x, y$ and $z$ of $X, Y$ and $Z$ respectively.
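
The definition can be checked mechanically on a discrete joint table: compute both marginals and compare the product with the joint pmf for every pair of values, including pairs of probability 0. The sketch assumes the hypothetical two-dice setting: the two faces are independent, while the first face and the sum are not.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product


def joint_pmf(f, g):
    """Joint pmf of (f(w), g(w)) when w is uniform over the 36 outcomes of two fair dice."""
    joint = defaultdict(Fraction)
    for w in product(range(1, 7), repeat=2):
        joint[(f(w), g(w))] += Fraction(1, 36)
    return dict(joint)


def is_independent(joint):
    """Check p_{X,Y}(x, y) == p_X(x) * p_Y(y) for every pair of possible values."""
    p_x, p_y = defaultdict(Fraction), defaultdict(Fraction)
    for (x, y), pxy in joint.items():
        p_x[x] += pxy
        p_y[y] += pxy
    # Pairs missing from `joint` have probability 0 and must be checked too.
    return all(joint.get((x, y), 0) == p_x[x] * p_y[y] for x in p_x for y in p_y)


def first_die(w):
    return w[0]


def second_die(w):
    return w[1]


def dice_sum(w):
    return w[0] + w[1]


print(is_independent(joint_pmf(first_die, second_die)))  # True
print(is_independent(joint_pmf(first_die, dice_sum)))    # False
```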

16. Properties of random variables pertaining to independence
• If $X, Y$ are independent, then $\mathrm{Var}(X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y)$.
• If $X, Y$ are independent, then $E(XY) = E(X)\,E(Y)$, i.e. $\mathrm{Cov}(X, Y) = 0$.
◦ The converse does not hold: $\mathrm{Cov}(X, Y) = 0 \not\Rightarrow X, Y$ are independent.
◦ The covariance matrix corresponding to a vector of random variables is symmetric and positive semi-definite.
• If the covariance matrix of a multi-variate Gaussian distribution is diagonal, then its component variables are independent (so the joint density is the product of the marginal densities).
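
A standard counterexample for the converse: with a hypothetical $X$ uniform on $\{-1, 0, 1\}$ and $Y = X^2$, the covariance is $E[X^3] - E[X]\,E[X^2] = 0$, yet $Y$ is a function of $X$. The sketch confirms that $p(0, 0) = 1/3$ differs from $p_X(0)\,p_Y(0) = 1/9$.

```python
from fractions import Fraction

# Hypothetical example: X uniform on {-1, 0, 1} and Y = X^2.
pmf_x = {-1: Fraction(1, 3), 0: Fraction(1, 3), 1: Fraction(1, 3)}
joint = {(x, x * x): px for x, px in pmf_x.items()}  # joint pmf p(x, y) for Y = X^2


def E(f):
    """Expectation of f(X, Y) under the joint pmf."""
    return sum(f(x, y) * p for (x, y), p in joint.items())


# Cov(X, Y) = E[XY] - E[X] E[Y]
cov = E(lambda x, y: x * y) - E(lambda x, y: x) * E(lambda x, y: y)

p_x0 = pmf_x[0]
p_y0 = sum(p for (x, y), p in joint.items() if y == 0)

print(cov)                          # 0
print(joint[(0, 0)], p_x0 * p_y0)   # 1/3 1/9, so X and Y are dependent
```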
