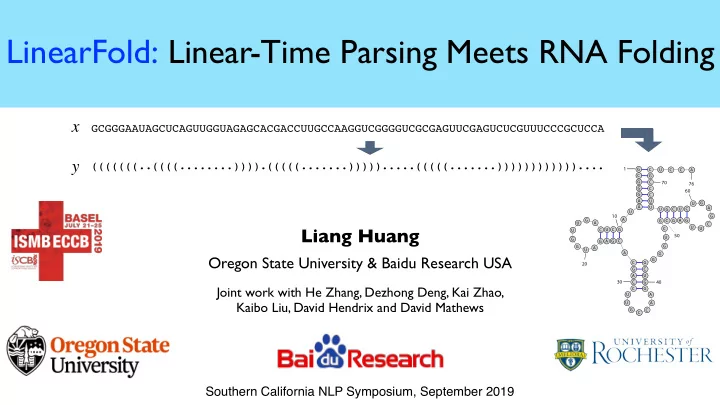

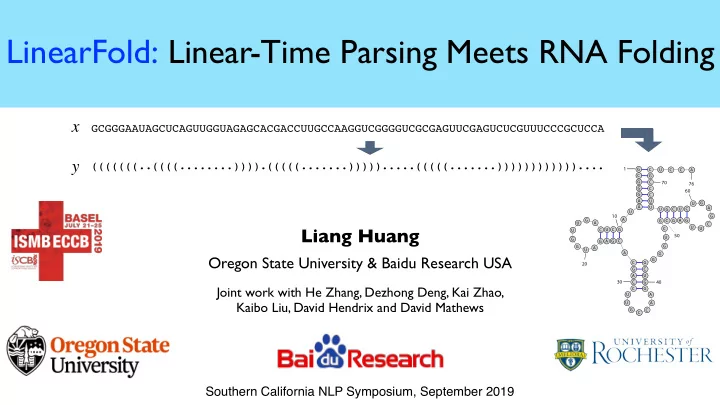

Background: RNAs and RNA folding
• RNA has dual roles:
  • informational (DNA => RNA => protein)
  • functional (non-coding RNAs)
• structure prediction (“RNA folding”): from the RNA sequence to its secondary structure; knowing the structure helps us infer function
• example RNA sequence: GCGGGAAUAGCUCAGUUGGUAGAGCACGACCUUGCCAAGGUCGGGGUCGCGAGUUCGAGUCUCGUUUCCCGCUCCA

RNA Secondary Structure Prediction
• allowed pairs: G-C, A-U, G-U
• example: transfer RNA (tRNA)
• assume no crossing pairs (no pseudoknots)
• input x: GCGGGAAUAGCUCAGUUGGUAGAGCACGACCUUGCCAAGGUCGGGGUCGCGAGUUCGAGUCUCGUUUCCCGCUCCA
• output y: (((((((..((((........)))).(((((.......))))).....(((((.......))))))))))))....
[Figure: the tRNA secondary structure (nucleotides 1 to 76) drawn as a parse tree, next to the linguistic parse tree of “the man bit the dog” (S → NP VP)]
• problem: standard structure prediction algorithms are way too slow: $O(n^3)$
• solution: adapt my linear-time dynamic programming algorithms from parsing
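As a minimal sketch (Python, not the authors' code), the dot-bracket output y can be checked against the input x with a single left-to-right stack: each “)” closes the most recent unmatched “(”, which is exactly why this notation cannot express crossing pairs. The function name `parse_dot_bracket` and the 1-based pair convention are assumptions made for illustration.

```python
# Allowed base pairs from the slide: G-C, A-U, G-U (either orientation).
ALLOWED_PAIRS = {("G", "C"), ("C", "G"), ("A", "U"), ("U", "A"), ("G", "U"), ("U", "G")}


def parse_dot_bracket(x: str, y: str) -> list[tuple[int, int]]:
    """Return the (i, j) base pairs (1-based, i < j) encoded by dot-bracket y.

    Raises ValueError if y has the wrong length, is unbalanced, or pairs two
    nucleotides that are not an allowed pair. Because every ")" closes the
    most recent unmatched "(", the result never contains crossing pairs.
    """
    if len(x) != len(y):
        raise ValueError("sequence and structure lengths differ")
    stack: list[int] = []                 # positions of unmatched "("
    pairs: list[tuple[int, int]] = []
    for j, (nuc, tag) in enumerate(zip(x, y), start=1):
        if tag == "(":
            stack.append(j)
        elif tag == ")":
            if not stack:
                raise ValueError(f"unmatched ')' at position {j}")
            i = stack.pop()
            if (x[i - 1], nuc) not in ALLOWED_PAIRS:
                raise ValueError(f"{x[i - 1]}-{nuc} at ({i}, {j}) is not an allowed pair")
            pairs.append((i, j))
        elif tag != ".":
            raise ValueError(f"unexpected character {tag!r}")
    if stack:
        raise ValueError(f"unmatched '(' at position {stack[-1]}")
    return sorted(pairs)


x = "GCGGGAAUAGCUCAGUUGGUAGAGCACGACCUUGCCAAGGUCGGGGUCGCGAGUUCGAGUCUCGUUUCCCGCUCCA"
y = "(((((((..((((........)))).(((((.......))))).....(((((.......))))))))))))...."
print(len(parse_dot_bracket(x, y)), "pairs")
```

On the tRNA example it finds 21 pairs, all of them allowed, including the G-U wobble pair (49, 65).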

Fast Structure Prediction Enables RNA Design
• structure prediction (“folding”): RNA sequence (e.g., GCGGGAAUAGCUCAGUUGGUAGAGCACGACCUUGCCAAGGUCGGGGUCGCGAGUUCGAGUCUCGUUUCCCGCUCCA) → RNA secondary structure → RNA 3D structure
• design goes the other way, from a target structure back to a sequence
• example: detecting active TB using RNA design, which needs our fast RNA folding (Professor Rhiju Das, Stanford Medical School; EteRNA game for RNA design)

From Linguistics to Biology
x: GCGGGAAUAGCUCAGUUGGUAGAGCACGACCUUGCCAAGGUCGGGGUCGCGAGUUCGAGUCUCGUUUCCCGCUCCA
y: (((((((..((((........)))).(((((.......))))).....(((((.......))))))))))))....

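Background: CKY for RNA Folding: $O(n^3)$
• dynamic programming: $O(n^3)$
• bottom-up CKY parsing: a span [i, j] either pairs i with j around the inner span [i+1, j-1], or splits at some k into [i, k] and [k+1, j]
• example: maximize the number of pairs (A-U, G-C, or G-U)
[Figure: CKY chart for x = ACAGU, filled bottom-up from single nucleotides (.) and short spans such as () and (.) up to the optimal structure ((.))]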
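A minimal sketch of the bottom-up CKY recurrence for the “maximize # of pairs” example, assuming the same allowed pairs and, as in the ACAGU chart (where the GU span is shown as “()”), no minimum hairpin-loop length. `nussinov_cky` is a hypothetical name; real folding engines use much richer scoring models.

```python
ALLOWED_PAIRS = {("G", "C"), ("C", "G"), ("A", "U"), ("U", "A"), ("G", "U"), ("U", "G")}


def nussinov_cky(x: str) -> tuple[int, str]:
    """Bottom-up CKY: maximize the number of allowed, non-crossing pairs.

    best[i][j] is the max number of pairs in x[i..j] (0-based, inclusive).
    Three nested loops (span length, start i, split k) give O(n^3) time.
    """
    n = len(x)
    if n == 0:
        return 0, ""
    best = [[0] * n for _ in range(n)]
    back = [[None] * n for _ in range(n)]  # ("pair",) or ("split", k); None for single nucleotides
    for length in range(2, n + 1):          # shorter spans first (bottom-up)
        for i in range(n - length + 1):
            j = i + length - 1
            score, choice = -1, None
            if (x[i], x[j]) in ALLOWED_PAIRS:   # case "( ... )": i pairs with j
                inner = best[i + 1][j - 1] if i + 1 <= j - 1 else 0
                score, choice = inner + 1, ("pair",)
            for k in range(i, j):               # case "i k j": split into [i..k] + [k+1..j]
                if best[i][k] + best[k + 1][j] > score:
                    score, choice = best[i][k] + best[k + 1][j], ("split", k)
            best[i][j], back[i][j] = score, choice

    # Traceback: turn the back-pointers into a dot-bracket string.
    struct = ["."] * n
    todo = [(0, n - 1)]
    while todo:
        i, j = todo.pop()
        if i >= j:
            continue
        choice = back[i][j]
        if choice[0] == "pair":
            struct[i], struct[j] = "(", ")"
            todo.append((i + 1, j - 1))
        else:
            k = choice[1]
            todo.extend([(i, k), (k + 1, j)])
    return best[0][n - 1], "".join(struct)


print(nussinov_cky("ACAGU"))
```

For ACAGU it returns `(2, "((.))")`, the structure at the top of the chart. Here an unpaired nucleotide is handled as a split next to a length-1 span, which keeps the recurrence to the two cases drawn on the slide.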

Computational Linguistics => Computational Biology
• 1955 Chomsky: context-free grammars (CFGs)
• 1958 Backus & Naur: CFGs for programming languages
• 1964 Cocke, 1965 Kasami, 1967 Younger: CKY, bottom-up dynamic programming, $O(n^3)$ for all CFGs [example parse tree: “the man bit the dog”]
• 1965 Knuth: LR parsing for restricted CFGs: $O(n)$ [example: x = y + 3;]
• 1978 Nussinov, 1981 Zuker & Stiegler: $O(n^3)$ RNA folding
• 1986 Tomita: Generalized LR for all CFGs: $O(n^3)$
• 2010 Huang & Sagae: $O(n)$ (approx.) DP for all CFGs
• 2019 LinearFold: $O(n)$ (approx.) RNA folding

How to Fold RNAs in Linear Time?
x (5’ to 3’): GCGGGAAUAGCUCAGUUGGUAGAGCACGACCUUGCCAAGGUCGGGGUCGCGAGUUCGAGUCUCGUUUCCCGCUCCA
y: (((((((..((((........)))).(((((.......))))).....(((((.......))))))))))))....
• idea 0: tag each nucleotide from left to right
• maintain a stack: push “(”, pop “)”, skip “.”
• naive: $O(3^n)$

How to Fold RNAs in Linear Time?
• idea 1: DP by packing “equivalent states”
• maintain graph-structured stacks
• DP: $O(n^3)$

How to Fold RNAs in Linear Time?
• idea 2: approximate search: beam pruning
• keep only the top b states per step
• DP + beam: $O(n)$
• each DP state corresponds to exponentially many non-DP states
[Figure: beam search over the DP states]

On to details...
x: GCGGGAAUAGCUCAGUUGGUAGAGCACGACCUUGCCAAGGUCGGGGUCGCGAGUUCGAGUCUCGUUUCCCGCUCCA
y: (((((((..((((........)))).(((((.......))))).....(((((.......))))))))))))....

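An Example (Optimal) Path
• Nussinov-style scoring model: +1.0 for each pair, -0.1 for each unpaired nucleotide
• example state: structure ((.), score +0.9, stack 1 0 (top first: positions of unmatched “(”, with 0 at the bottom)
• example path for x = CCAGG:

| step | prefix | action | structure | score | stack |
|---|---|---|---|---|---|
| 1 | C | push | ( | 0.0 | 1 0 |
| 2 | CC | push | (( | 0.0 | 2 1 0 |
| 3 | CCA | skip | ((. | -0.1 | 2 1 0 |
| 4 | CCAG | pop | ((.) | +0.9 | 1 0 |
| 5 | CCAGG | pop | ((.)) | +1.9 | 0 |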
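To make the path concrete, here is a small sketch that replays a dot-bracket structure one nucleotide at a time under the toy scoring model (+1.0 per pair, -0.1 per unpaired nucleotide) and records the action, structure prefix, score, and stack at each step. `trace_path` and the top-first stack listing with a 0 sentinel are illustrative assumptions meant to mirror the slide's notation.

```python
ALLOWED_PAIRS = {("G", "C"), ("C", "G"), ("A", "U"), ("U", "A"), ("G", "U"), ("U", "G")}
PAIR_SCORE = 1.0       # +1.0 for each pair (toy Nussinov-style model)
UNPAIRED_SCORE = -0.1  # -0.1 for each unpaired nucleotide


def trace_path(x: str, y: str) -> list[tuple[str, str, float, list[int]]]:
    """Replay structure y on sequence x, one nucleotide per step.

    Returns one (action, structure prefix, score, stack) tuple per step.
    The stack lists 1-based positions of unmatched "(" (top first) with 0
    as the bottom sentinel.
    """
    stack = [0]
    score = 0.0
    steps = []
    for j, (nuc, tag) in enumerate(zip(x, y), start=1):
        if tag == "(":
            action = "push"
            stack.append(j)
        elif tag == ".":
            action = "skip"
            score += UNPAIRED_SCORE
        else:  # ")": pop the stack top and pair it with position j
            i = stack.pop()
            if i == 0 or (x[i - 1], nuc) not in ALLOWED_PAIRS:
                raise ValueError(f"invalid pop at step {j}")
            action = "pop"
            score += PAIR_SCORE
        steps.append((action, y[:j], score, stack[::-1]))
    return steps


for action, prefix, score, stack in trace_path("CCAGG", "((.))"):
    print(f"{action:4} {prefix:6} {score:+.1f} {stack}")
```

Replaying ((.)) on CCAGG reproduces the scores 0.0, 0.0, -0.1, +0.9, +1.9 from the example path.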

Version 1: Naive Exhaustive Search: $O(3^n)$
• every state branches by push, skip, or pop, so the search tree grows as $O(3^n)$
[Figure D: the full search tree for x = CCAGG over steps 1 to 5 (C, CC, CCA, CCAG, CCAGG); the complete structures at step 5 are ((.)) +1.9, (..). +0.7, (...) +0.7, .(.). +0.7, .(..) +0.7, and ..... -0.5]
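A sketch of Version 1 under the same toy model: every state is expanded by push, skip, and (when the stack top can pair with the current nucleotide) pop, with no merging at all. The function name `exhaustive_search` is hypothetical; the `generated` counter is included only to show how quickly the number of states grows.

```python
ALLOWED_PAIRS = {("G", "C"), ("C", "G"), ("A", "U"), ("U", "A"), ("G", "U"), ("U", "G")}
PAIR_SCORE, UNPAIRED_SCORE = 1.0, -0.1  # toy Nussinov-style model


def exhaustive_search(x: str) -> tuple[str, float, int]:
    """Version 1: expand every state by push / skip / pop, with no merging.

    A state is (structure prefix, score, stack of unmatched "(" positions).
    Returns the best complete structure, its score, and the total number of
    states generated over all steps.
    """
    states = [("", 0.0, ())]
    generated = 0
    for j, nuc in enumerate(x):
        successors = []
        for struct, score, stack in states:
            successors.append((struct + "(", score, stack + (j,)))            # push
            successors.append((struct + ".", score + UNPAIRED_SCORE, stack))  # skip
            if stack and (x[stack[-1]], nuc) in ALLOWED_PAIRS:                # pop
                successors.append((struct + ")", score + PAIR_SCORE, stack[:-1]))
        states = successors
        generated += len(states)
    # Only states with an empty stack are complete structures.
    complete = [(struct, score) for struct, score, stack in states if not stack]
    best_struct, best_score = max(complete, key=lambda s: s[1])
    return best_struct, best_score, generated


print(exhaustive_search("CCAGG"))
```

On CCAGG it returns ((.)) with score +1.9 after generating 75 states (2, 4, 8, 19, and 42 per step).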

Idea 1: Merge Identical Stacks => Version 2: $O(2^n)$
• two states at the same step with identical stacks have identical futures, so only the higher-scoring one needs to be kept
• e.g., all complete structures at step 5 share the empty stack, so only ((.)) (+1.9) survives
• but a stack is an increasing sequence of positions, so there can still be up to $2^n$ distinct stacks: $O(2^n)$
[Figure E: the merged search graph for x = CCAGG]
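The same search with Idea 1 applied, as a sketch: states are stored in a dictionary keyed by their full stack, and only the best-scoring state per stack is kept. The helper names are made up for illustration, but the code shows why the saving stops at $O(2^n)$: the dictionary can hold one entry per subset of positions.

```python
ALLOWED_PAIRS = {("G", "C"), ("C", "G"), ("A", "U"), ("U", "A"), ("G", "U"), ("U", "G")}
PAIR_SCORE, UNPAIRED_SCORE = 1.0, -0.1  # toy Nussinov-style model


def _relax(table: dict, stack: tuple, score: float, struct: str) -> None:
    """Keep only the best-scoring state for each distinct stack."""
    if stack not in table or score > table[stack][0]:
        table[stack] = (score, struct)


def merged_search(x: str) -> tuple[str, float, list[int]]:
    """Version 2: exhaustive search, but states at the same step with
    identical stacks are merged, keeping the higher score.

    Returns the best complete structure, its score, and the number of
    surviving states after each step.
    """
    states = {(): (0.0, "")}            # stack -> (score, structure prefix)
    sizes = []
    for j, nuc in enumerate(x):
        nxt: dict = {}
        for stack, (score, struct) in states.items():
            _relax(nxt, stack + (j,), score, struct + "(")                 # push
            _relax(nxt, stack, score + UNPAIRED_SCORE, struct + ".")       # skip
            if stack and (x[stack[-1]], nuc) in ALLOWED_PAIRS:             # pop
                _relax(nxt, stack[:-1], score + PAIR_SCORE, struct + ")")
        states = nxt
        sizes.append(len(states))
    score, struct = states[()]          # the empty stack = a complete structure
    return struct, score, sizes


print(merged_search("CCAGG"))
```

On CCAGG the per-step state counts are 2, 4, 8, 16, 32, i.e., exactly $2^j$ at step j: merging removes duplicate stacks, but the growth is still exponential.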

Details: Pack “Equivalent” States => $O(n^3)$
• two states are equivalent if they share the same stack top
• packing: equivalent states are merged into one state whose lower stack is left unspecified (“?”)
• unpacking: when the stack top is matched and popped, the packed state is expanded back into its predecessors
[Figure: the merged search graph (E) next to the packed graph for x = CCAGG, e.g., ((.)) +1.9 and .(.) +0.8]

Details: Packing “Temporarily Equivalent” States
• two states are “temporarily equivalent” if they share the same stack-top index
• because they are looking for the same nucleotide(s) to match this stack-top nucleotide
• we pack them as a single state until a match is found, at which point they unpack
• combined with beam search, this is how we can explore exponentially many structures in linear time
[Figure: exhaustive search tree vs. packed states for x = CCAGG (packing, then unpacking)]
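A minimal sketch of stack-top packing (Version 3) for the toy model. Each packed state is indexed only by the step j and the stack-top position t, and stores the best score of the segment after t; what lies below t is recovered only when t is popped, by combining with the states at step t-1 (the “unpacking”). The table layout, the names (`stacktop_dp`, `inside`, `back`), and the traceback are assumptions for illustration, not LinearFold's actual data structures.

```python
ALLOWED_PAIRS = {("G", "C"), ("C", "G"), ("A", "U"), ("U", "A"), ("G", "U"), ("U", "G")}
PAIR_SCORE, UNPAIRED_SCORE = 1.0, -0.1  # toy Nussinov-style model


def stacktop_dp(x: str) -> tuple[str, float]:
    """Left-to-right DP that packs states sharing the same stack top: O(n^3).

    inside[j][t] is the best score of the segment x[t+1..j] (1-based) given
    that position t is the unmatched "(" on top of the stack (t = 0 means the
    stack is empty). Everything below t is packed away.
    """
    n = len(x)
    inside = [{} for _ in range(n + 1)]
    back = [{} for _ in range(n + 1)]
    inside[0][0] = 0.0

    def relax(j: int, t: int, score: float, move) -> None:
        if t not in inside[j] or score > inside[j][t]:
            inside[j][t], back[j][t] = score, move

    for j in range(1, n + 1):                      # consume nucleotide j (1-based)
        nuc = x[j - 1]
        for t, score in inside[j - 1].items():
            relax(j, t, score + UNPAIRED_SCORE, "skip")        # skip: j unpaired
            if t > 0 and (x[t - 1], nuc) in ALLOWED_PAIRS:     # pop: pair (t, j)
                for k, left in inside[t - 1].items():          # unpack t's predecessors
                    relax(j, k, left + score + PAIR_SCORE, ("pop", t))
        relax(j, j, 0.0, "push")                   # push: j becomes the new stack top

    # Traceback from the complete state (empty stack after all n nucleotides).
    struct = ["."] * (n + 1)                       # index 0 unused
    todo = [(n, 0)]
    while todo:
        j, t = todo.pop()
        while j > t:                               # segment (t, j] is non-empty
            move = back[j][t]
            if move == "skip":
                j -= 1
            else:
                s = move[1]                        # j was paired with s
                struct[s], struct[j] = "(", ")"
                todo.append((j - 1, s))            # the segment inside the pair
                j = s - 1                          # left of the pair, same top t
    return "".join(struct[1:]), inside[n][0]


print(stacktop_dp("CCAGG"))
```

The three nested loops (step j, stack top t, predecessor top k) give $O(n^3)$ time, and on CCAGG the result is the same optimum as exhaustive search: ((.)) with score +1.9.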

Idea 3: Beam Search => Version 4: $O(n)$, approx.
• keep only the top b states at each step: high-score (low-energy) states stay in the beam, low-score (high-energy) states are pruned
[Figure panels F and G: the stack-top-packed search graph for x = CCAGG before (F) and after (G) beam pruning with beam width b; the optimal ((.)) +1.9 survives]
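A sketch of Version 4: the same stack-top DP, but after each step only the top b states are kept. Two choices here are assumptions of this toy sketch rather than details from the slides: states are ranked by a prefix score (the best score of everything before the stack top plus the segment score after it), and the empty-stack state (t = 0) is never pruned, so a complete structure always survives. With `beam=None` the search is exact; with a fixed b, each step starts from at most b states, so the running time is linear in n.

```python
from typing import Optional

ALLOWED_PAIRS = {("G", "C"), ("C", "G"), ("A", "U"), ("U", "A"), ("G", "U"), ("U", "G")}
PAIR_SCORE, UNPAIRED_SCORE = 1.0, -0.1  # toy Nussinov-style model


def beam_fold(x: str, beam: Optional[int] = None) -> tuple[str, float]:
    """Stack-top DP with beam pruning (exact when beam is None)."""
    if beam is not None and beam < 1:
        raise ValueError("beam must be at least 1")
    n = len(x)
    inside = [{} for _ in range(n + 1)]   # inside[j][t]: best score of segment (t, j]
    back = [{} for _ in range(n + 1)]
    best_prefix = [0.0] * (n + 1)         # best full-prefix score among survivors at step j
    inside[0][0] = 0.0

    def relax(j: int, t: int, score: float, move) -> None:
        if t not in inside[j] or score > inside[j][t]:
            inside[j][t], back[j][t] = score, move

    for j in range(1, n + 1):
        nuc = x[j - 1]
        for t, score in inside[j - 1].items():
            relax(j, t, score + UNPAIRED_SCORE, "skip")
            if t > 0 and (x[t - 1], nuc) in ALLOWED_PAIRS:
                for k, left in inside[t - 1].items():    # unpack surviving predecessors
                    relax(j, k, left + score + PAIR_SCORE, ("pop", t))
        relax(j, j, 0.0, "push")

        # Rank states by prefix score = best score before t + segment score after t.
        layer = inside[j]
        prefix = {t: s + (best_prefix[t - 1] if t > 0 else 0.0) for t, s in layer.items()}
        if beam is not None and len(layer) > beam:
            # Always keep the empty-stack state (t = 0), then the best-scoring others.
            keep = sorted(layer, key=lambda t: (t == 0, prefix[t]), reverse=True)[:beam]
            inside[j] = {t: layer[t] for t in keep}
            back[j] = {t: back[j][t] for t in keep}
        best_prefix[j] = max(prefix[t] for t in inside[j])

    # Traceback, identical to the exact stack-top DP.
    struct = ["."] * (n + 1)
    todo = [(n, 0)]
    while todo:
        j, t = todo.pop()
        while j > t:
            move = back[j][t]
            if move == "skip":
                j -= 1
            else:
                s = move[1]
                struct[s], struct[j] = "(", ")"
                todo.append((j - 1, s))
                j = s - 1
    return "".join(struct[1:]), inside[n][0]


for b in (None, 3, 1):
    print(b, beam_fold("CCAGG", beam=b))
```

On CCAGG, `beam=3` still finds ((.)) (+1.9), while `beam=1` keeps only the empty-stack state and returns the all-unpaired structure (-0.5): a small illustration of why the result is approximate when the beam is too narrow.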

From $O(n^3)$ to $O(3^n)$, back to $O(n^3)$, finally to $O(n)$
• v0: conventional bottom-up DP: $O(n^3)$
• 4 versions of incremental (5’-to-3’) search:
  • v1: exhaustive, no DP: $O(3^n)$ (panel D)
  • v2: naive DP, merge by full stack (+ Idea 1: identical stack merging): $O(2^n)$ (panel E)
  • v3: stack-top DP, pack by stack top (+ Idea 2: stack-top packing): $O(n^3)$ (panel F)
  • v4: approx. DP via beam search (+ Idea 3: beam pruning): $O(n)$, i.e., LinearFold (panel G)
• this is a simple illustration on the toy “Nussinov-like” scoring model
• our real systems have full scoring functions

Computational Linguistics => Computational Biology
• 1955 Chomsky: context-free grammars (CFGs)
• 1958 Backus & Naur: CFGs for programming languages
• 1964 Cocke, 1965 Kasami, 1967 Younger: CKY, $O(n^3)$ for all CFGs (v0)
• 1965 Knuth: LR parsing for restricted CFGs: $O(n)$
• 1978 Nussinov, 1981 Zuker & Stiegler: $O(n^3)$ RNA folding (v0)
• 1986 Tomita: Generalized LR for all CFGs: $O(n^3)$ (v3)
• 2019: 5’-to-3’ $O(n^3)$ RNA folding (LinearFold with $+\infty$ beam) (v3)
• 2010 Huang & Sagae: $O(n)$ (approx.) DP for all CFGs (v4)
• 2019 LinearFold: $O(n)$ (approx.) RNA folding (v4)

Connections to Incremental Parsing
• shared key observation: local ambiguity packing
• pack non-crucial local ambiguities along the way
• unpack (in a reduce action) only when needed
• psycholinguistic evidence (eye-tracking experiments): delayed disambiguation, e.g. "John and Mary had 2 papers each" vs. "John and Mary had 2 papers together", where only the final word tells the reader whether the papers are counted per person or jointly (Frazier and Rayner, 1990; Frazier, 1999)
(Huang and Sagae, 2010)

[Figure: packing vs. unpacking of local ambiguities, shown with partial dot-bracket structures alongside the sentence analogue "John and Mary … had 2 papers … each / together" (Frazier and Rayner, 1990; Frazier, 1999).]
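What packing buys can be seen by counting: one DP cell for a span seq[i:j] stands for every nested structure of that span, so a table with O(n^2) cells can represent a number of structures that grows exponentially with n. A small sketch, assuming the G-C/A-U/G-U pairs from the slides and a hypothetical minimum hairpin loop of 3; `count_structures` is an illustrative name, not part of LinearFold.

```python
# Allowed pairs from the slides; MIN_LOOP is an assumed minimum hairpin size.
PAIRS = {("G", "C"), ("C", "G"), ("A", "U"), ("U", "A"), ("G", "U"), ("U", "G")}
MIN_LOOP = 3


def count_structures(seq: str) -> int:
    """Count the nested secondary structures of seq (sum instead of max in the DP).

    C[i][j] covers seq[i:j]; each of the (n + 1)(n + 2) / 2 cells is one packed
    state standing for all structures of its span.
    """
    n = len(seq)
    C = [[0] * (n + 1) for _ in range(n + 1)]
    for j in range(n + 1):                        # left to right, 5'-to-3'
        C[j][j] = 1                               # the empty structure
        for i in range(j - 1, -1, -1):
            total = C[i][j - 1]                   # seq[j-1] unpaired
            for k in range(i, j - 1 - MIN_LOOP):  # seq[k] pairs with seq[j-1]
                if (seq[k], seq[j - 1]) in PAIRS:
                    total += C[i][k] * C[k + 1][j - 1]
            C[i][j] = total
    return C[0][n]
```

For example, "GGAAACC" has 6 structures (the empty one, four single pairs, and one two-pair stem); for the 76-nt tRNA above, `count_structures` can be compared with the 77 · 78 / 2 span states the table stores.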

Results

LinearFold with SOTA Prediction Models
• models from two widely used folding engines:
  • CONTRAfold MFE (machine-learned)
  • Vienna RNAfold (thermodynamic)
• we linearized both systems from O(n^3) to O(n)

| efficiency | time | space | machine-learned | thermodynamic |
|---|---|---|---|---|
| baselines | O(n^3) | O(n^2) | CONTRAfold | Vienna RNAfold |
| our work | O(n) | O(n) | LinearFold-C | LinearFold-V |
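The linearization can be illustrated with a simplified sketch (hypothetical names, not LinearFold's code): scan the sequence 5’-to-3’, keep one packed state per span seq[i:j] ending at the current position, and, when a beam size is given, prune each column to its best `beam` states. Assumptions: Nussinov's maximize-pairs objective stands in for the CONTRAfold and Vienna scoring models, the minimum hairpin loop is 3, and states are ranked by prefix score plus span score, one plausible heuristic rather than the exact one LinearFold uses. With `beam=None` the scan is exact and O(n^3) (the "+∞ beam" case); with a fixed beam the work grows linearly with n.

```python
from typing import Dict, List, Optional, Tuple

# Allowed pairs from the slides (G-C, A-U, G-U); MIN_LOOP is an assumption.
PAIRS = {("G", "C"), ("C", "G"), ("A", "U"), ("U", "A"), ("G", "U"), ("U", "G")}
MIN_LOOP = 3

Back = Tuple[str, int]                  # ("empty" | "skip" | "pair", k)
Column = Dict[int, Tuple[int, Back]]    # i -> (best #pairs in seq[i:j], backpointer)


def fold(seq: str, beam: Optional[int] = None) -> Tuple[int, str]:
    """Scan seq 5'-to-3'; cols[j] holds one packed state per start i of span seq[i:j].

    beam=None keeps all states (exact, O(n^3) time). A finite beam keeps only
    the `beam` best states per column (approximate, linear in n for fixed beam).
    """
    n = len(seq)
    cols: List[Column] = [{j: (0, ("empty", -1))} for j in range(n + 1)]

    def update(col: Column, i: int, score: int, back: Back) -> None:
        if i not in col or score > col[i][0]:
            col[i] = (score, back)

    for j in range(n):
        nxt = cols[j + 1]
        for i, (s, _) in cols[j].items():          # seq[j] left unpaired
            update(nxt, i, s, ("skip", -1))
        for k1, (inner, _) in cols[j].items():     # pair seq[k] with seq[j], k = k1 - 1
            k = k1 - 1
            if k < 0 or j - k1 < MIN_LOOP or (seq[k], seq[j]) not in PAIRS:
                continue
            for i, (left, _) in cols[k].items():   # seq[i:k] + "(" inner ")"
                update(nxt, i, left + inner + 1, ("pair", k))
        if beam is not None:
            # Rank spans by prefix score (best over seq[0:i]) + span score; the
            # full prefix (i = 0) and the empty span (i = j + 1) are always kept.
            ranked = sorted((i for i in nxt if 0 < i <= j),
                            key=lambda i: cols[i][0][0] + nxt[i][0], reverse=True)
            kept = {0, j + 1, *ranked[:beam]}
            cols[j + 1] = {i: v for i, v in nxt.items() if i in kept}

    # Follow backpointers from the full-sequence state (n, 0).
    struct = ["."] * n
    stack = [(n, 0)]
    while stack:
        j, i = stack.pop()
        kind, k = cols[j][i][1]
        if kind == "skip":
            stack.append((j - 1, i))
        elif kind == "pair":
            struct[k], struct[j - 1] = "(", ")"
            stack.extend([(k, i), (j - 1, k + 1)])
    return cols[n][0][0], "".join(struct)
```

For example, `fold(seq, beam=100)` returns a (number of pairs, dot-bracket) tuple; because pruning can discard the optimal span, the beamed score can be lower than the exact one. Note that this sketch spends about O(n · beam^2) work in its inner loops, which is not the tighter bound of the real system.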

Efficiency & Scalability: O(n) time, O(n) memory
[Figure: running time (seconds) vs. sequence length on the Archive II data set (~3,000 seqs, max len ~3,000 nt) and on the RNAcentral data set (sampled, max len ~250,000 nt). Empirical scaling: Vienna RNAfold ~n^2.4, CONTRAfold MFE ~n^2.2, LinearFold-V ~n^1.2, LinearFold-C ~n^1.1. At 10,000 nt (~HIV): ~4 min (cubic baseline) vs. 7 s (LinearFold); at 244,296 nt (longest in RNAcentral): ~200 hrs vs. 120 s.]
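Exponents such as ~n^2.4 or ~n^1.1 are empirical. The slide does not say how they were obtained; one common approach, assumed here, is a least-squares line through (log n, log t), whose slope b estimates t ≈ a · n^b. `fit_exponent` is a hypothetical helper:

```python
import math
from typing import Sequence


def fit_exponent(lengths: Sequence[float], seconds: Sequence[float]) -> float:
    """Least-squares slope of log(time) vs. log(length): the b in time ≈ a * n^b."""
    xs = [math.log(n) for n in lengths]
    ys = [math.log(t) for t in seconds]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var = sum((x - mx) ** 2 for x in xs)
    return cov / var
```

Feeding it lengths and running times measured on one machine gives the empirical exponent; a slope near 1 indicates linear scaling, and a slope near 3 indicates cubic scaling.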

Accuracy
• tested on the Archive II dataset (on a family-by-family basis)
• significantly better on 3 long families
• biggest boost on the longest families: 16S/23S rRNAs
• LinearFold-V vs. Vienna is similar
[Figure: precision and recall (%) per family for standard O(n^3) search vs. LinearFold O(n) search; families: tRNA, 5S rRNA, SRP, RNaseP, tmRNA, Group I Intron, telomerase RNA, 16S rRNA, 23S rRNA.]
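Precision and recall here are computed over base pairs. A minimal sketch, assuming exact matching of (i, j) pairs between predicted and reference dot-bracket strings; the evaluation protocol behind these results (for example, any tolerance for slipped pairs) may differ, and the function names are hypothetical:

```python
from typing import Set, Tuple


def pairs_of(db: str) -> Set[Tuple[int, int]]:
    """Base pairs (i, j) encoded by a well-formed dot-bracket string."""
    stack, pairs = [], set()
    for j, c in enumerate(db):
        if c == "(":
            stack.append(j)
        elif c == ")":
            pairs.add((stack.pop(), j))
    return pairs


def precision_recall(pred: str, gold: str) -> Tuple[float, float]:
    """Precision = correct / predicted pairs; recall = correct / reference pairs."""
    p, g = pairs_of(pred), pairs_of(gold)
    correct = len(p & g)
    precision = correct / len(p) if p else 0.0
    recall = correct / len(g) if g else 0.0
    return precision, recall
```

For example, predicting "(......)" against the reference "((....))" finds one of two pairs, so precision is 1.0 and recall is 0.5.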

Improvements on Long-Range Base Pairs
• long-distance pairs are well known to be hard to predict
• LinearFold outputs fewer long-range base pairs, but more correct ones
[Figure: sensitivity and PPV (%) by base-pair distance (1-200, 201-500, >500) for CONTRAfold MFE vs. LinearFold-C (b=100).]
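Sensitivity is recall and PPV is precision, computed separately for each base-pair distance bin. A sketch assuming exact (i, j) matching between predicted and reference dot-bracket strings, taking the distance of pair (i, j) to be j - i (an assumption), and using the bins 1-200, 201-500 and >500 from the plot; `binned_scores` is a hypothetical name:

```python
from typing import List, Optional, Set, Tuple

# Distance bins from the plot; None means "no upper bound".
BINS: List[Tuple[int, Optional[int]]] = [(1, 200), (201, 500), (501, None)]


def pairs_of(db: str) -> Set[Tuple[int, int]]:
    """Base pairs (i, j) encoded by a well-formed dot-bracket string."""
    stack, pairs = [], set()
    for j, c in enumerate(db):
        if c == "(":
            stack.append(j)
        elif c == ")":
            pairs.add((stack.pop(), j))
    return pairs


def in_bin(pair: Tuple[int, int], lo: int, hi: Optional[int]) -> bool:
    d = pair[1] - pair[0]                  # assumed distance: j - i
    return d >= lo and (hi is None or d <= hi)


def binned_scores(pred: str, gold: str) -> List[Tuple[float, float]]:
    """(sensitivity, PPV) for each distance bin in BINS."""
    p, g = pairs_of(pred), pairs_of(gold)
    scores = []
    for lo, hi in BINS:
        pb = {x for x in p if in_bin(x, lo, hi)}
        gb = {x for x in g if in_bin(x, lo, hi)}
        correct = len(pb & gb)
        sensitivity = correct / len(gb) if gb else 0.0
        ppv = correct / len(pb) if pb else 0.0
        scores.append((sensitivity, ppv))
    return scores
```

A prediction that emits fewer long-range pairs, as described on the slide, lowers the PPV denominator in the >500 bin, so PPV can rise even when sensitivity stays flat.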
