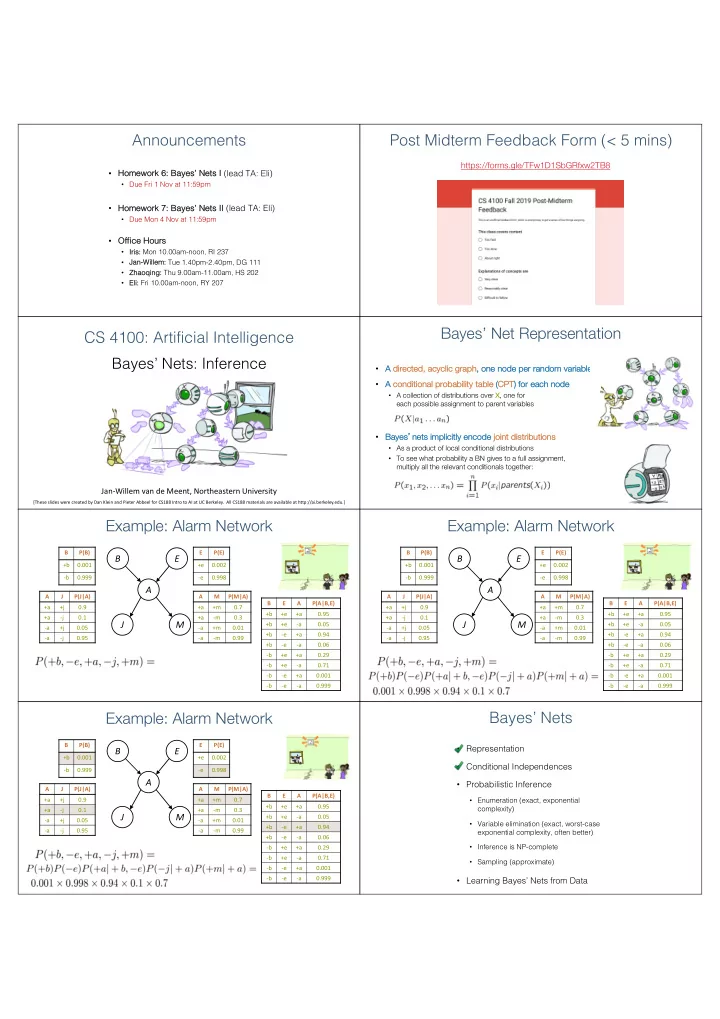

Announcements

• Post-Midterm Feedback Form (< 5 mins): https://forms.gle/TFw1D1SbGRfxw2TB8
• Homework 6: Bayes' Nets I (lead TA: Eli)
  • Due Fri 1 Nov at 11:59pm
• Homework 7: Bayes' Nets II (lead TA: Eli)
  • Due Mon 4 Nov at 11:59pm
• Office Hours
  • Iris: Mon 10.00am-noon, RI 237
  • Jan-Willem: Tue 1.40pm-2.40pm, DG 111
  • Zhaoqing: Thu 9.00am-11.00am, HS 202
  • Eli: Fri 10.00am-noon, RY 207

CS 4100: Artificial Intelligence

Bayes' Nets: Inference

Jan-Willem van de Meent, Northeastern University

[These slides were created by Dan Klein and Pieter Abbeel for CS188 Intro to AI at UC Berkeley. All CS188 materials are available at http://ai.berkeley.edu.]

Bayes' Net Representation

• A directed, acyclic graph, one node per random variable
• A conditional probability table (CPT) for each node
  • A collection of distributions over X, one for each possible assignment to parent variables
• Bayes' nets implicitly encode joint distributions
  • As a product of local conditional distributions
  • To see what probability a BN gives to a full assignment, multiply all the relevant conditionals together:

$$P(x_1, x_2, \dots, x_n) = \prod_{i=1}^{n} P(x_i \mid \mathrm{parents}(X_i))$$
Example: Alarm Network

Graph: B → A ← E, A → J, A → M

| B | P(B) |
|---|---|
| +b | 0.001 |
| -b | 0.999 |

| E | P(E) |
|---|---|
| +e | 0.002 |
| -e | 0.998 |

| B | E | A | P(A\|B,E) |
|---|---|---|---|
| +b | +e | +a | 0.95 |
| +b | +e | -a | 0.05 |
| +b | -e | +a | 0.94 |
| +b | -e | -a | 0.06 |
| -b | +e | +a | 0.29 |
| -b | +e | -a | 0.71 |
| -b | -e | +a | 0.001 |
| -b | -e | -a | 0.999 |

| A | J | P(J\|A) |
|---|---|---|
| +a | +j | 0.9 |
| +a | -j | 0.1 |
| -a | +j | 0.05 |
| -a | -j | 0.95 |

| A | M | P(M\|A) |
|---|---|---|
| +a | +m | 0.7 |
| +a | -m | 0.3 |
| -a | +m | 0.01 |
| -a | -m | 0.99 |

Bayes' Nets

• Representation
• Conditional Independences
• Probabilistic Inference
  • Enumeration (exact, exponential complexity)
  • Variable elimination (exact, worst-case exponential complexity, often better)
  • Inference is NP-complete
  • Sampling (approximate)
• Learning Bayes' Nets from Data
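To make the "multiply all the relevant conditionals together" rule concrete, here is a minimal Python sketch that scores one full assignment of the alarm network. The dictionary layout and the function name `joint` are illustrative assumptions, not part of the slides; only the CPT numbers come from the tables above.

```python
from itertools import product

# CPTs of the alarm network, keyed by signed values such as "+b" / "-b".
P_B = {"+b": 0.001, "-b": 0.999}
P_E = {"+e": 0.002, "-e": 0.998}
P_A = {  # P(A | B, E), keyed by (b, e, a)
    ("+b", "+e", "+a"): 0.95, ("+b", "+e", "-a"): 0.05,
    ("+b", "-e", "+a"): 0.94, ("+b", "-e", "-a"): 0.06,
    ("-b", "+e", "+a"): 0.29, ("-b", "+e", "-a"): 0.71,
    ("-b", "-e", "+a"): 0.001, ("-b", "-e", "-a"): 0.999,
}
P_J = {("+a", "+j"): 0.9, ("+a", "-j"): 0.1,    # P(J | A), keyed by (a, j)
       ("-a", "+j"): 0.05, ("-a", "-j"): 0.95}
P_M = {("+a", "+m"): 0.7, ("+a", "-m"): 0.3,    # P(M | A), keyed by (a, m)
       ("-a", "+m"): 0.01, ("-a", "-m"): 0.99}


def joint(b, e, a, j, m):
    """P(b, e, a, j, m) as the product of one entry from each CPT."""
    return P_B[b] * P_E[e] * P_A[(b, e, a)] * P_J[(a, j)] * P_M[(a, m)]


# One full assignment: burglary, no earthquake, alarm, both neighbors call.
p = joint("+b", "-e", "+a", "+j", "+m")
print(p)  # about 0.000591

# Sanity check: the 32 full assignments should sum to 1.
total = sum(
    joint(b, e, a, j, m)
    for b, e, a, j, m in product(P_B, P_E, ("+a", "-a"), ("+j", "-j"), ("+m", "-m"))
)
print(total)
```

Each call touches exactly one row per CPT, which is why a Bayes' net can represent a 32-entry joint with only 20 stored numbers here.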

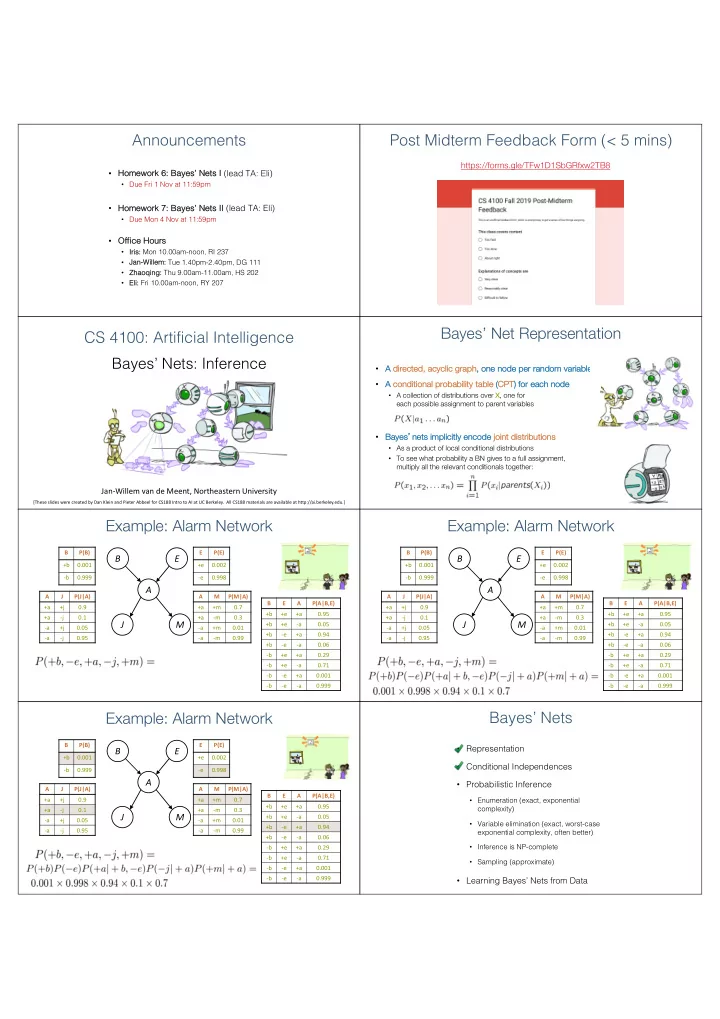

Inference

• Inference: calculating some useful quantity from a joint probability distribution
• Examples:
  • Posterior probability
  • Most likely explanation

Inference by Enumeration in Bayes' Net

• Given unlimited time, inference in BNs is easy
• Naïve strategy: Inference by enumeration

Capital B means: compute for all values b.

$$P(B \mid +j, +m) = \frac{P(B, +j, +m)}{P(+j, +m)} \propto_B P(B, +j, +m)$$

$$
\begin{aligned}
P(B, +j, +m) &= \sum_{e,a} P(B, e, a, +j, +m) \\
&= \sum_{e,a} P(B)\, P(e)\, P(a \mid B, e)\, P(+j \mid a)\, P(+m \mid a) \\
&= P(B)\, P(+e)\, P(+a \mid B, +e)\, P(+j \mid +a)\, P(+m \mid +a) \\
&\quad + P(B)\, P(+e)\, P(-a \mid B, +e)\, P(+j \mid -a)\, P(+m \mid -a) \\
&\quad + P(B)\, P(-e)\, P(+a \mid B, -e)\, P(+j \mid +a)\, P(+m \mid +a) \\
&\quad + P(B)\, P(-e)\, P(-a \mid B, -e)\, P(+j \mid -a)\, P(+m \mid -a)
\end{aligned}
$$
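The sum over e and a for P(B | +j, +m) can be checked numerically. The following is a minimal sketch of inference by enumeration on the alarm network; the dictionary layout and the function name `posterior_b` are illustrative assumptions, and the CPT values are copied from the alarm network tables.

```python
# Alarm network CPTs, keyed by signed values.
P_B = {"+b": 0.001, "-b": 0.999}
P_E = {"+e": 0.002, "-e": 0.998}
P_A = {  # P(A | B, E), keyed by (b, e, a)
    ("+b", "+e", "+a"): 0.95, ("+b", "+e", "-a"): 0.05,
    ("+b", "-e", "+a"): 0.94, ("+b", "-e", "-a"): 0.06,
    ("-b", "+e", "+a"): 0.29, ("-b", "+e", "-a"): 0.71,
    ("-b", "-e", "+a"): 0.001, ("-b", "-e", "-a"): 0.999,
}
P_J = {("+a", "+j"): 0.9, ("+a", "-j"): 0.1,
       ("-a", "+j"): 0.05, ("-a", "-j"): 0.95}
P_M = {("+a", "+m"): 0.7, ("+a", "-m"): 0.3,
       ("-a", "+m"): 0.01, ("-a", "-m"): 0.99}


def posterior_b(j, m):
    """P(B | j, m) by enumeration: sum out e and a, then normalize."""
    unnormalized = {}
    for b in P_B:
        s = 0.0
        for e in P_E:              # hidden variable E
            for a in ("+a", "-a"): # hidden variable A
                s += (P_B[b] * P_E[e] * P_A[(b, e, a)]
                      * P_J[(a, j)] * P_M[(a, m)])
        unnormalized[b] = s        # this is P(b, j, m)
    z = sum(unnormalized.values()) # this is P(j, m)
    return {b: p / z for b, p in unnormalized.items()}


posterior = posterior_b("+j", "+m")
print(posterior)  # P(+b | +j, +m) comes out at roughly 0.284
```

The inner loops visit every combination of hidden values, so the cost grows exponentially with the number of hidden variables.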
Inference by Enumeration

• General case:
  • Evidence variables: $E_1, \dots, E_k = e_1, \dots, e_k$
  • Query* variable: $Q$
  • Hidden variables: $H_1, \dots, H_r$
  • Together these are all the variables $X_1, \dots, X_n$
• We want: $P(Q \mid e_1, \dots, e_k)$

\* Works fine with multiple query variables, too

• Step 1: Select the entries consistent with the evidence
• Step 2: Sum out H to get the joint of Query and evidence
• Step 3: Normalize (multiply by $\frac{1}{Z}$)

Inference by Enumeration vs. Variable Elimination

• Why is inference by enumeration so slow?
  • You join up the whole joint distribution before you sum out the hidden variables
• Idea: interleave joining and marginalizing!
  • Called "Variable Elimination"
  • Still NP-hard, but usually much faster than inference by enumeration
• First we'll need some new notation: factors

Factor Zoo

Goal: Let's make a taxonomy of conditional probability tables (we have seen most of these before)

Factor Zoo I

• Joint distribution: P(X,Y)
  • Entries P(x,y) for all x, y
  • Sums to 1

| T | W | P |
|---|---|---|
| hot | sun | 0.4 |
| hot | rain | 0.1 |
| cold | sun | 0.2 |
| cold | rain | 0.3 |

• Selected joint: P(x,Y)
  • A slice of the joint distribution
  • Entries P(x,y) for fixed x, all y
  • Sums to P(x) (usually not 1)

| T | W | P |
|---|---|---|
| cold | sun | 0.2 |
| cold | rain | 0.3 |

• Number of capitals = number of dimensions in table

Factor Zoo II

• Single conditional: P(Y | x)
  • Entries P(y | x) for fixed x, all y
  • Sums to 1

| T | W | P |
|---|---|---|
| cold | sun | 0.4 |
| cold | rain | 0.6 |

• Family of conditionals: P(Y | X)
  • Multiple conditionals
  • Entries P(y | x) for all x, y
  • Sums to |X| (size of domain)

| T | W | P |
|---|---|---|
| hot | sun | 0.8 |
| hot | rain | 0.2 |
| cold | sun | 0.4 |
| cold | rain | 0.6 |
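The factor types in Factor Zoo I and II can all be derived from the joint table P(T, W), which makes the "sums to" claims easy to verify. This is a small illustrative sketch; the variable names are assumptions, and the numbers are the ones in the tables above.

```python
# Joint distribution P(T, W), keyed by (t, w). Sums to 1.
joint = {
    ("hot", "sun"): 0.4, ("hot", "rain"): 0.1,
    ("cold", "sun"): 0.2, ("cold", "rain"): 0.3,
}

# Selected joint P(cold, W): the slice of the joint with T fixed to "cold".
selected = {w: p for (t, w), p in joint.items() if t == "cold"}
p_cold = sum(selected.values())  # sums to P(cold), not to 1

# Single conditional P(W | cold): the selected joint, normalized.
single_conditional = {w: p / p_cold for w, p in selected.items()}

# Family of conditionals P(W | T): one normalized slice per value of T.
p_t = {}
for (t, w), p in joint.items():
    p_t[t] = p_t.get(t, 0.0) + p
family = {(t, w): p / p_t[t] for (t, w), p in joint.items()}

print(sum(joint.values()))               # 1 (up to rounding)
print(p_cold)                            # 0.5
print(sum(single_conditional.values()))  # 1 (up to rounding)
print(sum(family.values()))              # 2 (up to rounding), the size of T's domain
```

The family of conditionals sums to 2 because it stacks two distributions over W, one for each value of T.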

Factor Zoo III

• Specified family: P(y | X)
  • Entries P(y | x) for fixed y, but for all x
  • Sums to … who knows!

| T | W | P |
|---|---|---|
| hot | rain | 0.2 |
| cold | rain | 0.6 |

Factor Zoo Summary

• In general, when we write P(Y_1 … Y_N | X_1 … X_M)
  • This is a factor, a multi-dimensional array containing numbers ≥ 0
  • Its values are P(y_1 … y_N | x_1 … x_M)
  • Any assigned (= lower-case) X or Y is a dimension missing (selected) from the array

Example: Traffic Domain

• Random Variables
  • R: Raining
  • T: Traffic
  • L: Late for class!

Graph: R → T → L

| R | P(R) |
|---|---|
| +r | 0.1 |
| -r | 0.9 |

| R | T | P(T\|R) |
|---|---|---|
| +r | +t | 0.8 |
| +r | -t | 0.2 |
| -r | +t | 0.1 |
| -r | -t | 0.9 |

| T | L | P(L\|T) |
|---|---|---|
| +t | +l | 0.3 |
| +t | -l | 0.7 |
| -t | +l | 0.1 |
| -t | -l | 0.9 |

$$P(L) = \,? \qquad P(L) = \sum_{r,t} P(r, t, L) = \sum_{r,t} P(r)\, P(t \mid r)\, P(L \mid t)$$

Inference by Enumeration: Procedural Outline

• Track all objects (factors)
• Initial factors are local CPTs (one per node): P(R), P(T | R), P(L | T)
• Any known values are selected
  • E.g. if we know L = +l, the initial factors are P(R), P(T | R), and the selected factor P(+l | T):

| T | L | P(+l\|T) |
|---|---|---|
| +t | +l | 0.3 |
| -t | +l | 0.1 |

• Procedure: Join all factors, eliminate all hidden variables, normalize

Operation 1: Join Factors

• First basic operation: joining factors
• Combining factors:
  • Just like a database join
  • Get all factors over the joining variable
  • Build a new factor over the union of the variables involved
• Example: Join on R, combining P(R) and P(T | R) into P(R, T)

| R | T | P(R,T) |
|---|---|---|
| +r | +t | 0.08 |
| +r | -t | 0.02 |
| -r | +t | 0.09 |
| -r | -t | 0.81 |

• Computation for each entry: pointwise products, $\forall r, t:\; P(r, t) = P(r) \cdot P(t \mid r)$

Example: Multiple Joins

• Join R: P(R), P(T | R), P(L | T) become P(R, T), P(L | T). The factor P(R, T) has O(2^2) entries.
• Join T: P(R, T), P(L | T) become P(R, T, L). The factor P(R, T, L) has O(2^3) entries.

| R | T | L | P(R,T,L) |
|---|---|---|---|
| +r | +t | +l | 0.024 |
| +r | +t | -l | 0.056 |
| +r | -t | +l | 0.002 |
| +r | -t | -l | 0.018 |
| -r | +t | +l | 0.027 |
| -r | +t | -l | 0.063 |
| -r | -t | +l | 0.081 |
| -r | -t | -l | 0.729 |

Operation 2: Eliminate

• Second basic operation: marginalization
• Take a factor and sum out a variable
  • Shrinks a factor to a smaller one
  • A projection operation
• Example: sum out R from P(R, T) to get P(T)

| T | P(T) |
|---|---|
| +t | 0.17 |
| -t | 0.83 |
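The two factor operations, join and eliminate, can be sketched in a few lines of Python and applied to the traffic domain. The factor representation (a tuple of variable names plus a dictionary from value tuples to numbers) and the function names `join` and `eliminate` are hypothetical choices made for this illustration, not something the slides prescribe.

```python
def join(f1, f2):
    """Pointwise product of two factors over the union of their variables."""
    vars1, table1 = f1
    vars2, table2 = f2
    out_vars = vars1 + tuple(v for v in vars2 if v not in vars1)
    table = {}
    for row1, p1 in table1.items():
        a1 = dict(zip(vars1, row1))
        for row2, p2 in table2.items():
            a2 = dict(zip(vars2, row2))
            # Rows combine only if they agree on every shared variable.
            if all(a1[v] == a2[v] for v in vars2 if v in a1):
                merged = {**a1, **a2}
                table[tuple(merged[v] for v in out_vars)] = p1 * p2
    return out_vars, table


def eliminate(factor, var):
    """Sum out one variable, producing a smaller factor."""
    vars_, table = factor
    i = vars_.index(var)
    out_vars = vars_[:i] + vars_[i + 1:]
    out = {}
    for row, p in table.items():
        key = row[:i] + row[i + 1:]
        out[key] = out.get(key, 0.0) + p
    return out_vars, out


# Local CPTs of the traffic domain, R -> T -> L.
P_R = (("R",), {("+r",): 0.1, ("-r",): 0.9})
P_T_given_R = (("R", "T"), {("+r", "+t"): 0.8, ("+r", "-t"): 0.2,
                            ("-r", "+t"): 0.1, ("-r", "-t"): 0.9})
P_L_given_T = (("T", "L"), {("+t", "+l"): 0.3, ("+t", "-l"): 0.7,
                            ("-t", "+l"): 0.1, ("-t", "-l"): 0.9})

# Inference by enumeration: join everything, then eliminate hidden variables.
P_RT = join(P_R, P_T_given_R)        # factor over (R, T)
P_RTL = join(P_RT, P_L_given_T)      # factor over (R, T, L), 8 entries
P_T = eliminate(P_RT, "R")           # the eliminate example: P(T)
P_L = eliminate(eliminate(P_RTL, "R"), "T")

print(P_RT[1])
print(P_T[1])   # +t is about 0.17, -t is about 0.83
print(P_L[1])   # +l is about 0.134, -l is about 0.866
```

With no evidence, the final factor is already normalized. With evidence, the remaining step would be to divide each entry by the sum of the entries.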
