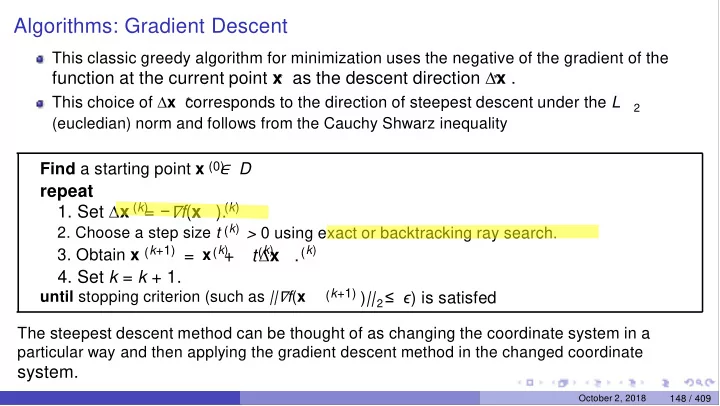

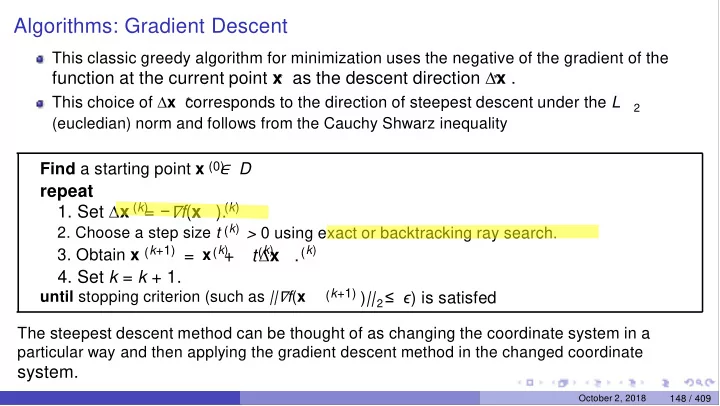

Algorithms: Gradient Descent This classic greedy algorithm for minimization uses the negative of the gradient of the function at the current point x as the descent direction ∆ x . ∗ ∗ ∗ This choice of ∆ x corresponds to the direction of steepest descent under the L 2 (eucledian) norm and follows from the Cauchy Shwarz inequality ∈ D Find a starting point x (0) repeat = − ∇ f ( x 1. Set ∆ x ( k ) ( k ) ). 2. Choose a step size t ( k ) > 0 using exact or backtracking ray search. 3. Obtain x ( k +1) = x ( k ) t ( k ) ∆ x ( k ) + . 4. Set k = k + 1. ( k +1) ) || ≤ ϵ ) is satisfed until stopping criterion (such as || ∇ f ( x 2 The steepest descent method can be thought of as changing the coordinate system in a particular way and then applying the gradient descent method in the changed coordinate system. October 2, 2018 148 / 409

Convergence of the Gradient Descent Algorithm We recap the (necessary) inequality (36) resulting from Lipschitz continuity of ∇ f ( x ): ⊤ f ( y ) ≤ f ( x ) + ∇ f ( x )( y − x ) + L ∥ y − x ∥ 2 2 = x − t ∇ f ( x ) ≡ y , we get Considering x ≡ x , and x k k +1 k k k October 2, 2018 149 / 409

Convergence of the Gradient Descent Algorithm We recap the (necessary) inequality (36) resulting from Lipschitz continuity of ∇ f ( x ): ( ) ⊤ f ( y ) ≤ f ( x ) + ∇ f ( x )( y − x ) + L ∥ y − x ∥ 2 2 2 = x − t ∇ f ( x ) ≡ y , we get Considering x ≡ x , and x k k +1 k k k ∇ f ( x ) 2 k L t 2 f ( x k +1 ) ≤ f ( x ) − t ∇ f ( x ) ∇ f ( x ) + k k ⊤ k k k 2 ) t ∇ f ( x ) Lt k = ⇒ f ( x k +1 ) ≤ f ( x ) − (1 − k k 2 2 We desire to have the following (46). It holds if.... ∇ f ( x ) b t f ( x k +1 ) ≤ f ( x ) − k k (46) 2 October 2, 2018 149 / 409

Convergence of the Gradient Descent Algorithm We recap the (necessary) inequality (36) resulting from Lipschitz continuity of ∇ f ( x ): ( ) ⊤ f ( y ) ≤ f ( x ) + ∇ f ( x )( y − x ) + L ∥ y − x ∥ 2 2 2 = x − t ∇ f ( x ) ≡ y , we get Considering x ≡ x , and x k k +1 k k k ∇ f ( x ) 2 k L t 2 f ( x k +1 ) ≤ f ( x ) − t ∇ f ( x ) ∇ f ( x ) + k k ⊤ k k k 2 ) t ∇ f ( x ) Lt k = ⇒ f ( x k +1 ) ≤ f ( x ) − (1 − k k 2 2 We desire to have the following (46). It holds if.... ∇ f ( x ) b t f ( x k +1 ) ≤ f ( x ) − k k { } (46) 2 the drop in the value of the objective will be atleast order of square of norm = ⇒ 1 − k t , we ensure that 0 < b t ≤ Lt 1 2 ≥ 1 ▶ With fxed step size t = b b 2 . of gradient L 2(1 − c t = min 1 , β 1 ) ▶ With backtracking step seach, (46) holds with b L derivation provided a few slides later See https://www.youtube.com/watch?v=SGZdsQviFYs&list=PLsd82ngobrvcYfCdnSnqM7lKLqE9qUUpX&index=17 October 2, 2018 149 / 409

Aside: Backtracking ray search and Lipschitz Continuity Recap the Backtracking ray search algorithm ▶ Choose a β ∈ (0 , 1) ▶ Start with t = 1 ▶ While f ( x + t ∆ x ) > f ( x ) + c t ∇ f ( x )∆ x , do T 1 ⋆ Update t ← βt k ∇ f ( x ) is satisfed if b On convergence, f ( x + t ∆ x ) ≤ f ( x ) + c t ∇ f ( x )∆ x T { } 1 For gradient descent, this means f ( x + t ∆ x ) ≤ f ( x ) − c t ∥∇ f ( x ) ∥ 2 1 For a function f with Lipschitz continuous ∇ f ( x ) we have that 2 f ( x k +1 ) ≤ f ( x k ) − b 2(1 − 1 ) c t t = min 1 , β { } L 2 Lt k Reason: With backtracking step seach, if 1 − 2 ≥ c , the Armijo rule will be satisfed. 1 2(1 − 1 ) c Lt k That is, 0 < t ≤ k = ⇒ 1 − 2 ≥ c . If not, there must exist an interger j for 1 L 2(1 −c 1 ) which β 2(1 − 1 ) c 2(1 − 1 ) c j t = min 1 , β ≤ β ≤ , we take b L L L October 2, 2018 152 / 409

∗ ⊤ ∗ Using convexity, we have f ( x ) ≥ f ( x ) + ∇ f ( x )( x − x ) k k k 2 = ⇒ f ( x ) ≤ f ( x ) + ∇ f ( x )( x − x ) ∗ ⊤ ∗ k k k k k ∇ f ( x ) Thus, + ∇ f ( x )( x − x ) − 2 ∇ f ( x ) − x − x f ( x k +1 ) ≤ f ( x ) − k t k ∇ f ( x ) 2 2 2 2 = ⇒ f ( x k +1 ) ≤ f ( x ) + ∇ f ( x )( x − x ) − ∗ ⊤ ∗ t k k k x − x 2 = ⇒ f ( x k +1 ) ≤ f ( x )+ ∗ ∗ T k k ∗ t k ∗ 1 1 2 t 2 t 2 October 2, 2018 150 / 409

∗ ⊤ ∗ Using convexity, we have f ( x ) ≥ f ( x ) + ∇ f ( x )( x − x ) k k k 2 = ⇒ f ( x ) ≤ f ( x ) + ∇ f ( x )( x − x ) ∗ ⊤ ∗ k k k k k ∇ f ( x ) Thus, + ∇ f ( x )( x − x ) − 2 ∇ f ( x ) − x − x f ( x k +1 ) ≤ f ( x ) − k t k k k ∇ f ( x ) 2 ( x − x 2 − x − x − t ∇ f ( x ) ) 2 2 = ⇒ f ( x k +1 ) ≤ f ( x ) + ∇ f ( x )( x − x ) − ∗ ⊤ ∗ t k k k k k +1 x − x 2 2 ( x − x − x 2 − x ) = ⇒ f ( x k +1 ) ≤ f ( x )+ ∗ ∗ T k k ∗ t k ∗ 1 1 2 t 2 t 2 2 2 = ⇒ f ( x k +1 ) ≤ f ( x ) + ∗ ∗ ∗ k 1 k 2 t ( x − x − x − x ) = ⇒ f ( x k +1 ) ≤ f ( x ∗ ) + ∗ ∗ 1 2 t 2 2 1 = ⇒ f ( x k +1 ) − f ( x ) ≤ ∗ ∗ ∗ k +1 (47) 2 t October 2, 2018 150 / 409

∑ ( ) 2 ∗ ) x ( (0) − x ∗ ) 1 i ∗ f ( x ) − f ( x ) ≤ 2 t i =1 i +1 ) ≤ f ( x ) ∀ i = 0 , 1 , . . . , k . We thus get 6 i The ray and line search ensure that f ( x i +1 ) ≤ f ( x ) + c 1 t ∇ f ( x )∆ x 6 By Armijo condition in (29), for some 0 < c 1 < 1, f ( x i i T i i October 2, 2018 151 / 409

∑ ( ) 2 ∗ ) x ( (0) − x ∗ ) 1 i ∗ f ( x ) − f ( x ) ≤ 2 t i =1 ∑ 2 i +1 ) ≤ f ( x ) ∀ i = 0 , 1 , . . . , k . We thus get 6 i The ray and line search ensure that f ( x k ( ) x (0) − x ∗ 1 k ∗ i ∗ f ( x ) − f ( x ) ≤ f ( x ) − f ( x ) ≤ k 2 tk i =1 k ∗ Thus, as k → ∞ , f ( x ) → f ( x ). This shows convergence for gradient descent. To get epsilon close to f(x*), it is su ffi cient for k to be O(1/epsilon) i +1 ) ≤ f ( x ) + c 1 t ∇ f ( x )∆ x 6 By Armijo condition in (29), for some 0 < c 1 < 1, f ( x i i T i i October 2, 2018 151 / 409

Rates of Convergence October 2, 2018 153 / 409

Convergence rate of convergence = slope increasing order observe acceleration (this is what we observed for the better algo for Rosenbrack function) October 2, 2018 154 / 409

Linear Convergence k +1 k 1 ∗ v , . . . , v is Linearly (or specifcally, Q-linearly) convergent if v ≤ r v − v − v k ∗ for some k ≥ θ , and r ∈ (0 , 1) ▶ ‘Q’ here stands for ‘quotient’ of the norms as shown above October 2, 2018 155 / 409

k +1 Q-convergence k ∗ v , . . . , v is Q-linearly convergent if 1 v ≤ r v − v − v k ∗ for some k ≥ θ , and r ∈ (0 , 1) ▶ ‘Q’ here stands for ‘quotient’ of the norms as shown above [ 11 n , . . . ] 2 , 21 4 , 41 1 ▶ Consider the sequence s s = 8 , . . . , 5 + 1 1 2 The sequence converges to 5 October 2, 2018 156 / 409

k +1 Q-convergence k ∗ v , . . . , v is Q-linearly convergent if 1 v ≤ r v − v − v k ∗ for some k ≥ θ , and r ∈ (0 , 1) ▶ ‘Q’ here stands for ‘quotient’ of the norms as shown above [ 11 n , . . . ] 2 , 21 4 , 41 1 ▶ Consider the sequence s s = 8 , . . . , 5 + 1 1 2 ∗ Q-linearly convergent The sequence converges to s = 5 and it is 1 October 2, 2018 156 / 409

k +1 Q-convergence k ∗ v , . . . , v is Q-linearly convergent if 1 v ≤ r v − v − v k ∗ for some k ≥ θ , and r ∈ (0 , 1) k +1 k +1 ▶ ‘Q’ here stands for ‘quotient’ of the norms as shown above k = [ 11 n , . . . ] 2 , 21 4 , 41 1 ▶ Consider the sequence s s = 8 , . . . , 5 + 1 1 2 ∗ ∗ The sequence converges to s = 5 and it is Q-linear convergence because: s 1 = − s 1 s − s 1 1 1 2 2 < 0 . 6(= M ) 1 k ∗ 1 1 1 2 ▶ How about the convergence result we got by assuming Lipschitz continuity with backtracking and exact line searches? October 2, 2018 156 / 409

Generalizing Q-convergence to R-convergence 21 4 , 21 1 Consider the sequence r r = 5 , 4 , . . . , 5 + 2 ⌋ , . . . 1 1 ⌊ n 4 5 The sequence converges to October 2, 2018 157 / 409

Generalizing Q-convergence to R-convergence 21 4 , 21 1 Consider the sequence r r = 5 , 4 , . . . , 5 + 2 ⌋ , . . . 1 1 ⌊ n 4 ∗ The sequence converges to s = 5 but not Q-linearly! 1 Let us consider the convergence result we got by assuming Lipschitz continuity with 2 backtracking and exact line searches: − x x ∗ (0) ∗ f ( x ) − f ( x ) ≤ k 2 tk October 2, 2018 157 / 409

Generalizing Q-convergence to R-convergence 21 4 , 21 1 Consider the sequence r r = 5 , 4 , . . . , 5 + 2 ⌋ , . . . 1 1 ⌊ n 4 ∗ The sequence converges to s = 5 but not Q-linearly! 1 Let us consider the convergence result we got by assuming Lipschitz continuity with 2 backtracking and exact line searches: − x x ∗ (0) ∗ f ( x ) − f ( x ) ≤ k 2 tk ≤ v , ∀ k , and We say that the sequence s , . . . , s is R-linearly convergent if s − s Q-convergence by itself insufcient. We will generalize it to R-convergence . { } ‘R’ here stands for ‘root’, as we are looking at convergence rooted at x ∗ k k ∗ k 1 v k converges Q-linearly to zero October 2, 2018 157 / 409

R-convergence assuming Lipschitz continuity ∥ x (0) − x ∥ 2 ∗ k α k , where α is a constant Consider v = = 2 tk Here, we have ∥ v k +1 −v ∥ ∗ <= k/(k+1) -- > 1 as k tends to infinity ∥ v −v ∥ k ∗ October 2, 2018 158 / 409

Recommend

More recommend