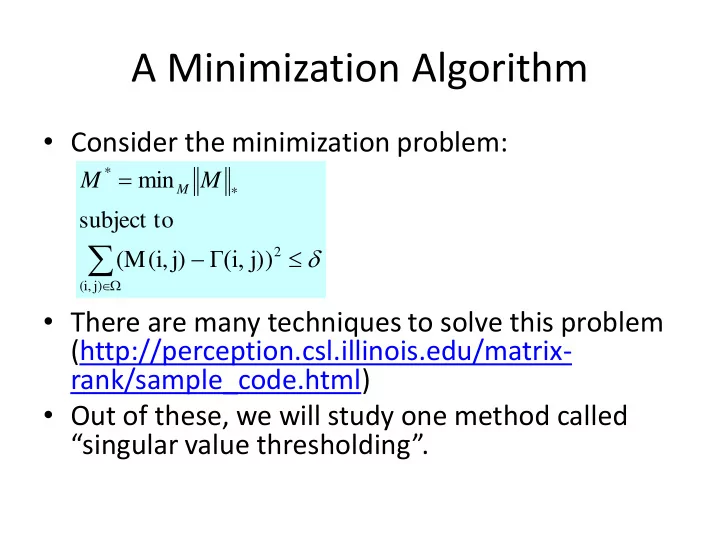

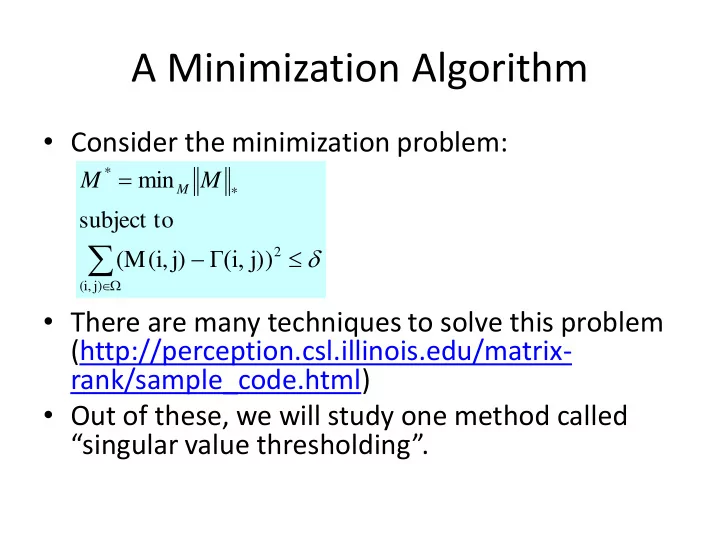

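A Minimization Algorithm
• Consider the minimization problem:
$$M^* = \arg\min_M \|M\|_* \quad \text{subject to } \forall (i,j) \in \Gamma,\; M(i,j) = \Phi(i,j)$$
where Γ is the set of observed indices, Φ(i,j) are the observed entries, and $\|M\|_*$ is the nuclear norm (the sum of the singular values of M).
• There are many techniques to solve this problem (http://perception.csl.illinois.edu/matrix-rank/sample_code.html).
• Of these, we will study one method called "singular value thresholding" (SVT).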

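Ref: Cai et al., "A singular value thresholding algorithm for matrix completion", SIAM Journal on Optimization, 2010.

Singular Value Thresholding (SVT)

$$
\begin{aligned}
&\hat{M} = \operatorname{SVT}(\tau, \{\delta_k > 0\})\;\{\\
&\quad Y^{(0)} = 0 \in \mathbb{R}^{n_1 \times n_2};\; k = 1;\\
&\quad \textbf{while } (\text{convergence criterion not met})\;\{\\
&\qquad M^{(k)} = \operatorname{soft\_threshold}(Y^{(k-1)}; \tau);\\
&\qquad Y^{(k)} = Y^{(k-1)} + \delta_k P_\Gamma(\Phi - M^{(k)});\\
&\qquad k = k + 1;\\
&\quad \}\\
&\quad \hat{M} = M^{(k-1)};\\
&\}
\end{aligned}
\qquad
\begin{aligned}
&\operatorname{soft\_threshold}(Y \in \mathbb{R}^{n_1 \times n_2}; \tau)\;\{\\
&\quad Y = USV^T \;(\text{using SVD});\\
&\quad \textbf{for } (k = 1 : \operatorname{rank}(Y))\;\; S(k,k) = \max(0, S(k,k) - \tau);\\
&\quad \textbf{return } \textstyle\sum_{i=1}^{\operatorname{rank}(Y)} S(i,i)\, u_i v_i^T;\\
&\}
\end{aligned}
$$

Here Φ holds the observed entries and $P_\Gamma$ keeps the entries indexed by Γ while setting all others to zero.

The soft-thresholding procedure obeys the following property (which we state without proof):
$$\operatorname{soft\_threshold}(Y; \tau) = \arg\min_X \; \tau\|X\|_* + \tfrac{1}{2}\|Y - X\|_F^2$$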
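A minimal NumPy sketch of the SVT iteration above, assuming a constant step size δ_k = δ and the stopping rule ||P_Γ(Φ − M^(k))||_F ≤ tol · ||P_Γ(Φ)||_F. The function names `soft_threshold`/`svt`, the boolean `mask` encoding of Γ, and the stopping rule are our own illustrative choices, not Cai et al.'s code.

```python
import numpy as np


def soft_threshold(Y: np.ndarray, tau: float) -> np.ndarray:
    """Singular value soft-thresholding: S(k,k) -> max(0, S(k,k) - tau)."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)
    # (U * s_shrunk) @ Vt equals sum_i s_shrunk[i] * u_i v_i^T
    return (U * s_shrunk) @ Vt


def svt(Phi: np.ndarray, mask: np.ndarray, tau: float, delta: float,
        tol: float = 1e-6, max_iter: int = 1000) -> np.ndarray:
    """SVT for matrix completion (hypothetical helper, constant step size delta).

    Phi  : matrix whose entries are trusted only where mask is True
    mask : boolean array marking the observed index set Gamma
    """
    P_Phi = np.where(mask, Phi, 0.0)                # P_Gamma(Phi)
    norm_obs = np.linalg.norm(P_Phi)                # Frobenius norm
    Y = np.zeros_like(P_Phi)                        # Y^(0) = 0
    M = Y
    for _ in range(max_iter):
        M = soft_threshold(Y, tau)                  # M^(k) = soft_threshold(Y^(k-1); tau)
        residual = np.where(mask, Phi - M, 0.0)     # P_Gamma(Phi - M^(k))
        if np.linalg.norm(residual) <= tol * norm_obs:
            break                                   # observed entries are matched
        Y = Y + delta * residual                    # Y^(k) = Y^(k-1) + delta * P_Gamma(...)
    return M
```

With a partial `mask` the same call performs completion. Cai et al. report using τ = 5n for n × n matrices in their experiments, and the convergence guarantee requires 0 < δ_k < 2.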

Properties of SVT (stated w/o proof)
• The sequence $\{M^{(k)}\}$ converges to the true solution of the problem below, provided the step sizes $\{\delta_k\}$ all lie strictly between 0 and 2:
$$M^* = \arg\min_M \; \tau\|M\|_* + 0.5\|M\|_F^2 \quad \text{subject to } \forall (i,j) \in \Gamma,\; M(i,j) = \Phi(i,j)$$
• For large values of τ, this converges to the solution of the original problem (i.e., without the Frobenius norm term).

Properties of SVT (stated w/o proof)
• The matrices $\{M^{(k)}\}$ turn out to have low rank (empirical observation; no proof established).
• The matrices $\{Y^{(k)}\}$ also turn out to be sparse (empirical observation; no rigorous proof established).
• The SVT step does not require computing the full SVD: we need only those singular vectors whose singular values exceed τ. Special iterative methods (e.g., Lanczos-based partial SVD) compute just these.
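The last bullet can be illustrated with a minimal NumPy sketch. Note that it swaps in randomized subspace iteration (Halko, Martinsson and Tropp) for the Lanczos-type methods used in practice; `top_k_svd`, `soft_threshold_partial`, the starting rank `k0` and the increment `step` are hypothetical names and choices. The rank k is increased until the smallest computed singular value drops to τ or below, so only the triplets that survive thresholding (plus a few extra) are formed. The result is approximate in general and exact, up to rounding, when Y has rank at most the final k.

```python
import numpy as np


def top_k_svd(Y: np.ndarray, k: int, n_power_iter: int = 4, seed: int = 0):
    """Approximate top-k singular triplets of Y via randomized subspace iteration."""
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(Y @ rng.standard_normal((Y.shape[1], k)))
    for _ in range(n_power_iter):
        # Re-orthonormalize at every step for numerical stability.
        Z, _ = np.linalg.qr(Y.T @ Q)
        Q, _ = np.linalg.qr(Y @ Z)
    B = Q.T @ Y                                   # small k x n2 matrix
    Ub, s, Vt = np.linalg.svd(B, full_matrices=False)
    return Q @ Ub, s, Vt


def soft_threshold_partial(Y: np.ndarray, tau: float, k0: int = 5, step: int = 5):
    """Singular value soft-thresholding using only the leading singular triplets."""
    k_max = min(Y.shape)
    k = min(k0, k_max)
    while True:
        U, s, Vt = top_k_svd(Y, k)
        # Stop once a computed singular value is <= tau: the rest would be zeroed.
        if s[-1] <= tau or k == k_max:
            break
        k = min(k + step, k_max)
    s_shrunk = np.maximum(s - tau, 0.0)
    return (U * s_shrunk) @ Vt
```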

Results
• The SVT algorithm is efficient and easy to implement in MATLAB.
• The authors report reconstructing a 30,000 × 30,000 matrix in just 17 minutes on a 1.86 GHz dual-core desktop with 3 GB of RAM, with MATLAB's multithreading option enabled.

Results (Data without noise) https://arxiv.org/abs/0810.3286

Results (Noisy Data) https://arxiv.org/abs/0810.3286

Results on real data
• The dataset consists of a matrix M of geodesic distances between 312 cities in the USA/Canada.
• This matrix is approximately low rank (in fact, the relative Frobenius error between M and its rank-3 approximation is 0.1159).
• 70% of the entries of this matrix (chosen uniformly at random) were blanked out.
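The two quantities in this setup can be computed as follows. This is a minimal NumPy sketch; the city-distance matrix itself is not reproduced here, so the helpers (hypothetical names `rank_r_relative_error` and `blank_out_mask`) would be applied to whatever matrix M one has. The mask blanks out an exact fraction of entries, chosen uniformly at random without replacement.

```python
import numpy as np


def rank_r_relative_error(M: np.ndarray, r: int) -> float:
    """||M - M_r||_F / ||M||_F for the best rank-r approximation M_r.

    By the Eckart-Young theorem M_r is the truncated SVD, so the error
    depends only on the discarded singular values.
    """
    s = np.linalg.svd(M, compute_uv=False)
    return float(np.sqrt(np.sum(s[r:] ** 2) / np.sum(s ** 2)))


def blank_out_mask(shape, frac_missing: float, seed: int = 0) -> np.ndarray:
    """Boolean mask, True on observed entries, with round(frac_missing * size)
    entries blanked out uniformly at random."""
    rng = np.random.default_rng(seed)
    n_total = int(np.prod(shape))
    n_missing = int(round(frac_missing * n_total))
    mask = np.ones(n_total, dtype=bool)
    mask[rng.choice(n_total, size=n_missing, replace=False)] = False
    return mask.reshape(shape)
```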

Results on real data https://arxiv.org/abs/0810.3286

Algorithm for Robust PCA
• The algorithm uses the augmented Lagrangian technique.
• See https://en.wikipedia.org/wiki/Augmented_Lagrangian_method and https://www.him.uni-bonn.de/fileadmin/him/Section6_HIM_v1.pdf
• Suppose you want to solve:
$$\min_x f(x) \quad \text{s.t. } \forall i \in I,\; c_i(x) = 0$$

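Algorithm for Robust PCA
• Suppose you want to solve:
$$\min_x f(x) \quad \text{s.t. } \forall i \in I,\; c_i(x) = 0$$
• The augmented Lagrangian method (ALM) adopts the following iterative updates:
$$x_k = \arg\min_x \; f(x) + \underbrace{\sum_{i \in I} \lambda_i c_i(x)}_{\text{Lagrangian term}} + \underbrace{\frac{\mu_k}{2} \sum_{i \in I} c_i(x)^2}_{\text{augmentation term}}, \qquad \lambda_i \leftarrow \lambda_i + \mu_k c_i(x_k)$$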
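To make the two updates concrete, here is a minimal NumPy sketch of ALM on a toy problem chosen because its x-step has a closed form: minimize f(x) = ½||x − x0||² subject to Ax − b = 0, so that c_i(x) = (Ax − b)_i. The constant penalty μ_k = μ and the name `alm_projection` are assumptions for illustration. The exact answer (the projection of x0 onto {x : Ax = b}) makes the result easy to check.

```python
import numpy as np


def alm_projection(x0, A, b, mu=10.0, n_iter=200):
    """ALM for  min 0.5 ||x - x0||^2  s.t.  A x - b = 0  (constant penalty mu)."""
    n = x0.shape[0]
    lam = np.zeros(A.shape[0])                 # one multiplier lambda_i per constraint
    H = np.eye(n) + mu * A.T @ A               # Hessian of the x-subproblem
    x = x0.copy()
    for _ in range(n_iter):
        # x-step: minimize f(x) + lam^T c(x) + (mu/2) ||c(x)||^2 in closed form
        x = np.linalg.solve(H, x0 - A.T @ lam + mu * A.T @ b)
        # multiplier step: lambda_i <- lambda_i + mu * c_i(x)
        lam = lam + mu * (A @ x - b)
    return x, lam
```

For this quadratic problem the multiplier error shrinks by a factor of at most 1/(1 + μ s²) per iteration, where s is the smallest singular value of A, so larger μ speeds convergence at the cost of a worse-conditioned x-step.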

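ALM: Some intuition
• What is the intuition behind the update of the Lagrange parameters $\{\lambda_i\}$?
• The problem is:
$$\min_x f(x) \;\; \text{s.t. } \forall i \in I,\; c_i(x) = 0 \quad \equiv \quad \min_x \max_{\boldsymbol{\lambda}} \; f(x) + \boldsymbol{\lambda}^t \mathbf{c}(x), \qquad \mathbf{c}(x) = (c_1(x), c_2(x), \ldots, c_{|I|}(x))$$
The maximum w.r.t. λ will be ∞ unless the constraint is satisfied, and for feasible x the λ term vanishes, leaving f(x). Hence these problems are equivalent.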

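ALM: Some intuition
• The problem is:
$$\min_x f(x) \;\; \text{s.t. } \forall i \in I,\; c_i(x) = 0 \quad \equiv \quad \min_x \max_{\boldsymbol{\lambda}} \; f(x) + \boldsymbol{\lambda}^t \mathbf{c}(x), \qquad \mathbf{c}(x) = (c_1(x), c_2(x), \ldots, c_{|I|}(x))$$
Due to the non-smoothness of the max function (as a function of x, it jumps from f(x) to ∞ off the feasible set), the equivalence has little computational benefit. We smooth it by adding another term that penalizes deviations from a prior estimate $\bar{\boldsymbol{\lambda}}$ of the λ parameters:
$$\min_x \max_{\boldsymbol{\lambda}} \; f(x) + \boldsymbol{\lambda}^t \mathbf{c}(x) - \frac{1}{2\mu}\|\boldsymbol{\lambda} - \bar{\boldsymbol{\lambda}}\|^2$$
Maximization w.r.t. λ is now easy: $\boldsymbol{\lambda} = \bar{\boldsymbol{\lambda}} + \mu\, \mathbf{c}(x)$.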
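Carrying out this maximization explicitly (a short derivation added for completeness) shows why the smoothed problem reproduces the ALM updates. Setting the gradient w.r.t. λ to zero and substituting the maximizer back:

$$
\begin{aligned}
\nabla_{\boldsymbol{\lambda}}:\;\; \mathbf{c}(x) - \tfrac{1}{\mu}(\boldsymbol{\lambda} - \bar{\boldsymbol{\lambda}}) = 0
\;&\Rightarrow\; \boldsymbol{\lambda} = \bar{\boldsymbol{\lambda}} + \mu\,\mathbf{c}(x),\\
f(x) + (\bar{\boldsymbol{\lambda}} + \mu\mathbf{c}(x))^t \mathbf{c}(x) - \tfrac{\mu}{2}\|\mathbf{c}(x)\|^2
\;&=\; f(x) + \bar{\boldsymbol{\lambda}}^t \mathbf{c}(x) + \tfrac{\mu}{2}\|\mathbf{c}(x)\|^2 .
\end{aligned}
$$

The right-hand side is the Lagrangian term plus the augmentation term minimized in the ALM x-step (with $\bar{\boldsymbol{\lambda}}$ set to the current multipliers and μ = μ_k), and the maximizer $\bar{\boldsymbol{\lambda}} + \mu\,\mathbf{c}(x_k)$ is exactly the update $\lambda_i \leftarrow \lambda_i + \mu_k c_i(x_k)$.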

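ALM: Some intuition – inequality constraints
$$\min_x f(x) \;\; \text{s.t. } \forall i \in I,\; c_i(x) \le 0 \quad \equiv \quad \min_x \max_{\boldsymbol{\lambda} \ge 0} \; f(x) + \boldsymbol{\lambda}^t \mathbf{c}(x), \qquad \mathbf{c}(x) = (c_1(x), c_2(x), \ldots, c_{|I|}(x))$$
$$\min_x \max_{\boldsymbol{\lambda} \ge 0} \; f(x) + \boldsymbol{\lambda}^t \mathbf{c}(x) - \frac{1}{2\mu}\|\boldsymbol{\lambda} - \bar{\boldsymbol{\lambda}}\|^2$$
Maximization w.r.t. λ is now easy: $\boldsymbol{\lambda} = \max(\bar{\boldsymbol{\lambda}} + \mu\, \mathbf{c}(x), 0)$, taken elementwise.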
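The coordinates of λ decouple, and each term is a concave quadratic in λ_i, so its maximizer over λ_i ≥ 0 is the unconstrained maximizer clipped at zero. Substituting back (a derivation added for completeness) gives the smooth penalty that inequality-constrained ALM minimizes over x:

$$
\begin{aligned}
\max_{\lambda_i \ge 0}\; \lambda_i c_i(x) - \tfrac{1}{2\mu}(\lambda_i - \bar\lambda_i)^2
\;&\Rightarrow\; \lambda_i = \max(\bar\lambda_i + \mu c_i(x),\, 0),\\
\text{optimal value} \;&=\; \tfrac{1}{2\mu}\left(\max(\bar\lambda_i + \mu c_i(x),\, 0)^2 - \bar\lambda_i^2\right).
\end{aligned}
$$

When $\bar\lambda_i + \mu c_i(x) \ge 0$ this reduces to $\bar\lambda_i c_i(x) + \tfrac{\mu}{2} c_i(x)^2$, the same terms as for an equality constraint. Otherwise the constraint is treated as inactive and the term is the constant $-\bar\lambda_i^2/(2\mu)$.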

Theorem 1 (Informal Statement)
• Consider a matrix M of size $n_1 \times n_2$ which is the sum of a "sufficiently low-rank" component L and a "sufficiently sparse" component S whose support is uniformly randomly distributed in the entries of M.
• Then the solution of the following optimization problem (known as principal component pursuit) yields exact estimates of L and S with "very high" probability:
$$(L', S') = \arg\min_{(L,S)} E(L,S), \qquad E(L,S) = \|L\|_* + \frac{1}{\sqrt{\max(n_1, n_2)}}\|S\|_1 \quad \text{subject to } L + S = M$$
This is a convex optimization problem. Note: $\|S\|_1 = \sum_{i=1}^{n_1}\sum_{j=1}^{n_2} |S_{ij}|$.
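A minimal NumPy helper (hypothetical name `pcp_objective`) that evaluates E(L, S) with the weight 1/√max(n1, n2). It only evaluates the objective, which is handy for sanity-checking the output of a PCP solver; it does not solve the problem.

```python
import numpy as np


def pcp_objective(L: np.ndarray, S: np.ndarray) -> float:
    """E(L, S) = ||L||_* + ||S||_1 / sqrt(max(n1, n2)) for n1 x n2 matrices."""
    n1, n2 = L.shape
    weight = 1.0 / np.sqrt(max(n1, n2))
    nuclear_norm = np.linalg.svd(L, compute_uv=False).sum()   # sum of singular values
    l1_norm = np.abs(S).sum()                                 # sum_ij |S_ij|
    return float(nuclear_norm + weight * l1_norm)
```

For a given M = L + S, one can compare E at the true pair against, say, the trivial split (0, M) to see how the weighting trades rank against sparsity.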

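Algorithm for Robust PCA
• In our case, we seek to optimize the augmented Lagrangian (with λ = 1/√max(n1, n2) as in Theorem 1):
$$l(L, S, Y) = \|L\|_* + \lambda\|S\|_1 + \langle Y, M - L - S\rangle + \frac{\mu}{2}\|M - L - S\|_F^2$$
• Basic algorithm (Y is the Lagrange matrix):
$$(L_k, S_k) = \arg\min_{(L,S)} l(L, S, Y_k), \qquad Y_{k+1} = Y_k + \mu(M - L_k - S_k)$$
• Update of S using (elementwise) soft-thresholding; update of L using singular-value soft-thresholding.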

Alternating Minimization Algorithm for Robust PCA
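A minimal NumPy sketch of the alternating scheme: one L-step by singular-value soft-thresholding, one S-step by elementwise soft-thresholding, then the Lagrange-matrix update. Each step minimizes l(L, S, Y) over one variable with the other fixed. The default μ = n1·n2/(4||M||_1) is a common heuristic (as far as we know, the one used in Candès et al.'s Robust PCA experiments); the stopping tolerance and function names are assumptions of this sketch.

```python
import numpy as np


def shrink(X: np.ndarray, t: float) -> np.ndarray:
    """Elementwise soft-thresholding: the update used for S."""
    return np.sign(X) * np.maximum(np.abs(X) - t, 0.0)


def sv_shrink(X: np.ndarray, t: float) -> np.ndarray:
    """Singular-value soft-thresholding: the update used for L."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U * np.maximum(s - t, 0.0)) @ Vt


def rpca_alm(M, lam=None, mu=None, tol=1e-7, max_iter=1000):
    """Principal component pursuit by alternating minimization of l(L, S, Y)."""
    n1, n2 = M.shape
    if lam is None:
        lam = 1.0 / np.sqrt(max(n1, n2))            # weight from Theorem 1
    if mu is None:
        mu = n1 * n2 / (4.0 * np.abs(M).sum())      # heuristic penalty parameter
    L = np.zeros_like(M)
    S = np.zeros_like(M)
    Y = np.zeros_like(M)                             # Lagrange matrix
    norm_M = np.linalg.norm(M)
    for _ in range(max_iter):
        L = sv_shrink(M - S + Y / mu, 1.0 / mu)      # argmin of l over L
        S = shrink(M - L + Y / mu, lam / mu)         # argmin of l over S
        R = M - L - S
        Y = Y + mu * R                               # Y_{k+1} = Y_k + mu (M - L - S)
        if np.linalg.norm(R) <= tol * norm_M:
            break
    return L, S
```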

Results (source: https://statweb.stanford.edu/~candes/papers/RobustPCA.pdf)

(Compressive) Low Rank Matrix Recovery

Compressive RPCA: Algorithm and an Application
Primarily based on the paper: Waters et al., "SpaRCS: Recovering Low-Rank and Sparse Matrices from Compressive Measurements", NIPS 2011

Problem statement
• Let M be a matrix which is the sum of a low-rank matrix L and a sparse matrix S.
• We observe compressive measurements of M in the following form:
$$\mathbf{y} = \mathcal{A}(L + S), \qquad L, S \in \mathbb{R}^{n_1 \times n_2}, \;\; \mathbf{y} \in \mathbb{R}^m, \;\; m < n_1 n_2$$
where $\mathcal{A}$ is a linear operator (map) acting on M.
• Retrieve L and S, given $\mathbf{y}$ and $\mathcal{A}$.

Scenarios
• M could be a matrix representing a video: each column of M is a vectorized frame from the video.
• M could also be a matrix representing a hyperspectral image: each column is the vectorized form of a slice at a given wavelength.
• Robust matrix completion is a special case of compressive L + S recovery, in which $\mathcal{A}$ simply samples a subset of the entries of M.
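In code, the operator $\mathcal{A}$ and its adjoint $\mathcal{A}^*$ (which gradient-type solvers need) can be passed around as plain functions. Below is a minimal NumPy sketch of two hypothetical examples: a dense Gaussian matrix acting on vec(M), an assumption chosen purely for illustration, and the entry-sampling operator that makes robust matrix completion a special case. The helper names are our own.

```python
import numpy as np


def gaussian_operator(n1, n2, m, seed=0):
    """Dense Gaussian measurement operator A(X) = G vec(X) and its adjoint."""
    rng = np.random.default_rng(seed)
    G = rng.standard_normal((m, n1 * n2)) / np.sqrt(m)

    def A(X):
        return G @ X.reshape(-1)                  # vec in row-major (C) order

    def A_adj(y):
        return (G.T @ y).reshape(n1, n2)          # same ordering as A

    return A, A_adj


def sampling_operator(mask):
    """Entry-sampling operator for robust matrix completion: keeps entries where mask is True."""
    def A(X):
        return X[mask]

    def A_adj(y):
        out = np.zeros(mask.shape)
        out[mask] = y                              # scatter back; unobserved entries stay 0
        return out

    return A, A_adj
```

A quick correctness check for any such pair is the adjoint identity ⟨A(X), y⟩ = ⟨X, A*(y)⟩.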

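Objective function: SpaRCS (sparse and low-rank decomposition via compressive sampling)
$$(\hat{L}, \hat{S}) = \arg\min_{L, S} \|\mathbf{y} - \mathcal{A}(L + S)\|_2 \quad \text{subject to } \operatorname{rank}(L) \le r, \;\; \|\operatorname{vec}(S)\|_0 \le K$$
Free parameters: the target rank r and the sparsity level K.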

SpaRCS Algorithm (https://papers.nips.cc/paper/4438-sparcs-recovering-low-rank-and-sparse-matrices-from-compressive-measurements.pdf)
Very simple to implement, but requires tuning of the K and r parameters; convergence guarantees have not been established.
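The SpaRCS algorithm itself (per the paper, inspired by the greedy CoSaMP and ADMiRA methods) uses support-identification and least-squares steps that we do not reproduce here. As a much simpler stand-in for the same objective, here is a minimal NumPy sketch of alternating projected gradient (iterative hard thresholding): take a gradient step on ½||y − A(L + S)||², then project L onto rank ≤ r and S onto at most K nonzeros. The names, the unit step size and the fixed iteration count are assumptions. Like SpaRCS, it needs r and K to be tuned, and we make no convergence claim; A and A_adj are any linear operator and its adjoint, passed as functions.

```python
import numpy as np


def project_rank(X, r):
    """Best rank-r approximation of X (truncated SVD)."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U[:, :r] * s[:r]) @ Vt[:r]


def project_sparse(X, K):
    """Keep the K largest-magnitude entries of X and zero the rest."""
    flat = X.ravel()
    out = np.zeros_like(flat)
    K = min(K, flat.size)
    if K > 0:
        keep = np.argpartition(np.abs(flat), -K)[-K:]
        out[keep] = flat[keep]
    return out.reshape(X.shape)


def lowrank_sparse_iht(y, A, A_adj, shape, r, K, step=1.0, n_iter=100):
    """Alternating projected gradient for min ||y - A(L + S)||_2
    s.t. rank(L) <= r, ||vec(S)||_0 <= K  (not the SpaRCS algorithm itself)."""
    L = np.zeros(shape)
    S = np.zeros(shape)
    for _ in range(n_iter):
        # The gradient of 0.5 ||y - A(L + S)||^2 w.r.t. L (and S) is -A_adj(residual).
        L = project_rank(L + step * A_adj(y - A(L + S)), r)
        S = project_sparse(S + step * A_adj(y - A(L + S)), K)
    return L, S
```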

Results: Phase transition (https://papers.nips.cc/paper/4438-sparcs-recovering-low-rank-and-sparse-matrices-from-compressive-measurements.pdf)
Code: https://www.ece.rice.edu/~aew2/sparcs.html

Results: Video CS
Follows the Rice single-pixel camera (SPC) model: independent compressive measurements of each frame (column) of the matrix M representing the video. Source: https://papers.nips.cc/paper/4438-sparcs-recovering-low-rank-and-sparse-matrices-from-compressive-measurements.pdf

Results: Hyperspectral CS
Rice SPC model of CS measurements on every spectral band. Source: https://papers.nips.cc/paper/4438-sparcs-recovering-low-rank-and-sparse-matrices-from-compressive-measurements.pdf

Results: Robust matrix completion (https://papers.nips.cc/paper/4438-sparcs-recovering-low-rank-and-sparse-matrices-from-compressive-measurements.pdf)

Theorem for Compressive PCP
Here Q is the linear span of several independent random matrices, each with i.i.d. N(0,1) entries.
Wright et al., "Compressive Principal Component Pursuit", http://yima.csl.illinois.edu/psfile/CPCP.pdf

Summary
• Low-rank matrix completion: motivation, key theorems, numerical results
• Algorithm for low-rank matrix completion
• Robust PCA
• (Compressive) low-rank matrix recovery
• Compressive RPCA
• Several papers linked on Moodle
