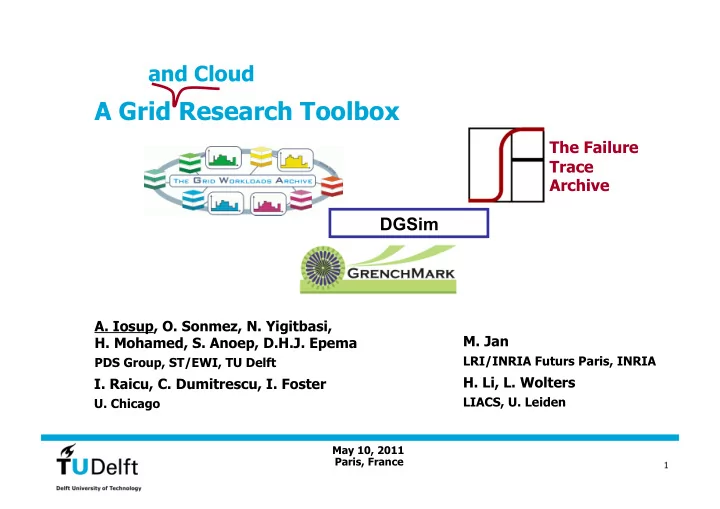

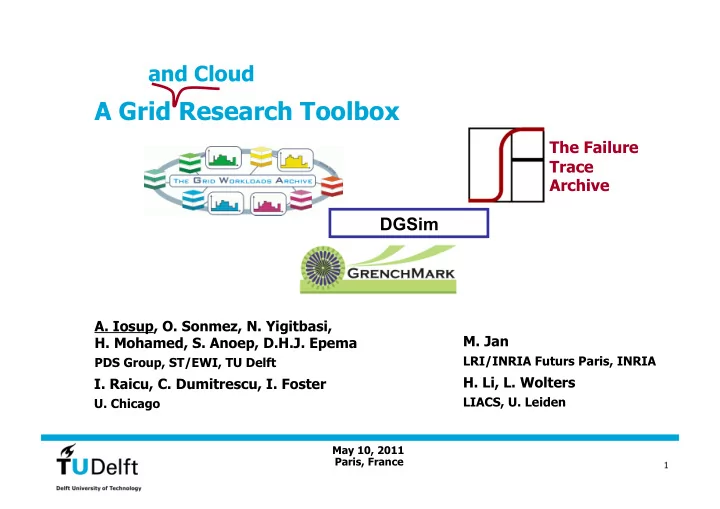

A Grid and Cloud Research Toolbox
The Failure Trace Archive, DGSim
A. Iosup, O. Sonmez, N. Yigitbasi, H. Mohamed, S. Anoep, D.H.J. Epema (PDS Group, ST/EWI, TU Delft)
M. Jan (LRI/INRIA Futurs Paris, INRIA)
H. Li, L. Wolters (LIACS, U. Leiden)
I. Raicu, C. Dumitrescu, I. Foster (U. Chicago)
May 10, 2011, Paris, France

A Layered View of the Grid World
• Layer 1: Hardware + OS
  • Automated
  • Non-grid (XtreemOS?)
• Layers 2-4: Grid Middleware Stack
  • Low Level: file transfers, local resource allocation, etc.
  • High Level: grid scheduling
  • Very High Level: application environments (e.g., distributed objects)
  • Automated/user control
  • Simple to complex
• Layer 5: Grid Applications
  • User control
  • Simple to complex
[Figure: layer stack, bottom to top: HW + OS; Grid Low Level MW; Grid High Level MW; Grid Very High Level MW; Grid Applications]

Grid Work: Science or Engineering?
• Work on Grid Middleware and Applications
• When is work in grid computing science?
  • Studying systems to uncover their hidden laws
  • Designing innovative systems
  • Proposing novel algorithms
  • Methodological aspects: repeatable experiments to verify and extend hypotheses
• When is work in grid computing engineering?
  • Showing that the system works in a common case, or in a special case of great importance (e.g., weather prediction)
  • When our students can do it (H. Casanova’s argument)

Grid Research Problem: We Are Missing Both Data and Tools
• Lack of data
  • Infrastructure: number and type of resources, resource availability and failures
  • Workloads: arrival process, resource consumption
  • …
• Lack of tools
  • Simulators: SimGrid, GridSim, MicroGrid, GangSim, OptorGrid, MONARC, …
  • Testing tools that operate in real environments: DiPerF, QUAKE/FAIL-FCI, …

Anecdote: Grids are far from being reliable job execution environments
• Server: 99.99999% reliable
• Small cluster: 99.999% reliable
• Production cluster: 5x decrease in failure rate after the first year [Schroeder and Gibson, DSN’06]
• DAS-2: >10% of jobs fail [Iosup et al., CCGrid’06]
• TeraGrid: 20-45% failures [Khalili et al., Grid’06]
• Grid3: 27% failures, 5-10 retries [Dumitrescu et al., GCC’05]
Source: dboard-gr.cern.ch, May’07.

The Anecdote at Scale
• NMI Build-and-Test Environment at U. Wisc.-Madison: 112 hosts, >40 platforms (e.g., X86-32/Solaris/5, X86-64/RH/9)
• Serves >50 grid middleware packages: Condor, Globus, VDT, gLite, GridFTP, RLS, NWS, INCA(-2), APST, NINF-G, BOINC, …
A. Iosup, D.H.J. Epema, P. Couvares, A. Karp, M. Livny, Build-and-Test Workloads for Grid Middleware: Problem, Analysis, and Applications, CCGrid, 2007.

A Grid Research Toolbox
• Hypothesis: (a) is better than (b). For scenario 1, …
[Figure: toolbox diagram with numbered steps 1-3, including DGSim]

Research Questions

Outline
1. Introduction and Motivation
2. Q1: Exchange Data
   2.1. The Grid Workloads Archive
   2.2. The Failure Trace Archive
   2.3. The Cloud Workloads Archive (?)
3. Q2: System Characteristics
   3.1. Grid Workloads
   3.2. Grid Infrastructure
4. Q3: System Testing and Evaluation

Traces in Distributed Systems Research
• “My system/method/algorithm is better than yours (on my carefully crafted workload)”
  • Unrealistic (trivial): prove that “prioritize jobs from users whose name starts with A” is a good scheduling policy
  • Realistic? “85% of jobs are short”; “10% writes”; …
• Major problem in computer systems research
• Workload trace = recording of real activity from a (real) system, often as a sequence of jobs/requests submitted by users for execution
• Main use: compare and cross-validate new job and resource management techniques and algorithms
• Major problem: obtaining real workload traces from several sources

2.1. The Grid Workloads Archive [1/3] Content
[Figure: archive content summary: 6 traces online; 1.5 yrs; >750K; >250]
A. Iosup, H. Li, M. Jan, S. Anoep, C. Dumitrescu, L. Wolters, D. Epema, The Grid Workloads Archive, FGCS 24, 672-686, 2008.

2.1. The Grid Workloads Archive [2/3] Approach: Standard Data Format (GWF)
• Goals
  • Provide a unitary format for grid workloads
  • Use the same format in plain text and in a relational DB (SQLite/SQL92)
  • To ease adoption, base it on the Standard Workload Format (SWF) of the Parallel Workloads Archive
• Existing fields
  • Identification data: Job/User/Group/Application ID
  • Time and status: Submit/Start/Finish Time, Job Status and Exit Code
  • Request vs. consumption: CPU/Wallclock/Mem
• Added fields
  • Job submission site
  • Job structure: bag-of-tasks, workflows
  • Extensions: co-allocation, reservations, others possible
A. Iosup, H. Li, M. Jan, S. Anoep, C. Dumitrescu, L. Wolters, D. Epema, The Grid Workloads Archive, FGCS 24, 672-686, 2008.
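To show what "same format in plain text and relational DB" can look like in practice, the minimal sketch below parses whitespace-separated text records and loads them unchanged into an in-memory SQLite table. The column subset and order (`COLUMNS`) are hypothetical and chosen for illustration only. The real GWF specification defines its own, longer field list. Using -1 for unknown values follows the SWF convention and is assumed here to carry over.

```python
import sqlite3
from typing import Iterable, List, Tuple

# Hypothetical subset and order of GWF-style columns, for illustration only;
# the actual GWF specification defines its own (longer) field list.
COLUMNS = ("JobID", "SubmitTime", "WaitTime", "RunTime", "NProcs",
           "UsedMemoryKB", "ReqNProcs", "Status", "UserID", "SiteID")


def parse_records(lines: Iterable[str]) -> List[Tuple[int, ...]]:
    """Turn whitespace-separated plain-text records into integer tuples."""
    rows = []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank and comment lines
            continue
        fields = line.split()
        if len(fields) != len(COLUMNS):
            raise ValueError(f"expected {len(COLUMNS)} fields, got {len(fields)}")
        rows.append(tuple(int(f) for f in fields))
    return rows


def load_into_sqlite(rows: List[Tuple[int, ...]]) -> sqlite3.Connection:
    """Store the same records in a relational table (in-memory SQLite)."""
    conn = sqlite3.connect(":memory:")
    col_defs = ", ".join(f"{c} INTEGER" for c in COLUMNS)
    conn.execute(f"CREATE TABLE jobs ({col_defs})")
    placeholders = ", ".join("?" for _ in COLUMNS)
    conn.executemany(f"INSERT INTO jobs VALUES ({placeholders})", rows)
    return conn


sample = [
    "# JobID Submit Wait Run NProcs Mem ReqNProcs Status User Site",
    "1 0 30 600 1 2048 1 1 7 2",
    "2 15 120 3600 4 8192 4 1 7 3",
    "3 40 -1 -1 -1 -1 1 0 9 2",  # -1 marks an unknown value (assumed, as in SWF)
]
conn = load_into_sqlite(parse_records(sample))
# Average wait time over jobs whose wait time is known (-1 filtered out).
avg_wait = conn.execute(
    "SELECT AVG(WaitTime) FROM jobs WHERE WaitTime >= 0").fetchone()[0]
```

Filtering out the -1 sentinel before aggregating matters here. Otherwise, unknown values would silently bias averages computed in SQL.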

2.1. The Grid Workloads Archive [3/3] Approach: GWF Example
[Table: sample GWF records; columns Submit, Wait [s], Run, Used #CPUs, Used Mem [KB], Req #CPUs]
A. Iosup, H. Li, M. Jan, S. Anoep, C. Dumitrescu, L. Wolters, D. Epema, The Grid Workloads Archive, FGCS 24, 672-686, 2008.
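The example records store submit, wait, and run times. Start and finish times then follow by simple addition: start = submit + wait, finish = start + run. The sketch below derives these quantities for one record. `GwfJob` is a hypothetical container holding only the columns named in the example table, and the job values are made up. The sketch also assumes that run time is in seconds and that every used CPU is busy for the whole run when computing CPU time.

```python
from typing import NamedTuple


class GwfJob(NamedTuple):
    """Hypothetical record holding the columns named in the example table."""
    submit: int        # submit time [s]
    wait: int          # wait time [s]
    run: int           # run time [s] (unit assumed)
    used_cpus: int
    used_mem_kb: int
    req_cpus: int


def start_time(job: GwfJob) -> int:
    return job.submit + job.wait


def finish_time(job: GwfJob) -> int:
    return start_time(job) + job.run


def response_time(job: GwfJob) -> int:
    """Time from submission to completion: wait plus run."""
    return job.wait + job.run


def cpu_seconds(job: GwfJob) -> int:
    """CPU time consumed, assuming all used CPUs are busy for the whole run."""
    return job.used_cpus * job.run


job = GwfJob(submit=1_000, wait=45, run=300, used_cpus=2, used_mem_kb=4_096, req_cpus=2)
print(start_time(job), finish_time(job), response_time(job), cpu_seconds(job))  # 1045 1345 345 600
```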

2.2. The Failure Trace Archive
• Types of systems
  • (Desktop) Grids
  • DNS servers
  • HPC clusters
  • P2P systems
• Stats
  • 25 traces
  • 100,000 nodes
  • Decades of operation
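Failure traces are typically analyzed per node as alternating periods of availability and unavailability. The minimal sketch below computes a node's availability and its mean up/down interval lengths from such periods. The `(start, end, is_available)` interval layout is a hypothetical simplification and not the FTA's actual schema. The sample data is made up.

```python
from typing import List, Tuple

# (start [s], end [s], is_available); a hypothetical layout, not the FTA schema.
Interval = Tuple[float, float, bool]


def availability(intervals: List[Interval]) -> float:
    """Fraction of observed time during which the node was available."""
    total = sum(end - start for start, end, _ in intervals)
    up = sum(end - start for start, end, is_up in intervals if is_up)
    return up / total if total > 0 else 0.0


def _mean_duration(intervals: List[Interval], state: bool) -> float:
    durations = [end - start for start, end, is_up in intervals if is_up == state]
    return sum(durations) / len(durations) if durations else 0.0


def mean_uptime(intervals: List[Interval]) -> float:
    """Mean length of availability intervals."""
    return _mean_duration(intervals, True)


def mean_downtime(intervals: List[Interval]) -> float:
    """Mean length of unavailability intervals."""
    return _mean_duration(intervals, False)


node = [(0, 900, True), (900, 1000, False), (1000, 1900, True), (1900, 2000, False)]
print(availability(node), mean_uptime(node), mean_downtime(node))  # 0.9 900.0 100.0
```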

2.3. The Cloud Workloads Archive [1/2] One Format Fits Them All
• Flat format: CWJ, CWJD, CWT, CWTD
  • Jobs and tasks
  • Summary (20 unique data fields) and detail (60 fields)
• Categories of information
  • Shared with GWA, PWA: time, disk, memory, net
  • Jobs/tasks that change their resource consumption profile
  • MapReduce-specific (two-thirds of the data fields)
A. Iosup, R. Griffith, A. Konwinski, M. Zaharia, A. Ghodsi, I. Stoica, Data Format for the Cloud Workloads Archive, v.3, 13/07/10

2.3. The Cloud Workloads Archive [2/2]
• Looking for invariants
  • Writes are ~40% of total IO, but absolute values vary

| Trace ID | Total IO [MB] | Rd. [MB] | Wr [%] | HDFS Wr [MB] |
|---|---|---|---|---|
| CWA-01 | 10,934 | 6,805 | 38% | 1,538 |
| CWA-02 | 75,546 | 47,539 | 37% | 8,563 |

  • The number of tasks per job and the ratio M:(M+R) of map tasks to all (map + reduce) tasks vary
• Understanding workload evolution
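The Wr [%] column is consistent with computing writes as total IO minus reads, divided by total IO. For example, (10,934 - 6,805) / 10,934 ≈ 37.8%, which rounds to 38%. The short sketch below reproduces the column from the table's own numbers under that assumption, Total = Read + Write.

```python
# (Total IO [MB], Read [MB]) per trace, copied from the table above.
TRACES = {
    "CWA-01": (10_934, 6_805),
    "CWA-02": (75_546, 47_539),
}


def write_share(total_io_mb: float, read_mb: float) -> float:
    """Percentage of total IO that is writes, assuming Total = Read + Write."""
    return 100.0 * (total_io_mb - read_mb) / total_io_mb


shares = {trace_id: round(write_share(*io)) for trace_id, io in TRACES.items()}
print(shares)  # {'CWA-01': 38, 'CWA-02': 37}
```

The percentage stays near 37-38% even though the traces' absolute IO differs by roughly 7x. This is the kind of invariant the slide is looking for.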

3.1. Grid Workloads [1/7] Analysis Summary: Grid workloads differ from, e.g., parallel production environments (HPC)
• Traces: LCG, Grid3, TeraGrid, and DAS
  • Long traces (6+ months), active environments (500+K jobs per trace, 100s of users), >4 million jobs
• Analysis
  • System-wide, VO, group, and user characteristics
  • Environment and user evolution
  • System performance
• Selected findings
  • Almost no parallel jobs
  • The top 2-5 groups/users dominate the workloads
  • Performance problems: high job wait times, high failure rates
A. Iosup, C. Dumitrescu, D.H.J. Epema, H. Li, L. Wolters, How are Real Grids Used? The Analysis of Four Grid Traces and Its Implications, Grid 2006.
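A finding such as "the top 2-5 groups/users dominate the workloads" can be quantified as the share of jobs submitted by the k most active users or groups. The minimal sketch below counts jobs per user. Weighting by consumed CPU time instead is an equally plausible choice, and the sketch does not claim this is the paper's exact metric. The sample data is made up.

```python
from collections import Counter
from typing import Hashable, Sequence


def top_k_share(owners: Sequence[Hashable], k: int) -> float:
    """Fraction of jobs submitted by the k most active users (or groups)."""
    if not owners:
        return 0.0
    counts = Counter(owners)
    top = sum(n for _, n in counts.most_common(k))
    return top / len(owners)


# One entry per job: the ID of the submitting user (made-up data).
jobs_by_user = ["a"] * 50 + ["b"] * 30 + ["c"] * 10 + ["d"] * 5 + ["e"] * 5
print(top_k_share(jobs_by_user, 2))  # 0.8
```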

3.1. Grid Workloads [2/7] Analysis Summary: Grids vs. Parallel Production Systems
• Similar CPU time per year, but 5x larger arrival bursts
• LCG cluster daily peak: 22.5k jobs
[Figure: grids vs. parallel production environments (large clusters, supercomputers)]
A. Iosup, D.H.J. Epema, C. Franke, A. Papaspyrou, L. Schley, B. Song, R. Yahyapour, On Grid Performance Evaluation using Synthetic Workloads, JSSPP’06.
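A daily peak such as the LCG figure comes from binning job submissions by day. The minimal sketch below returns the busiest day's arrival count and a peak-to-mean ratio over the trace's span. It counts days with no arrivals as zero, so ratios can be compared across traces. The sketch assumes submit times are seconds since the trace start. It is not the exact burstiness measure used in the cited paper.

```python
from collections import Counter
from typing import Sequence, Tuple

SECONDS_PER_DAY = 86_400


def daily_arrivals(submit_times: Sequence[float]) -> Tuple[int, float]:
    """Return (peak jobs in one day, peak-to-mean daily ratio over the trace span)."""
    if not submit_times:
        return 0, 0.0
    days = [int(t // SECONDS_PER_DAY) for t in submit_times]
    per_day = Counter(days)
    n_days = max(days) - min(days) + 1  # days with no arrivals count as zero
    peak = max(per_day.values())
    # peak / (jobs / n_days), written to avoid an extra division
    return peak, peak * n_days / len(submit_times)


submits = [0, 10, 20, 86_400, 86_500, 86_600, 86_700, 172_800]
print(daily_arrivals(submits))  # (4, 1.5)
```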

3.1. Grid Workloads [3/7] More Analysis: Special Workload Components
• Bags-of-tasks (BoTs): a BoT is a set of jobs that start at most Δ s after the first job
• Parameter sweep application = BoT with the same binary
• Workflows (WFs): a WF is a set of jobs with precedence constraints (think directed acyclic graph)
[Figure: BoT and WF job timelines; x-axis: Time [units]]
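The BoT definition above translates directly into a grouping pass. For each user, the minimal sketch below opens a batch at the first ungrouped job. It then keeps adding jobs whose time is at most Δ seconds after that first job. Grouping per user is an assumption made for this sketch. The slide states only the Δ rule. The default Δ = 120 s matches the value used in the next slide. The job data is made up.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def group_batches(jobs: List[Tuple[str, float]],
                  delta: float = 120.0) -> List[Tuple[str, List[float]]]:
    """Group (user, time) pairs into batches (bags-of-tasks).

    A batch starts at a user's first ungrouped job and includes every later job
    of the same user whose time is at most `delta` seconds after that first job.
    """
    by_user: Dict[str, List[float]] = defaultdict(list)
    for user, t in jobs:
        by_user[user].append(t)

    batches: List[Tuple[str, List[float]]] = []
    for user, times in by_user.items():
        times.sort()
        current = [times[0]]
        for t in times[1:]:
            if t - current[0] <= delta:  # still within delta of the batch's first job
                current.append(t)
            else:                        # close this batch and open a new one
                batches.append((user, current))
                current = [t]
        batches.append((user, current))
    return batches


jobs = [("u1", 0), ("u1", 60), ("u1", 119), ("u1", 200), ("u2", 10), ("u2", 500)]
sizes = sorted(len(times) for _, times in group_batches(jobs))
print(sizes)  # [1, 1, 1, 3]
```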

3.1. Grid Workloads [4/7] BoTs are predominant in grids
• Selected findings
  • Batches predominate in grid workloads: up to 96% of CPU time
  • Average batch size (Δ ≤ 120 s) is 15-30 jobs (500 max)
  • 75% of the batches have 20 jobs or fewer

| | Grid’5000 | NorduGrid | GLOW (Condor) |
|---|---|---|---|
| Submissions | 26k | 50k | 13k |
| Jobs | 808k (951k) | 738k (781k) | 205k (216k) |
| CPU time | 193y (651y) | 2192y (2443y) | 53y (55y) |

A. Iosup, M. Jan, O. Sonmez, and D.H.J. Epema, The Characteristics and Performance of Groups of Jobs in Grids, Euro-Par, LNCS, vol. 4641, pp. 382-393, 2007.
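Reporting both an average batch size and the share of small batches matters because batch sizes are skewed. The minimal sketch below summarizes a list of batch sizes. These could be the lengths of batches produced by a Δ-based grouping. The sizes are made up and do not come from the traces in the table.

```python
from statistics import median
from typing import Dict, Sequence


def batch_size_summary(sizes: Sequence[int], small: int = 20) -> Dict[str, float]:
    """Mean, median, max, and share of batches with at most `small` jobs."""
    n = len(sizes)
    return {
        "mean": sum(sizes) / n,
        "median": median(sizes),
        "max": max(sizes),
        "share_small": sum(1 for s in sizes if s <= small) / n,
    }


summary = batch_size_summary([5, 12, 20, 25, 500, 8, 3, 15])
print(summary)  # {'mean': 73.5, 'median': 13.5, 'max': 500, 'share_small': 0.75}
```

In this made-up sample, a single 500-job batch pulls the mean far above the median, while 75% of batches still have 20 jobs or fewer.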

3.1. Grid Workloads [5/7] Workflows exist, but they seem small
• Selected findings
  • Loose coupling
  • Graphs with 3-4 levels
  • Average WF size is 30/44 jobs
  • 75%+ of WFs have 40 jobs or fewer; 95% have 200 jobs or fewer
S. Ostermann, A. Iosup, R. Prodan, D.H.J. Epema, and T. Fahringer. On the Characteristics of Grid Workflows, CoreGRID Integrated Research in Grid Computing (CGIW), 2008.
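Counting the "levels" of a workflow requires a definition. The sketch below assumes the number of levels is the number of jobs on the longest dependency chain. It computes this with Kahn's topological-sort algorithm, assigning each job one more than the maximum level of its predecessors. It also rejects cyclic graphs, since a workflow must be a DAG. The four-job fan-out/fan-in example is made up.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, List, Tuple


def dag_levels(nodes: Iterable[str], edges: Iterable[Tuple[str, str]]) -> int:
    """Number of levels = jobs on the longest dependency chain (Kahn's algorithm)."""
    indeg: Dict[str, int] = {n: 0 for n in nodes}
    succ: Dict[str, List[str]] = defaultdict(list)
    for u, v in edges:
        succ[u].append(v)
        indeg[v] += 1  # every edge endpoint must appear in `nodes`

    level = {n: 1 for n, d in indeg.items() if d == 0}  # entry jobs are level 1
    queue = deque(level)
    processed = 0
    while queue:
        u = queue.popleft()
        processed += 1
        for v in succ[u]:
            level[v] = max(level.get(v, 0), level[u] + 1)
            indeg[v] -= 1
            if indeg[v] == 0:  # all predecessors done: v's level is final
                queue.append(v)
    if processed != len(indeg):
        raise ValueError("dependency graph has a cycle; a workflow must be a DAG")
    return max(level.values(), default=0)


# Fan-out/fan-in: a -> {b, c} -> d
print(dag_levels(["a", "b", "c", "d"], [("a", "b"), ("a", "c"), ("b", "d"), ("c", "d")]))  # 3
```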

3.1. Grid Workloads [6/7] Modeling Grid Workloads: Feitelson's model adapted
• Adapted to grids: percentage of parallel jobs, other values
• Validated with 4 grid and 7 parallel production environment traces
A. Iosup, D.H.J. Epema, T. Tannenbaum, M. Farrellee, and M. Livny. Inter-Operating Grids Through Delegated MatchMaking, ACM/IEEE Conference on High Performance Networking and Computing (SC), pp. 13-21, 2007.
