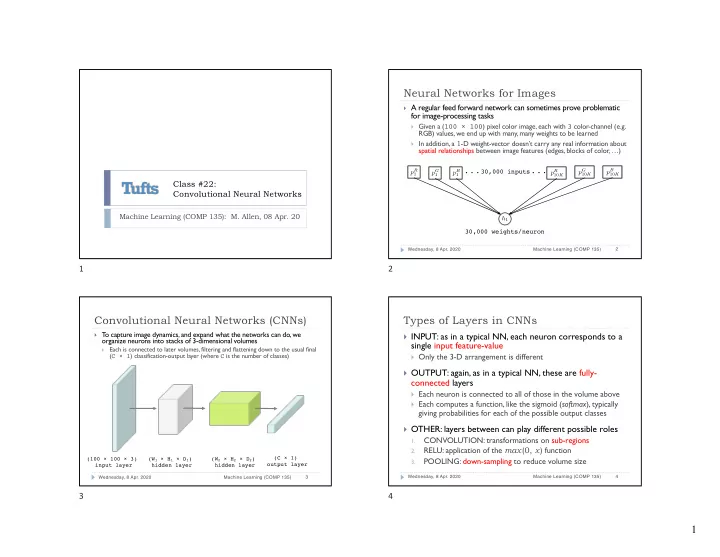

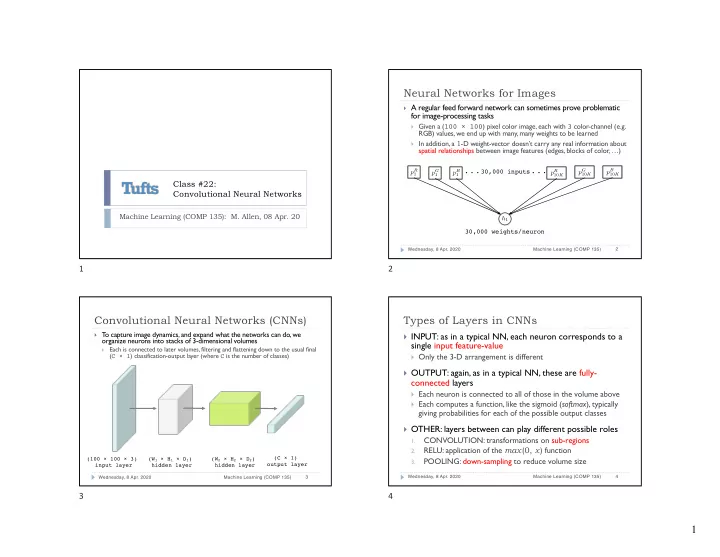

Class #22: Convolutional Neural Networks
Machine Learning (COMP 135): M. Allen, Wednesday, 8 Apr. 2020

Neural Networks for Images

- A regular feed-forward network can sometimes prove problematic for image-processing tasks
- Given a (100 × 100) pixel color image, with 3 color-channel values (e.g. RGB) per pixel, we end up with many, many weights to be learned
- In addition, a 1-D weight-vector doesn't carry any real information about spatial relationships between image features (edges, blocks of color, …)

[Figure: the 10K pixels of the image, each contributing p_R, p_G, p_B values, are flattened into 30,000 inputs, so a single hidden neuron h₁ needs 30,000 weights.]

Convolutional Neural Networks (CNNs)

- To capture image dynamics, and expand what the networks can do, we organize neurons into stacks of 3-dimensional volumes
- Each is connected to later volumes, filtering and flattening down to the usual final (C × 1) classification-output layer (where C is the number of classes)

[Figure: input layer (100 × 100 × 3) → hidden layer (W₁ × H₁ × D₁) → hidden layer (W₂ × H₂ × D₂) → output layer (C × 1)]

Types of Layers in CNNs

- INPUT: as in a typical NN, each neuron corresponds to a single input feature-value
  - Only the 3-D arrangement is different
- OUTPUT: again, as in a typical NN, these are fully-connected layers
  - Each neuron is connected to all of those in the volume above
  - Each computes a function, like the sigmoid (or its multi-class form, softmax), typically giving probabilities for each of the possible output classes
- OTHER: layers in between can play different roles:
  1. CONVOLUTION: transformations on sub-regions
  2. RELU: application of the max(0, x) function
  3. POOLING: down-sampling to reduce volume size
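To make the weight count and the layer functions above concrete, here is a small Python/NumPy sketch (an illustration, not code from the slides; the function names are hypothetical). It counts the weights a single fully connected neuron needs for a (100 × 100 × 3) image, and implements the element-wise RELU function max(0, x) and a softmax output that turns class scores into probabilities.

```python
import numpy as np


def dense_weights_per_neuron(height, width, channels):
    # A fully connected neuron has one weight per input value (bias not counted).
    return height * width * channels


def relu(x):
    # Element-wise max(0, x), as applied by a RELU layer.
    return np.maximum(0, x)


def softmax(z):
    # Turns raw class scores into probabilities that sum to 1;
    # subtracting the max first avoids overflow in exp().
    e = np.exp(z - np.max(z))
    return e / e.sum()


weights = dense_weights_per_neuron(100, 100, 3)
print(weights)                                   # 30000
print(relu(np.array([-2.0, 0.0, 3.0])))          # [0. 0. 3.]
print(softmax(np.array([1.0, 2.0, 3.0])).argmax())  # 2 (highest score -> highest probability)
```

With only 1,000 such neurons (a hypothetical layer size), the first hidden layer would already need 30 million weights, which is the problem the convolutional layers are meant to address.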

Deep Convolutional Networks

- For complex image-classification tasks, we may use many layers, combining the types over and over again

[Figure: CONV → RELU → CONV → RELU → POOL → CONV → RELU → CONV → RELU → POOL → output classes (dog, cat, horse)]

Convolutional (CONV) Layers

- The core innovation in a CNN is the idea of a spatial filter, which is a 3-D volume where:
  1. Each neuron in one layer computes a function on a proper sub-region of the layer above
  2. We form the CONV layer by "tiling" the prior layer in (possibly) overlapping sub-regions
  3. Every neuron in one layer shares a single set of weights, and so computes the same function
- Two main decisions in building such a layer:
  1. What size of sub-region should we use?
  2. What is our stride, i.e., how far do we move over each time we connect our next sub-region?

Convolutional Layer

Suppose we choose a sub-region size of (5 × 5) pixels.

[Figure: a 5 × 5 window on the (28 × 28) input feeds one neuron, which holds the result of the filter function.]

Convolutional Layer

Suppose we also choose a stride-value = 2.

[Figure: on the (28 × 28) input, the 5 × 5 pixel filter moves 2 pixels right; the next neuron holds the result of the filter function.]
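The two design decisions above (sub-region size and stride) can be sketched as a naive single-filter convolution in Python/NumPy. This is an illustrative sketch under assumptions, not the course's code: the function names are hypothetical, the input is a single-channel 2-D array, and the optional `pad` argument models the "off-edge" zero pixels used later in the slides. Without padding, a 5 × 5 filter at stride 2 fits only 12 positions across 28 pixels; with 2 zero pixels on each side (an assumed padding amount) it fits 14.

```python
import numpy as np


def conv_output_size(n, f, stride, pad=0):
    # Number of positions an f-wide window can take across n inputs
    # (plus `pad` zeros on each side) when moving `stride` pixels at a time.
    return (n + 2 * pad - f) // stride + 1


def conv2d_single(image, kernel, stride=1, pad=0, bias=0.0):
    # One filter: the SAME kernel weights (and bias) are used at every position.
    f = kernel.shape[0]
    padded = np.pad(image, pad, mode="constant", constant_values=0)
    out_h = conv_output_size(image.shape[0], f, stride, pad)
    out_w = conv_output_size(image.shape[1], f, stride, pad)
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride        # top-left corner of this sub-region
            patch = padded[r:r + f, c:c + f]     # f x f sub-region of the input
            out[i, j] = np.sum(patch * kernel) + bias
    return out


image = np.ones((28, 28))
kernel = np.ones((5, 5))
print(conv2d_single(image, kernel, stride=2).shape)         # (12, 12)
print(conv2d_single(image, kernel, stride=2, pad=2).shape)  # (14, 14)
```

Because `kernel` is shared by every output neuron, this filter has 25 weights plus one bias regardless of input size, compared with 784 weights for a single fully connected neuron on the same (28 × 28) input.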

Convolutional Layer

Since stride = 2, the result is a layer with half as many neurons in each dimension: the (28 × 28) input produces a (14 × 14) layer. Where the filter extends past the edge of the input, the "off-edge" pixel values are all set to 0.

[Figure: Input (28 × 28) → Convolutional Layer (14 × 14)]

A Full Convolutional Layer

The 3-dimensional CONV layer consists of a stack of N such filters, with dimensionality (14 × 14 × N). Within each filter-layer, every neuron shares a single set of common weights, which are applied to its inputs, with the products summed as usual.

[Figure: Input (28 × 28) → N different convolutions, each (14 × 14)]

ReLU (Activation) Layers

- A CONV layer may or may not change the input size (this depends on the stride, as well as on the sub-region size and how edges are handled)
- A ReLU layer keeps the size the same, simply applying its function to each neuron
- ReLU is very popular, but layers using other activation functions are allowed

[Figure: each filter value x in the (14 × 14) convolutional layer maps to the activation value ReLU(x) in a (14 × 14) ReLU layer.]

Combining Layers

Using a 3-dimensional convolutional layer of multiple filters means that we will have a matching number of activation layers.

[Figure: Input (28 × 28) → N different convolutions (Convolutional Layer, 14 × 14 × N) → N different activations (ReLU Layer, 14 × 14 × N)]
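A full CONV layer followed by a ReLU layer can be sketched in vectorized Python/NumPy as below. This is a minimal illustration under stated assumptions, not the course's implementation: it assumes a single-channel (28 × 28) input (a real CNN filter on multi-channel input would also span the input's depth), 5 × 5 filters, stride 2, 2 pixels of zero padding to model the "off-edge" zeros, and an arbitrary N = 8. The `conv_layer` name is hypothetical; it uses NumPy's `sliding_window_view` (NumPy 1.20 or later).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv_layer(image, kernels, biases, stride=2, pad=2):
    # Applies N shared-weight filters to a 2-D input; returns shape (H_out, W_out, N).
    # (Like most CNN libraries, this computes cross-correlation: kernels are not flipped.)
    padded = np.pad(image, pad, mode="constant", constant_values=0)  # "off-edge" zeros
    f = kernels.shape[1]
    windows = sliding_window_view(padded, (f, f))  # every f x f sub-region: (H', W', f, f)
    windows = windows[::stride, ::stride]          # keep sub-regions on the stride grid
    # For each filter n: weighted sum over its sub-region, plus that filter's bias.
    return np.einsum("ijkl,nkl->ijn", windows, kernels) + biases


def relu(x):
    # Element-wise max(0, x); the output has the same shape as the input.
    return np.maximum(0, x)


rng = np.random.default_rng(0)
image = rng.random((28, 28))
N = 8                                   # number of filters (arbitrary choice)
kernels = rng.standard_normal((N, 5, 5))
biases = np.zeros(N)

conv = conv_layer(image, kernels, biases)
act = relu(conv)
print(conv.shape, act.shape)  # (14, 14, 8) (14, 14, 8)
```

As in the slides, the ReLU step leaves the (14 × 14 × N) volume unchanged in size, producing one activation layer per filter.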

