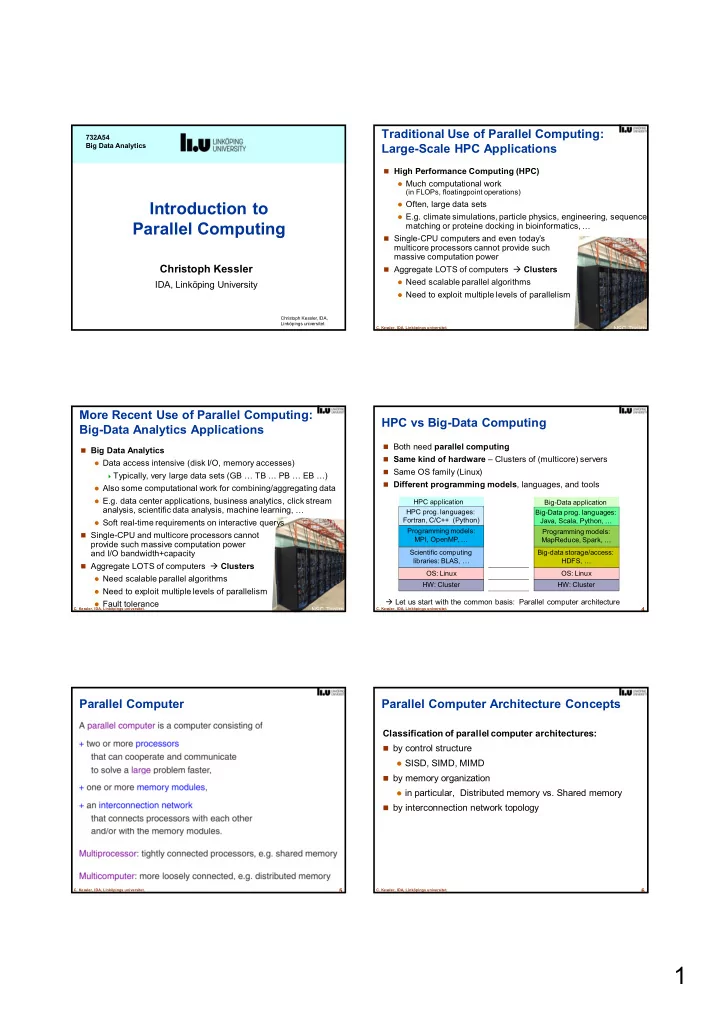

732A54 Big Data Analytics
Introduction to Parallel Computing
Christoph Kessler, IDA, Linköping University

Traditional Use of Parallel Computing: Large-Scale HPC Applications
• High Performance Computing (HPC)
  – Much computational work (in FLOPs, floating-point operations)
  – Often, large data sets
  – E.g. climate simulations, particle physics, engineering, sequence matching or protein docking in bioinformatics, …
• Single-CPU computers and even today's multicore processors cannot provide such massive computation power
• Aggregate LOTS of computers → Clusters
  – Need scalable parallel algorithms
  – Need to exploit multiple levels of parallelism
[Image: NSC Triolith]

More Recent Use of Parallel Computing: Big-Data Analytics Applications
• Big Data Analytics
  – Data access intensive (disk I/O, memory accesses)
  – Typically, very large data sets (GB … TB … PB … EB …)
  – Also some computational work for combining/aggregating data
  – E.g. data center applications, business analytics, click stream analysis, scientific data analysis, machine learning, …
  – Soft real-time requirements on interactive queries
• Single-CPU and multicore processors cannot provide such massive computation power and I/O bandwidth + capacity
• Aggregate LOTS of computers → Clusters
  – Need scalable parallel algorithms
  – Need to exploit multiple levels of parallelism
  – Fault tolerance

HPC vs Big-Data Computing
• Both need parallel computing
• Same kind of hardware – Clusters of (multicore) servers
• Same OS family (Linux)
• Different programming models, languages, and tools

| | HPC application | Big-Data application |
|---|---|---|
| Programming languages | Fortran, C/C++ (Python) | Java, Scala, Python, … |
| Programming models | MPI, OpenMP, … | MapReduce, Spark, … |
| Libraries / storage | Scientific computing libraries: BLAS, … | Big-data storage/access: HDFS, … |
| OS | Linux | Linux |
| HW | Cluster | Cluster |

• Let us start with the common basis: Parallel computer architecture

Parallel Computer Architecture Concepts

Parallel Computer
Classification of parallel computer architectures:
• by control structure
  – SISD, SIMD, MIMD
• by memory organization
  – in particular, Distributed memory vs. Shared memory
• by interconnection network topology
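The distinction between shared memory and distributed memory also shapes how programs are written. The following is a minimal sketch, not taken from the slides, that computes the same sum in both styles. The function names are hypothetical, and Python threads inside one process stand in for processors or cluster nodes; a real distributed-memory program would use separate processes and a library such as MPI.

```python
import queue
import threading


def shared_memory_sum(data, num_workers=4):
    """Shared-memory style: all workers update one variable, guarded by a lock."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        partial = sum(chunk)      # local work on private data
        with lock:                # synchronize access to the shared variable
            total += partial

    chunks = [data[i::num_workers] for i in range(num_workers)]
    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def message_passing_sum(data, num_workers=4):
    """Message-passing style: workers share nothing and send their results."""
    mailbox = queue.Queue()       # stands in for the interconnection network

    def worker(chunk):
        mailbox.put(sum(chunk))   # "send" the partial result

    chunks = [data[i::num_workers] for i in range(num_workers)]
    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # "receive" exactly one message per worker and combine them
    return sum(mailbox.get() for _ in range(num_workers))


print(shared_memory_sum(list(range(1000))))    # 499500
print(message_passing_sum(list(range(1000))))  # 499500
```

In the first variant correctness depends on the lock; in the second, all coordination happens through explicit messages, which is why that style carries over to machines without a common address space.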

Classification by Control Structure
[Figure: a single operation (op) versus operations op 1 to op 4; further details not recoverable]

Classification by Memory Organization
• Distributed memory: e.g. (traditional) HPC cluster
• Shared memory: e.g. multiprocessor (SMP) or computer with a standard multicore CPU
• Most common today in HPC and Data centers: Hybrid Memory System
  – Cluster (distributed memory) of hundreds, thousands of shared-memory servers, each containing one or several multi-core CPUs

Hybrid (Distributed + Shared) Memory
[Figure: hybrid memory system; labels M, P, R]

Interconnection Networks (1)
• Network = physical interconnection medium (wires, switches) + communication protocol
  (a) connecting cluster nodes with each other (DMS)
  (b) connecting processors with memory modules (SMS)
Classification
• Direct / static interconnection networks
  – connecting nodes directly to each other
  – Hardware routers (communication coprocessors) can be used to offload processors from most communication work
• Switched / dynamic interconnection networks

Interconnection Networks (2): Simple Topologies
[Figure: processors (P) connected in simple topologies, including fully connected]

Interconnection Networks (3): Fat-Tree Network
• Tree network extended for higher bandwidth (more switches, more links) closer to the root
  – avoids bandwidth bottleneck
• Example: Infiniband network (www.mellanox.com)
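Topologies differ in how many links they need and how far apart two nodes can be. As a rough illustration, the sketch below builds three direct networks as adjacency lists and measures link count, maximum node degree, and longest shortest path (diameter). Only the fully connected network is named on the slide; the ring and the hypercube are illustrative additions, and all function names are hypothetical.

```python
from collections import deque


def fully_connected(n):
    """Every node has a direct link to every other node."""
    return {u: [v for v in range(n) if v != u] for u in range(n)}


def ring(n):
    """Each node is linked to its two neighbours on a cycle."""
    return {u: sorted({(u - 1) % n, (u + 1) % n} - {u}) for u in range(n)}


def hypercube(d):
    """2**d nodes; neighbours differ in exactly one bit of the node number."""
    return {u: [u ^ (1 << k) for k in range(d)] for u in range(2 ** d)}


def eccentricity(adj, src):
    """Largest hop distance from src, found by breadth-first search."""
    dist = {src: 0}
    todo = deque([src])
    while todo:
        u = todo.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                todo.append(v)
    return max(dist.values())


def properties(adj):
    """Link count, maximum node degree and diameter of a connected network."""
    links = sum(len(nbrs) for nbrs in adj.values()) // 2
    degree = max(len(nbrs) for nbrs in adj.values())
    diameter = max(eccentricity(adj, u) for u in adj)
    return {"links": links, "degree": degree, "diameter": diameter}


for name, net in [("fully connected", fully_connected(8)),
                  ("ring", ring(8)),
                  ("hypercube", hypercube(3))]:
    print(name, properties(net))
```

For 8 nodes the fully connected network needs 28 links for a diameter of 1, while the ring gets by with 8 links at a diameter of 4; the hypercube sits in between with 12 links and a diameter of 3.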

More about Interconnection Networks
• Hypercube, Crossbar, Butterfly, Hybrid networks … → TDDC78
• Switching and routing algorithms
• Discussion of interconnection network properties
  – Cost (#switches, #lines)
  – Scalability (asymptotically, cost grows not much faster than #nodes)
  – Node degree
  – Longest path (→ latency)
  – Accumulated bandwidth
  – Fault tolerance (worst-case impact of node or switch failure)
  – …

Example: Beowulf-class PC Clusters
• with off-the-shelf CPUs (Xeon, Opteron, …)

Cluster Example: Triolith (NSC, 2012 / 2013)
• A so-called Capability cluster (fast network for parallel applications, not for just lots of independent sequential jobs)
• 1200 HP SL230 servers (compute nodes), each equipped with 2 Intel E5-2660 (2.2 GHz Sandy Bridge) processors with 8 cores each
  → 19200 cores in total
  → Theoretical peak performance of 338 Tflop/s
• Mellanox Infiniband network (Fat-tree topology)

The Challenge
• Today, basically all computers are parallel computers!
  – Single-thread performance stagnating
  – Dozens of cores and hundreds of HW threads available per server
  – May even be heterogeneous (core types, accelerators)
  – Data locality matters
  – Large clusters for HPC and Data centers require message passing
• Utilizing more than one CPU core requires thread-level parallelism
• One of the biggest software challenges: Exploiting parallelism
  – Need LOTS of (mostly, independent) tasks to keep cores/HW threads busy and overlap waiting times (cache misses, I/O accesses)
  – All application areas, not only traditional HPC: general-purpose, data mining, graphics, games, embedded, DSP, …
  – Affects HW/SW system architecture, programming languages, algorithms, data structures …
  – Parallel programming is more error-prone (deadlocks, races, further sources of inefficiencies), and thus more expensive and time-consuming

Can't the compiler fix it for us?
• Automatic parallelization?
  – at compile time:
    Requires static analysis, which is not effective for pointer-based languages, inherently limited, and missing runtime information
    → needs programmer hints / rewriting ...
    → ok only for few benign special cases: loop vectorization, extraction of instruction-level parallelism
  – at run time (e.g. speculative multithreading):
    High overheads, not scalable

Insight
• Design of efficient / scalable parallel algorithms is, in general, a creative task that cannot be automated
• But some good recipes exist …
  – Parallel algorithmic design patterns
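The Triolith figures quoted earlier in this section (19200 cores, 338 Tflop/s theoretical peak) can be checked with a short calculation. The server, processor, core and clock values come from the slide; the value of 8 double-precision floating-point operations per core and cycle is an assumption here (one 4-wide AVX addition plus one 4-wide AVX multiplication per cycle on Sandy Bridge), not something the slide states.

```python
# Values taken from the Triolith slide
SERVERS = 1200
CPUS_PER_SERVER = 2
CORES_PER_CPU = 8
CLOCK_HZ = 2.2e9

# Assumption (not on the slide): 8 double-precision flops per core per cycle,
# i.e. one 4-wide AVX add and one 4-wide AVX multiply issued every cycle.
FLOPS_PER_CYCLE = 8

cores = SERVERS * CPUS_PER_SERVER * CORES_PER_CPU
peak_flops = cores * CLOCK_HZ * FLOPS_PER_CYCLE

print(cores)                              # 19200
print(f"{peak_flops / 1e12:.1f} Tflop/s")  # 337.9 Tflop/s
```

Under that assumption the result rounds to the 338 Tflop/s on the slide. It is a theoretical upper bound: it presumes every core issues full-width vector operations in every cycle, which real applications rarely sustain.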

The remaining solution …
• Manual parallelization!
  – using a parallel programming language / framework,
    e.g. MPI message passing interface for distributed memory;
    Pthreads, OpenMP, TBB, … for shared-memory
  – Generally harder, more error-prone than sequential programming,
    requires special programming expertise to exploit the HW resources effectively
• Promising approach: Domain-specific languages/frameworks
  – Restricted set of predefined constructs doing most of the low-level stuff under the hood
  – e.g. MapReduce, Spark, … for big-data computing

Parallel Programming Model
• System-software-enabled programmer's view of the underlying hardware
• Abstracts from details of the underlying architecture, e.g. network topology
• Focuses on a few characteristic properties, e.g. memory model
→ Portability of algorithms/programs across a family of parallel architectures
[Figure: Programmer's view of the underlying system (Lang. constructs, API, …) = Programming model (Message passing, Shared Memory); Mapping(s) performed by programming toolchain (compiler, runtime system, library, OS, …); Underlying parallel computer architecture]

Design and Analysis of Parallel Algorithms
Introduction

Foster's Method for Design of Parallel Programs ("PCAM")
PARALLEL ALGORITHM DESIGN
• PROBLEM + algorithmic approach
• PARTITIONING → Elementary Tasks
• COMMUNICATION + SYNCHRONIZATION → Textbook-style parallel algorithm
PARALLEL ALGORITHM ENGINEERING (Implementation and adaptation for a specific (type of) parallel computer)
• AGGLOMERATION → Macrotasks
• MAPPING + SCHEDULING → P1, P2, P3
Reference: I. Foster, Designing and Building Parallel Programs. Addison-Wesley, 1995.
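The four PCAM steps can be made concrete on a toy problem. The sketch below sums the squares of a list; it is an illustration under stated assumptions, not Foster's own example. The function names and the `chunk_size` parameter are hypothetical, and a thread pool from Python's standard library stands in for the processors P1 to P3.

```python
from concurrent.futures import ThreadPoolExecutor


def elementary_task(x):
    """Partitioning: the smallest unit of work, here squaring one value."""
    return x * x


def macrotask(chunk):
    """Agglomeration: a group of elementary tasks executed sequentially."""
    return sum(elementary_task(x) for x in chunk)


def pcam_sum_of_squares(values, num_workers=3, chunk_size=4):
    # Agglomeration: bundle elementary tasks into macrotasks so that
    # per-task overhead is paid once per chunk, not once per element.
    chunks = [values[i:i + chunk_size]
              for i in range(0, len(values), chunk_size)]
    # Mapping + scheduling: the executor assigns macrotasks to workers.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(macrotask, chunks))
    # Communication + synchronization: partial results are gathered
    # and combined once all macrotasks have finished.
    return sum(partials)


print(pcam_sum_of_squares(list(range(10))))  # 285
```

The sketch shows the structure only. In standard CPython the global interpreter lock prevents threads from speeding up CPU-bound work like this, so a real implementation would map the macrotasks to processes or cluster nodes instead.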
Parallel Cost Models
A Quantitative Basis for the Design of Parallel Algorithms

Parallel Computation Model = Programming Model + Cost Model
