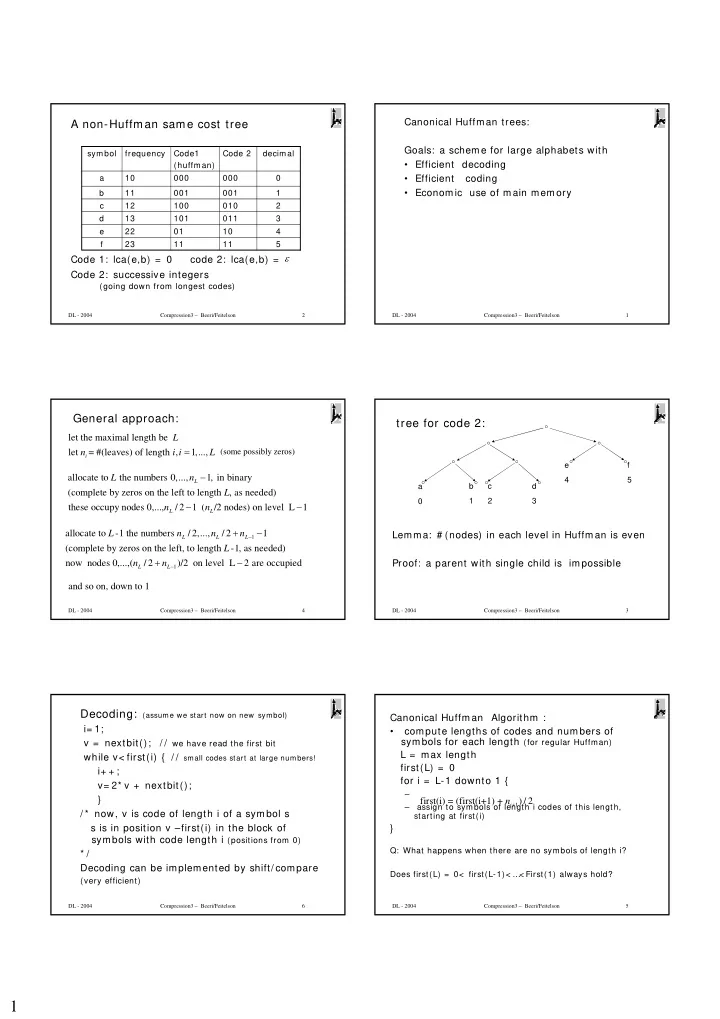

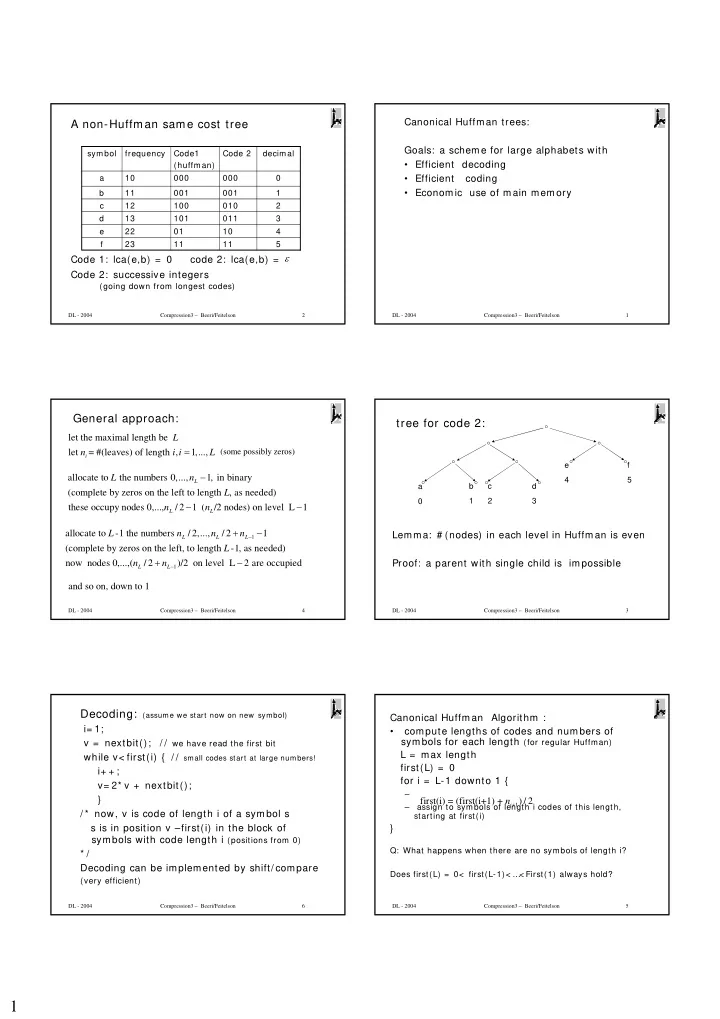

Canonical Huffman trees: A non-Huffman same cost tree Goals: a scheme for large alphabets with symbol frequency Code1 Code 2 decimal • Efficient decoding (huffman) a 10 000 000 0 • Efficient coding • Economic use of main memory b 11 001 001 1 c 12 100 010 2 d 13 101 011 3 e 22 01 10 4 f 23 11 11 5 ε Code 1: lca(e,b) = 0 code 2: lca(e,b) = Code 2: successive integers (going down from longest codes) DL - 2004 Compression3 – Beeri/Feitelson 2 DL - 2004 Compression3 – Beeri/Feitelson 1 General approach: tree for code 2: let the maximal length be L = let = #(leaves) of length , n i i 1,..., L (some possibly zeros) i e f − allocate to the numbers 0,..., L n 1, in binary 4 5 L b c d a (complete by zeros on the left to length , as needed) L 0 1 2 3 − − these occupy nodes 0,..., n / 2 1 ( n /2 nodes) on level L 1 L L + − allocate to -1 the numbers L n / 2,..., n / 2 n 1 Lemma: # (nodes) in each level in Huffman is even − L L L 1 (complete by zeros on the left, to length -1, as needed) L + − now nodes 0,...,( n / 2 n )/2 on level L 2 are occupied Proof: a parent with single child is impossible − L L 1 and so on, down to 1 DL - 2004 Compression3 – Beeri/Feitelson 4 DL - 2004 Compression3 – Beeri/Feitelson 3 Decoding: (assume we start now on new symbol) Canonical Huffman Algorithm : i= 1; • compute lengths of codes and numbers of symbols for each length (for regular Huffman) v = nextbit(); / / we have read the first bit L = max length while v< first(i) { / / small codes start at large numbers! first(L) = 0 i+ + ; for i = L-1 downto 1 { v= 2* v + nextbit(); – } first(i) = (first(i+1) + n + ) / 2 i 1 – assign to symbols of length i codes of this length, / * now, v is code of length i of a symbol s starting at first(i) s is in position v –first(i) in the block of } symbols with code length i (positions from 0) Q: What happens when there are no symbols of length i? * / Decoding can be implemented by shift/ compare Does first(L) = 0< first(L-1)< … < First(1) always hold? (very efficient) DL - 2004 Compression3 – Beeri/Feitelson 6 DL - 2004 Compression3 – Beeri/Feitelson 5 1

Data structures for decoder: Construction of canonical Huffman: (sketch) • The array first(i) assumption: we have the symbol frequencies • Arrays S(i) of the symbols with code length i, Input: a sequence of (symbol, freq) ordered by their code (v-first(i) is the index value to get the symbol for code v) Output: a sequence of (symbol, length) Idea: use an array to represent a heap for Thus, decoding uses efficient arithmetic operations creating the tree, and the resulting tree and + array look-up – more efficient then storing a tree and traversing pointers lengths What about coding (for large alphabets, where symbols = words or blocks)? We illustrate by example The problem: millions of symbols � large Huffman tree, … DL - 2004 Compression3 – Beeri/Feitelson 8 DL - 2004 Compression3 – Beeri/Feitelson 7 after one more step: Example: frequencies: 2, 8, 11, 12 (each cell with a freq. also contains a symbol – not shown) # 6 # 3 21 # 3 # 4 12 # 3 # 4 # 5 # 8 # 7 # 6 2 12 11 8 Finally, a representation of the Huffman tree: Now reps of 2, 8 (smallest) go out, rest percolate # 2 33 # 2 # 3 # 4 # 2 # 3 # 4 # 7 # 6 2 12 11 8 The sum 10 is put into cell4, and its rep into cell 3 Next, by i= 2 to 8, assign lengths (here shown after i= 4) Cell4 is the parent (“sum”) of cells 5, 8. # 2 0 1 2 # 4 # 2 # 3 # 4 # 7 # 6 # 3 10 # 4 12 11 # 4 DL - 2004 Compression3 – Beeri/Feitelson 10 DL - 2004 Compression3 – Beeri/Feitelson 9 Entropy H: a lower bound on compression Summary: How can one still improve? • Insertion of (symbol,freq) into array – O(n) • Creation of heap – kn log n Huffman works for given frequencies, e.g., for the English language – static modeling k log n • Creating tree from heap: each step is Plus: No need to store model in coder/ decoder kn log n total is • Computing lengths O(n) But, can construct frequency table for each file • Storage requirements: 2n (compare to tree!) semi-static modeling Minus: – Need to store model in compressed file (negligible for large files) – Takes more time to compress Plus: may provide for better compression DL - 2004 Compression3 – Beeri/Feitelson 12 DL - 2004 Compression3 – Beeri/Feitelson 11 2

3rd option: start compressing with default freqs Adaptive Huffman: As coding proceeds, update frequencies: • Construction of Huffman after each symbol: O(n) After reading a symbol: • Incremental adaptation in O(logn) is possible – compress it Both too expensive for practical use (large alphabets) – update freq table* Adaptive modeling Decoding must use precisely same algorithm for updating freqs � can follow coding Plus: We illustrate adaptive by arithmetic coding (soon) • Model need not be stored • May provide compression that adapts to file, including local changes of freqs Minus: less efficient then previous models * May use a sliding window to better reflect local changes DL - 2004 Compression3 – Beeri/Feitelson 14 DL - 2004 Compression3 – Beeri/Feitelson 13 Arithmetic coding : Higher-order modeling: use of context Can be static, semi-static, adaptive Basic idea: e.g.: for each block of 2 letters, construct freq. • Coder: start with the interval [ 0,1) table for the next letter (2-order compression) • 1 st symbol selects a sub-interval, based on its (uses conditional probabilities – hence improvement) probability This also can be static/ semi-static/ adaptive • i’th symbol selects a sub-interval of (i-1)’th interval, based on its probability • When file ends, store a number in the final interval • Decoder: reads the number, reconstructs the sequence of intervals, i.e. symbols • Important: Length of file stored at beginning of compressed file (otherwise, decoder does not know when to stop) DL - 2004 Compression3 – Beeri/Feitelson 16 DL - 2004 Compression3 – Beeri/Feitelson 15 Why is it a good approach in general? Example : (static) a ~ 3/ 4, b ~ 1/ 4 The file to be compressed: aaaba For a symbol with large probability, # of binary The sequence of intervals (& symbols creating them) : digits needed to represent an occurrence is [ 0,1), a [ 0,3/ 4), a [ 0,9/ 16), a [ 0, 27/ 64), smaller than 1 � poor compression with b [ 81/ 256, 108/ 256), a [ 324/ 1024, 405/ 1024) Huffman But, arithmetic represents such a symbol with a small shrinkage of interval, hence the extra Assuming this is the end, we store: number of digits is smaller than 1! • 5 –length of file • Any number in final interval, say 0.011 (3 digits) Consider the example above, after aaa (after first 3 a’s, one digit suffices!) (for a large file, the length will be negligible) DL - 2004 Compression3 – Beeri/Feitelson 18 DL - 2004 Compression3 – Beeri/Feitelson 17 3

Recommend

More recommend