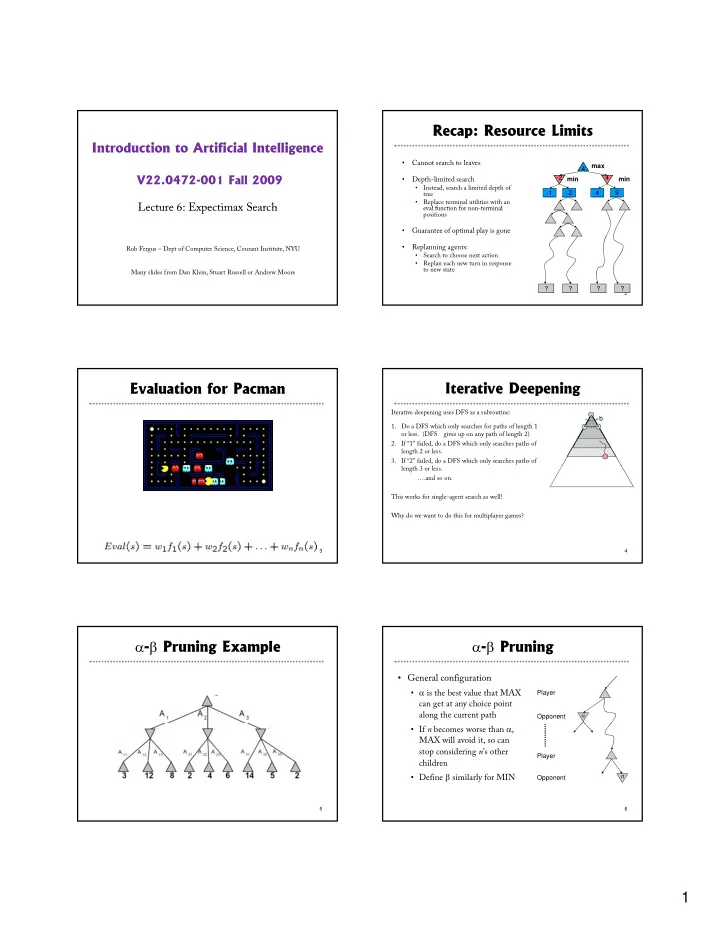

Introduction to Artificial Intelligence, V22.0472-001, Fall 2009
Lecture 6: Expectimax Search
Rob Fergus, Dept of Computer Science, Courant Institute, NYU
Many slides from Dan Klein, Stuart Russell or Andrew Moore

Recap: Resource Limits
• Cannot search to leaves
• Depth-limited search
  • Instead, search a limited depth of tree
  • Replace terminal utilities with an eval function for non-terminal positions
• Guarantee of optimal play is gone
• Replanning agents:
  • Search to choose next action
  • Replan each new turn in response to new state
[Figure: depth-limited minimax tree. MAX root = 4; MIN nodes = -2 and 4; evaluated frontier values -1, -2, 4, 9; nodes below the depth limit unexplored (?)]

Evaluation for Pacman
[Figure]

Iterative Deepening
Iterative deepening uses DFS as a subroutine:
1. Do a DFS which only searches for paths of length 1 or less. (DFS gives up on any path of length 2.)
2. If "1" failed, do a DFS which only searches paths of length 2 or less.
3. If "2" failed, do a DFS which only searches paths of length 3 or less.
…and so on.
This works for single-agent search as well!
Why do we want to do this for multiplayer games?

α-β Pruning Example
[Figure]

α-β Pruning
• General configuration
  • α is the best value that MAX can get at any choice point along the current path
  • If n becomes worse than α, MAX will avoid it, so it can stop considering n's other children
  • Define β similarly for MIN
[Figure: alternating Player / Opponent levels along the current path, with α marked at an upper Player (MAX) choice point and node n below it]
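The depth-limited search and iterative deepening described above can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the game tree is a hypothetical nested list (a number is a terminal utility, a list holds a node's children), MAX and MIN alternate, and `mean_of_leaves` is a made-up evaluation function used only at the depth cutoff. A real agent would generate successors from a game state and stop deepening when its time runs out.

```python
from __future__ import annotations

from typing import Callable, Union

# Hypothetical encoding: a number is a terminal utility,
# a list holds the children of an internal node (MAX and MIN alternate).
Tree = Union[int, float, list]


def mean_of_leaves(node: Tree) -> float:
    """Made-up eval function: the average of all leaf utilities below node."""
    leaves, stack = [], [node]
    while stack:
        n = stack.pop()
        if isinstance(n, list):
            stack.extend(n)
        else:
            leaves.append(n)
    return sum(leaves) / len(leaves)


def depth_limited_minimax(node: Tree, depth: int, maximizing: bool,
                          evaluate: Callable[[Tree], float]) -> float:
    """Minimax value of node, looking at most `depth` plies ahead (a DFS)."""
    if not isinstance(node, list):   # real terminal: exact utility
        return node
    if depth == 0:                   # cutoff: estimate with the eval function
        return evaluate(node)
    values = [depth_limited_minimax(child, depth - 1, not maximizing, evaluate)
              for child in node]
    return max(values) if maximizing else min(values)


def iterative_deepening(root: Tree, max_depth: int,
                        evaluate: Callable[[Tree], float]) -> list[float]:
    """Run depth-limited DFS with limits 1, 2, ..., max_depth.

    Returns the root value found at each limit; a timed agent would keep
    the answer from the deepest search that finished."""
    return [depth_limited_minimax(root, d, True, evaluate)
            for d in range(1, max_depth + 1)]


print(iterative_deepening([[-1, -2], [4, 9]], 3, mean_of_leaves))  # [6.5, 4, 4]
```

Treating the recap figure's frontier values -1, -2, 4, 9 as terminal utilities, the depth-1 search has to trust the eval function (6.5), while the deeper searches back up the actual values and return 4.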

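A sketch of α-β pruning in the same nested-list encoding, assuming alternating MAX/MIN levels. The example tree is hypothetical and chosen for illustration; the optional `visited` list records which leaves are actually evaluated, so you can check that pruning skips work without changing the root value.

```python
import math


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf, visited=None):
    """Minimax value of `node` (nested lists, numbers at the leaves) with
    alpha-beta pruning.

    alpha: best value MAX is already guaranteed on the current path.
    beta:  best value MIN is already guaranteed on the current path.
    visited: optional list that records every leaf actually evaluated."""
    if not isinstance(node, list):
        if visited is not None:
            visited.append(node)
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta, visited))
            if value >= beta:    # MIN above will never let play reach here
                return value
            alpha = max(alpha, value)
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta, visited))
        if value <= alpha:       # MAX above already has something at least as good
            return value
        beta = min(beta, value)
    return value


tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]   # illustrative values
seen = []
print(alphabeta(tree, True, visited=seen), seen)  # 3 [3, 12, 8, 2, 14, 5, 2]
```

Here 7 of the 9 leaves are evaluated and the root value is 3, the same value plain minimax would return. Ordering children so that the best move is tried first would typically prune more.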
α-β Pruning Pseudocode
[Figure]

α-β Pruning Properties
• Pruning has no effect on final result
• Good move ordering improves effectiveness of pruning
• With "perfect ordering":
  • Time complexity drops to $O(b^{m/2})$
  • Doubles solvable depth
  • Full search of, e.g. chess, is still hopeless!
• A simple example of metareasoning, here reasoning about which computations are relevant

Expectimax Search Trees
• What if we don't know what the result of an action will be? E.g.,
  • In solitaire, the next card is unknown
  • In minesweeper, the mine locations are unknown
  • In pacman, the ghosts act randomly
• Can do expectimax search
  • Chance nodes are like min nodes, except the outcome is uncertain
  • Calculate expected utilities
  • Max nodes as in minimax search
  • Chance nodes take the average (expectation) of the values of their children
• Later, we'll learn how to formalize the underlying problem as a Markov Decision Process
[Figure: expectimax tree with a max root over two chance nodes; leaf utilities 10, 4, 5, 7]

Maximum Expected Utility
• Why should we average utilities? Why not minimax?
• Principle of maximum expected utility: an agent should choose the action which maximizes its expected utility, given its knowledge
  • General principle for decision making
  • Often taken as the definition of rationality
  • We'll see this idea over and over in this course!
• Let's decompress this definition…

Reminder: Probabilities
• A random variable represents an event whose outcome is unknown
• A probability distribution is an assignment of weights to outcomes
• Example: traffic on the freeway?
  • Random variable: T = whether there's traffic
  • Outcomes: T in {none, light, heavy}
  • Distribution: P(T=none) = 0.25, P(T=light) = 0.55, P(T=heavy) = 0.20
• Some laws of probability (more later):
  • Probabilities are always non-negative
  • Probabilities over all possible outcomes sum to one
• As we get more evidence, probabilities may change:
  • P(T=heavy) = 0.20, P(T=heavy | Hour=8am) = 0.60
  • We'll talk about methods for reasoning and updating probabilities later

What are Probabilities?
• Objectivist / frequentist answer:
  • Averages over repeated experiments
  • E.g. empirically estimating P(rain) from historical observation
  • Assertion about how future experiments will go (in the limit)
  • New evidence changes the reference class
  • Makes one think of inherently random events, like rolling dice
• Subjectivist / Bayesian answer:
  • Degrees of belief about unobserved variables
  • E.g. an agent's belief that it's raining, given the temperature
  • E.g. pacman's belief that the ghost will turn left, given the state
  • Often learn probabilities from past experiences (more later)
  • New evidence updates beliefs (more later)
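The expectimax-trees figure gives a concrete answer to "Why should we average utilities? Why not minimax?". The sketch below assumes the two chance nodes split uniformly over their children (the figure does not state the probabilities) and labels the root's two actions with the hypothetical names `left` and `right`. Averaging picks `left`, while treating the chance nodes as adversarial MIN nodes picks `right`: the two rules can disagree on the same tree.

```python
# Leaf utilities under the two chance nodes of the figure.
# Action names are hypothetical; chance outcomes are assumed equally likely.
chance_children = {"left": [10, 4], "right": [5, 7]}


def average(values):
    """Expectation under a uniform distribution over the children."""
    return sum(values) / len(values)


def best_action(score_chance_node):
    """Pick the root action whose chance node scores highest."""
    action = max(chance_children,
                 key=lambda a: score_chance_node(chance_children[a]))
    return action, score_chance_node(chance_children[action])


expectimax_choice = best_action(average)  # chance node = average of children
minimax_choice = best_action(min)         # chance node treated as an adversarial MIN
print(expectimax_choice, minimax_choice)  # ('left', 7.0) ('right', 5)
```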

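The frequentist answer treats a probability as a long-run relative frequency. A small simulation makes that concrete for the traffic example: check the two laws of probability on P(T), then draw outcomes of T many times and count. The helper name `estimate_by_frequency`, the sample size, and the seed are arbitrary choices for this sketch, and the simulated draws stand in for real repeated observations.

```python
import random
from collections import Counter

# Distribution over the random variable T from the traffic example.
P_T = {"none": 0.25, "light": 0.55, "heavy": 0.20}

# Laws of probability: non-negative weights that sum to one.
assert all(p >= 0 for p in P_T.values())
assert abs(sum(P_T.values()) - 1.0) < 1e-9


def estimate_by_frequency(dist, n_trials, seed=0):
    """Frequentist view: estimate P(outcome) as its relative frequency
    over n_trials simulated draws from dist."""
    rng = random.Random(seed)
    outcomes = list(dist)
    draws = rng.choices(outcomes, weights=[dist[o] for o in outcomes], k=n_trials)
    counts = Counter(draws)
    return {o: counts[o] / n_trials for o in outcomes}


print(estimate_by_frequency(P_T, 100_000))  # frequencies close to P_T
```

With more trials the estimates settle closer to P(T), which is the "in the limit" assertion from the slide.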
Uncertainty Everywhere
• Not just for games of chance!
  • I'm snuffling: am I sick?
  • Email contains "FREE!": is it spam?
  • Tooth hurts: have cavity?
  • 60 min enough to get to the airport?
  • Robot rotated wheel three times, how far did it advance?
  • Safe to cross street? (Look both ways!)
• Why can a random variable have uncertainty?
  • Inherently random process (dice, etc.)
  • Insufficient or weak evidence
  • Ignorance of underlying processes
  • Unmodeled variables
  • The world's just noisy!
• Compare to fuzzy logic, which has degrees of truth, rather than just degrees of belief

Reminder: Expectations
• Often a quantity of interest depends on a random variable
• The expected value of a function is its average output, weighted by a given distribution over inputs
• Example: How late if I leave 60 min before my flight?
• Lateness is a function of traffic: L(none) = -10, L(light) = -5, L(heavy) = 15
• What is my expected lateness?
  • Need to specify some belief over T to weight the outcomes
  • Say P(T) = {none: 2/5, light: 2/5, heavy: 1/5}
  • The expected lateness: $E[L(T)] = L(\text{none})P(\text{none}) + L(\text{light})P(\text{light}) + L(\text{heavy})P(\text{heavy}) = (-10)(2/5) + (-5)(2/5) + (15)(1/5) = -3$

Expectations
• Real-valued functions of random variables: $f: X \to \mathbb{R}$
• Expectation of a function of a random variable: $E_{P(X)}[f(X)] = \sum_x f(x)\,P(x)$
• Example: Expected value of a fair die roll

  X | P   | f
  1 | 1/6 | 1
  2 | 1/6 | 2
  3 | 1/6 | 3
  4 | 1/6 | 4
  5 | 1/6 | 5
  6 | 1/6 | 6

Utilities
• Utilities are functions from outcomes (states of the world) to real numbers that describe an agent's preferences
• Where do utilities come from?
  • In a game, may be simple (+1/-1)
  • Utilities summarize the agent's goals
  • Theorem: any set of preferences between outcomes can be summarized as a utility function (provided the preferences meet certain conditions)
• In general, we hard-wire utilities and let actions emerge (why don't we let agents decide their own utilities?)
• More on utilities soon…

Expectimax Search
• In expectimax search, we have a probabilistic model of how the opponent (or environment) will behave in any state
  • Model could be a simple uniform distribution (roll a die)
  • Model could be sophisticated and require a great deal of computation
  • We have a node for every outcome out of our control: opponent or environment
  • The model might say that adversarial actions are likely!
• For now, assume for any state we magically have a distribution to assign probabilities to opponent actions / environment outcomes
• Having a probabilistic belief about an agent's action does not mean that agent is flipping any coins!
[Figure: expectimax tree with leaf utilities 8, 4, 5, 6]

Expectimax Pseudocode

    def value(s):
        if s is a terminal node: return evaluation(s)
        if s is a max node: return maxValue(s)
        if s is an exp node: return expValue(s)

    def maxValue(s):
        values = [value(s') for s' in successors(s)]
        return max(values)

    def expValue(s):
        values = [value(s') for s' in successors(s)]
        weights = [probability(s, s') for s' in successors(s)]
        return expectation(values, weights)
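The expectimax pseudocode can be made runnable with an explicit tree. In this sketch the `Node` class and the `leaf` helper are hypothetical: each chance ("exp") node stores its outcome probabilities in `probs` (falling back to uniform), which plays the role of `probability(s, s')`, and a terminal node's stored `utility` stands in for `evaluation(s)`. The leaf values reuse the numbers 8, 4, 5, 6 from the figure, but the tree shape and probabilities are assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical tree node: kind is 'max', 'exp', or 'terminal'."""
    kind: str
    utility: float = 0.0                      # used only by terminal nodes
    children: List["Node"] = field(default_factory=list)
    probs: Optional[List[float]] = None       # chance-node weights; None = uniform


def value(s: Node) -> float:
    if s.kind == "terminal":                  # check terminals first
        return s.utility                      # stands in for evaluation(s)
    if s.kind == "max":
        return max_value(s)
    if s.kind == "exp":
        return exp_value(s)
    raise ValueError(f"unknown node kind: {s.kind}")


def max_value(s: Node) -> float:
    return max(value(c) for c in s.children)


def exp_value(s: Node) -> float:
    # Expectation: probability-weighted sum of the children's values.
    weights = s.probs or [1 / len(s.children)] * len(s.children)
    return sum(w * value(c) for w, c in zip(weights, s.children))


def leaf(u: float) -> Node:
    return Node("terminal", utility=u)


root = Node("max", children=[
    Node("exp", children=[leaf(8), leaf(4)]),                     # uniform: 6.0
    Node("exp", children=[leaf(5), leaf(6)], probs=[0.25, 0.75]),  # 5.75
])
print(value(root))  # 6.0
```

Storing probabilities on the node keeps the sketch short; an agent with a separate opponent model would instead compute the weights from the state, as `probability(s, s')` suggests.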

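The expectation formula $E_{P(X)}[f(X)] = \sum_x f(x)\,P(x)$ can be applied directly to the two examples from the expectation slides. This sketch uses exact fractions so the results are easy to check by hand: the expected lateness comes out to -3 and the expected die roll to 7/2. The helper name `expectation` is chosen for this sketch.

```python
from fractions import Fraction


def expectation(f, dist):
    """E_P[f(X)] = sum over x of f(x) * P(x), for a finite distribution P."""
    return sum(f(x) * p for x, p in dist.items())


# Lateness example: L(T) and the belief P(T) from the slide.
lateness = {"none": -10, "light": -5, "heavy": 15}
P_T = {"none": Fraction(2, 5), "light": Fraction(2, 5), "heavy": Fraction(1, 5)}
expected_lateness = expectation(lambda t: lateness[t], P_T)

# Fair die: f(x) = x, P(x) = 1/6 for x in 1..6.
die = {x: Fraction(1, 6) for x in range(1, 7)}
expected_roll = expectation(lambda x: x, die)

print(expected_lateness, expected_roll)  # -3 7/2
```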
Expectimax for Pacman
• Notice that we've gotten away from thinking that the ghosts are trying to minimize pacman's score
• Instead, they are now a part of the environment
• Pacman has a belief (distribution) over how they will act
• Quiz: Can we see minimax as a special case of expectimax?
• Quiz: What would pacman's computation look like if we assumed that the ghosts were doing 1-ply minimax and taking the result 80% of the time, otherwise moving randomly?
• If you take this further, you end up calculating belief distributions over your opponents' belief distributions over your belief distributions, etc…
  • Can get unmanageable very quickly!

Expectimax Pruning?
[Figure]

Expectimax Evaluation
• For minimax search, the evaluation function is insensitive to monotonic transformations
  • We just want better states to have higher evaluations (get the ordering right)
• For expectimax, we need the magnitudes to be meaningful as well
  • E.g. must know whether a 50% / 50% lottery between A and B is better than a 100% chance of C
  • 100 or -10 vs 0 is different than 10 or -100 vs 0

Mixed Layer Types
• E.g. Backgammon
• Expectiminimax
  • Environment is an extra player that moves after each agent
  • Chance nodes take expectations, otherwise like minimax

Stochastic Two-Player
• Dice rolls increase b: 21 possible rolls with 2 dice
• Backgammon ≈ 20 legal moves
• Depth 4 = $20 \times (21 \times 20)^3 \approx 1.5 \times 10^9$
• As depth increases, the probability of reaching a given node shrinks
  • So the value of lookahead is diminished
  • So limiting depth is less damaging
  • But pruning is less possible…
• TDGammon uses depth-2 search + a very good eval function + reinforcement learning: world-champion level play

Non-Zero-Sum Games
• Similar to minimax:
  • Utilities are now tuples
  • Each player maximizes their own entry at each node
  • Propagate (or back up) nodes from children
  • Can give rise to cooperation and competition dynamically…
[Figure: game tree with utility-tuple leaves (1,2,6), (4,3,2), (6,1,2), (7,4,1), (5,1,1), (1,5,2), (7,7,1), (5,4,5)]
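The point about monotonic transformations can be checked numerically. In this hypothetical choice, a 50/50 lottery between A = 16 and B = 0 competes with C = 6 for sure. Applying the order-preserving transform √x leaves the worst-case (minimax-style) choice unchanged, but flips the expectimax choice, because an average depends on the magnitudes and not just the ordering. The values and the helper `choose` are illustrative assumptions.

```python
import math

# Hypothetical decision: a 50/50 lottery between A and B, or C for sure.
A, B, C = 16.0, 0.0, 6.0


def identity(x):
    return x


def choose(utility, use_expectation):
    """Return 'lottery' or 'sure' after mapping outcomes through `utility`.

    use_expectation=True:  value the lottery by its average (expectimax).
    use_expectation=False: value it by its worst case (minimax)."""
    a, b, c = utility(A), utility(B), utility(C)
    lottery = 0.5 * a + 0.5 * b if use_expectation else min(a, b)
    return "lottery" if lottery > c else "sure"


# math.sqrt is monotonic (order-preserving) on these non-negative values.
for name, mode in [("minimax", False), ("expectimax", True)]:
    print(name, choose(identity, mode), choose(math.sqrt, mode))
# minimax sure sure
# expectimax lottery sure
```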

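Expectiminimax needs only one more node type than minimax: chance nodes take expectations, while MAX and MIN nodes behave as before. The sketch below uses a hypothetical tuple encoding and, for brevity, places a chance layer only after MAX's move rather than after every agent; the probabilities and leaf values are illustrative.

```python
def expectiminimax(node) -> float:
    """Value of a mixed tree.

    Hypothetical encoding: a number is a terminal utility;
    ("max", children) and ("min", children) are player nodes;
    ("chance", [(p, child), ...]) is an environment move with probabilities."""
    if isinstance(node, (int, float)):
        return node
    kind, children = node
    if kind == "max":
        return max(expectiminimax(c) for c in children)
    if kind == "min":
        return min(expectiminimax(c) for c in children)
    if kind == "chance":
        return sum(p * expectiminimax(c) for p, c in children)
    raise ValueError(f"unknown node kind: {kind}")


# MAX moves, the environment rolls, then MIN moves.
tree = ("max", [
    ("chance", [(0.5, ("min", [3, 5])), (0.5, ("min", [8, 2]))]),    # 2.5
    ("chance", [(0.25, ("min", [4, 6])), (0.75, ("min", [1, 7]))]),  # 1.75
])
print(expectiminimax(tree))  # 2.5
```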

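The backgammon branching arithmetic can be reproduced directly. Counting unordered pairs gives the 21 distinct rolls; note that they are not equally likely (a double has probability 1/36, any other roll 2/36). The depth-4 count reads the slide's formula as one move layer followed by three (roll, move) layers, using the slide's rough figure of 20 legal moves.

```python
from fractions import Fraction
from itertools import combinations_with_replacement

# Distinct rolls of two dice when order does not matter: (1,1), (1,2), ..., (6,6).
rolls = list(combinations_with_replacement(range(1, 7), 2))
n_rolls = len(rolls)  # 21: 6 doubles + 15 non-doubles

# The rolls are not equally likely: a double can happen one way out of 36,
# any other roll two ways (e.g. 1-2 or 2-1).
probs = {r: Fraction(1 if r[0] == r[1] else 2, 36) for r in rolls}
assert sum(probs.values()) == 1

legal_moves = 20  # the slide's rough figure for backgammon
# Depth 4: our move, then three (dice roll, move) layers.
nodes_depth_4 = legal_moves * (n_rolls * legal_moves) ** 3
print(n_rolls, nodes_depth_4, f"{nodes_depth_4:.1e}")  # 21 1481760000 1.5e+09
```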
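Backing up utility tuples is a small change to minimax: the player to move takes the child whose tuple is best in that player's own entry. The leaf tuples below come from the non-zero-sum figure, but the tree shape (three players moving in turn, binary branching) and the first-child tie-breaking rule are assumptions of this sketch.

```python
def backup(node, player_to_move, n_players):
    """Back up utility tuples: the player to move picks the child whose
    tuple has the largest entry for that player (ties: first child)."""
    if isinstance(node, tuple):          # leaf: one utility per player
        return node
    next_player = (player_to_move + 1) % n_players
    child_values = [backup(c, next_player, n_players) for c in node]
    return max(child_values, key=lambda u: u[player_to_move])


# Players 0, 1, 2 move in turn; leaves taken from the figure (shape assumed).
tree = [
    [[(1, 2, 6), (4, 3, 2)], [(6, 1, 2), (7, 4, 1)]],
    [[(5, 1, 1), (1, 5, 2)], [(7, 7, 1), (5, 4, 5)]],
]
print(backup(tree, 0, 3))  # (1, 2, 6)
```

With that shape, player 0 is indifferent at the root (both options give it 1), and the tie goes to the first child. A different tie-breaking rule could change which tuple the other players end up with, which is one way cooperation or competition can emerge.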