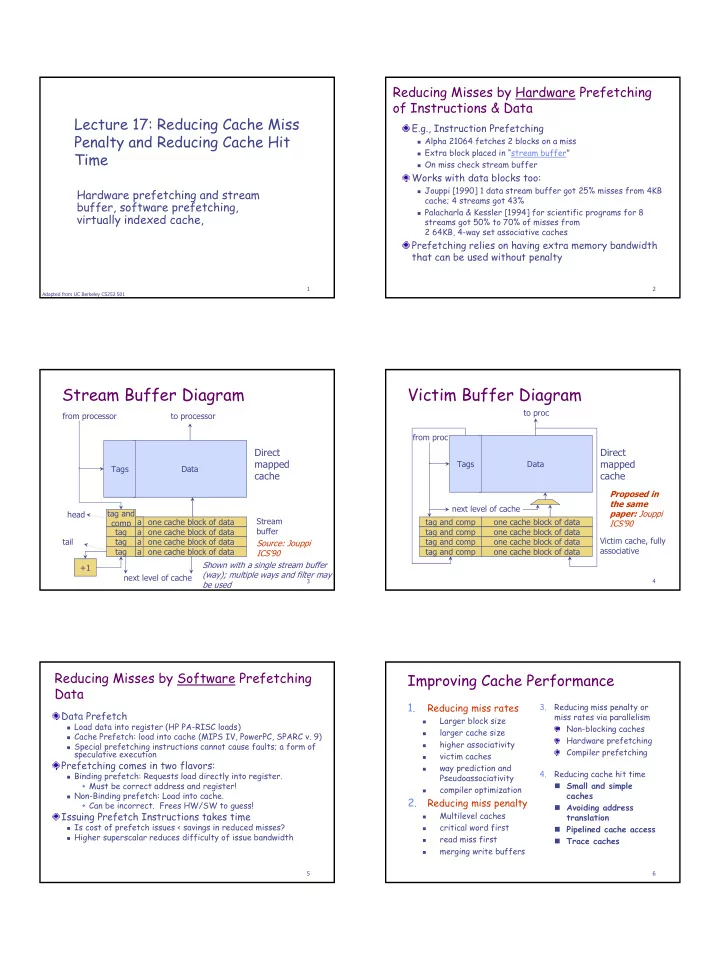

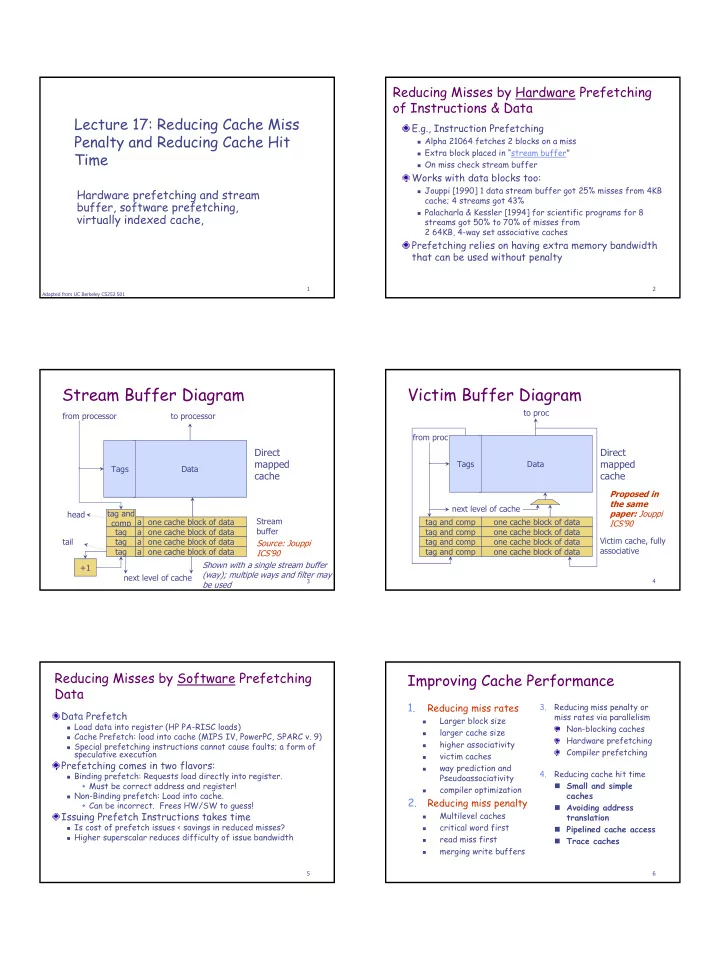

Lecture 17: Reducing Cache Miss Penalty and Reducing Cache Hit Time

Hardware prefetching and stream buffer, software prefetching, virtually indexed cache

Adapted from UC Berkeley CS252 S01

Reducing Misses by Hardware Prefetching of Instructions & Data

- E.g., instruction prefetching
  - Alpha 21064 fetches 2 blocks on a miss
  - Extra block is placed in a "stream buffer"
  - On a miss, check the stream buffer
- Works with data blocks too:
  - Jouppi [1990]: 1 data stream buffer caught 25% of the misses from a 4KB cache; 4 streams caught 43%
  - Palacharla & Kessler [1994]: for scientific programs, 8 streams caught 50% to 70% of the misses from two 64KB, 4-way set associative caches
- Prefetching relies on having extra memory bandwidth that can be used without penalty

Stream Buffer Diagram

[Figure: a direct-mapped cache (tags, data) sits between the processor and the next level of cache, with a stream buffer beside it. The stream buffer is a FIFO from head to tail; the head entry holds a tag and comparator, an "a" bit, and one cache block of data, and the remaining entries hold a tag, an "a" bit, and one cache block of data. A +1 incrementer generates the next address to fetch from the next level of cache. Shown with a single stream buffer (way); multiple ways and a filter may be used. Source: Jouppi, ISCA'90.]

Victim Buffer Diagram

[Figure: a direct-mapped cache (tags, data) sits between the processor and the next level of cache, backed by a fully associative victim cache whose entries each hold a tag and comparator and one cache block of data. Proposed in the same paper: Jouppi, ISCA'90.]

Reducing Misses by Software Prefetching Data

- Data prefetch
  - Load data into register (HP PA-RISC loads)
  - Cache prefetch: load into cache (MIPS IV, PowerPC, SPARC v. 9)
  - Special prefetching instructions cannot cause faults; a form of speculative execution
- Prefetching comes in two flavors:
  - Binding prefetch: requests load directly into a register
    - Must be the correct address and register!
  - Non-binding prefetch: load into cache
    - Can be incorrect. Frees HW/SW to guess!
- Issuing prefetch instructions takes time
  - Is the cost of issuing prefetches < the savings from reduced misses?
  - Wider superscalar issue reduces the difficulty of finding issue bandwidth

Improving Cache Performance

1. Reducing miss rates
   - Larger block size
   - Larger cache size
   - Higher associativity
   - Victim caches
   - Way prediction and pseudoassociativity
   - Compiler optimization
2. Reducing miss penalty
   - Multilevel caches
   - Critical word first
   - Read miss first
   - Merging write buffers
3. Reducing miss penalty or miss rates via parallelism
   - Non-blocking caches
   - Hardware prefetching
   - Compiler prefetching
4. Reducing cache hit time
   - Small and simple caches
   - Avoiding address translation
   - Pipelined cache access
   - Trace caches
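The stream buffer behavior from the hardware prefetching slide (fetch extra sequential blocks on a miss, then check the buffer on later misses) can be sketched as a small simulation. This is a minimal sketch under stated assumptions, not Jouppi's exact design: only the head entry is compared, the buffer holds 4 blocks, and the input is a trace of block addresses. `DirectMappedCache`, `simulate`, and every parameter name are hypothetical.

```python
from collections import deque


class DirectMappedCache:
    """Direct-mapped cache that tracks only which block address each set holds."""

    def __init__(self, num_blocks):
        self.num_blocks = num_blocks
        self.tags = [None] * num_blocks

    def lookup(self, block):
        return self.tags[block % self.num_blocks] == block

    def fill(self, block):
        self.tags[block % self.num_blocks] = block


def simulate(block_trace, num_blocks=64, buffer_depth=4, use_stream_buffer=True):
    """Count demand misses that must wait on the next level of cache."""
    cache = DirectMappedCache(num_blocks)
    stream = deque()  # prefetched block addresses; head is at the left
    next_level_misses = 0
    for block in block_trace:
        if cache.lookup(block):
            continue  # cache hit, nothing to do
        if use_stream_buffer and stream and stream[0] == block:
            # Miss in the cache but hit at the head of the stream buffer:
            # consume the head and prefetch one more sequential block at the tail.
            stream.popleft()
            stream.append(stream[-1] + 1 if stream else block + 1)
        else:
            # Miss everywhere: go to the next level and restart the stream
            # at the blocks that follow the missing one (assumed policy).
            next_level_misses += 1
            if use_stream_buffer:
                stream = deque(block + i for i in range(1, buffer_depth + 1))
        cache.fill(block)
    return next_level_misses


if __name__ == "__main__":
    sequential = range(100)
    print(simulate(sequential, use_stream_buffer=False))
    print(simulate(sequential, use_stream_buffer=True))
```

On a purely sequential trace, every miss after the first is caught at the head of the buffer; on a stride-2 trace the head never matches, so the buffer gives no benefit. The model does not count the memory bandwidth consumed by the prefetches themselves, which is the resource the slide says prefetching relies on.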

Fast hits by Avoiding Address Translation

[Figure: three cache organizations. Conventional organization: the CPU sends the VA to the TB, and the resulting PA accesses the cache ($) and then memory. Virtually addressed cache: the CPU sends the VA directly to a cache with VA tags; translate only on a miss; has the synonym problem. Overlapped organization: cache access overlaps with VA translation, the cache uses PA tags and is backed by a physically addressed L2 cache; requires the cache index to remain invariant across translation.]

Fast hits by Avoiding Address Translation

- Send the virtual address to the cache? Called a Virtually Addressed Cache, or just Virtual Cache, vs. a Physical Cache
  - Every time the process is switched, the cache logically must be flushed; otherwise we get false hits
    - Cost is the time to flush + "compulsory" misses from the empty cache
  - Dealing with aliases (sometimes called synonyms): two different virtual addresses map to the same physical address
  - I/O uses physical addresses and must interact with the cache, so it needs a mapping to virtual addresses
- Antialiasing solutions
  - HW guarantee: if aliases agree in the address bits that cover the index field and the cache is direct mapped, each block must be unique; called page coloring
- Solution to cache flush
  - Add a process identifier tag that identifies the process as well as the address within the process: can't get a hit if it is the wrong process

Fast Cache Hits by Avoiding Translation: Process ID impact

[Figure: miss rate vs. cache size. Y axis: miss rate, up to 20%. X axis: cache size, from 2 KB to 1024 KB. Black is uniprocess; light gray is multiprocess when the cache is flushed; dark gray is multiprocess when a process ID tag is used.]

Fast Cache Hits by Avoiding Translation: Index with Physical Portion of Address

- If a direct mapped cache is no larger than a page, then the index is in the physical part of the address
- Tag access can then start in parallel with translation, so that the result can be compared to the physical tag
- Address layout: bits 31 to 12 are the page address and bits 11 to 0 are the page offset; the cache splits the same address into address tag, index, and block offset, with the index and block offset inside the page offset
- Limits the cache to the page size: what if we want bigger caches and still use the same trick?
  - Higher associativity moves the barrier to the right
  - Page coloring
- How does this compare with a virtual cache used with page coloring?
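The size limit in the "index with physical portion of address" slide comes down to simple arithmetic: the index and block offset bits must fit inside the page offset. The sketch below illustrates that check. It assumes the 12-bit page offset shown on the slide (a 4 KB page) and power-of-two sizes; `split_address`, `index_is_physical`, and `max_cache_bytes` are hypothetical helper names.

```python
PAGE_BYTES = 4096  # 12-bit page offset, as in the slide's address layout


def split_address(addr, cache_bytes, block_bytes, ways=1):
    """Split an address into (tag, index, block offset) for a set-associative cache."""
    num_sets = cache_bytes // (block_bytes * ways)
    offset = addr % block_bytes
    index = (addr // block_bytes) % num_sets
    tag = addr // (block_bytes * num_sets)
    return tag, index, offset


def index_is_physical(cache_bytes, block_bytes, ways=1, page_bytes=PAGE_BYTES):
    """True if the index and block offset bits lie entirely inside the page offset."""
    num_sets = cache_bytes // (block_bytes * ways)
    return num_sets * block_bytes <= page_bytes


def max_cache_bytes(ways, page_bytes=PAGE_BYTES):
    """Largest cache that can be indexed with untranslated bits only."""
    return ways * page_bytes


if __name__ == "__main__":
    print(index_is_physical(4096, 32))           # direct mapped, one page
    print(index_is_physical(8192, 32))           # direct mapped, two pages
    print(index_is_physical(8192, 32, ways=2))   # 2-way, two pages
    print(max_cache_bytes(ways=4))
```

Doubling the associativity halves the number of sets for a given capacity, which is why higher associativity "moves the barrier to the right": each extra way allows one more page of capacity without using translated bits in the index.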
Pipelined Cache Access

- For multi-issue, cache bandwidth affects effective cache hit time
  - Queueing delay adds up if the cache does not have enough read/write ports
- Pipelined cache accesses: reduce cache cycle time and improve bandwidth
- Cache organization for high bandwidth
  - Duplicate cache
  - Banked cache
  - Double clocked cache

Pipelined Cache Access

- Alpha 21264 data cache design
  - The cache is 64KB, 2-way associative; cannot be accessed within one cycle
  - One cycle used for address transfer and data transfer, pipelined with data array access
  - Cache clock frequency doubles processor frequency; wave pipelined to achieve the speed
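To illustrate why a banked cache raises bandwidth for multi-issue, and where queueing delay comes from, the following sketch counts how many cycles a batch of simultaneous accesses needs. It assumes block-interleaved banks and one access per bank per cycle; neither detail is given in the slides, and `bank_of` and `cycles_for_batch` are hypothetical names.

```python
from collections import Counter


def bank_of(addr, block_bytes=64, num_banks=4):
    """Map an address to a bank, interleaving consecutive blocks across banks."""
    return (addr // block_bytes) % num_banks


def cycles_for_batch(addrs, block_bytes=64, num_banks=4):
    """Cycles needed when each bank serves one access per cycle.

    Accesses to different banks proceed in parallel; accesses to the
    same bank queue up behind one another.
    """
    per_bank = Counter(bank_of(a, block_bytes, num_banks) for a in addrs)
    return max(per_bank.values(), default=0)


if __name__ == "__main__":
    print(cycles_for_batch([0, 64, 128, 192]))  # four different banks
    print(cycles_for_batch([0, 256, 512]))      # all map to bank 0
```

Accesses that spread across banks finish together, while accesses that collide on one bank serialize, which is the queueing delay the slide refers to.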

Trace Cache

- Trace: a dynamic sequence of instructions including taken branches
- Traces are dynamically constructed by processor hardware, and frequently used traces are stored in the trace cache
- Example: Intel P4 processor, storing about 12K µops

Summary of Reducing Cache Hit Time

- Small and simple caches: used for L1 inst/data cache
  - Most L1 caches today are small but set-associative and pipelined (emphasizing throughput?)
  - Used with a large L2 cache or L2/L3 caches
- Avoiding address translation during cache indexing
  - Avoids additional delay for TLB access

What is the Impact of What We've Learned About Caches?

[Figure: CPU vs. DRAM performance, 1980 to 2000, on a log scale from 1 to 1000.]

- 1960-1985: Speed = ƒ(no. operations)
- 1990
  - Pipelined execution & fast clock rate
  - Out-of-order execution
  - Superscalar instruction issue
- 1998: Speed = ƒ(non-cached memory accesses)
- What does this mean for
  - Compilers? Operating systems? Algorithms? Data structures?

Cache Optimization Summary

MP = miss penalty, MR = miss rate, HT = hit time; + means the technique improves the factor, - means it hurts it.

| Group | Technique | MP | MR | HT | Complexity |
|---|---|---|---|---|---|
| miss penalty | Multilevel cache | + | | | 2 |
| miss penalty | Critical word first | + | | | 2 |
| miss penalty | Read first | + | | | 1 |
| miss penalty | Merging write buffer | + | | | 1 |
| miss rate | Victim caches | + | + | | 2 |
| miss rate | Larger block | - | + | | 0 |
| miss rate | Larger cache | | + | - | 1 |
| miss rate | Higher associativity | | + | - | 1 |
| miss rate | Way prediction | | + | | 2 |
| miss rate | Pseudoassociative | | + | | 2 |
| miss rate | Compiler techniques | | + | | 0 |

Cache Optimization Summary

| Group | Technique | MP | MR | HT | Complexity |
|---|---|---|---|---|---|
| miss penalty | Nonblocking caches | + | | | 3 |
| miss penalty | Hardware prefetching | + | | | 2/3 |
| miss penalty | Software prefetching | + | + | | 3 |
| hit time | Small and simple cache | | - | + | 0 |
| hit time | Avoiding address translation | | | + | 2 |
| hit time | Pipeline cache access | | | + | 1 |
| hit time | Trace cache | | | + | 3 |
