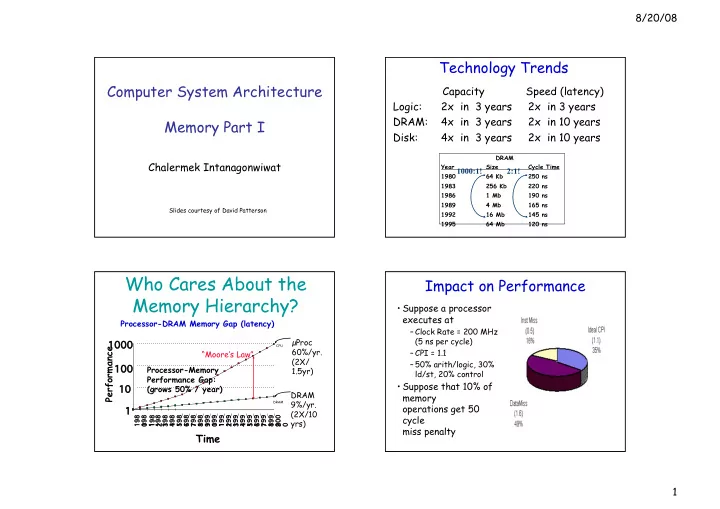

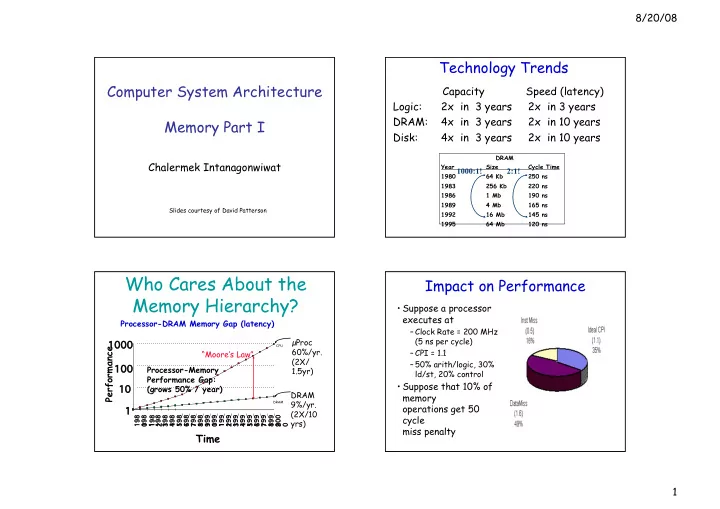

Computer System Architecture

Memory Part I

Chalermek Intanagonwiwat

Slides courtesy of David Patterson

8/20/08

Technology Trends

| | Capacity | Speed (latency) |
|---|---|---|
| Logic | 2x in 3 years | 2x in 3 years |
| DRAM | 4x in 3 years | 2x in 10 years |
| Disk | 4x in 3 years | 2x in 10 years |

DRAM

| Year | Size | Cycle Time |
|---|---|---|
| 1980 | 64 Kb | 250 ns |
| 1983 | 256 Kb | 220 ns |
| 1986 | 1 Mb | 190 ns |
| 1989 | 4 Mb | 165 ns |
| 1992 | 16 Mb | 145 ns |
| 1995 | 64 Mb | 120 ns |

1980 to 1995: size 1000:1! Cycle time 2:1!

Who Cares About the Memory Hierarchy?

Processor-DRAM Memory Gap (latency)

[Figure: performance (axis from 1 to 1000) versus time (1980 to 2000) for CPU and DRAM.]

• µProc (CPU): 60%/yr. (2X/1.5 yr), “Moore’s Law”
• DRAM: 9%/yr. (2X/10 yrs)
• Processor-Memory Performance Gap: grows 50% / year

Impact on Performance

• Suppose a processor executes at
  – Clock Rate = 200 MHz (5 ns per cycle)
  – CPI = 1.1
  – 50% arith/logic, 30% ld/st, 20% control
• Suppose that 10% of memory operations get a 50 cycle miss penalty
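The ratios quoted on the Technology Trends and Processor-DRAM gap slides can be checked with a few lines of arithmetic. This is a minimal sketch: the variable names are made up for illustration, and the assumption that the gap growth is the ratio of the two annual rates is ours, not stated on the slides.

```python
# Endpoints of the DRAM table on the Technology Trends slide.
dram_size_kb = {1980: 64, 1995: 64 * 1024}   # 64 Kb -> 64 Mb
dram_cycle_ns = {1980: 250, 1995: 120}

capacity_ratio = dram_size_kb[1995] / dram_size_kb[1980]
speed_ratio = dram_cycle_ns[1980] / dram_cycle_ns[1995]

# Annual improvement rates quoted on the Processor-DRAM gap slide.
cpu_growth = 1.60    # 60% per year
dram_growth = 1.09   # 9% per year

# Assumption: the gap grows by the ratio of the two rates each year.
gap_growth = cpu_growth / dram_growth

print(f"capacity improved {capacity_ratio:.0f}:1")
print(f"cycle time improved {speed_ratio:.2f}:1")
print(f"gap grows about {100 * (gap_growth - 1):.0f}% per year")
```

Under that assumption the gap grows by roughly 47% per year, which is consistent with the slide's rounded figure of 50%.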

Impact on Performance (cont.)

$$
\begin{aligned}
\text{CPI} &= \text{ideal CPI} + \text{average stalls per instruction} \\
&= 1.1\ \text{(cyc)} + \bigl(0.30\ \text{(datamops/ins)} \times 0.10\ \text{(miss/datamop)} \times 50\ \text{(cycle/miss)}\bigr) \\
&= 1.1\ \text{cycle} + 1.5\ \text{cycle} = 2.6\ \text{cycle}
\end{aligned}
$$

• 58% of the time the processor is stalled waiting for memory! (1.5 of every 2.6 cycles)
• A 1% instruction miss rate would add an additional 0.5 cycles to the CPI!

The Goal: illusion of large, fast, cheap memory

• Fact: Large memories are slow, fast memories are small
• How do we create a memory that is large, cheap and fast (most of the time)?
  – Hierarchy
  – Parallelism

An Expanded View of the Memory System

[Figure: a processor (control and datapath) connected to a chain of memories. Moving away from the processor, speed goes from fastest to slowest, size from smallest to biggest, and cost from highest to lowest.]

Why hierarchy works

• The Principle of Locality:
  – Programs access a relatively small portion of the address space at any instant of time.

[Figure: probability of reference plotted over the address space, from 0 to 2^n - 1.]
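The CPI arithmetic from the Impact on Performance slides can be reproduced as a short sketch. The variable names are illustrative, and the instruction-miss line assumes one instruction fetch per instruction with the same 50 cycle penalty.

```python
# Parameters taken from the Impact on Performance slides.
ideal_cpi = 1.1
loads_stores_per_instr = 0.30   # 30% of instructions are ld/st
data_miss_rate = 0.10           # 10% of memory operations miss
miss_penalty_cycles = 50
cycle_time_ns = 5               # 200 MHz clock

# Average stall cycles per instruction caused by data misses.
data_stall_cpi = loads_stores_per_instr * data_miss_rate * miss_penalty_cycles
total_cpi = ideal_cpi + data_stall_cpi
stall_fraction = data_stall_cpi / total_cpi

# Assumption: every instruction is fetched once, so a 1% instruction
# miss rate costs 1.0 * 0.01 * 50 extra cycles per instruction.
instr_stall_cpi = 1.0 * 0.01 * miss_penalty_cycles

print(f"CPI with data misses: {total_cpi:.1f}")
print(f"time stalled on memory: {100 * stall_fraction:.0f}%")
print(f"extra CPI from 1% instruction misses: {instr_stall_cpi:.1f}")
print(f"average time per instruction: {total_cpi * cycle_time_ns:.0f} ns")
```

The stall fraction is 1.5 / 2.6, which rounds to the 58% quoted on the slide.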

Memory Hierarchy: How Does it Work?

• Temporal Locality (Locality in Time):
  => Keep most recently accessed data items closer to the processor
• Spatial Locality (Locality in Space):
  => Move blocks consisting of contiguous words to the upper levels

[Figure: the processor reads from and writes to the upper level memory, which holds Blk X; the upper level exchanges blocks with the lower level memory, which holds Blk Y.]

Memory Hierarchy: Terminology

• Hit: data appears in some block in the upper level (example: Block X)
  – Hit Rate: the fraction of memory accesses found in the upper level
  – Hit Time: time to access the upper level, which consists of RAM access time + time to determine hit/miss

Memory Hierarchy: Terminology (cont.)

• Miss: data needs to be retrieved from a block in the lower level (Block Y)
  – Miss Rate = 1 - (Hit Rate)
  – Miss Penalty: time to replace a block in the upper level + time to deliver the block to the processor
• Hit Time << Miss Penalty

Memory Hierarchy of a Modern Computer System

• By taking advantage of the principle of locality:
  – Present the user with as much memory as is available in the cheapest technology.
  – Provide access at the speed offered by the fastest technology.
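The hit and miss terms from the Terminology slides are commonly combined into an average memory access time. The formula below is the standard textbook one and is not stated on these slides. The function name and the 1 cycle hit time are assumptions for illustration; the miss rate and penalty reuse the earlier example.

```python
def average_access_time(hit_time, hit_rate, miss_penalty):
    """Average access time = hit time + miss rate * miss penalty.

    Assumes every access pays the hit time and a miss pays the
    penalty on top of it. All times are in the same unit.
    """
    miss_rate = 1.0 - hit_rate          # Miss Rate = 1 - (Hit Rate)
    return hit_time + miss_rate * miss_penalty


# Hypothetical 1 cycle hit time; 10% miss rate and 50 cycle penalty
# are taken from the Impact on Performance example.
amat_cycles = average_access_time(hit_time=1, hit_rate=0.90, miss_penalty=50)
print(f"average access time: {amat_cycles:.1f} cycles")
```

Because Hit Time << Miss Penalty, even a small miss rate dominates the result: here the misses contribute five of the six cycles.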

Memory Hierarchy of a Modern Computer System (cont.)

[Figure: the processor (control, datapath, registers, on-chip cache) followed by the second level cache, main memory, secondary storage and tertiary storage.]

| Level | Speed (ns) | Size (bytes) |
|---|---|---|
| Registers | 1s | 100s |
| On-Chip Cache, Second Level Cache (SRAM) | 10s | Ks |
| Main Memory (DRAM) | 100s | Ms |
| Secondary Storage (Disk) | 10,000,000s (10s ms) | Gs |
| Tertiary Storage (Disk) | 10,000,000,000s (10s sec) | Ts |

How is the hierarchy managed?

• Registers <-> Memory
  – by compiler (programmer?)
• cache <-> memory
  – by the hardware
• memory <-> disks
  – by the hardware and operating system (virtual memory)
  – by the programmer (files)

Memory Hierarchy Technology

• Random Access:
  – “Random” is good: access time is the same for all locations
  – DRAM: Dynamic Random Access Memory
    • High density, low power, cheap, slow
    • Dynamic: needs to be “refreshed” regularly
  – SRAM: Static Random Access Memory
    • Low density, high power, expensive, fast
    • Static: content will last “forever” (until power is lost)

Memory Hierarchy Technology (cont.)

• “Not-so-random” Access Technology:
  – Access time varies from location to location and from time to time
  – Examples: Disk, CDROM
• Sequential Access Technology: access time linear in location (e.g., Tape)

Summary

• Two Different Types of Locality:
  – Temporal Locality (Locality in Time): If an item is referenced, it will tend to be referenced again soon.
  – Spatial Locality (Locality in Space): If an item is referenced, items whose addresses are close by tend to be referenced soon.

Summary (cont.)

• By taking advantage of the principle of locality:
  – Present the user with as much memory as is available in the cheapest technology.
  – Provide access at the speed offered by the fastest technology.

Summary (cont.)

• DRAM is slow but cheap and dense:
  – Good choice for presenting the user with a BIG memory system
• SRAM is fast but expensive and not very dense:
  – Good choice for providing the user FAST access time.
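The two kinds of locality in the summary can be illustrated with a toy cache model. This is a minimal sketch, not a model of any real machine: the direct-mapped organization, the 64 blocks of 8 words, and the three access patterns are all assumptions chosen to make the effect visible.

```python
def hit_rate(addresses, num_blocks=64, block_words=8):
    """Simulate a direct-mapped cache and return the fraction of hits."""
    stored = [None] * num_blocks        # block number held by each cache line
    hits = 0
    for addr in addresses:
        block = addr // block_words     # which memory block holds this word
        index = block % num_blocks      # the one cache line it may occupy
        if stored[index] == block:
            hits += 1
        else:
            stored[index] = block       # miss: bring in the whole block
    return hits / len(addresses)


# Spatial locality: neighbouring words share a block, so 7 of 8 accesses hit.
sequential = list(range(4096))
# Temporal locality: a 256 word working set is reused 16 times.
looped = list(range(256)) * 16
# No locality: a stride equal to the cache size maps every access to line 0.
strided = [i * 512 for i in range(4096)]

for name, trace in [("sequential", sequential), ("looped", looped), ("strided", strided)]:
    print(f"{name:10s} hit rate = {hit_rate(trace):.3f}")
```

The sequential and looped traces hit most of the time, while the strided trace never hits, which is why the hierarchy only delivers the speed of the fastest level when programs exhibit locality.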
